What is Local III?

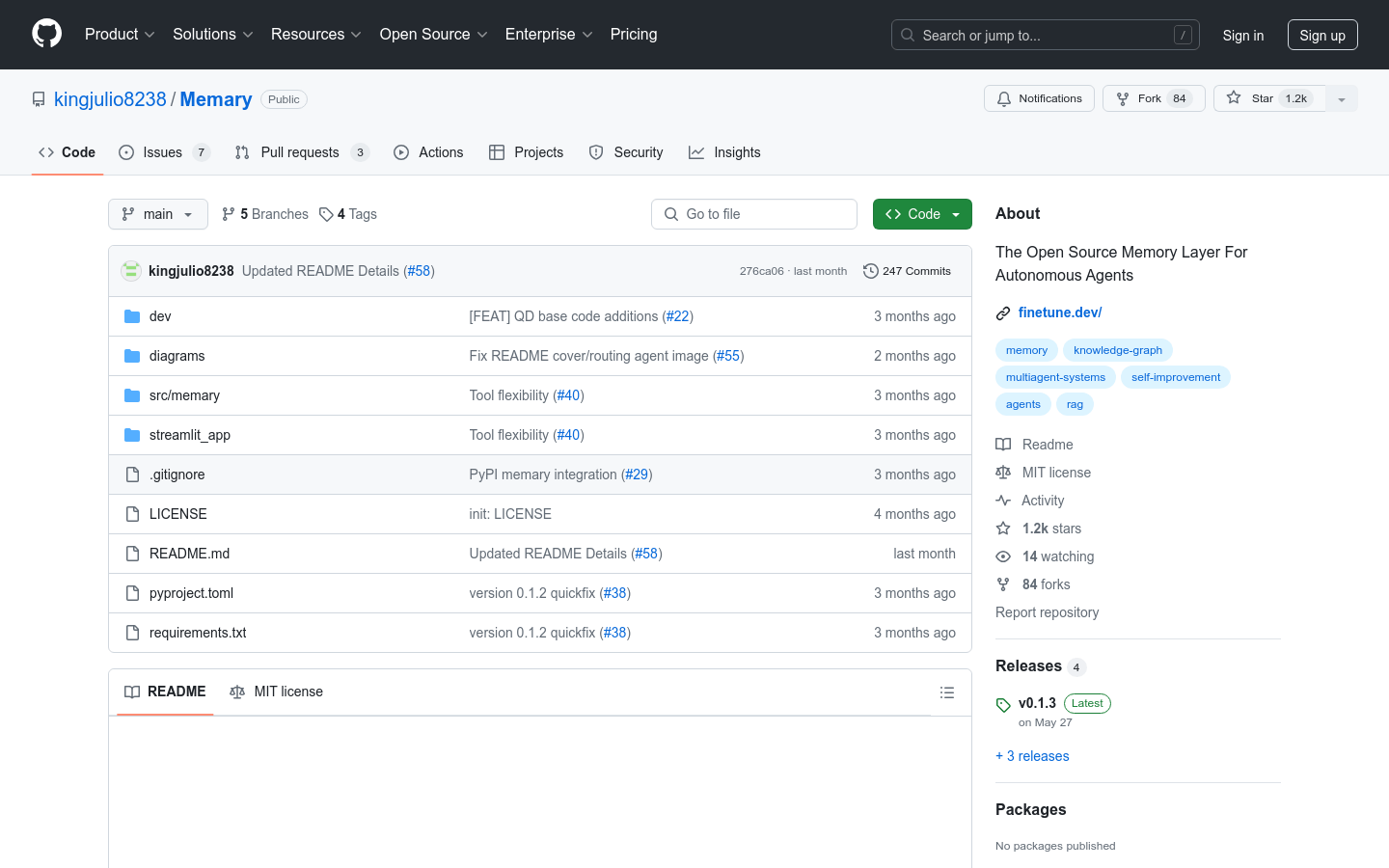

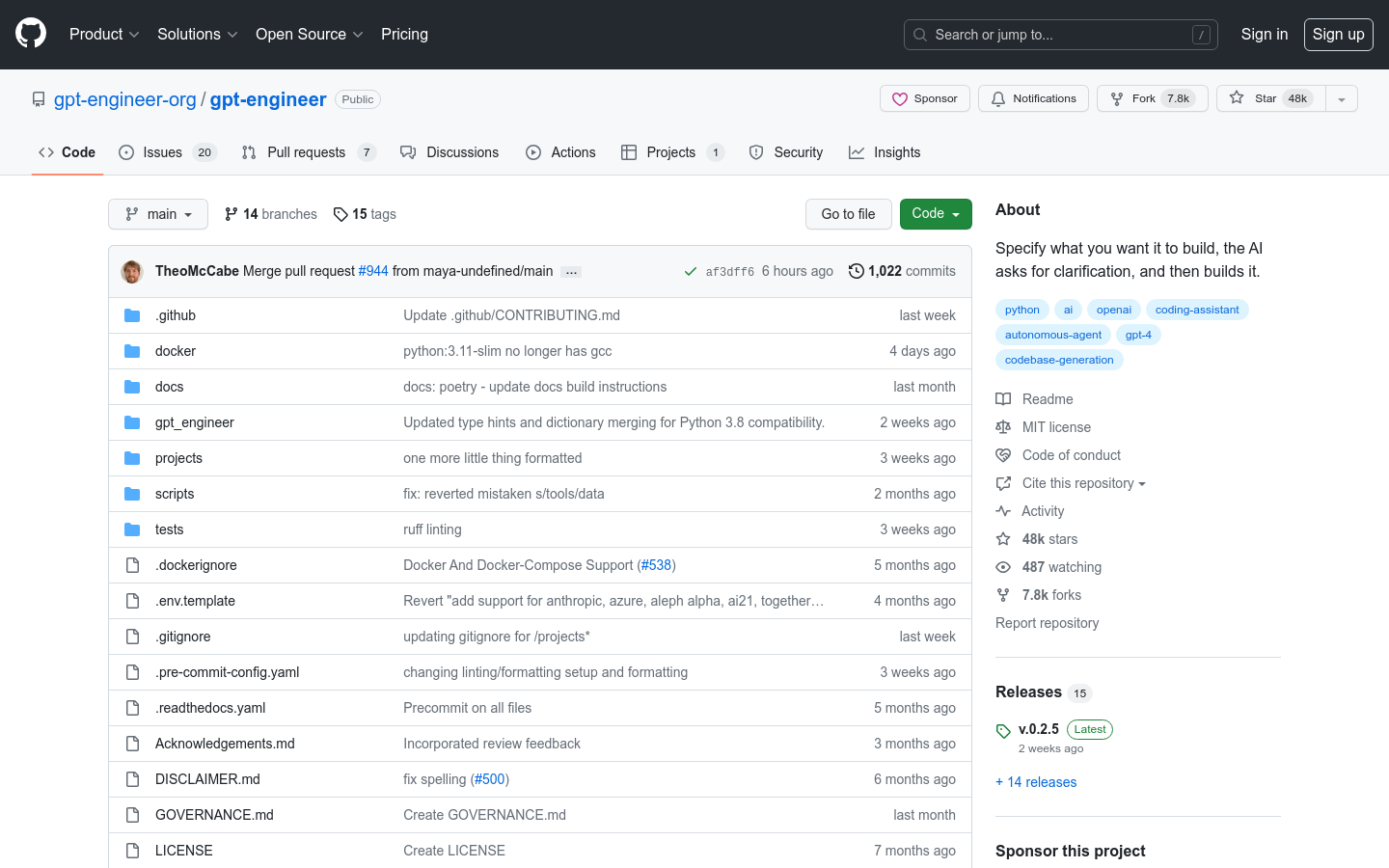

Local III is an update to Open Interpreter developed by more than 100 contributors worldwide. It introduces a user-friendly local model browser and integrates deeply with inference engines such as Ollama. It ships tuned configurations for popular open models such as Llama3, Moondream, and Codestral, along with settings that make offline code interpretation more reliable.

Local III also offers Model i, an optional free hosted model. Conversations with Model i help train an open-source language model for computer control.

Target Audience:

Local III is aimed at developers and technical professionals who want to run AI models on their own machines to interpret and execute code. It is especially useful for users who prioritize privacy or need to work without an internet connection.

Usage Scenarios:

Developers can use Local III to write and test code locally, without relying on online services.

Data scientists can analyze and process data using Local III in environments without internet access.

Educational institutions can use Local III to teach students how to work with AI technologies in a local setting.

Key Features:

Interactive setup for choosing an inference provider and model, and for downloading new models.

Model i, a free hosted option that offers a seamless experience while your conversations help train open-source language models.

Deep integration with Ollama, which simplifies the commands needed to configure models.

Optimized configuration files for leading local language models such as Codestral, Llama3, and Qwen.

Local vision support that converts images into descriptions generated by Moondream and extracts their text with OCR.

An experimental local OS mode that lets Open Interpreter control the mouse and keyboard and view the screen.
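For readers curious about what the Ollama integration talks to underneath, here is a minimal sketch that calls a locally served Ollama model over its REST API using only the Python standard library. It is not Local III's own code. It assumes Ollama is running at its default address, `http://localhost:11434`, and that a model named `llama3` has already been pulled; the helper names `build_generate_request` and `generate` are illustrative.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address and port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the request and return the generated text (requires a running Ollama server)."""
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama returns one JSON object whose "response" field holds the text.
    return body["response"]
```

With the server running, `generate("llama3", "Write a Python one-liner that reverses a string.")` returns the model's reply as a string. Setting `"stream": False` gives up incremental output in exchange for a single, simpler JSON response; nothing in this exchange leaves the local machine.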

Getting Started:

1. Visit the Local III website and download the plugin.

2. Install and launch the plugin, then choose your preferred inference provider and model.

3. Use the interactive setup to download any new models you need.

4. Apply the recommended settings for your chosen model to optimize its performance.

5. Use the local model browser (Local Explorer) to manage and run models on your machine.

6. Enable local vision and OS mode when you need more advanced interactions.

7. Optionally, chat with Model i to help train open-source AI models.
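Steps 2 and 3 can also be checked by hand if you use Ollama as the inference provider. The following minimal sketch, again assuming a local Ollama server on its default port, compares a list of models you want against what Ollama reports from its `/api/tags` endpoint. The helper names and the model names in the example are hypothetical, not part of Local III.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address and port.
OLLAMA_URL = "http://localhost:11434"


def installed_models(tags_response: dict) -> set[str]:
    """Extract model names from an Ollama /api/tags response body."""
    names = set()
    for entry in tags_response.get("models", []):
        name = entry.get("name", "")
        names.add(name)
        # Ollama lists untagged pulls as "<name>:latest"; also record the bare name.
        if name.endswith(":latest"):
            names.add(name[: -len(":latest")])
    return names


def missing_models(required: list[str], tags_response: dict) -> list[str]:
    """Return the required models that are not installed yet, in their original order."""
    have = installed_models(tags_response)
    return [m for m in required if m not in have]


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Query the running Ollama server for its locally installed models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `missing_models(["llama3", "moondream"], fetch_tags())` lists whatever still needs downloading; each name it returns can be fetched with `ollama pull <name>` before you select it in the setup.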