What is Lumina-mGPT?

Lumina-mGPT is a family of multi-modal autoregressive models for vision and language tasks, and it is particularly strong at generating realistic images from text descriptions. It is built on the xllmx module, which supports LLM-centric multi-modal tasks, so the codebase suits both in-depth exploration and getting up to speed quickly.

Who Is It For?

Lumina-mGPT is aimed at researchers and developers working in deep learning and artificial intelligence who need a model for image generation, image understanding, or other multi-modal tasks.

Example Usage Scenarios

Researchers can use Lumina-mGPT to generate specific scene images.

Developers can apply the model to tasks such as style transfer between images.

Educators can utilize the model to teach students about AI image processing fundamentals.

Key Features

Text-to-image generation: Users provide text descriptions and get corresponding images.

Image-to-image tasks: The model supports multiple downstream tasks, allowing easy switching between them.

Flexible input formats: The model imposes minimal constraints on input formats, which suits in-depth exploration.

Simple inference code: Provides basic Lumina-mGPT inference code examples.

Image understanding: The model can describe the content of input images in detail.

Multi-modal task support: The model supports various multi-modal tasks including depth estimation.

Getting Started Tutorial

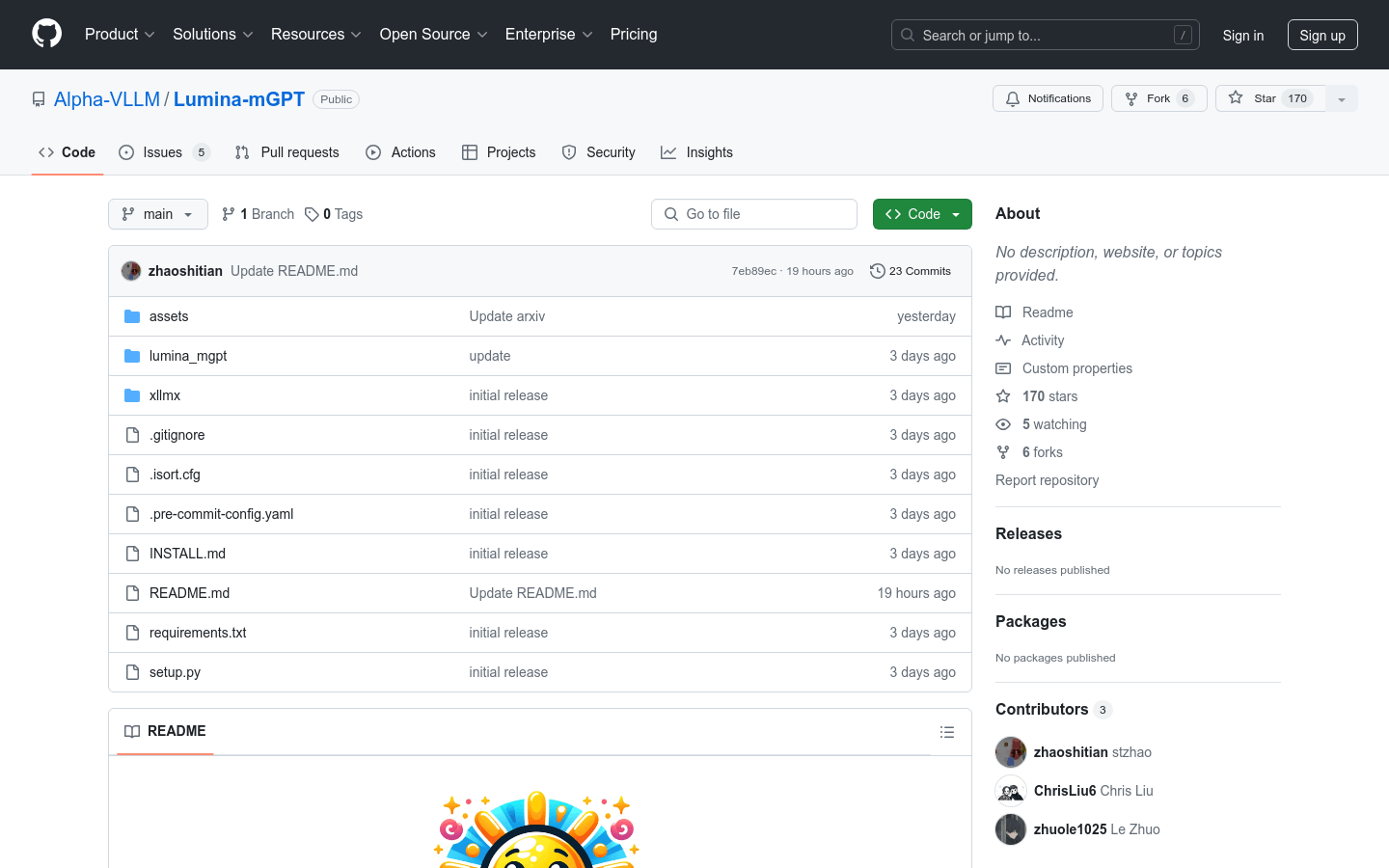

1. Visit the Lumina-mGPT GitHub page and clone or download the code.

2. Ensure you have all necessary dependencies installed, such as the xllmx module.

3. Follow the instructions in INSTALL.md to install Lumina-mGPT.

4. Run the Gradio demo or use the provided simple inference code to test the model.

5. Adjust model parameters as needed, such as target size and temperature.

6. Use the model for image generation, image understanding, or other multi-modal tasks.
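
Step 5 mentions temperature as an adjustable parameter. The sketch below illustrates what that setting typically does in an autoregressive model, assuming it is the usual sampling temperature that divides the logits before the softmax. It is not taken from the Lumina-mGPT code; the function names softmax_with_temperature and sample_token are hypothetical and use only the Python standard library.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities after dividing by the temperature.

    Hypothetical helper for illustration; not part of Lumina-mGPT.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature=1.0, rng=None):
    """Draw one token index from the temperature-scaled distribution."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Toy scores for three candidate tokens (made-up values).
    logits = [2.0, 1.0, 0.1]
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

Under this assumption, a lower temperature concentrates probability on the highest-scoring token and gives more predictable output, while a higher temperature flattens the distribution and gives more varied output.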