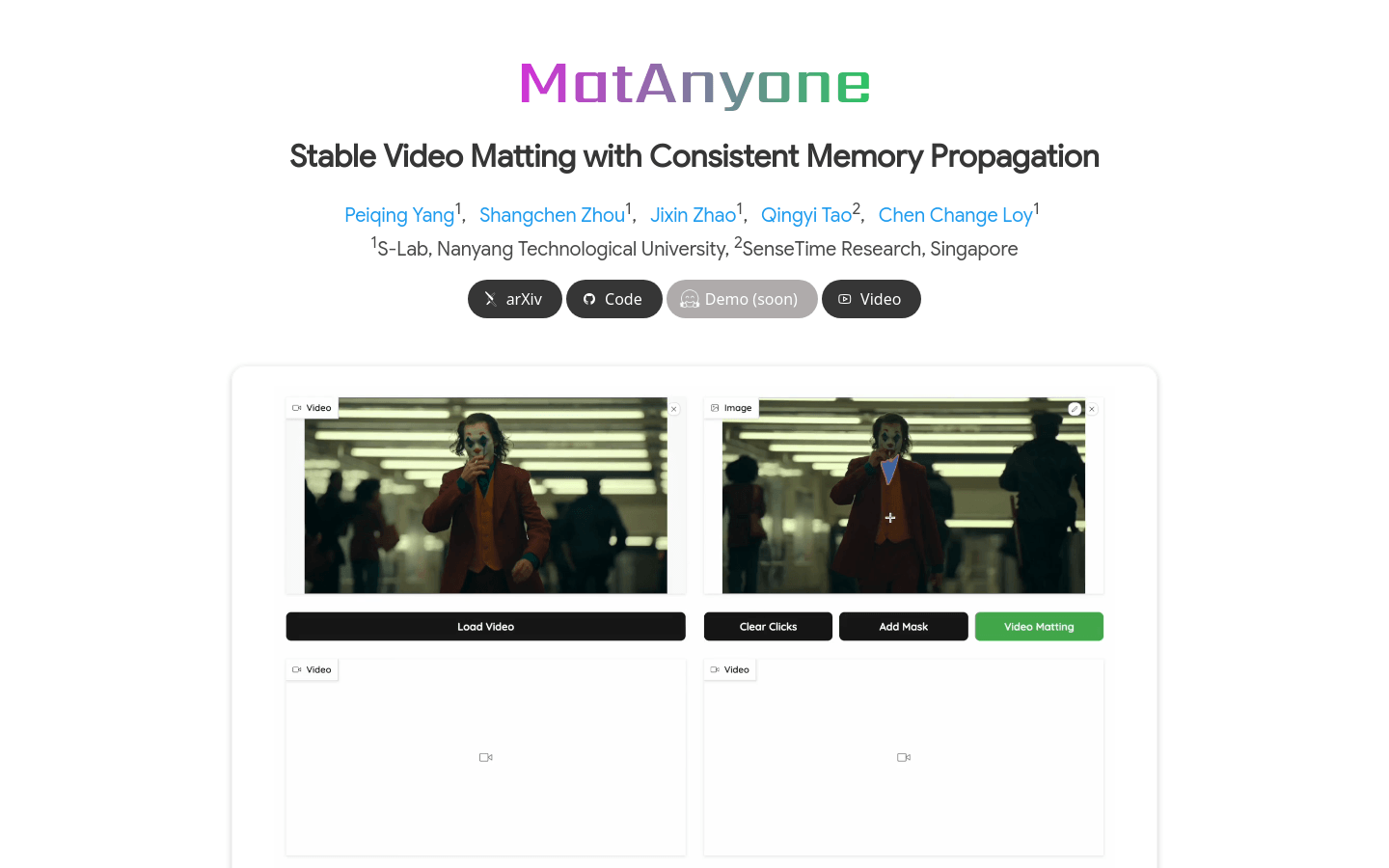

What is MatAnyone?

MatAnyone is a video matting framework: it extracts a target object and its alpha matte from a video so the object can be composited onto new backgrounds. It achieves stable results through consistent memory propagation. A region-adaptive memory fusion module, combined with a target-specific segmentation map, keeps core regions semantically stable and preserves fine detail against complex backgrounds. This makes it well suited to precise foreground extraction in video editing, special effects creation, and content production.

Key Benefits of MatAnyone:

Maintains semantic stability in core regions.

Provides fine detail handling at object boundaries.

Developed by researchers from Nanyang Technological University and SenseTime.

Target Users:

Video editors

Special effects artists

Content creators

Businesses needing high-quality video compositing solutions

MatAnyone is suited to scenarios that require accurate foreground extraction, such as film post-production, advertising video creation, and game video development.

Usage Scenarios:

In film post-production, for quick background replacement.

In advertising videos, separating products from their original backgrounds for use in different scenes.

In game videos, separating game characters from their game environments.

Features:

Supports target-specific video matting, where users specify the target object in the first frame.

Ensures semantic consistency across the video sequence using consistent memory propagation.

Uses region-adaptive memory fusion to preserve fine details at object boundaries.

Trained on large-scale segmentation data to enhance semantic stability.

Compatible with various video types including live-action videos, AI-generated videos, and game videos.

Produces high-quality alpha channel output for easy compositing.

Supports instance-based and interactive video matting.

Supports iterative refinement during inference, without retraining, to improve detail quality.
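The idea behind region-adaptive memory fusion can be illustrated with a small NumPy sketch. This is not the authors' implementation. It assumes a per-pixel "change probability" map is available (near 1 at moving boundaries, near 0 in stable core regions) and uses it to blend the memory carried over from the previous frame with the readout for the current frame. The names `fuse_memory` and `change_prob` are hypothetical and chosen only for illustration.

```python
import numpy as np

def fuse_memory(prev_readout, curr_readout, change_prob):
    """Blend two feature maps per pixel (illustrative only).

    prev_readout, curr_readout: arrays of shape (H, W, C).
    change_prob: array of shape (H, W) with values in [0, 1].
      ~0 -> stable core region, keep the previous frame's memory.
      ~1 -> changing boundary region, rely on the current readout.
    """
    # Add a channel axis so the weight broadcasts over C.
    w = np.clip(change_prob, 0.0, 1.0)[..., None]
    return w * curr_readout + (1.0 - w) * prev_readout

# Toy 2x2 example with 3 feature channels.
prev = np.zeros((2, 2, 3), dtype=np.float32)
curr = np.ones((2, 2, 3), dtype=np.float32)
prob = np.array([[0.0, 1.0], [0.5, 0.25]], dtype=np.float32)
fused = fuse_memory(prev, curr, prob)
```

In this toy case, the pixel with probability 0 keeps the previous values, the pixel with probability 1 takes the current values, and the others fall in between.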

Tutorial:

1. Visit the MatAnyone project page and download the relevant code and models.

2. Prepare your video footage and provide a segmentation mask of the target object in the first frame.

3. Run the MatAnyone model on the video; it propagates memory across frames and performs the matting automatically.

4. Adjust model parameters if needed to optimize the compositing results.

5. Export the alpha channel and composite the extracted foreground onto a new background.
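The last step is standard alpha compositing and does not depend on MatAnyone itself. A minimal sketch with NumPy follows, assuming the frames are already loaded as 8-bit RGB arrays of the same size and the alpha matte is a float array in [0, 1]. The function name `composite` is hypothetical, and how frames and mattes are read from disk is left out.

```python
import numpy as np

def composite(foreground, alpha, background):
    """Blend one frame: out = alpha * fg + (1 - alpha) * bg.

    foreground, background: uint8 arrays of shape (H, W, 3).
    alpha: float array of shape (H, W) or (H, W, 1), values in [0, 1].
    """
    a = np.clip(alpha.astype(np.float32), 0.0, 1.0)
    if a.ndim == 2:
        a = a[..., None]  # broadcast the matte over the colour channels
    fg = foreground.astype(np.float32)
    bg = background.astype(np.float32)
    out = a * fg + (1.0 - a) * bg
    return np.clip(out, 0, 255).round().astype(np.uint8)

# Toy 1x3 frame: opaque, half-transparent, and fully transparent pixels.
fg = np.full((1, 3, 3), 200, dtype=np.uint8)
bg = np.full((1, 3, 3), 100, dtype=np.uint8)
alpha = np.array([[1.0, 0.5, 0.0]], dtype=np.float32)
frame = composite(fg, alpha, bg)
```

Applying the same function to every frame, with that frame's matte, gives the final video on the new background.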