What is MiniCPM-V 2.6?

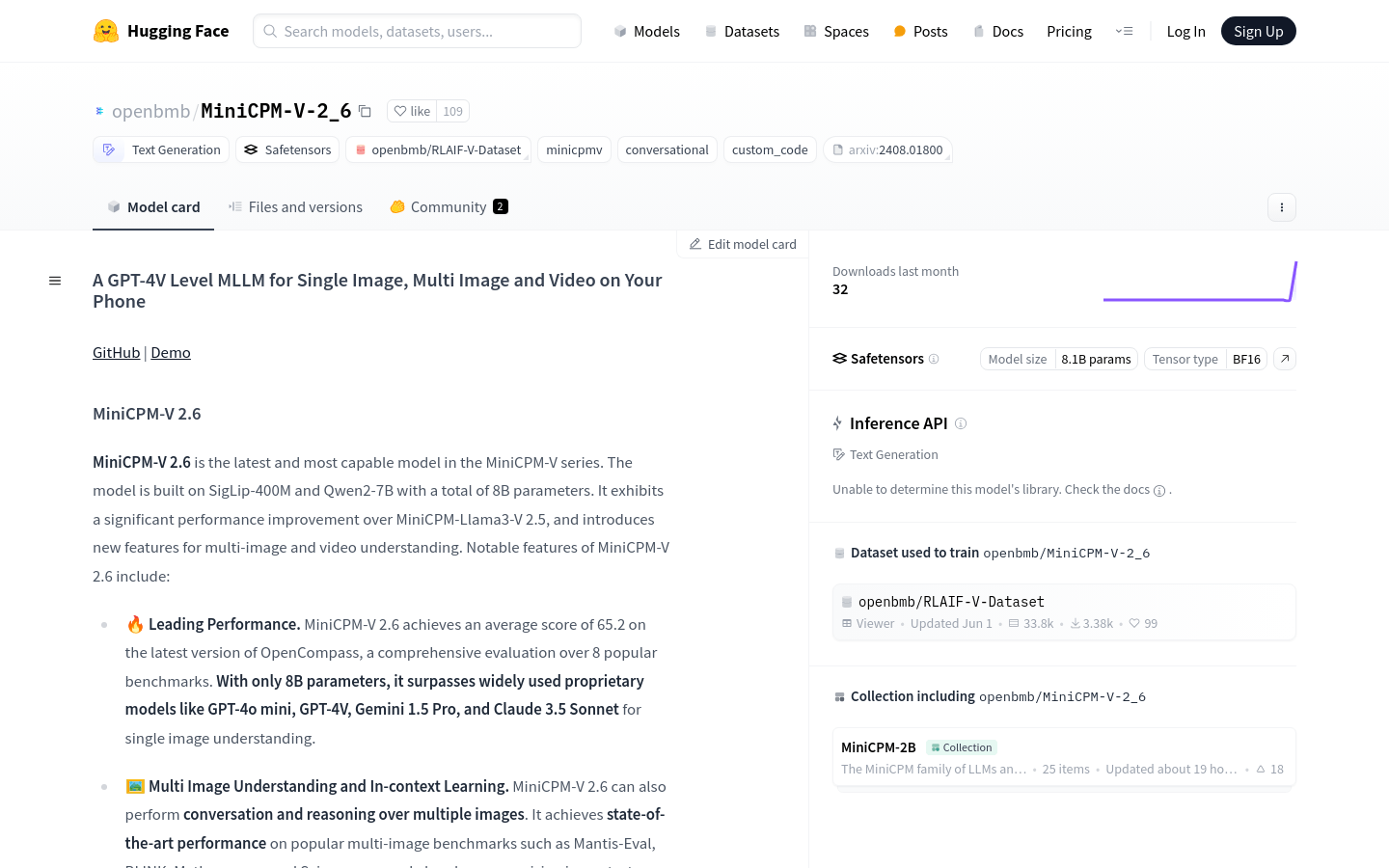

MiniCPM-V 2.6 is a multimodal large language model (MLLM) with 8 billion parameters that handles single-image, multi-image, and video understanding. It scores highly on the OpenCompass evaluation suite, where its developers report that it outperforms several widely used proprietary models on single-image understanding. The model also offers robust OCR, supports multiple languages, and is efficient enough for real-time video understanding on end-side devices such as an iPad.

Who Should Use MiniCPM-V 2.6?

Researchers and developers looking for high-performance solutions in image and video understanding, multi-language processing, and OCR will find MiniCPM-V 2.6 valuable.

Example Scenarios:

Researchers can use MiniCPM-V 2.6 for image recognition and classification tasks.

Developers can leverage the model for real-time video captioning and content analysis.

Enterprises can integrate the model into their products to enhance image and video processing functionalities.

Key Features:

Achieves leading scores on popular benchmarks such as OpenCompass.

Supports multi-image understanding and in-context learning.

Can process video inputs, engage in dialogues, and provide detailed captions.

Offers strong OCR and can process images of any aspect ratio up to about 1.8 million pixels (for example, 1344x1344).

Uses RLAIF-V and VisCPM techniques for reliable behavior and low hallucination rates.

Runs efficiently by encoding each image into fewer visual tokens than most models (640 tokens for a 1.8-million-pixel image), which improves inference speed and reduces power consumption.
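To make the 1.8-million-pixel figure concrete, here is a minimal sketch that checks whether an image fits that budget and, if not, computes a smaller size with the same aspect ratio. The function name fit_to_pixel_budget and the constant MAX_PIXELS are hypothetical, and the budget is assumed to be 1344x1344. The model's own preprocessing is expected to handle sizing, so this is only useful for estimating input sizes ahead of time.

```python
import math

# Assumption: "about 1.8 million pixels" is taken as 1344 x 1344.
MAX_PIXELS = 1344 * 1344  # 1,806,336


def fit_to_pixel_budget(width, height, max_pixels=MAX_PIXELS):
    """Return (width, height) scaled down to fit max_pixels, keeping the aspect ratio.

    Images already within the budget are returned unchanged.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    pixels = width * height
    if pixels <= max_pixels:
        return width, height
    # Both sides shrink by the same factor, so the area shrinks by scale**2.
    scale = math.sqrt(max_pixels / pixels)
    new_w = max(1, math.floor(width * scale))
    new_h = max(1, math.floor(height * scale))
    return new_w, new_h
```

For example, a 4000x3000 photo is well over the budget, so the function returns a smaller size with roughly the same 4:3 ratio, while a 1344x1344 image is returned as-is.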

How to Use MiniCPM-V 2.6:

1. Load the MiniCPM-V 2.6 model using the Hugging Face Transformers library.

2. Prepare input data, which can be a single image, multiple images, or a video file.

3. Pass questions or instructions to the model's chat function to receive responses.

4. For video processing, sample frames with the encode_video helper from the model's example code, then pass the frames to the chat function as a list of images.

5. Leverage the model’s multi-language capabilities for analyzing images or videos in different languages.

6. Fine-tune the model as needed to fit specific applications or tasks.
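
The steps above can be sketched roughly as follows. This is a minimal illustration, not the official example: the repository id openbmb/MiniCPM-V-2_6 and the chat keyword arguments are assumed from the public model card, and the helper names build_msgs, sample_frame_indices, load_model, and ask are hypothetical. The frame-sampling helper only mirrors the idea behind encode_video (about one frame per second, capped at a maximum count) and does not decode video itself.

```python
MODEL_ID = "openbmb/MiniCPM-V-2_6"  # assumed Hugging Face repo id


def build_msgs(question, images=()):
    """Build a single-turn message list: images (or video frames) first, then the text."""
    return [{"role": "user", "content": [*images, question]}]


def sample_frame_indices(num_frames, fps, max_frames=64):
    """Pick about one frame per second, then thin uniformly down to max_frames."""
    step = max(1, round(fps))
    idxs = list(range(0, num_frames, step))
    if len(idxs) > max_frames:
        gap = len(idxs) / max_frames
        idxs = [idxs[int(i * gap + gap / 2)] for i in range(max_frames)]
    return idxs


def load_model(device="cuda"):
    """Load the model and tokenizer (step 1).

    Heavy imports are local so the helpers above work without torch installed.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    model = AutoModel.from_pretrained(
        MODEL_ID, trust_remote_code=True, torch_dtype=torch.bfloat16
    )
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    return model.eval().to(device), tokenizer


def ask(model, tokenizer, question, images=()):
    """Send one question plus optional images to the model (step 3)."""
    # chat() comes from the repository's remote code, not from Transformers itself;
    # these keyword arguments follow the model card and may differ between revisions.
    return model.chat(image=None, msgs=build_msgs(question, images), tokenizer=tokenizer)


# Example usage (downloads the weights; "example.jpg" is a hypothetical local file):
#   from PIL import Image
#   model, tokenizer = load_model()
#   image = Image.open("example.jpg").convert("RGB")
#   print(ask(model, tokenizer, "What is in this image?", [image]))
```

Passing several images in the list covers the multi-image case, and a list of sampled video frames can be passed the same way. Keeping the message construction separate from the model call makes it easy to check inputs before loading the weights.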