What is StackBlitz?

StackBlitz is a web-based Integrated Development Environment (IDE) tailored for the JavaScript ecosystem. It uses WebContainers, a technology built on WebAssembly, to boot Node.js environments directly in your browser. Because the code runs inside the browser sandbox rather than on a remote server, environments start quickly and stay isolated from the rest of your machine.

---

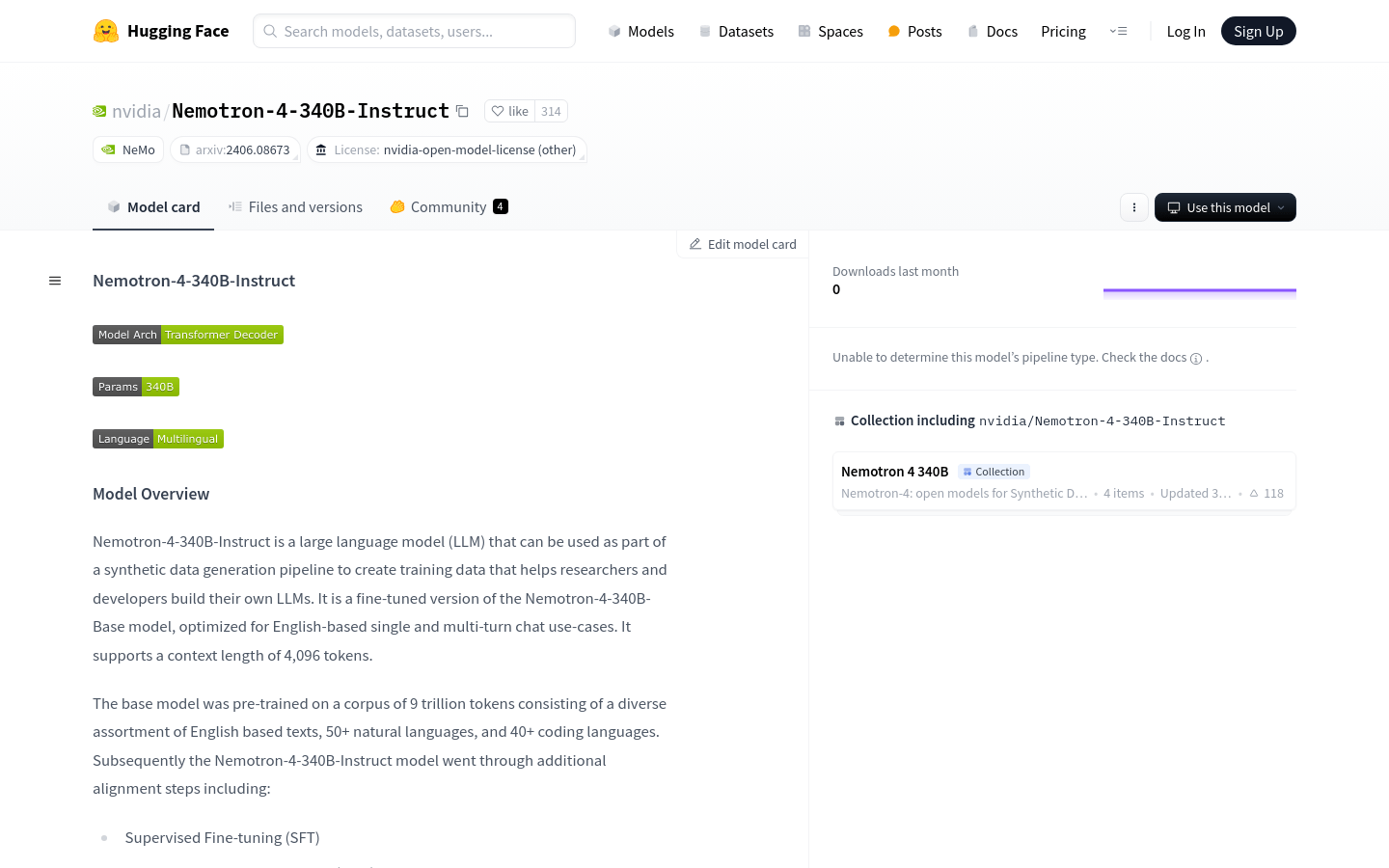

Nemotron-4-340B-Instruct: An Advanced Language Model

Nemotron-4-340B-Instruct is a large language model developed by NVIDIA and optimized for English single-turn and multi-turn dialogue. The model supports a context length of up to 4,096 tokens and has undergone additional alignment steps: supervised fine-tuning (SFT), direct preference optimization (DPO), and reward-aware preference optimization (RPO).

The alignment data combines approximately 20K human-annotated examples with synthetic data generated through a dedicated pipeline; the synthetic portion makes up more than 98% of the total. This approach is intended to improve the model's performance in areas such as human dialogue preferences, mathematical reasoning, coding, and instruction-following.

Who Can Use It?

Developers and enterprises looking to build or customize large language models can benefit from Nemotron-4-340B-Instruct. It is particularly useful for those working on AI applications in English conversations, mathematical problem-solving, and programming guidance.

Example Usage Scenarios

Generating Training Data: Helps developers train customized conversational systems.

Math Problem Solving: Provides accurate logical reasoning and solution generation.

Programming Assistance: Aids programmers in understanding code logic, offering guidance and code generation.

Key Features

Supports a context length of up to 4,096 tokens, enough for multi-turn conversations and moderately long prompts.

Enhanced with SFT, DPO, and RPO, improving dialogue and instruction-following capabilities.

Generates high-quality synthetic data, assisting developers in building their own LLMs.

Utilizes Grouped-Query Attention (GQA) and Rotary Position Embeddings (RoPE).

Compatible with NeMo Framework's custom tools, including parameter-efficient fine-tuning and model alignment.

Reports strong results on several benchmarks, including MT-Bench, IFEval, and MMLU.
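
To make the two architectural terms in the feature list more concrete, here is a small illustrative sketch of Rotary Position Embeddings and Grouped-Query Attention in plain Python. It shows the generic textbook formulation, not Nemotron's actual implementation: the frequency base of 10000, the pairing of adjacent dimensions, the head counts, and the function names rope, dot, and kv_head_for are all assumptions chosen for illustration.

```python
import math


def rope(vec, position, base=10000.0):
    """Apply a rotary position embedding to one query or key vector.

    Each adjacent pair (vec[2i], vec[2i+1]) is rotated by the angle
    position * base ** (-2i / d). The base and the pairing convention
    are assumptions; real implementations differ in both.
    """
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("vector dimension must be even")
    out = []
    for i in range(d // 2):
        theta = position * base ** (-2.0 * i / d)
        x, y = vec[2 * i], vec[2 * i + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out


def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))


def kv_head_for(query_head, n_query_heads, n_kv_heads):
    """Grouped-query attention: map a query head to the KV head it shares.

    Consecutive query heads are grouped, and every head in a group reads
    the same key/value head. The head counts are hypothetical inputs.
    """
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be a multiple of n_kv_heads")
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


# Illustrative vectors only; they carry no meaning beyond the demonstration.
q = [0.3, -1.2, 0.7, 0.5]
k = [1.1, 0.4, -0.6, 0.9]

# The score depends only on the relative offset (2 in both cases).
score_near = dot(rope(q, 5), rope(k, 3))
score_far = dot(rope(q, 12), rope(k, 10))

# With 8 query heads and 2 KV heads, each KV head serves 4 query heads.
head_map = [kv_head_for(h, 8, 2) for h in range(8)]
```

The two scores agree up to floating-point error because rotating the query by position m and the key by position n leaves a dot product that depends only on m - n, which is the property that makes RoPE a relative position encoding. The head map shows why GQA reduces key/value cache size: several query heads share one set of keys and values.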

Using the Model

1. Use the NeMo Framework to create a Python script for interacting with the deployed model.

2. Create a Bash script to start the inference server.

3. Distribute the model across multiple nodes using the Slurm job scheduler and link it with the inference server.

4. Define a text generation function in your Python script, setting request headers and data structures.

5. Call the text generation function with prompts and generation parameters to get model responses.

6. Adjust generation parameters such as temperature, top-k, and top-p to control the style and diversity of the generated text.

7. Optimize the model output by adjusting system prompts to achieve better dialogue results.
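
The client side of steps 4 through 7 can be sketched as follows. This is a minimal sketch under stated assumptions, not NVIDIA's reference script: it assumes the inference server is already running and accepts HTTP PUT requests at a /generate endpoint, that the request fields are named as shown (sentences, tokens_to_generate, temperature, top_k, top_p, add_BOS, end_strings), and that the chat template uses the <extra_id_0> and <extra_id_1> markers. The host, port, and helper names (build_payload, build_request, text_generation) are hypothetical. Verify each of these against the model card for your deployment.

```python
import json
import urllib.request

# Assumed chat template: a system turn, a user turn, then an open
# assistant turn for the model to complete.
PROMPT_TEMPLATE = (
    "<extra_id_0>System\n{system}\n"
    "<extra_id_1>User\n{prompt}\n"
    "<extra_id_1>Assistant\n"
)


def build_payload(prompt, system="", max_new_tokens=256,
                  temperature=1.0, top_k=1, top_p=1.0):
    """Build the request body (step 4). Field names are assumptions."""
    return {
        "sentences": [PROMPT_TEMPLATE.format(system=system, prompt=prompt)],
        "tokens_to_generate": max_new_tokens,
        "temperature": temperature,  # higher values give more varied text
        "top_k": top_k,              # sample from the k most likely tokens
        "top_p": top_p,              # nucleus sampling threshold
        "add_BOS": False,
        "end_strings": ["<extra_id_1>"],  # stop at the next turn marker
    }


def build_request(payload, host="localhost", port=1424):
    """Wrap the payload in an HTTP PUT request with a JSON header."""
    return urllib.request.Request(
        url=f"http://{host}:{port}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def text_generation(payload, host="localhost", port=1424, timeout=300):
    """Send the request and return the parsed JSON response (step 5)."""
    request = build_request(payload, host=host, port=port)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a server running, a call might look like text_generation(build_payload("Write a haiku about GPUs.", temperature=0.7, top_k=40, top_p=0.9)). Passing a non-empty system argument to build_payload is one way to experiment with system prompts as described in step 7. The shape of the returned JSON depends on the server, so inspect it before indexing into it.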