One Shot One Talk

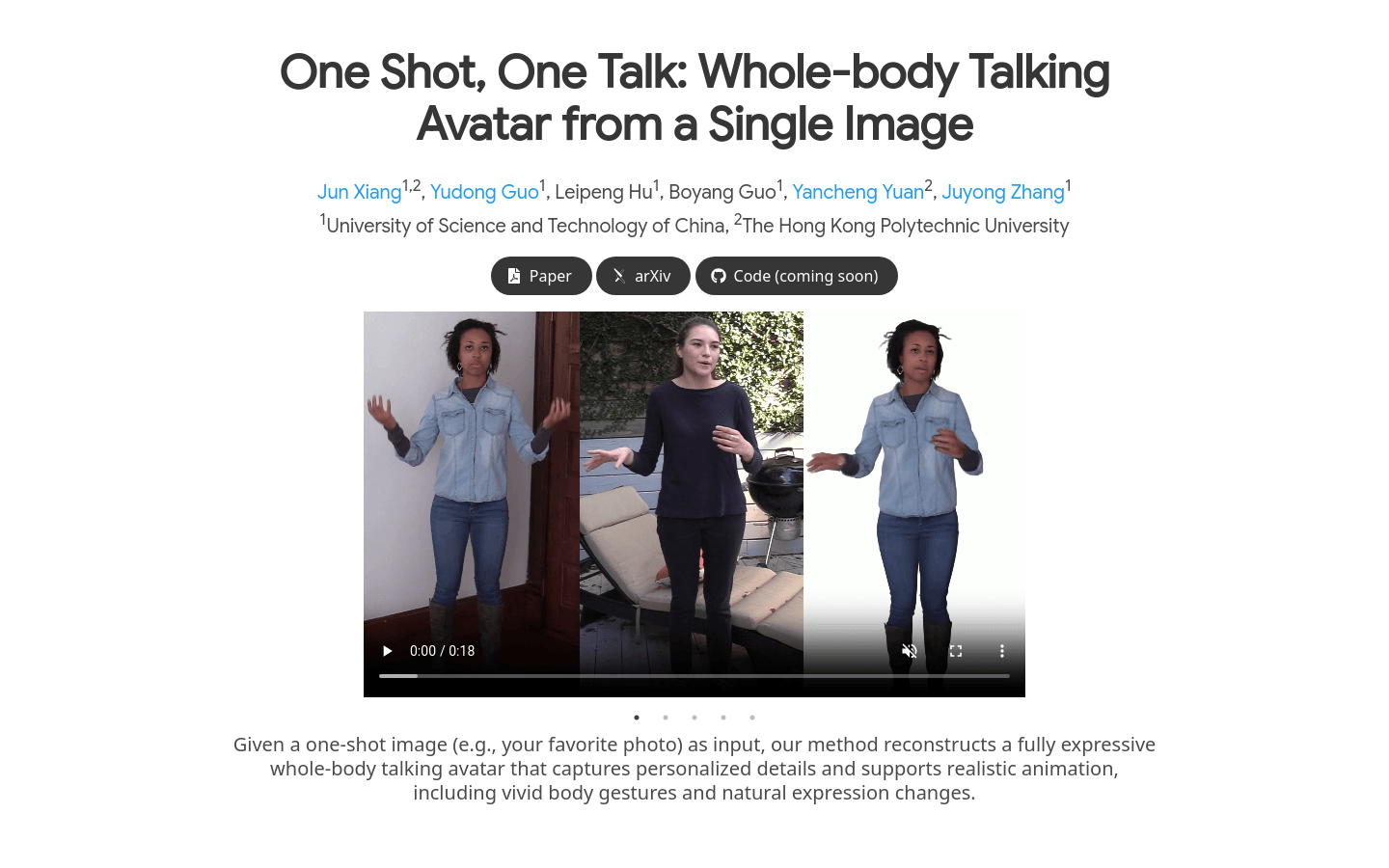

One Shot One Talk is a deep learning-based technique for reconstructing full-body, dynamic talking avatars from a single image. The resulting avatars preserve personalized details and can be animated realistically, including body movements and facial expressions. Because only one image is required, the technique substantially lowers the barrier to creating realistic, animatable virtual characters. Developed by researchers from the University of Science and Technology of China and The Hong Kong Polytechnic University, it combines pre-trained image-to-video diffusion models, which supply imperfect pseudo-labels, with a hybrid avatar representation that couples 3D Gaussian Splatting (3DGS) with a mesh. Key regularization techniques reduce the inconsistencies caused by these imperfect labels.
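To give a concrete feel for what a "3DGS-mesh hybrid" can mean, the sketch below shows one common way to tie Gaussians to a mesh: each Gaussian is bound to a triangle through barycentric coordinates plus a signed offset along the triangle normal, so the Gaussians follow the mesh as it deforms. This is a generic illustration under that assumption, not the project's actual implementation; the function name `gaussian_centers_on_mesh` and its parameters are hypothetical.

```python
import numpy as np

def gaussian_centers_on_mesh(vertices, faces, face_ids, barycentric, normal_offset):
    """Hypothetical helper: compute Gaussian centers bound to a triangle mesh.

    vertices:      (V, 3) float array of (posed) mesh vertex positions
    faces:         (F, 3) int array of vertex indices per triangle
    face_ids:      (N,) int array, the triangle each Gaussian is attached to
    barycentric:   (N, 3) barycentric weights per Gaussian (rows sum to 1)
    normal_offset: (N,) signed distance of each Gaussian along its triangle normal
    """
    vertices = np.asarray(vertices, dtype=float)
    tri = vertices[faces[face_ids]]                        # (N, 3, 3) triangle corners
    on_surface = np.einsum("nk,nkd->nd", barycentric, tri)  # point on each triangle
    normals = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    normals = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    # Push each Gaussian off the surface along the unit normal.
    return on_surface + normal_offset[:, None] * normals
```

Because the centers are recomputed from the current vertex positions, animating the mesh (for example, driving it with body and face poses) moves the attached Gaussians with it, while the Gaussians themselves can capture appearance detail the mesh alone cannot.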

Target Audience

Professionals and enthusiasts in virtual reality, augmented reality, game development, social media, and entertainment. The technology offers a fast, efficient way to create personalized avatars for virtual hosts, virtual customer service agents, game character design, and more.

Use Cases

Game developers can quickly generate dynamic game character models for trailers.

Social media platforms can provide users with personalized virtual avatars to enhance user interaction.

Film production teams can create realistic, animatable character models for visual effects.

Product Features

Single image input

Realistic animation of body movements and facial expressions

Personalized detail capture

Dynamic modeling for natural movements

Pseudo-label generation using pre-trained models

3DGS-mesh hybrid avatar representation for realism

Key regularization techniques for improved avatar quality

Cross-identity motion retargeting
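Cross-identity motion retargeting can be pictured as driving one person's avatar with another person's motion. A minimal sketch, assuming both identities share the same joint hierarchy (as with a shared parametric body model), is to copy the source's per-joint local rotations and run forward kinematics with the target's own bone offsets, so the motion transfers while the target keeps its proportions. This is a generic rotation-transfer illustration, not the project's retargeting pipeline; `forward_kinematics` and its arguments are hypothetical.

```python
import numpy as np

def forward_kinematics(parents, rest_offsets, local_rotations):
    """Hypothetical helper: joint positions from local rotations.

    parents:         list of parent indices, parents[j] < j, -1 for the root
    rest_offsets:    (J, 3) offset of each joint from its parent in the
                     target's rest pose (the root entry is its position)
    local_rotations: (J, 3, 3) rotation of each joint relative to its parent,
                     taken from the source motion
    """
    num_joints = len(parents)
    global_rot = np.zeros((num_joints, 3, 3))
    positions = np.zeros((num_joints, 3))
    for j in range(num_joints):
        p = parents[j]
        if p < 0:
            global_rot[j] = local_rotations[j]
            positions[j] = rest_offsets[j]
        else:
            # A joint's rotation accumulates down the chain...
            global_rot[j] = global_rot[p] @ local_rotations[j]
            # ...and its parent's global rotation orients the bone to this joint.
            positions[j] = positions[p] + global_rot[p] @ rest_offsets[j]
    return positions
```

For example, rotating the root of a two-joint chain by 90 degrees about the z-axis places the child at `(-2, 0, 0)` for a target whose bone has length 2, and at `(-1, 0, 0)` for a source whose bone has length 1: the same motion, applied to different body proportions.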

How to Use

1 Access the product page and download the code.

2 Prepare a single image of the person as input.

3 Configure the environment and parameters according to the documentation.

4 Run the code with your image to generate a dynamic avatar.

5 Adjust parameters such as body motion and facial expression.

6 Save and use the avatar.