What is OuteTTS-0.2-500M?

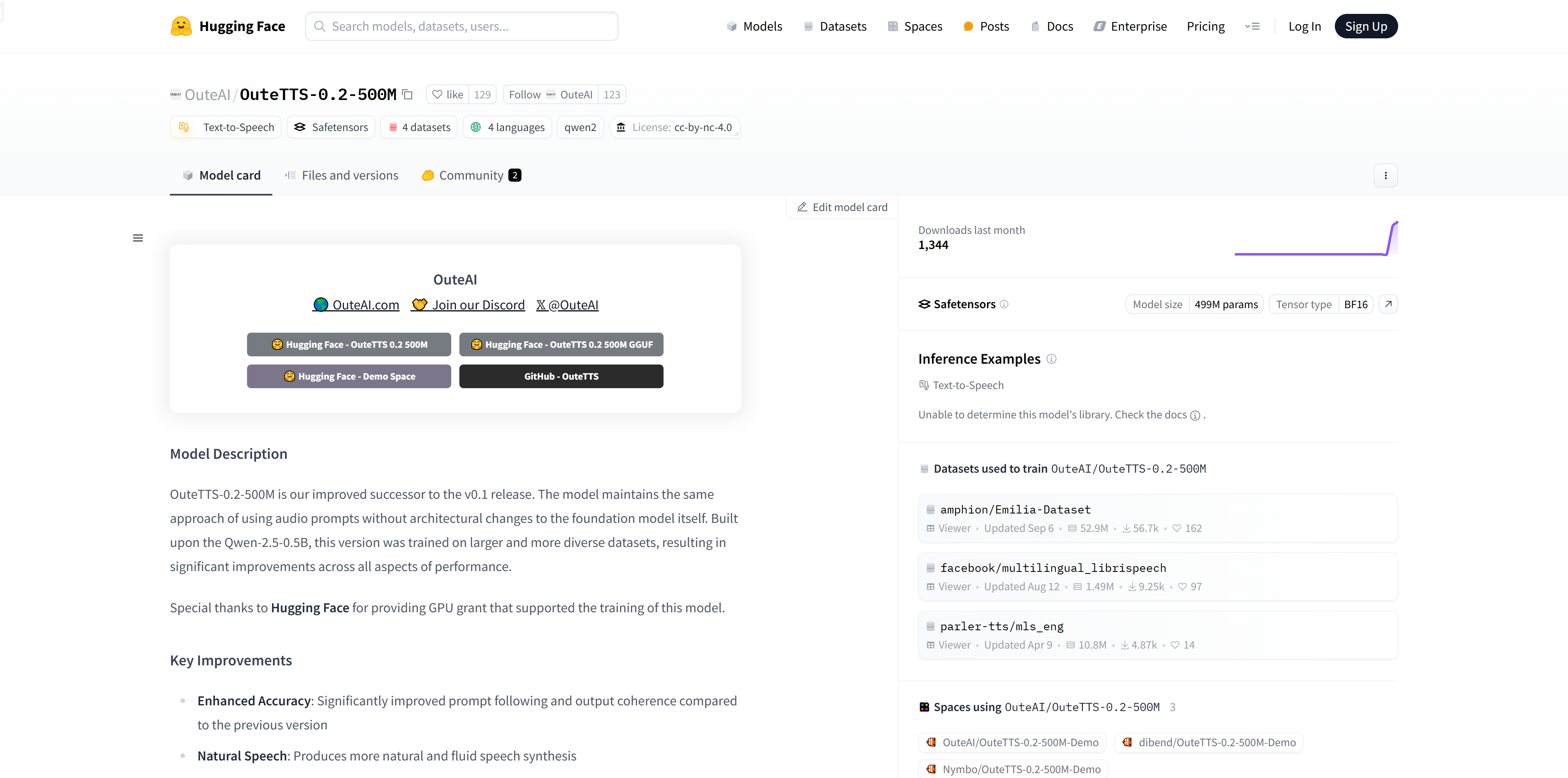

OuteTTS-0.2-500M is a text-to-speech synthesis model built on Qwen-2.5-0.5B. Compared with the previous release, it was trained on a larger dataset, which improves accuracy, naturalness, vocabulary coverage, voice cloning, and multilingual support. Development was supported by a GPU grant from Hugging Face.

Who Can Benefit from OuteTTS-0.2-500M?

This model suits developers and enterprises that need high-quality speech synthesis, for example to build voice assistants, produce audiobooks, or create other speech applications.

Example Scenarios:

Developers can use OuteTTS-0.2-500M to provide natural and smooth voice output for voice assistants.

Audiobook producers can use this model to convert text into high-quality audiobooks.

Companies can offer multilingual speech synthesis services with OuteTTS-0.2-500M, keeping in mind that Chinese, Japanese, and Korean support is still experimental.

Key Features:

Enhanced Accuracy: Improved prompt following and output coherence compared to previous versions.

Natural Voice: Generates more natural and fluent speech.

Expanded Vocabulary: Trained on over 5 billion audio prompt tokens.

Improved Voice Cloning: Offers greater diversity and accuracy in voice cloning.

Multilingual Support: Adds experimental support for Chinese, Japanese, and Korean.

High Performance: Produces high-quality speech from a compact model of about 500M parameters.

User-Friendly: A simple interface for generating speech, with adjustable generation parameters such as temperature and repetition penalty.
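Temperature and repetition penalty are the usual sampling controls for language-model decoders. The sketch below shows in plain Python what they typically do to the scores for the next token, assuming OuteTTS samples audio tokens one at a time like other LLM-based decoders and assuming the CTRL-style repetition penalty used by Hugging Face transformers. It illustrates the general technique and is not OuteTTS's own code; the function names are hypothetical, and the defaults of 0.1 and 1.1 are assumed example values.

```python
import math
import random
from typing import List, Optional


def apply_repetition_penalty(
    logits: List[float], previous_ids: List[int], penalty: float
) -> List[float]:
    """Lower the scores of tokens that were already generated (CTRL-style).

    Positive scores are divided by the penalty and negative scores are
    multiplied by it, so any penalty > 1.0 makes a repeated token less likely.
    """
    adjusted = list(logits)
    for token_id in set(previous_ids):
        score = adjusted[token_id]
        adjusted[token_id] = score / penalty if score > 0 else score * penalty
    return adjusted


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Divide scores by the temperature, then normalize them into probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(
    logits: List[float],
    previous_ids: List[int],
    temperature: float = 0.1,  # assumed example value
    repetition_penalty: float = 1.1,  # assumed example value
    rng: Optional[random.Random] = None,
) -> int:
    """Penalize repeats, apply temperature, and draw one token id."""
    rng = rng or random.Random()
    penalized = apply_repetition_penalty(logits, previous_ids, repetition_penalty)
    probs = softmax_with_temperature(penalized, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

With these definitions, lowering the temperature from 1.0 to 0.1 pushes almost all probability onto the highest-scoring token, which typically makes output more stable, while higher temperatures allow more varied results. A penalty above 1.0 discourages the decoder from looping on tokens it has already produced.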

How to Use OuteTTS-0.2-500M:

1. Install OuteTTS: Install the outetts library with pip (pip install outetts).

2. Configure Model: Create a model configuration object, specifying the model path and language.

3. Initialize Interface: Initialize the OuteTTS interface based on the configuration.

4. Generate Speech: Provide the input text, set generation parameters such as temperature and repetition penalty, and call the generation method to obtain the synthesized speech.

5. Save or Play: Save the synthesized speech to a file or play it directly.

6. Optional: For voice cloning, create a speaker profile from a reference recording and pass it to the generation step to reproduce that voice's characteristics.
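The steps above can be sketched in Python as follows. This assumes the outetts API published alongside the 0.2 release (HFModelConfig_v1, InterfaceHF, create_speaker, generate, save); later outetts versions renamed these classes, so check the documentation for the version you install. The model id OuteAI/OuteTTS-0.2-500M is assumed to be the Hugging Face repository, and the wrapper function synthesize and its parameter names are hypothetical, chosen for illustration.

```python
from typing import Optional


def synthesize(
    text: str,
    output_path: str = "output.wav",
    language: str = "en",
    temperature: float = 0.1,
    repetition_penalty: float = 1.1,
    max_length: int = 4096,
    reference_audio: Optional[str] = None,
    reference_transcript: Optional[str] = None,
) -> str:
    """Hypothetical wrapper for steps 2-6, assuming the outetts 0.2-era API."""
    # Validate voice-cloning inputs before loading anything heavy.
    if reference_audio is not None and not reference_transcript:
        raise ValueError("voice cloning needs a transcript of the reference clip")

    import outetts  # Step 1: installed with pip; imported lazily here

    # Step 2: model configuration with the model path and language
    model_config = outetts.HFModelConfig_v1(
        model_path="OuteAI/OuteTTS-0.2-500M",  # assumed Hugging Face model id
        language=language,  # "en", or experimental "zh", "ja", "ko"
    )
    # Step 3: initialize the interface from the configuration
    interface = outetts.InterfaceHF(model_version="0.2", cfg=model_config)

    # Step 6 (optional): build a speaker profile from a reference recording
    speaker = None
    if reference_audio is not None:
        speaker = interface.create_speaker(
            audio_path=reference_audio,
            transcript=reference_transcript,
        )

    # Step 4: generate speech; speaker=None leaves the voice unspecified
    output = interface.generate(
        text=text,
        temperature=temperature,
        repetition_penalty=repetition_penalty,
        max_length=max_length,
        speaker=speaker,
    )
    # Step 5: save to a file (output.play() would play it instead)
    output.save(output_path)
    return output_path
```

Calling synthesize("Speech synthesis is the artificial production of human speech.") would, under these assumptions, download the model weights on first use and write output.wav. Validating the cloning arguments before importing outetts keeps a bad call from paying the model-loading cost.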