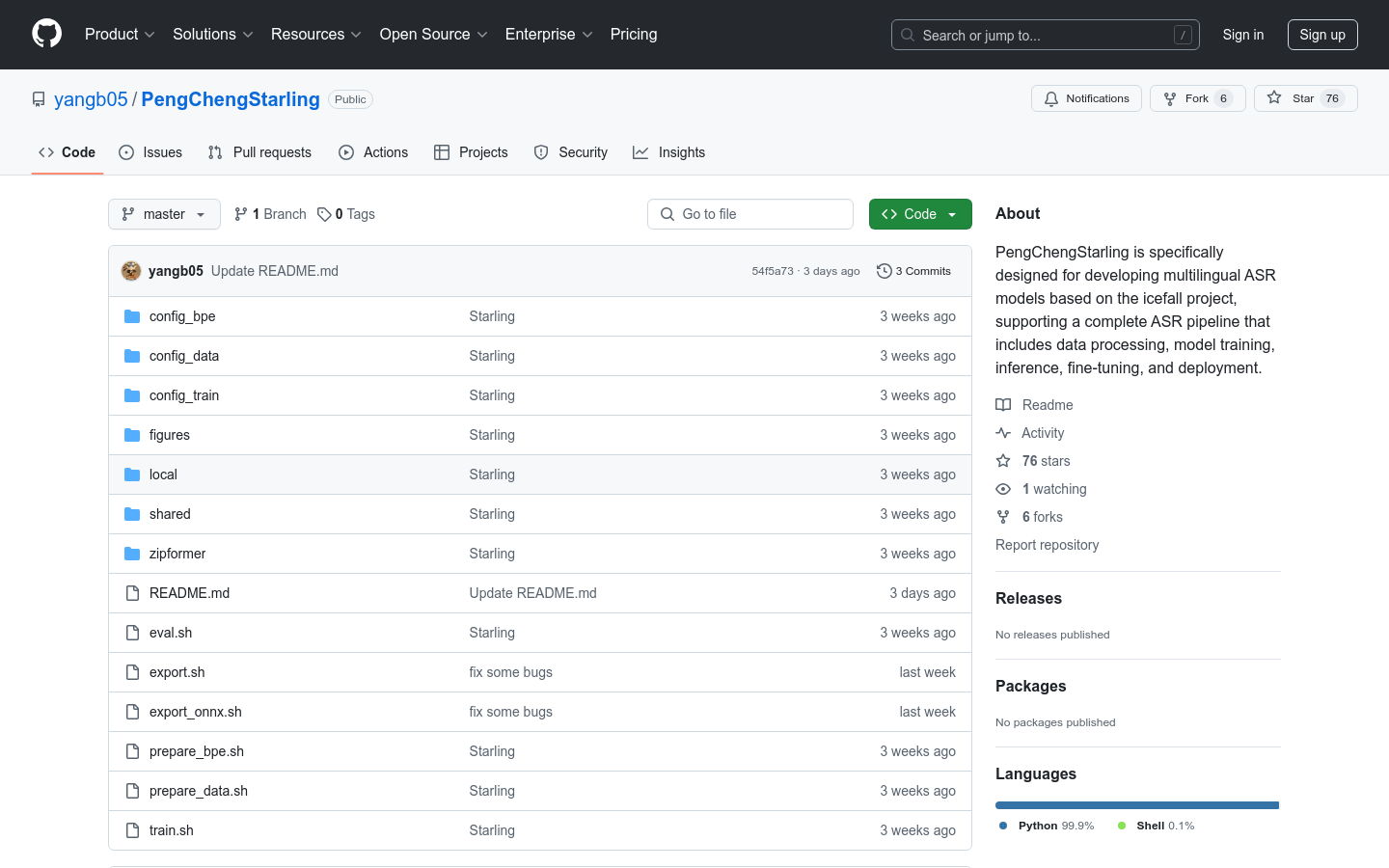

What is PengChengStarling?

PengChengStarling is an open-source toolkit for multilingual automatic speech recognition (ASR). Built on the icefall project, it covers the complete ASR pipeline from data processing to model deployment. It significantly improves the performance of multilingual ASR systems by optimizing parameter configuration and integrating language ID into the RNN-Transducer architecture. The toolkit is efficient and flexible, with fast inference, which makes it particularly well suited to real-time speech recognition.
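
To make the language ID idea concrete, here is a minimal sketch of one way a language tag can be fed to an RNN-Transducer prediction network: the tag's token takes the place of the usual start-of-sequence token. This is an illustrative assumption, not a confirmed description of the toolkit's implementation. The vocabulary, the tag names and the function `build_decoder_input` are all hypothetical.

```python
# Hypothetical vocabulary: language tags share the ID space with ordinary tokens.
VOCAB = {"<blk>": 0, "<sos>": 1, "<en>": 2, "<zh>": 3, "hello": 4, "world": 5}


def build_decoder_input(tokens, lang=None):
    """Return the token IDs fed to the prediction network.

    Without a language, the sequence starts with <sos>. With one, the
    language tag replaces <sos> (an assumed convention for this sketch).
    """
    start = VOCAB[f"<{lang}>"] if lang else VOCAB["<sos>"]
    return [start] + [VOCAB[t] for t in tokens]


monolingual = build_decoder_input(["hello", "world"])
tagged = build_decoder_input(["hello", "world"], lang="en")
print(monolingual)  # starts with the <sos> ID
print(tagged)       # starts with the <en> ID instead
```

With this convention the sequence length is unchanged, so conditioning on the language adds no extra decoding step.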

Who needs PengChengStarling?

PengChengStarling is well suited to the following groups:

Developers: Technical teams that need to build a multilingual speech recognition system.

Researchers: Those exploring the cutting edge of multilingual ASR technology.

Enterprises: Companies that need efficient solutions for smart voice assistants, customer service systems or speech-to-text applications.

Example usage scenarios

1. Intelligent voice assistant: Develop voice assistants that support multiple languages and convert speech to text in real time.

2. Multilingual customer service system: Quickly recognize customer inquiries in different languages to improve response efficiency.

3. Conference transcription: Transcribe speech in real time during multilingual conferences, with support for input in multiple languages.

Product Features

Multilingual support: Covers Chinese, English, Russian, Vietnamese, Japanese, Thai, Indonesian and Arabic.

Flexible configuration: Decouples configuration from functional code, making it easy to adapt to tasks in different languages.

Efficient inference: The streaming ASR model is 7 times faster than Whisper-Large v3, at only 20% of its model size.

Complete process: Supports data processing, model training, inference, fine-tuning and deployment.
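
As an illustration of what decoupling configuration from functional code can look like, the sketch below runs the same function against two different task configurations. It is a generic example under stated assumptions, not the toolkit's actual config schema: the class `TaskConfig`, its fields and the JSON keys are all hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class TaskConfig:
    # All field names are hypothetical, chosen only for illustration.
    languages: list
    bpe_vocab_size: int
    streaming: bool = True


def load_config(text):
    """Parse a JSON config string into a TaskConfig."""
    return TaskConfig(**json.loads(text))


def describe(cfg):
    """Task-agnostic code: its behavior depends only on the config it receives."""
    mode = "streaming" if cfg.streaming else "offline"
    langs = ", ".join(cfg.languages)
    return f"{mode} ASR for {langs} (BPE vocab {cfg.bpe_vocab_size})"


two_lang = load_config('{"languages": ["zh", "en"], "bpe_vocab_size": 2000}')
three_lang = load_config(
    '{"languages": ["ja", "th", "ar"], "bpe_vocab_size": 4000, "streaming": false}'
)
print(describe(two_lang))
print(describe(three_lang))
```

Switching to a new set of languages then means writing a new config, with no change to `describe` or any other functional code.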

Usage tutorial

1. Install dependencies: Install the required dependencies as described in the official documentation.

2. Data preparation: Use the zipformer/prepare.py script to preprocess the raw data.

3. BPE model training: Run zipformer/prepare_bpe.py to train multilingual BPE models.

4. Model training: After configuring the parameters, execute zipformer/train.py to start training.

5. Model fine-tuning: Set do_finetune to true and fine-tune the trained model on a specific dataset.

6. Model evaluation: Use zipformer/streaming_decode.py to evaluate model performance.

7. Model export: Export the model through zipformer/export.py or zipformer/export-onnx-streaming.py for deployment.
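
The script-based steps above can be sketched as an ordered list of commands. The script paths come from the tutorial. Command-line options are deliberately omitted because they are not specified here, so the sketch only prints the bare commands rather than running them. The helper `build_commands` is hypothetical, and only the ONNX streaming export is shown for the last stage.

```python
import shlex

# Script paths are taken from the tutorial steps; real runs will need the
# options documented by the project, which are not shown here.
PIPELINE = [
    ("prepare data", "zipformer/prepare.py"),
    ("train BPE model", "zipformer/prepare_bpe.py"),
    ("train model", "zipformer/train.py"),
    ("evaluate (streaming)", "zipformer/streaming_decode.py"),
    ("export (ONNX, streaming)", "zipformer/export-onnx-streaming.py"),
]


def build_commands(pipeline, python="python"):
    """Return one argument list per stage, in execution order."""
    return [[python, script] for _, script in pipeline]


commands = build_commands(PIPELINE)
for (stage, _), cmd in zip(PIPELINE, commands):
    print(f"# {stage}")
    print(shlex.join(cmd))
```

Fine-tuning has no separate entry in this list because the tutorial describes it as a setting (do_finetune set to true) rather than a separate script. Keeping the stages in one list makes the required order explicit: each stage consumes the output of the one before it.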

Why choose PengChengStarling?

PengChengStarling combines strong performance with a complete toolchain that helps developers quickly build and deploy multilingual ASR systems. Both beginners and experienced developers can meet their speech recognition needs with its flexible configuration and efficient inference.