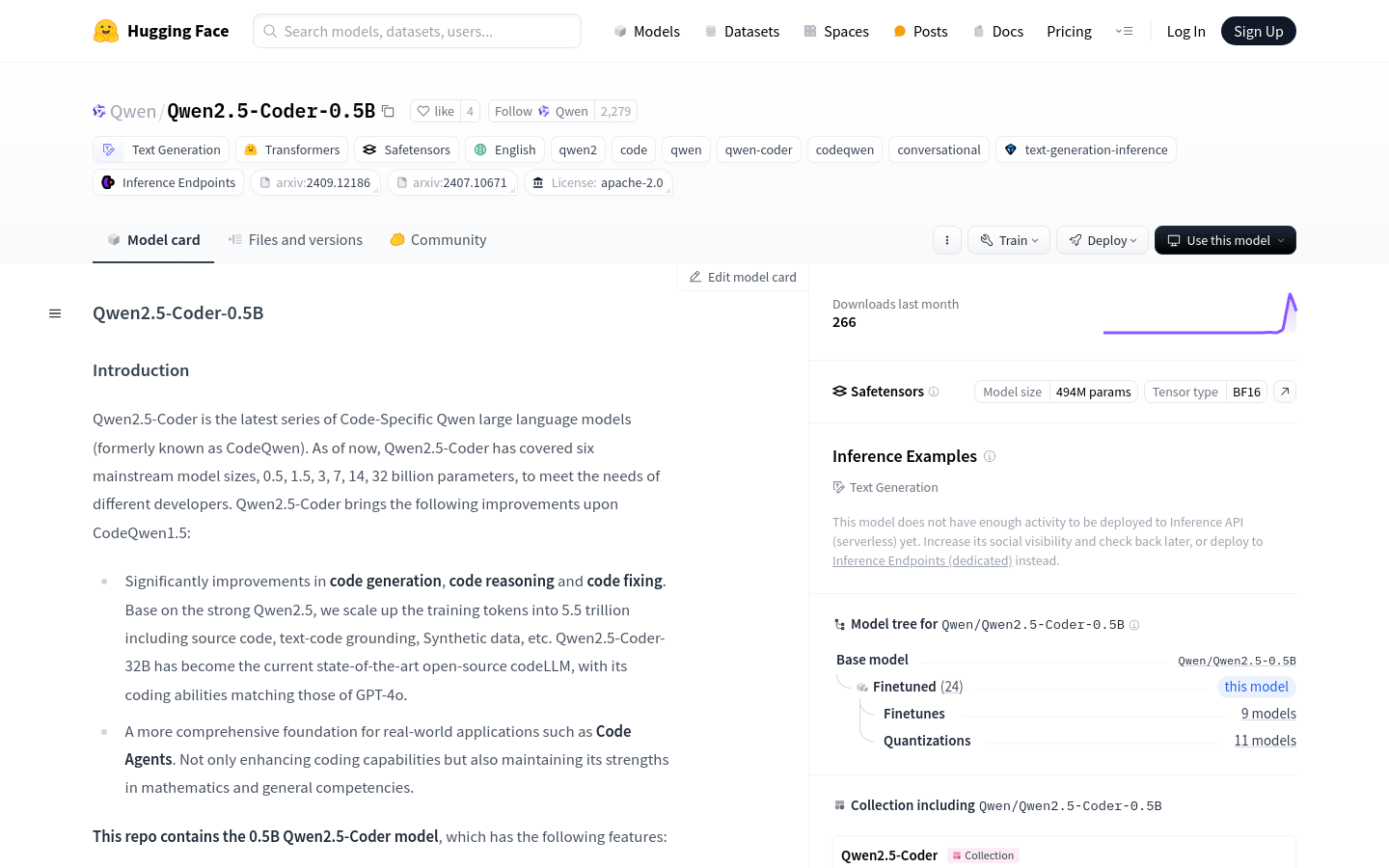

Qwen2.5-Coder is the latest series of code-specific Qwen large language models, focused on code generation, code reasoning, and code repair. Built on Qwen2.5, the series improves coding capability by scaling the training data to 5.5 trillion tokens, including source code, text-code grounding data, and synthetic data. Qwen2.5-Coder-32B is presented as the current state-of-the-art open-source code model, with coding abilities comparable to GPT-4o. The series also provides a more comprehensive foundation for practical applications such as code agents: it strengthens coding ability while retaining its strengths in mathematics and general tasks.

Target audience:

The target audience is developers, programming enthusiasts, and software engineers. The Qwen2.5-Coder series is particularly suitable for professionals who need efficient coding, code analysis, and optimization. It can help them reduce coding time, improve code quality, and solve problems quickly during development.

Example usage scenarios:

Developers use Qwen2.5-Coder-0.5B to generate new code modules to improve development efficiency.

Software engineers use the model to conduct code reviews and automatically discover potential code problems.

Programming enthusiasts use this model to learn programming languages and quickly understand language features through code examples generated by the model.

Product features:

Code generation: Significantly improved code generation helps developers implement code logic quickly.

Code reasoning: A stronger understanding of code logic improves code analysis and optimization.

Code repair: Detects and repairs errors in code, improving code quality and development efficiency.

Multi-language support: Works with multiple mainstream programming languages to meet different development needs.

Powerful pre-training base: Trained on 5.5 trillion tokens, including source code, text-code grounding data, and synthetic data.

High-performance architecture: Uses a transformer architecture with RoPE (rotary position embeddings), SwiGLU activations, and RMSNorm normalization.

Long-context processing: Supports context lengths of up to 32,768 tokens, suitable for complex coding tasks.

Open source and community support: As an open source project, it has active community support and continuous updates.
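To make the 32,768-token context length concrete, here is a minimal Python sketch of a token-budget check. It assumes that the prompt and the generated tokens share the same window, as is usual for decoder-only models, and that the prompt's token count comes from the model's tokenizer. The names `generation_budget` and `fits_in_context` are hypothetical helpers written for this illustration, not part of any library.

```python
# Context length stated for the model in the feature list above.
CONTEXT_LENGTH = 32_768


def generation_budget(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many new tokens can still be generated after the prompt.

    Hypothetical helper: assumes prompt and output share one context window.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt longer than the window leaves no room for generation.
    return max(context_length - prompt_tokens, 0)


def fits_in_context(
    prompt_tokens: int,
    max_new_tokens: int,
    context_length: int = CONTEXT_LENGTH,
) -> bool:
    """Check whether the prompt plus the requested output fits in the window."""
    return prompt_tokens + max_new_tokens <= context_length
```

A check like this can run before inference, so that a large source file is trimmed or split up front instead of being silently truncated.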

Usage tutorial:

1. Visit the Hugging Face platform and search for the Qwen2.5-Coder-0.5B model.

2. Read the model documentation to understand the specific usage and parameter configuration of the model.

3. Based on project requirements, set model inputs, such as code snippets or programming problem descriptions.

4. Use the API provided by Hugging Face, or download the model and run inference locally.

5. Analyze the model output, such as generated code or suggested fixes, and apply it to real projects.

6. Fine-tune the model as needed to suit specific development scenarios or improve performance.

7. Participate in community discussions, share usage experiences, and obtain model updates and technical support.
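Steps 3 to 5 above can be sketched in Python with the Hugging Face `transformers` library. This is an illustrative sketch under stated assumptions, not an official recipe: it assumes the model is published under the repository name `Qwen/Qwen2.5-Coder-0.5B`, that `transformers` and PyTorch are installed, and that a plain completion-style prompt suits this base (non-instruct) model. `build_prompt` and `complete_code` are hypothetical helper names introduced here.

```python
# Assumed Hugging Face repository name for the model named in step 1.
MODEL_ID = "Qwen/Qwen2.5-Coder-0.5B"


def build_prompt(description: str, signature: str) -> str:
    """Step 3: turn a task description and a function signature into a prompt.

    The description becomes comment lines so the model continues with code.
    """
    comment = "\n".join(f"# {line}" for line in description.splitlines())
    return f"{comment}\n{signature}\n"


def complete_code(prompt: str, max_new_tokens: int = 128) -> str:
    """Step 4: run local inference and return only the generated continuation."""
    # Imported lazily so build_prompt works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    inputs = tokenizer(prompt, return_tensors="pt")
    # Greedy decoding keeps the output deterministic for a given prompt.
    output_ids = model.generate(
        **inputs, max_new_tokens=max_new_tokens, do_sample=False
    )
    # Drop the prompt tokens so only newly generated text is decoded.
    new_ids = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_ids, skip_special_tokens=True)
```

Calling `complete_code(build_prompt("Return the n-th Fibonacci number.", "def fib(n: int) -> int:"))` downloads the weights on first use and returns the model's continuation. As in step 5, review and test that output before applying it to a real project.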