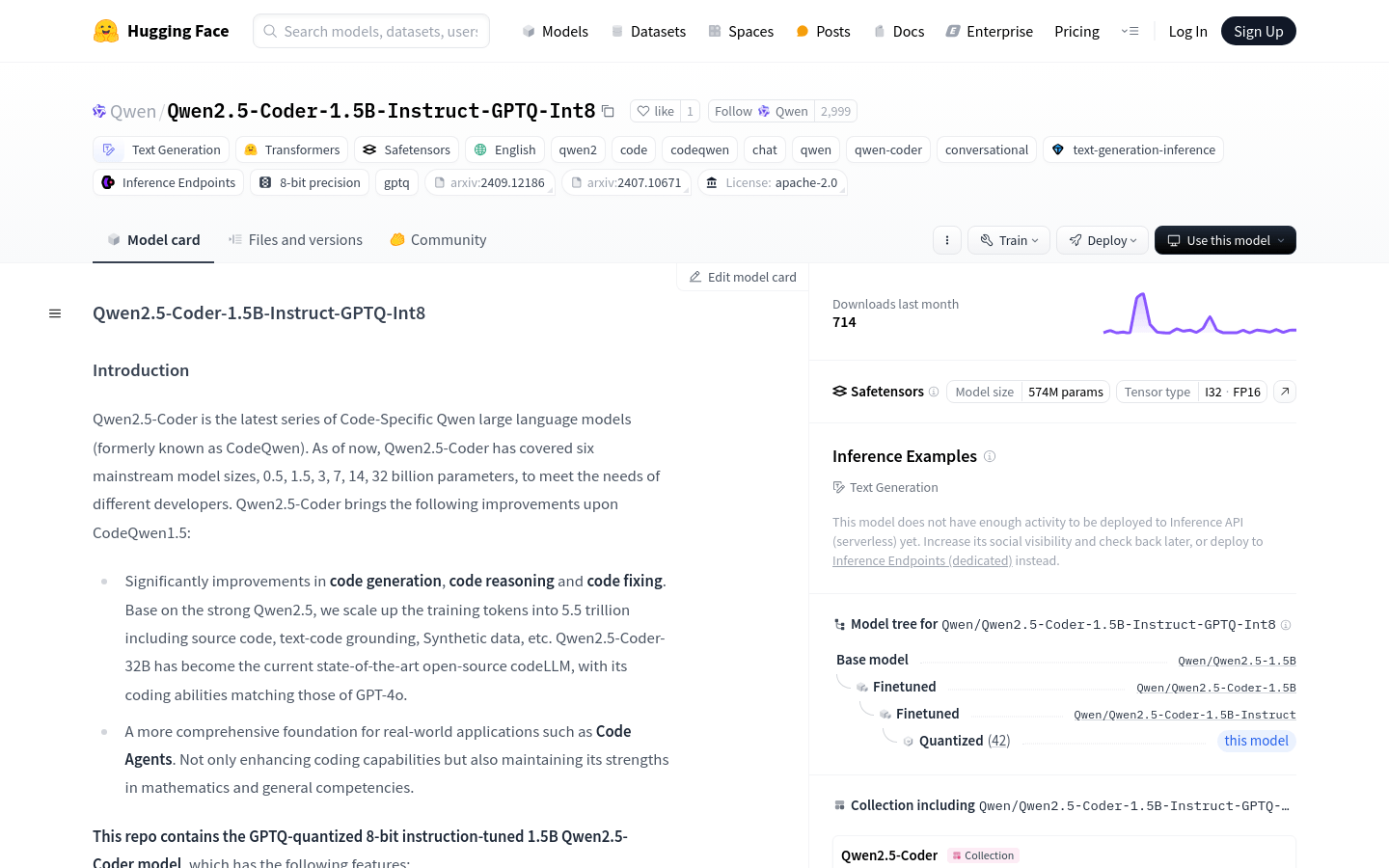

Qwen2.5-Coder is the latest series of code-specific large language models from Qwen, focusing on code generation, code reasoning, and code repair. Built on the Qwen2.5 base models, it was trained on 5.5 trillion tokens of data, including source code, text-code grounding data, and synthetic data, making it a leading model among current open-source code language models. Beyond stronger programming capabilities, the model retains its base model's strengths in mathematics and general abilities.

Target audience:

The target audience is developers and programming enthusiasts, especially those who need to quickly generate, understand, and repair code. By providing strong code generation and code understanding, the model helps them improve development efficiency and code quality.

Example usage scenarios:

A developer uses Qwen2.5-Coder to generate code for a quicksort algorithm.

Software engineers use the model to fix bugs in existing code.

Programming educators use this model to assist students in understanding complex programming concepts.
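Whichever scenario applies, generated code should be checked before it is used. For the quicksort case, one minimal sketch is a randomized differential test that compares a candidate sort function against Python's built-in `sorted()`. The `quicksort` below is a hand-written stand-in for model output, not something produced by Qwen2.5-Coder, and `check_sort` is a hypothetical helper name.

```python
import random
from typing import Callable, List


def quicksort(xs: List[int]) -> List[int]:
    """Hand-written stand-in for generated code (not actual model output)."""
    if len(xs) <= 1:
        return list(xs)
    pivot = xs[len(xs) // 2]
    less = [x for x in xs if x < pivot]
    equal = [x for x in xs if x == pivot]
    greater = [x for x in xs if x > pivot]
    return quicksort(less) + equal + quicksort(greater)


def check_sort(fn: Callable[[List[int]], List[int]], trials: int = 200, seed: int = 0) -> bool:
    """Compare fn against sorted() on random lists, including empty and duplicate-heavy ones."""
    rng = random.Random(seed)  # fixed seed so a failure is reproducible
    for _ in range(trials):
        xs = [rng.randint(-10, 10) for _ in range(rng.randint(0, 30))]
        if fn(list(xs)) != sorted(xs):  # pass a copy in case fn sorts in place
            return False
    return True
```

Calling `check_sort(quicksort)` returns `True`; swapping in a generated function in place of `quicksort` gives a quick sanity check before adopting it. A passing run is evidence, not proof, of correctness.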

Product Features:

Code generation: Significantly improved code generation helps developers implement programming tasks quickly.

Code reasoning: Enhances the model's understanding of code logic, improving the accuracy of code analysis.

Code repair: Automatically detects and fixes errors in code to improve code quality.

Full range of model sizes: Models are available from 0.5 billion to 32 billion parameters to meet the needs of different developers.

Foundation for real-world applications: Provides more comprehensive capabilities for real-world applications such as code agents.

8-bit quantization: This variant uses GPTQ 8-bit quantization to reduce memory footprint and resource consumption while aiming to preserve model quality.

Long context support: Supports context lengths up to 32,768 tokens, suitable for handling complex code.
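To put the 8-bit quantization and the 32,768-token context in concrete terms, here is a back-of-the-envelope sketch that estimates weight memory as parameters × bits ÷ 8 and checks whether a prompt plus the requested output fits in the context window. It rests on stated assumptions: it counts weights only (ignoring GPTQ scale and zero-point overhead, activations, and the KV cache), it uses nominal parameter counts, and the function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Counts weights only: ignores quantization scales/zero-points,
    activations, and the KV cache, so real memory use is higher.
    """
    return num_params * bits_per_weight / 8 / 1e9


def fits_in_context(prompt_tokens: int, max_new_tokens: int, context_len: int = 32_768) -> bool:
    """True if the prompt plus the requested completion fits in the context window."""
    return prompt_tokens + max_new_tokens <= context_len
```

For a nominal 1.5 billion parameters, `weight_memory_gb(1.5e9, 8)` gives 1.5 GB versus 3.0 GB at 16 bits, which is the main saving 8-bit weights offer. Similarly, a 32,500-token prompt leaves too little room for `max_new_tokens=512`, so long inputs may need trimming.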

How to use:

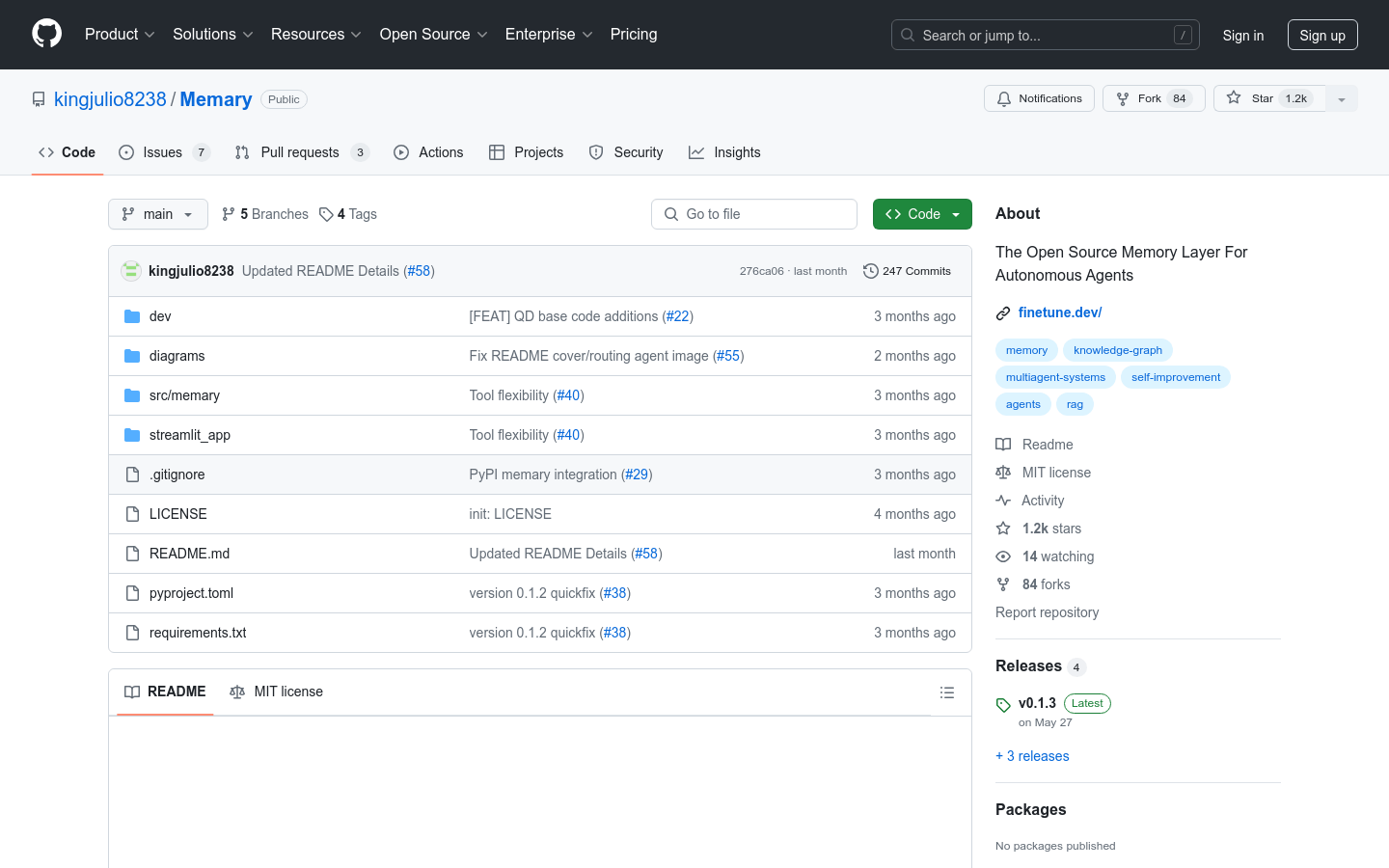

1. Visit Hugging Face and find the Qwen2.5-Coder-1.5B-Instruct-GPTQ-Int8 model.

2. Import the necessary libraries and modules, following the code example provided on the model page.

3. Load the model and tokenizer with the AutoModelForCausalLM.from_pretrained and AutoTokenizer.from_pretrained methods.

4. Prepare an input prompt, such as a request to write a specific function.

5. Generate code with the model.generate method, setting the max_new_tokens parameter to limit the length of the output.

6. Take the generated token IDs and convert them into readable code text with the tokenizer.batch_decode method.

7. Review the generated code, then adjust it as needed or use it directly.
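The steps above can be sketched in Python with Hugging Face `transformers`. This is a minimal sketch, not the model page's exact code. It assumes `transformers`, `accelerate` (needed for `device_map="auto"`), and a GPTQ backend (for example `optimum` with `auto-gptq`, or `gptqmodel`) are installed, and that the model is published under the Hub repo id `Qwen/Qwen2.5-Coder-1.5B-Instruct-GPTQ-Int8`. The helper names `build_messages`, `strip_prompt`, and `generate_code`, and the system prompt text, are hypothetical. Because this is an instruct model, the request is formatted with the tokenizer's chat template before generation.

```python
MODEL_ID = "Qwen/Qwen2.5-Coder-1.5B-Instruct-GPTQ-Int8"  # assumed Hub repo id


def build_messages(prompt: str) -> list:
    """Step 4: wrap the user's request in chat-format messages (system text is hypothetical)."""
    return [
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": prompt},
    ]


def strip_prompt(input_ids, output_ids):
    """Step 6 helper: generate() returns prompt + completion, so keep only the new tokens."""
    return [out[len(inp):] for inp, out in zip(input_ids, output_ids)]


def generate_code(prompt: str, max_new_tokens: int = 512) -> str:
    # Imported here so the helpers above can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 3: load the quantized model and its tokenizer.
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype="auto", device_map="auto")
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)

    # Step 4: render the messages with the model's chat template.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 5: generate, capping the number of new tokens.
    output_ids = model.generate(**model_inputs, max_new_tokens=max_new_tokens)

    # Step 6: drop the echoed prompt tokens and decode the rest to text.
    new_ids = strip_prompt(model_inputs.input_ids, output_ids)
    return tokenizer.batch_decode(new_ids, skip_special_tokens=True)[0]
```

Usage: `print(generate_code("Write a quicksort algorithm in Python."))`. Trimming the prompt tokens matters because `model.generate` returns the input IDs followed by the new tokens; decoding the full sequence would repeat the prompt in the output.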