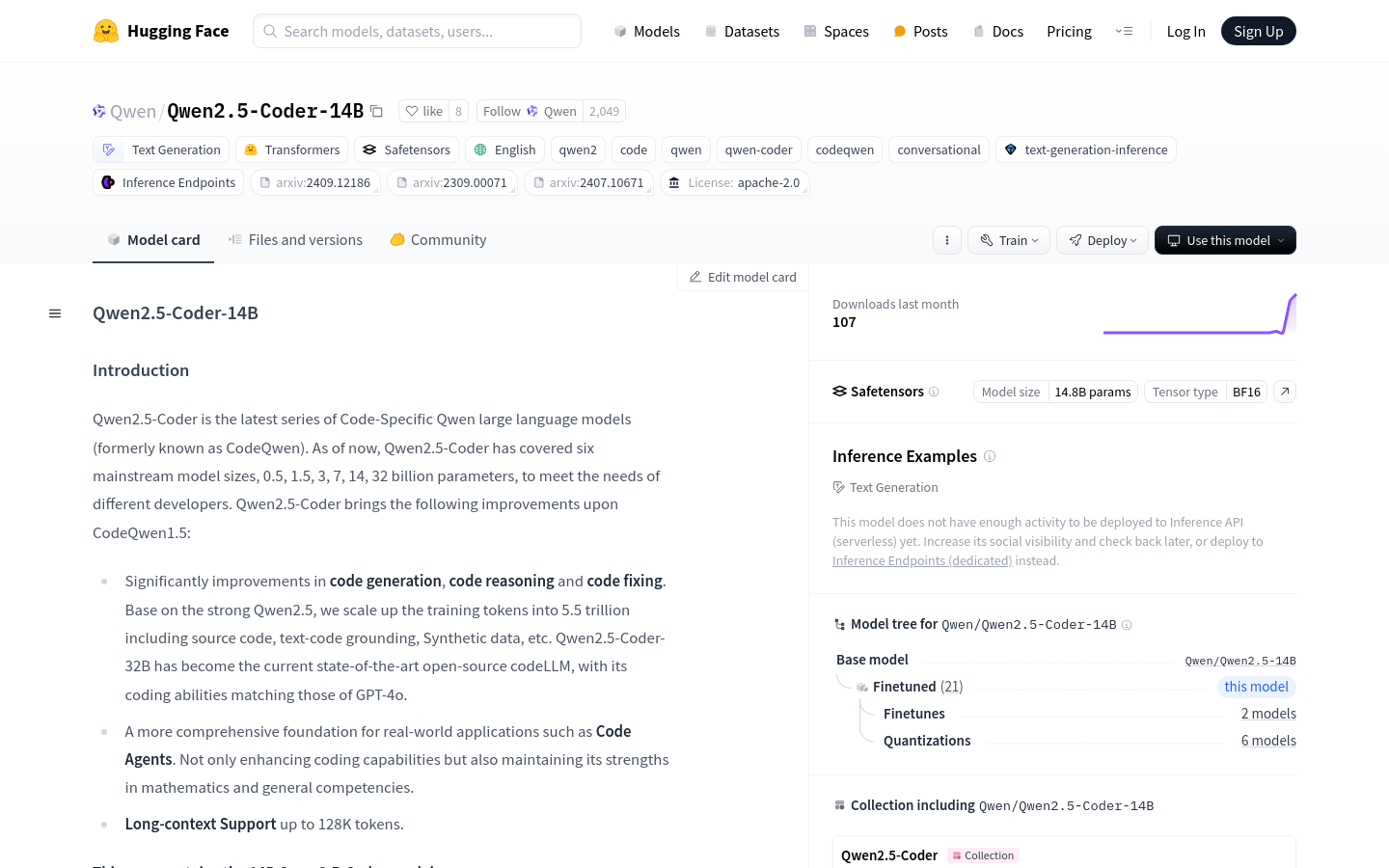

Qwen2.5-Coder-14B is a code-focused large language model in the Qwen series. The Qwen2.5-Coder family covers model sizes from 0.5 to 32 billion parameters to meet the needs of different developers. The model brings significant improvements in code generation, code reasoning, and code repair. Building on Qwen2.5, its training data was expanded to 5.5 trillion tokens, including source code, text-code grounding data, synthetic data, and more. Qwen2.5-Coder-32B is currently the most advanced open-source code LLM, with coding capabilities that match GPT-4o. The series also provides a more comprehensive foundation for real-world applications such as code agents: it enhances coding capabilities while maintaining its strengths in mathematics and general tasks. The model supports long contexts of up to 128K tokens.

Target audience:

The target audience is developers, programming enthusiasts, and professionals who work with large amounts of code. Qwen2.5-Coder-14B helps them improve development efficiency, reduce errors, and handle complex programming tasks by providing powerful code generation, reasoning, and repair capabilities.

Example usage scenarios:

Developers use Qwen2.5-Coder-14B to generate new code modules to improve development efficiency.

During code review, reviewers use the model to reason about code logic and catch potential problems early.

When maintaining an old code base, use the model to fix discovered code errors and reduce maintenance costs.

Product features:

Code generation: Significantly improved code generation helps developers quickly implement code logic.

Code reasoning: A stronger understanding of code logic improves the accuracy of code analysis.

Code repair: Helps developers find and fix errors in code, improving code quality.

Long context support: Supports long contexts up to 128K tokens, suitable for handling large projects.

Transformer architecture: Built on the Transformer architecture.

Parameter count: 14.7B parameters.

Multi-domain application: Performs well not only in programming but also in mathematics and general tasks.
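The two numeric features above (14.7B parameters and a 128K-token context) translate into simple planning arithmetic. The following is a rough sketch only: the helper names are hypothetical, "128K" is assumed to mean 128 × 1024 tokens, and the memory figure counts weights alone, ignoring activations and the KV cache.

```python
PARAM_COUNT = 14.7e9          # parameter count stated in the feature list
CONTEXT_LIMIT = 128 * 1024    # assumption: "128K tokens" read as 131,072


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    limit: int = CONTEXT_LIMIT) -> bool:
    """Check that the prompt plus the requested generation stays within the limit."""
    return prompt_tokens + max_new_tokens <= limit


if __name__ == "__main__":
    # 2 bytes per parameter corresponds to 16-bit weights (fp16 / bf16).
    print(f"16-bit weights: about {weight_memory_gb(PARAM_COUNT, 2):.1f} GB")
    print("130,000-token prompt + 1,000 new tokens fits:",
          fits_in_context(130_000, 1_000))
```

For exact prompt sizes, count tokens with the model's own tokenizer instead of estimating them.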

Usage tutorial:

1. Visit the Hugging Face platform and search for the Qwen2.5-Coder-14B model.

2. Select the appropriate task (code generation, reasoning, or repair) based on project needs.

3. Prepare input data, such as code snippets or programming problem descriptions.

4. Pass the input to the model and collect its output.

5. Review the model output and use it to guide the next step of code development or maintenance.

6. If necessary, fine-tune the model to suit specific development environments or needs.

7. Continuously monitor model performance and optimize based on feedback.
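Steps 1 to 4 can be sketched with the Hugging Face `transformers` library. This is a minimal illustration, not an official recipe: the repo id `Qwen/Qwen2.5-Coder-14B` is assumed, the prompt templates and the `build_prompt` / `run_model` helpers are hypothetical, and `device_map="auto"` assumes the `accelerate` package is installed.

```python
# Assumption: the base model is published on Hugging Face under this repo id.
MODEL_ID = "Qwen/Qwen2.5-Coder-14B"

# Hypothetical completion-style templates for the three task types (step 2).
TEMPLATES = {
    "generate": "Write code for the following task.\n\nTask:\n{source}\n\nCode:\n",
    "reason": ("Explain what the following code does and point out potential "
               "problems.\n\nCode:\n{source}\n\nAnalysis:\n"),
    "repair": "Fix the bugs in the following code.\n\nBuggy code:\n{source}\n\nFixed code:\n",
}


def build_prompt(task: str, source: str) -> str:
    """Step 3: wrap a code snippet or problem description in a task template."""
    if task not in TEMPLATES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TEMPLATES)}")
    return TEMPLATES[task].format(source=source.strip())


def run_model(prompt: str, max_new_tokens: int = 256) -> str:
    """Step 4: load the model, generate a continuation, return only the new text."""
    # Imported lazily so build_prompt works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the generated continuation is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `run_model(build_prompt("repair", snippet))` would return the model's proposed fix as text, which still needs human review (step 5). Loading the weights downloads a large checkpoint and requires substantial memory, so the sketch keeps that work inside `run_model` and does nothing heavy on import.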