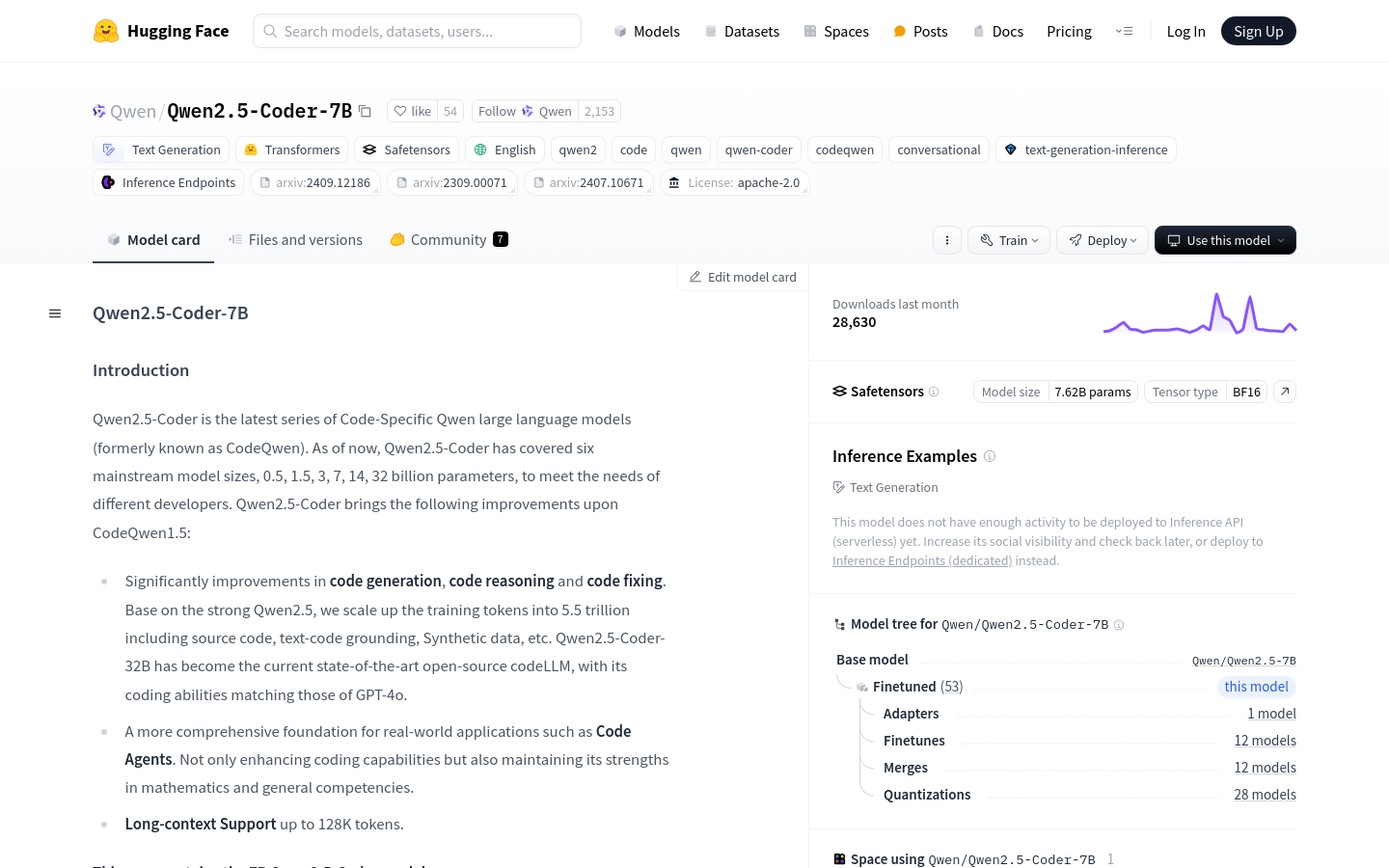

Qwen2.5-Coder-7B is a large language model built on Qwen2.5 and specialized for code generation, code reasoning, and code repair. Its training was scaled up to 5.5 trillion tokens, including source code, text-code grounding data, and synthetic data, which makes the Qwen2.5-Coder series one of the latest advances among open-source code language models. The largest model in the series, Qwen2.5-Coder-32B, is reported to match GPT-4o in coding ability; the series also retains strong mathematical and general capabilities, and Qwen2.5-Coder-7B supports contexts of up to 128K tokens.

Target audience:

The target audience is developers and programmers, especially those who work with large amounts of code and complex projects. Qwen2.5-Coder-7B helps them improve development efficiency and code quality through strong code generation, reasoning, and repair capabilities.

Example usage scenarios:

Developers use Qwen2.5-Coder-7B to autocomplete code and speed up coding.

During code review, the model's code reasoning ability helps uncover potential problems in the code.

When maintaining large codebases, the model's long-context support helps it handle complex dependencies across code.
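For the autocompletion and large-codebase scenarios above, the Qwen2.5-Coder README describes two prompt layouts for the base model: fill-in-the-middle (FIM), where the model writes the code between a given prefix and suffix, and repository-level completion, where several files are concatenated into one long prompt. The sketch below only builds those prompt strings. The special-token spellings are assumed to match the README and should be checked against the tokenizer's vocabulary before use; the helper names `fim_prompt` and `repo_prompt` are hypothetical.

```python
# Special tokens assumed from the Qwen2.5-Coder README; verify them against the tokenizer.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"
REPO_NAME = "<|repo_name|>"
FILE_SEP = "<|file_sep|>"


def fim_prompt(prefix: str, suffix: str) -> str:
    """Hypothetical helper: ask the model for the code between `prefix` and `suffix`."""
    # The model is expected to generate the missing middle after FIM_MIDDLE.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def repo_prompt(repo_name: str, files: dict[str, str]) -> str:
    """Hypothetical helper: concatenate files into one repository-level prompt.

    Dicts keep insertion order, so the last entry is the file being completed
    and its content can be left unfinished.
    """
    parts = [f"{REPO_NAME}{repo_name}"]
    for path, content in files.items():
        # Each file starts with the separator token, then its path, then its content.
        parts.append(f"{FILE_SEP}{path}\n{content}")
    return "\n".join(parts)
```

Either string is then passed to the base model as an ordinary prompt; for FIM, the generated text is the missing middle section.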

Product Features:

Code generation: significantly improved over its predecessor, CodeQwen1.5, helping developers implement code logic quickly.

Code reasoning: enhanced understanding of code logic, making code review and optimization more efficient.

Code repair: automatically detects and fixes errors in code, reducing debugging time.

Long-context support: handles contexts of up to 128K tokens (131,072), which suits large codebases.

Architecture: a Transformer that uses RoPE, SwiGLU, RMSNorm, and attention QKV bias.

Number of parameters: 7.61B in total, of which 6.53B are non-embedding parameters.

Layers and attention heads: 28 layers, with 28 attention heads for queries (Q) and 4 for keys and values (KV), i.e., grouped-query attention.
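The layer and head counts above determine how much memory the key/value cache needs, which matters at the 128K-token context length. The sketch below is a rough estimate for a single sequence. It assumes a head dimension of 128 (the hidden size of 3584 in the published config divided by 28 query heads) and 2-byte bfloat16 values, and it ignores weights, activations, and framework overhead. The `mha` configuration is hypothetical and exists only for comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionConfig:
    num_layers: int = 28    # from the model card
    num_q_heads: int = 28   # from the model card
    num_kv_heads: int = 4   # from the model card
    head_dim: int = 128     # assumption: hidden size 3584 / 28 query heads


def kv_cache_bytes(cfg: AttentionConfig, num_tokens: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens of one sequence."""
    # Factor 2: every layer stores one key tensor and one value tensor per token.
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * bytes_per_value
    return per_token * num_tokens


gqa = AttentionConfig()                 # grouped-query attention as described above
mha = AttentionConfig(num_kv_heads=28)  # hypothetical: one KV head per query head

context = 128 * 1024  # 131,072 tokens
gib = 1024 ** 3
print(f"queries per KV head: {gqa.num_q_heads // gqa.num_kv_heads}")
print(f"GQA KV cache at 128K tokens: {kv_cache_bytes(gqa, context) / gib:.1f} GiB")
print(f"MHA KV cache at 128K tokens: {kv_cache_bytes(mha, context) / gib:.1f} GiB")
```

Under these assumptions each KV head serves 7 query heads, and the cache for a full 131,072-token sequence is about 7 GiB, compared with about 49 GiB if every query head had its own KV head.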

How to use:

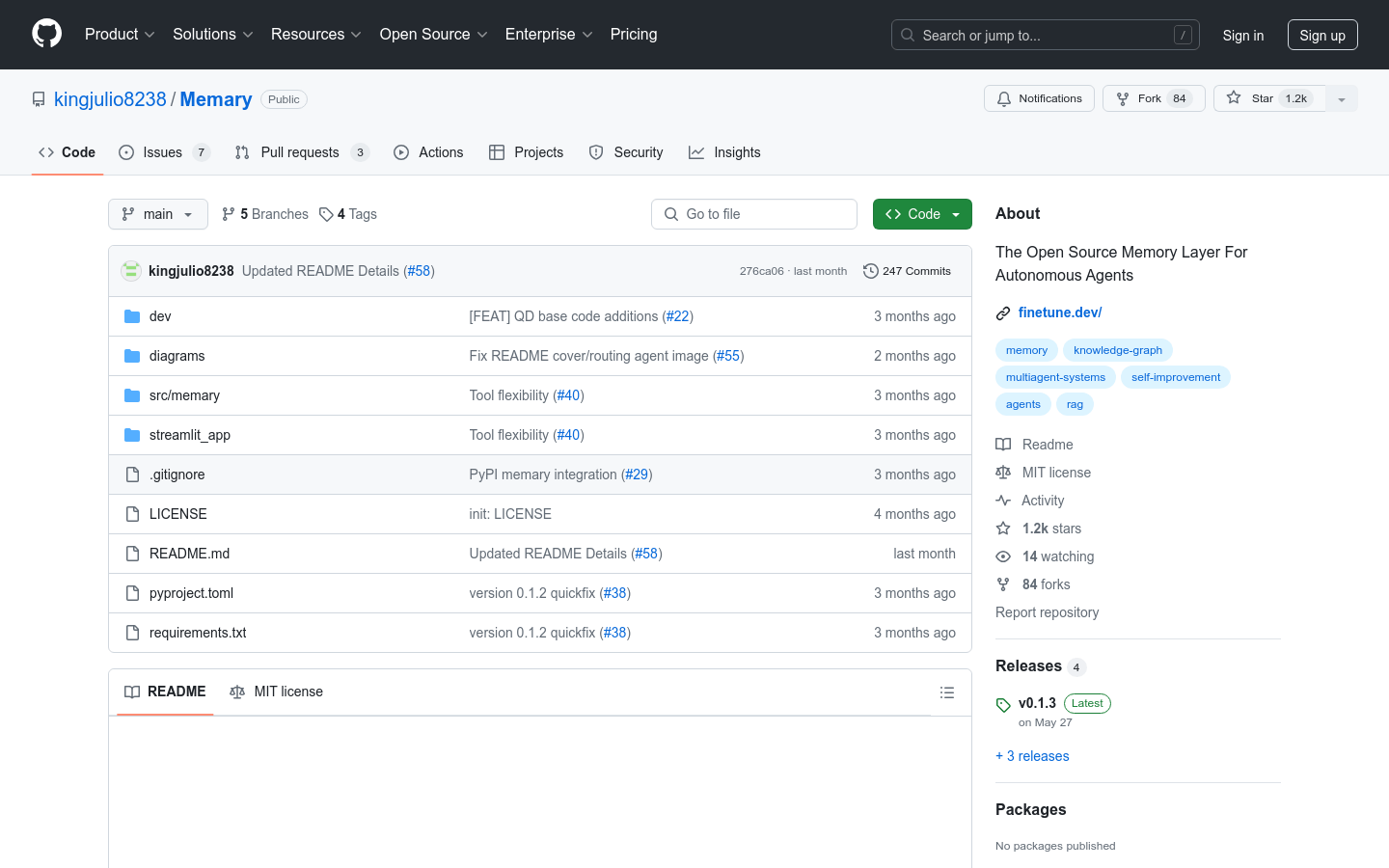

1. Visit Hugging Face and search for the Qwen2.5-Coder-7B model.

2. Read the model card to learn the model's details, license, and usage terms.

3. Download the model, or deploy it directly on the platform, depending on project requirements.

4. Set up the environment and load the model with the Hugging Face Transformers library.

5. Enter a code-related prompt or instruction; the model generates the corresponding code or provides code-related reasoning.

6. Review the model's output and adjust or refine it as needed.

7. Apply the generated or optimized code in real projects to improve development efficiency.

8. Fine-tune the model, if needed, to suit a specific development environment or set of requirements.
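Steps 4 and 5 can be sketched roughly as follows. This is a minimal sketch, assuming `transformers`, `torch`, and `accelerate` are installed, that enough memory is available for a 7.61B-parameter model, and that `Qwen/Qwen2.5-Coder-7B` is the Hub repository ID; the helper names `load_model` and `complete` are hypothetical. It treats the base model as a plain completion model. The model card advises against using the base model for conversations, so the separate Qwen2.5-Coder-7B-Instruct checkpoint (prompted through `tokenizer.apply_chat_template`) is the usual choice for instruction-style queries.

```python
# Minimal sketch: load Qwen2.5-Coder-7B with Hugging Face Transformers and continue a code prompt.
# Assumes `transformers`, `torch`, and `accelerate` are installed.

MODEL_ID = "Qwen/Qwen2.5-Coder-7B"  # base (completion) checkpoint on the Hugging Face Hub


def load_model(model_id: str = MODEL_ID):
    """Hypothetical helper: fetch (or read from the local cache) the tokenizer and weights."""
    # Imported inside the function so this file can be imported without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype="auto",  # keep the dtype stored in the checkpoint
        device_map="auto",   # place weights on available devices; requires `accelerate`
    )
    return tokenizer, model


def complete(tokenizer, model, prompt: str, max_new_tokens: int = 256) -> str:
    """Hypothetical helper: return only the newly generated continuation of `prompt`."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    prompt_len = inputs["input_ids"].shape[1]
    # Slice off the echoed prompt tokens before decoding.
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)
```

Usage: call `tokenizer, model = load_model()` once, then `complete(tokenizer, model, "def fibonacci(n):\n")` for each prompt. Loading is kept separate from generation so the weights are read only once per session.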