What is Sana?

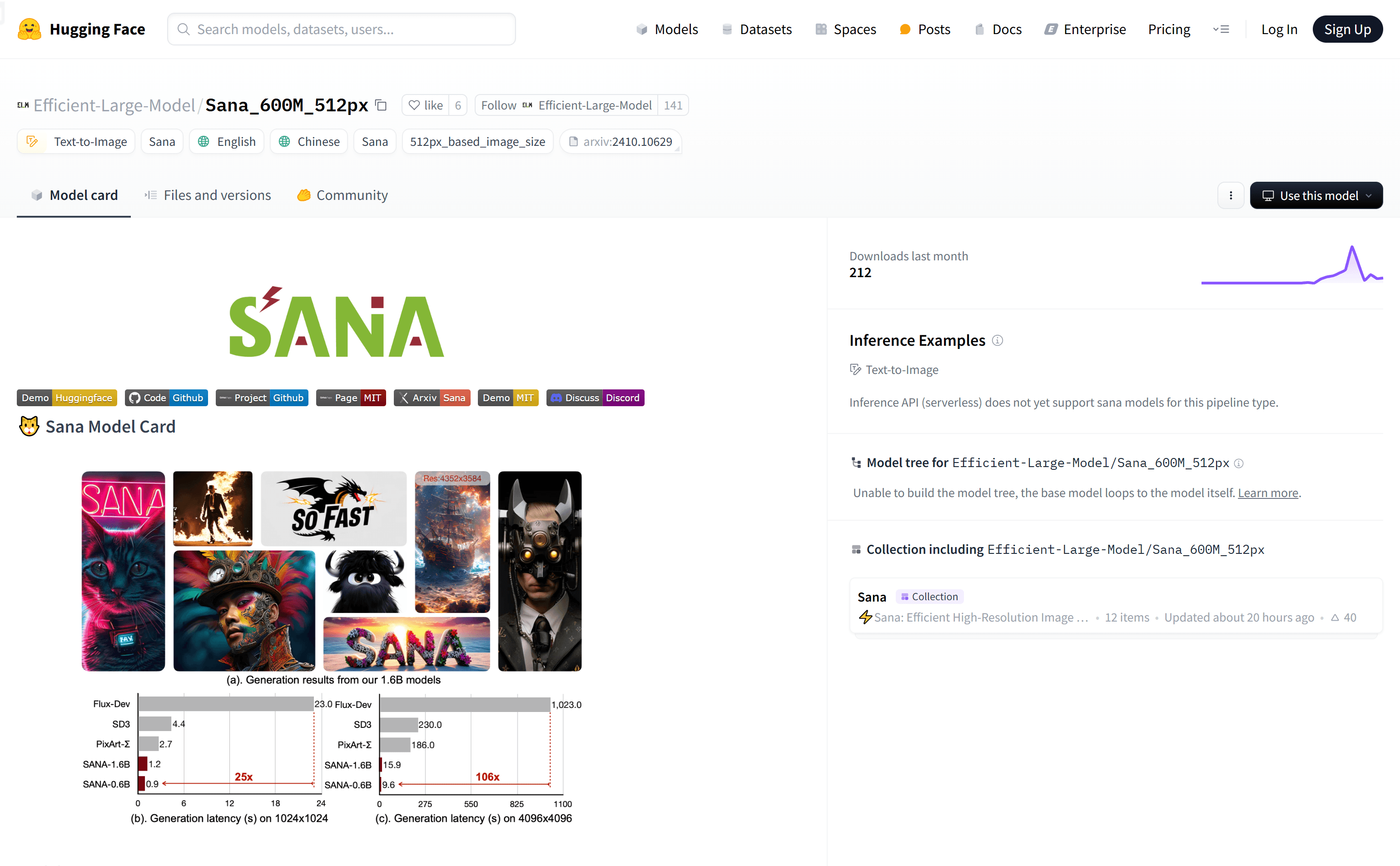

Sana is a text-to-image generation framework developed by NVIDIA that efficiently produces images at resolutions up to 4096x4096. It combines fast generation with strong text-image alignment and is light enough to run on a laptop GPU. The model pairs a linear diffusion transformer with a pre-trained text encoder and a spatially compressed latent feature encoder to generate and modify images from text prompts.
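To see why spatial compression matters at high resolution, it helps to count the tokens the diffusion transformer has to process. The sketch below is illustrative arithmetic only: the helper `latent_tokens` is hypothetical, and the downsampling factors (an 8x encoder with 2x2 patches as a typical latent-diffusion baseline, versus a 32x encoder with no patching) are assumptions chosen for the comparison, not values stated in this article.

```python
def latent_tokens(height: int, width: int, downsample: int, patch: int = 1) -> int:
    """Count the tokens a diffusion transformer sees for one image.

    `downsample` is the encoder's spatial compression factor per side and
    `patch` is the transformer's patch size per side (both assumed values).
    """
    stride = downsample * patch
    if height % stride or width % stride:
        raise ValueError(f"height and width must be multiples of {stride}")
    return (height // stride) * (width // stride)


# Compare an assumed 8x encoder with 2x2 patches against an assumed 32x encoder.
for side in (1024, 4096):
    baseline = latent_tokens(side, side, downsample=8, patch=2)
    compressed = latent_tokens(side, side, downsample=32, patch=1)
    print(f"{side}x{side}: {baseline} tokens vs {compressed} tokens")
```

Under these assumed factors, a 4096x4096 image drops from 65536 tokens to 16384, a 4x reduction. Because linear attention scales with the token count rather than its square, the two ideas compound at high resolution.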

Sana is well suited to researchers, artists, designers, and educators. Researchers can use it to study and improve image generation techniques. Artists and designers can quickly produce high-quality artwork and design sketches. Educators can use it as a teaching aid to show students how image generation works and where it is applied.

Use Case Examples:

Artists can use Sana to generate art pieces based on specific textual descriptions.

Designers can use Sana to rapidly create product prototypes, speeding up the design process.

Educators can demonstrate how to generate images from text in classrooms, enhancing students' understanding of AI technologies.

Key Features:

High-Resolution Image Generation: Generates detailed images up to 4096x4096.

Strong Text-Image Alignment: Generated images closely follow the content of the text prompt, and generation itself is fast.

Laptop GPU Deployment: Efficient enough to run on a laptop GPU.

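Linear Diffusion Transformers: Uses a diffusion transformer with linear attention, whose cost grows linearly rather than quadratically with the number of image tokens, keeping high-resolution generation fast.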

Pre-Trained Text Encoders: Reuses a pre-trained language model to encode prompts, which improves prompt understanding and generalization.

Spatially Compressed Latent Feature Encoders: Encodes images into a heavily downsampled latent space, so the transformer processes far fewer tokens at high resolution.

Open Source Code: Available on GitHub for research and further development.

Using Sana:

1. Visit the Sana model page on Hugging Face for basic information and the terms of use.

2. Read and understand the model’s usage scope and limitations to ensure compliance.

3. Download the code from the Sana repository on GitHub and install the required software and dependencies.

4. Set up text prompts and parameters according to the documentation and start the image generation process.

5. Evaluate the generated images for quality and accuracy, adjusting parameters if needed.

6. Apply the generated images to research, art creation, design, or education.

7. Engage in community discussions, share experiences, and provide feedback on usage.
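Steps 3 to 5 above can be sketched in Python with the Hugging Face `diffusers` library, which ships a `SanaPipeline` class in recent releases. This is a minimal sketch under assumptions, not the official workflow: the checkpoint name, the default step count and guidance scale, and the multiple-of-32 size check are all assumptions to verify against the model page, and `build_generation_args` and `generate_image` are hypothetical helper names.

```python
from typing import Any, Dict

# Assumed checkpoint name; confirm the exact id on the Hugging Face model page.
DEFAULT_MODEL_ID = "Efficient-Large-Model/Sana_1600M_1024px_diffusers"


def build_generation_args(
    prompt: str,
    height: int = 1024,
    width: int = 1024,
    steps: int = 20,
    guidance_scale: float = 4.5,
) -> Dict[str, Any]:
    """Validate user settings and return keyword arguments for the pipeline call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    # Assumption: image sides must be multiples of 32.
    if height % 32 or width % 32:
        raise ValueError("height and width must be multiples of 32")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return {
        "prompt": prompt,
        "height": height,
        "width": width,
        "num_inference_steps": steps,
        "guidance_scale": guidance_scale,
    }


def generate_image(prompt: str, model_id: str = DEFAULT_MODEL_ID, **settings: Any):
    """Load the pipeline and generate one image (needs a CUDA GPU and a model download)."""
    # Heavy imports live here so the validation helper works without them installed.
    import torch
    from diffusers import SanaPipeline

    args = build_generation_args(prompt, **settings)
    pipe = SanaPipeline.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    pipe.to("cuda")
    return pipe(**args).images[0]  # a PIL image
```

Calling `generate_image("a red fox in fresh snow").save("fox.png")` would then cover step 4. Changing `steps` or `guidance_scale` and regenerating corresponds to the parameter adjustment in step 5.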