ShieldGemma

ShieldGemma is a family of text-based safety filters from Google that detect harmful content in four categories, helping developers and platforms maintain compliance and quality.

What is ShieldGemma?

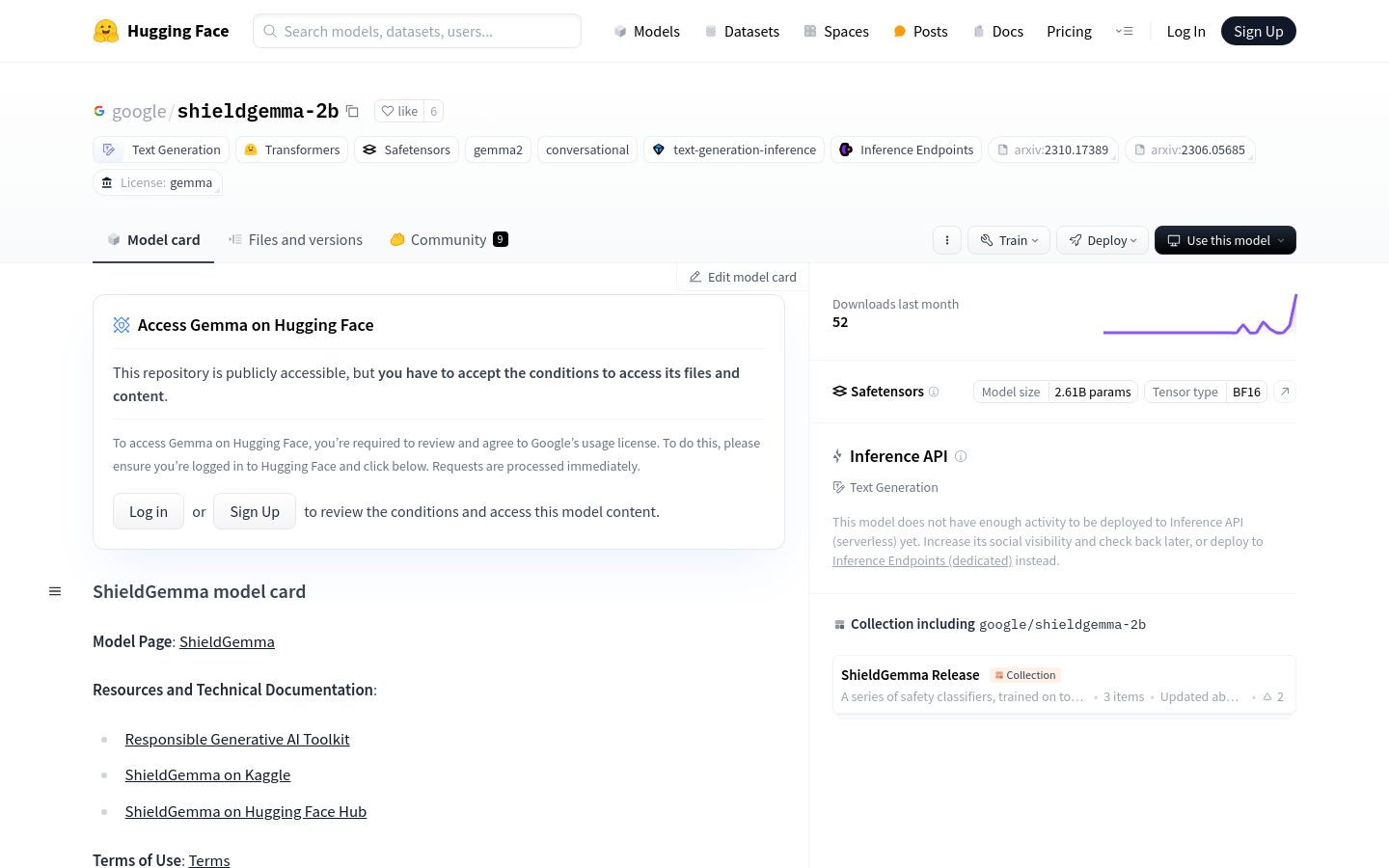

ShieldGemma is a family of text-to-text, decoder-only large language models developed by Google for content moderation. It targets four harm categories: sexually explicit content, dangerous content, hate speech, and harassment. The models are available in English only and come in three sizes: 2B, 9B, and 27B parameters. They are designed to help developers and enterprises maintain compliance and quality on their platforms by filtering out policy-violating content.
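To make the filtering step concrete, here is a minimal sketch of how a text-to-text safety model of this kind is typically used as a classifier: the application asks one yes/no question per policy and turns the model's scores for the two answers into a violation probability. Everything named here is an assumption for illustration: the `POLICIES` wording, the prompt layout in `build_prompt`, the helper names, and the 0.5 threshold are hypothetical and are not taken from Google's documentation. The model call itself is left out, so the logits are plain inputs.

```python
import math

# Hypothetical guideline wording for three of the categories named above.
# Real deployments should use the policy text published with the model.
POLICIES = {
    "dangerous content": "The prompt shall not seek instructions for harming oneself or others.",
    "hate speech": "The prompt shall not target identity or protected attributes.",
    "harassment": "The prompt shall not contain malicious, intimidating, or abusive content.",
}


def build_prompt(user_text: str, category: str) -> str:
    """Assemble a yes/no moderation question for one policy category.

    The layout is illustrative only, not the official prompt template.
    """
    guideline = POLICIES[category]
    return (
        "You are a policy expert deciding whether a user prompt violates "
        "the safety policy below.\n\n"
        f"User prompt: {user_text.strip()}\n\n"
        f"Safety policy ({category}): {guideline}\n\n"
        "Does the user prompt violate the policy? Answer 'Yes' or 'No'.\n"
    )


def violation_probability(yes_logit: float, no_logit: float) -> float:
    """Two-way softmax over the answer logits; returns P('Yes')."""
    top = max(yes_logit, no_logit)  # subtract the max for numerical stability
    e_yes = math.exp(yes_logit - top)
    e_no = math.exp(no_logit - top)
    return e_yes / (e_yes + e_no)


def is_violation(yes_logit: float, no_logit: float, threshold: float = 0.5) -> bool:
    """Flag the text when P('Yes') reaches the (assumed) threshold."""
    return violation_probability(yes_logit, no_logit) >= threshold
```

In practice, `build_prompt` would be run once per category, the resulting prompt sent to whichever model size is deployed, and the logits of the 'Yes' and 'No' tokens at the next position passed to `is_violation`. A platform that prefers fewer false positives could raise the threshold above 0.5.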