What is Show-o?

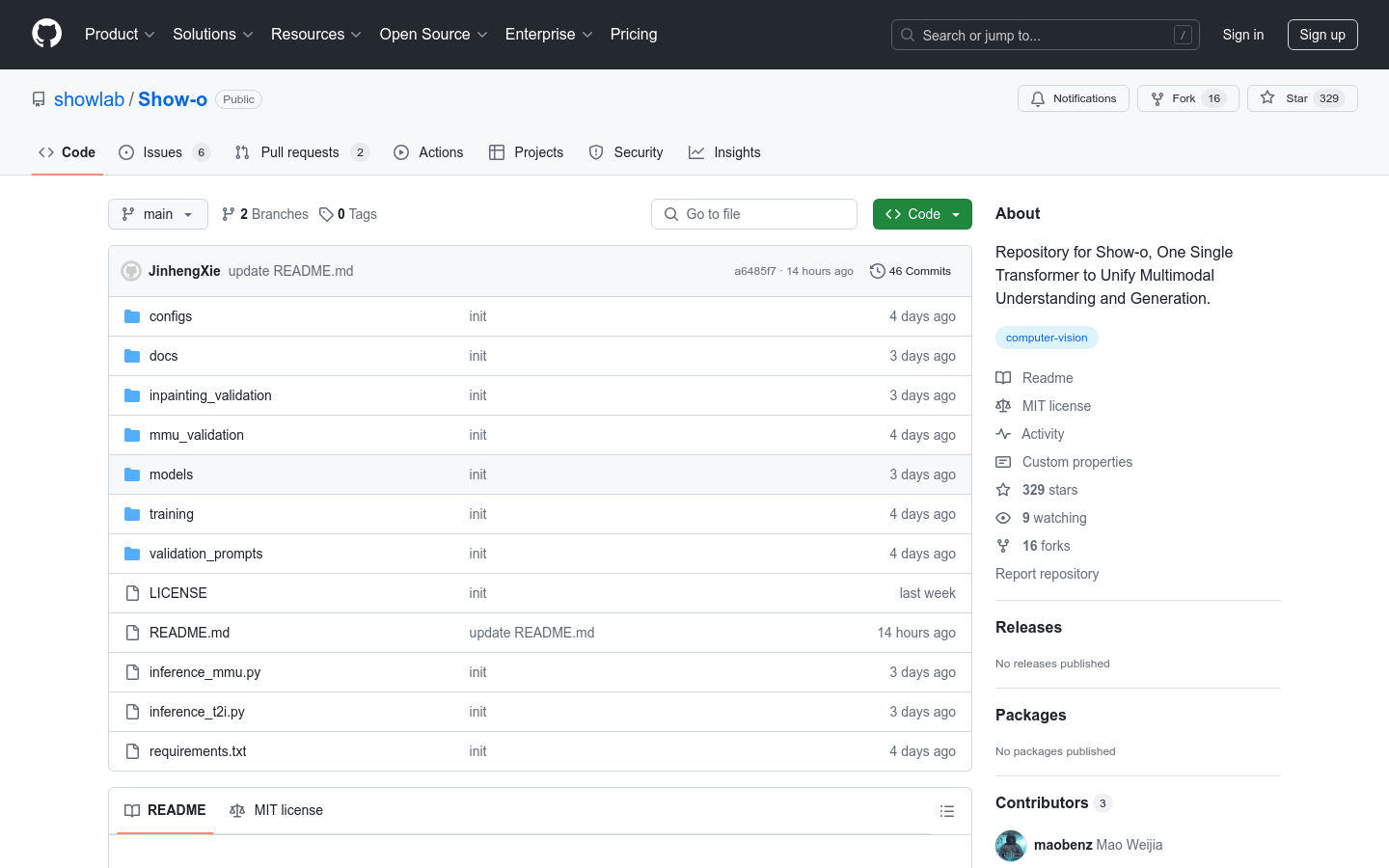

Show-o is a unified transformer model developed jointly by Show Lab at the National University of Singapore and ByteDance. A single model handles both understanding and generation of multimodal data, supporting tasks such as image captioning, visual question answering, text-to-image generation, text-guided image inpainting and expansion, and hybrid multimodal generation.

Who Can Use Show-o?

Show-o is aimed primarily at AI researchers and developers, especially those working in computer vision and natural language processing. Because one model covers both understanding and generation tasks, they can analyze and generate multimodal data without maintaining a separate model for each task.

Example Scenarios:

Researchers can use Show-o to automatically generate descriptive captions for large sets of images.

Developers can use Show-o to build visual question answering systems, for example for intelligent customer service.

Artists can use Show-o’s text-to-image generation capabilities to create unique artworks.

Key Features:

Image Captioning: Automatically generates descriptive text for images.

Visual Question Answering: Answers questions based on image content.

Text-to-Image Generation: Creates corresponding images based on text descriptions.

Text-Guided Inpainting: Fills in masked or damaged regions of an image, guided by a text prompt.

Text-Guided Expansion: Extends an image beyond its original borders (also called extrapolation), guided by a text prompt.

Hybrid Multimodal Generation: Produces new multimodal content combining text and images.
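
The difference between inpainting and expansion comes down to which pixels the model is asked to generate. The sketch below is a generic illustration in plain Python, not Show-o's own code: the function names and the 0/1 mask convention (1 = generate, 0 = keep) are assumptions made for this example.

```python
def inpainting_mask(height, width, box):
    """Return a height x width grid where 1 marks pixels to regenerate.

    box is (top, left, bottom, right); bottom and right are exclusive.
    The masked region lies inside the original image.
    """
    top, left, bottom, right = box
    return [
        [1 if top <= r < bottom and left <= c < right else 0
         for c in range(width)]
        for r in range(height)
    ]


def expansion_mask(height, width, pad_left, pad_right):
    """Return a mask for a canvas widened by pad_left + pad_right columns.

    The original image sits in the middle columns (0 = keep); the newly
    added columns on either side are marked 1 (to be generated).
    """
    new_width = pad_left + width + pad_right
    return [
        [0 if pad_left <= c < pad_left + width else 1
         for c in range(new_width)]
        for _ in range(height)
    ]


if __name__ == "__main__":
    # Inpainting: regenerate a 2 x 3 patch inside a 4 x 6 image.
    for row in inpainting_mask(4, 6, (1, 2, 3, 5)):
        print("".join(map(str, row)))
    print()
    # Expansion: widen a 2 x 4 image by two columns on each side.
    for row in expansion_mask(2, 4, 2, 2):
        print("".join(map(str, row)))
```

In both cases a text prompt would describe what should appear in the region marked 1; only the location of that region differs.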

How to Use Show-o:

1. Set up the environment and install the required dependencies.

2. Download and configure pre-trained model weights.

3. Log in to your Weights & Biases (wandb) account, where the inference demo results are logged.

4. Run inference demos for multimodal understanding.

5. Run inference demos for text-to-image generation.

6. Run inference demos for text-guided inpainting and expansion.

7. Adjust the inference parameters as needed to tune output quality and speed.
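
The steps above can be sketched as a dry-run plan that only builds and prints the commands, without executing anything. Apart from `wandb login`, every name here is an assumption to be checked against the Show-o repository README: the script names (`inference_mmu.py`, `inference_t2i.py`), the config path, the `key=value` override style, and the `mode`, `question`, and `guidance_scale` settings are illustrative placeholders. Step 2 (downloading weights) is left out because it depends on where the checkpoints are hosted.

```python
import shlex

# Assumed file names; verify each against the Show-o repository README.
REQUIREMENTS_FILE = "requirements.txt"
CONFIG = "configs/showo_demo.yaml"
MMU_SCRIPT = "inference_mmu.py"   # multimodal understanding demo (assumed)
T2I_SCRIPT = "inference_t2i.py"   # generation demos (assumed)


def build_command(script, config, **overrides):
    """Build an argument list: python3 <script> config=<config> key=value ..."""
    cmd = ["python3", script, f"config={config}"]
    for key, value in overrides.items():
        cmd.append(f"{key}={value}")
    return cmd


def plan():
    """Return the commands for steps 1 and 3-6 as argument lists."""
    return [
        # Step 1: install dependencies.
        ["pip3", "install", "-r", REQUIREMENTS_FILE],
        # Step 3: log in to Weights & Biases.
        ["wandb", "login"],
        # Step 4: multimodal understanding (hypothetical question override).
        build_command(MMU_SCRIPT, CONFIG,
                      question="Please describe this image in detail."),
        # Step 5: text-to-image generation (hypothetical overrides).
        build_command(T2I_SCRIPT, CONFIG, mode="t2i", guidance_scale=1.75),
        # Step 6: inpainting and expansion (hypothetical mode names).
        build_command(T2I_SCRIPT, CONFIG, mode="inpainting"),
        build_command(T2I_SCRIPT, CONFIG, mode="extrapolation"),
    ]


if __name__ == "__main__":
    # Print shell-quoted commands for review instead of running them.
    for cmd in plan():
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to compare them with the official instructions before running anything; step 7 then amounts to changing the override values passed to `build_command`.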