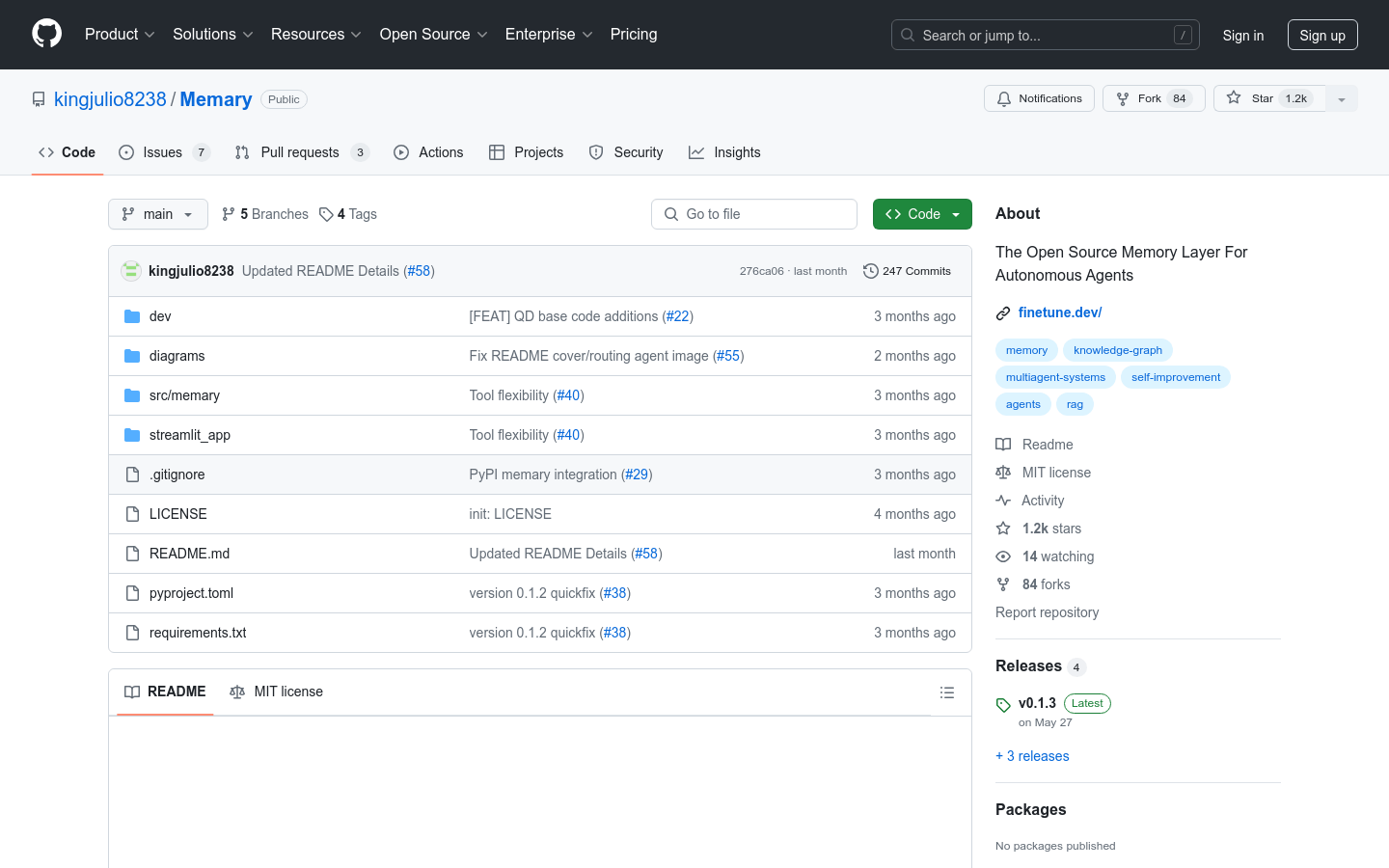

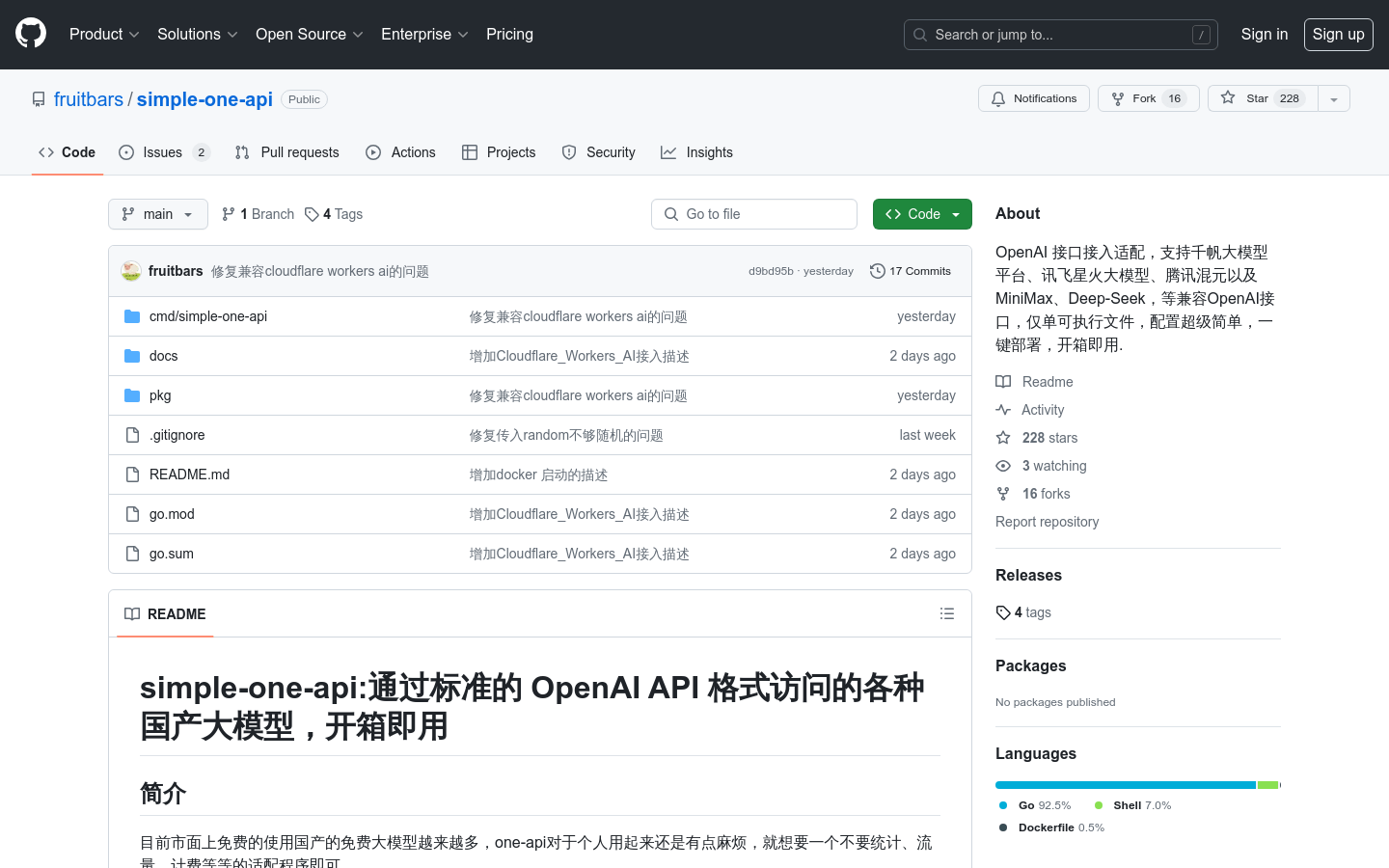

simple-one-api is an adapter for multiple large-model APIs. It exposes an OpenAI-compatible interface, so users can call different large-model services through one unified API format instead of handling each platform's differing interface. Supported platforms include the Baidu Qianfan large-model platform, iFLYTEK Spark, and Tencent Hunyuan, among others. It offers one-click deployment and works out of the box.
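To illustrate what a unified format means in practice, here is a minimal Python sketch of a request body in the OpenAI chat-completions format. It assumes, as is typical of OpenAI-compatible gateways, that only the `model` field changes when you switch backends. The model names are hypothetical placeholders; use the names configured in your own deployment.

```python
import json


def build_chat_request(model: str, prompt: str) -> dict:
    """Build a chat request body in the OpenAI chat-completions format."""
    return {
        # Assumption: the gateway uses this field to pick the backend platform.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


# Hypothetical model names; the real ones depend on your configuration.
for name in ["example-qianfan-model", "example-spark-model", "example-hunyuan-model"]:
    # Every body has the same shape; only the "model" value differs.
    print(json.dumps(build_chat_request(name, "Hello")))
```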

Target audience:

The product is aimed mainly at developers and enterprise users who need to integrate large-model API services. It suits them because it offers a quick, simple way to integrate and call multiple large models without worrying about differences between the underlying platforms, so they can focus on developing business logic.

Example use cases:

- Developers use simple-one-api to quickly integrate Baidu Qianfan models into their projects.
- Enterprise users use the adapter to call iFLYTEK Spark and Tencent Hunyuan models at different times for AI-related business processing.
- Educational institutions use the unified interface provided by simple-one-api to teach students how to use different AI models.

Product features:

- Supports a variety of large models, including Baidu AI Cloud Qianfan, iFLYTEK Spark, and Tencent Hunyuan.
- A unified OpenAI interface format simplifies using the APIs of different platforms.
- One-click deployment for starting the service quickly.
- Simple configuration: model services and credentials are set in the config.json file.
- Supports load-balancing strategies, including `first` and `random` modes.
- Provides detailed documentation and a changelog so users can follow product updates and usage.
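The config.json and load-balancing features above can be sketched together in Python. The config layout below (the `load_balancing`, `services`, `models`, `enabled`, and `credentials` keys) is a hypothetical schema for illustration, not the project's documented format. The sketch also assumes that `first` always picks the first matching service in config order and that `random` picks uniformly among all matching services.

```python
import json
import random
from typing import List, Optional

# Hypothetical config layout for illustration only; consult the project's
# documentation for the real config.json schema.
CONFIG_TEXT = """
{
  "load_balancing": "random",
  "services": {
    "qianfan": [{"models": ["example-model"], "enabled": true, "credentials": {"api_key": "xxx"}}],
    "hunyuan": [{"models": ["example-model"], "enabled": true, "credentials": {"secret_id": "xxx"}}],
    "spark":   [{"models": ["other-model"], "enabled": false, "credentials": {}}]
  }
}
"""


def candidates_for(config: dict, model: str) -> List[str]:
    """Return names of enabled services that list `model`, in config order."""
    names = []
    for name, entries in config["services"].items():
        for entry in entries:
            if entry.get("enabled", False) and model in entry.get("models", []):
                names.append(name)
    return names


def pick_service(candidates: List[str], strategy: str,
                 rng: Optional[random.Random] = None) -> str:
    """Choose one service according to the load-balancing strategy."""
    if not candidates:
        raise LookupError("no enabled service provides this model")
    if strategy == "first":
        return candidates[0]  # always the first match in config order
    if strategy == "random":
        return (rng or random).choice(candidates)  # uniform pick
    raise ValueError(f"unknown load-balancing strategy: {strategy!r}")


config = json.loads(CONFIG_TEXT)
pool = candidates_for(config, "example-model")
print(pool)                                          # ['qianfan', 'hunyuan']
print(pick_service(pool, "first"))                   # qianfan
print(pick_service(pool, config["load_balancing"]))  # qianfan or hunyuan
```

Passing a seeded `random.Random` as `rng` makes the `random` strategy reproducible in tests.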

How to use:

1. Clone the simple-one-api repository locally.
2. Edit the config.json file as needed to configure model services and credentials.
3. Run the build script to compile the executable.
4. Start the simple-one-api service and call the various model services through its OpenAI-compatible interface.
5. Check the service log to confirm the service is running normally.
6. Adjust the load-balancing strategy and model configuration as needed.
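Calling the running service through its OpenAI-compatible interface can be sketched with Python's standard library. The base address `http://localhost:9090/v1` and the bearer-token header are assumptions, and the `/chat/completions` path follows the OpenAI convention. `API_KEY` and `example-model` are hypothetical placeholders; whether a key is required depends on your configuration.

```python
import json
import urllib.request

# Assumed address; adjust host, port, and key to match your deployment.
BASE_URL = "http://localhost:9090/v1"
API_KEY = "your-api-key"  # hypothetical placeholder


def make_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the first reply's text."""
    with urllib.request.urlopen(make_chat_request(model, prompt), timeout=60) as resp:
        reply = json.load(resp)
    # Assumes the OpenAI response shape: choices[0].message.content
    return reply["choices"][0]["message"]["content"]
```

With the service running, `chat("example-model", "Hello")` should return the assistant's reply text, provided the response follows the OpenAI `choices[0].message.content` shape.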