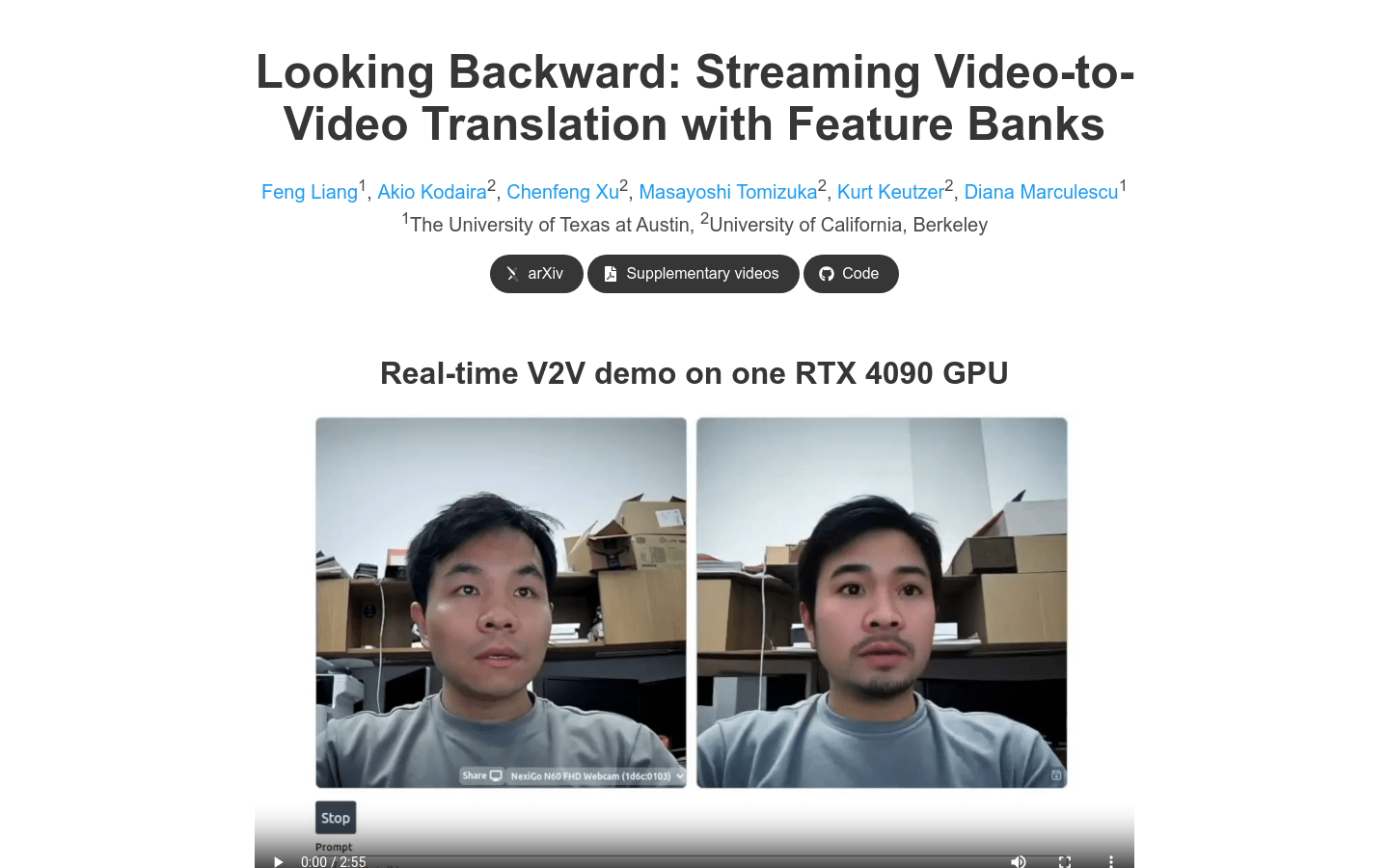

StreamV2V is a diffusion model for real-time video-to-video (V2V) translation guided by user prompts. Unlike traditional batch-processing methods, StreamV2V processes video as a stream and can therefore handle an unlimited number of frames. At its core is a feature bank that stores information from past frames. For each incoming frame, StreamV2V incorporates similar past features into the output through two mechanisms: extended self-attention and direct feature fusion. The bank is continuously updated by merging stored and new features, which keeps it compact yet information-rich. StreamV2V is both adaptable and efficient: it integrates with existing image diffusion models without any fine-tuning.
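The update step described above, where stored and new features are merged to keep the bank compact, can be illustrated with a small sketch. It assumes each feature is a plain vector and that the bank has a fixed capacity. The merge rule is a simple stand-in chosen for clarity and is not necessarily StreamV2V's actual update rule: a new feature is averaged into its most similar stored entry when cosine similarity exceeds a threshold; otherwise it is appended, and if the bank is full, the two most similar stored entries are first merged to make room. `FeatureBank`, `capacity`, and `merge_threshold` are hypothetical names.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def average(a: Vector, b: Vector) -> Vector:
    return [(x + y) / 2 for x, y in zip(a, b)]


class FeatureBank:
    """Hypothetical fixed-capacity feature bank (illustrative, not StreamV2V's code)."""

    def __init__(self, capacity: int, merge_threshold: float = 0.9):
        if capacity < 2:
            raise ValueError("capacity must be at least 2")
        self.capacity = capacity
        self.merge_threshold = merge_threshold
        self.entries: List[Vector] = []

    def update(self, new_features: List[Vector]) -> None:
        for feat in new_features:
            # Merge into the most similar stored entry if it is close enough.
            if self.entries:
                idx = max(range(len(self.entries)),
                          key=lambda i: cosine(self.entries[i], feat))
                if cosine(self.entries[idx], feat) >= self.merge_threshold:
                    self.entries[idx] = average(self.entries[idx], feat)
                    continue
            # Otherwise make room (if needed) by merging the two most similar
            # stored entries, then append the new feature unchanged.
            if len(self.entries) >= self.capacity:
                self._merge_closest_pair()
            self.entries.append(list(feat))

    def _merge_closest_pair(self) -> None:
        best, pair = -2.0, (0, 1)
        for i in range(len(self.entries)):
            for j in range(i + 1, len(self.entries)):
                s = cosine(self.entries[i], self.entries[j])
                if s > best:
                    best, pair = s, (i, j)
        i, j = pair
        merged = average(self.entries[i], self.entries[j])
        del self.entries[j]  # j > i, so index i is still valid afterwards
        self.entries[i] = merged


bank = FeatureBank(capacity=2, merge_threshold=0.95)
bank.update([[1.0, 0.0], [0.0, 1.0]])  # two dissimilar features: both stored
bank.update([[1.0, 0.1]])              # very similar to [1, 0]: merged into it
print(len(bank.entries))  # 2
```

The bank never grows past `capacity`, which mirrors the "compact" property the description emphasizes. Merging by averaging, rather than evicting old entries, keeps some information from every frame seen so far.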

Target users:

StreamV2V is suited to professionals and researchers who need real-time video processing and translation. Because it delivers fast, seamless processing while maintaining high output quality, it is particularly useful for video editing, film post-production, real-time video enhancement, and virtual reality.

Example usage scenarios:

Video editors use StreamV2V to adjust video styles and effects in real time.

Film post-production teams use StreamV2V to preview and adjust special effects in real time.

Virtual reality developers use StreamV2V to dynamically adjust live video content in VR experiences.

Product features:

Real-time video-to-video translation: Supports processing an unlimited number of video frames.

User prompts: Users enter text prompts to guide the video translation.

Feature bank maintenance: Stores intermediate transformer features from past frames.

Extended Self-Attention (EA): Concatenates the stored keys and values with those of the current frame in its self-attention computation.

Direct feature fusion (FF): Retrieves similar features from the bank using a cosine-similarity matrix and fuses them into the current features by weighted summation.

High efficiency: Runs at 20 FPS on a single A100 GPU, which is 15x, 46x, 108x, and 158x faster than FlowVid, CoDeF, Rerender, and TokenFlow, respectively.

Strong temporal consistency: Confirmed by quantitative metrics and a user study.
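The Extended Self-Attention item above can be illustrated with single-head scaled dot-product attention. In this version, the current frame's queries attend over its own keys and values concatenated with keys and values taken from the bank. This is a minimal sketch in plain Python that assumes one attention head, no learned projections, and nested lists as matrices. `extended_self_attention` is a hypothetical name, not part of StreamV2V's API.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(QK^T / sqrt(d)) V."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


def extended_self_attention(q: Matrix, k: Matrix, v: Matrix,
                            bank_k: Matrix, bank_v: Matrix) -> Matrix:
    """Current-frame queries attend to current AND stored keys/values.

    Concatenating along the token axis is the only change relative to
    ordinary self-attention; the bank lists are read, not modified.
    """
    return attention(q, k + bank_k, v + bank_v)


q = k = [[1.0, 0.0]]
v = [[0.0, 0.0]]
bank_k, bank_v = [[1.0, 0.0]], [[2.0, 2.0]]
print(extended_self_attention(q, k, v, bank_k, bank_v))  # [[1.0, 1.0]]
```

In the example, the stored key matches the query exactly as well as the current key does, so attention splits evenly between them and the output is halfway between the current value and the stored value. With an empty bank, the function reduces to ordinary self-attention.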
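The Direct feature fusion item above can be sketched the same way. The sketch builds a cosine-similarity matrix between the current features and the bank features, picks the most similar bank feature for each current feature, and blends the two by a weighted sum. It assumes top-1 retrieval, a fixed blending weight `alpha`, and a similarity `threshold` below which a feature is left unchanged. These parameters and the function name `direct_feature_fusion` are hypothetical, and StreamV2V's actual selection and weighting may differ.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def direct_feature_fusion(current: List[Vector], bank: List[Vector],
                          alpha: float = 0.8,
                          threshold: float = 0.9) -> List[Vector]:
    """Blend each current feature with its most similar bank feature.

    alpha weights the current feature; (1 - alpha) weights the retrieved one.
    Features whose best match falls below `threshold` are returned unchanged.
    """
    if not bank:
        return [list(f) for f in current]
    # Cosine-similarity matrix: rows = current features, columns = bank features.
    sim = [[cosine(f, b) for b in bank] for f in current]
    fused = []
    for f, row in zip(current, sim):
        j = max(range(len(bank)), key=row.__getitem__)  # best-matching bank index
        if row[j] >= threshold:
            fused.append([alpha * x + (1 - alpha) * y for x, y in zip(f, bank[j])])
        else:
            fused.append(list(f))
    return fused


current = [[1.0, 0.0], [0.0, 1.0]]
bank = [[2.0, 0.0]]  # parallel to the first feature, orthogonal to the second
print(direct_feature_fusion(current, bank, alpha=0.5))  # [[1.5, 0.0], [0.0, 1.0]]
```

The threshold ensures that fusion happens only when a sufficiently similar past feature exists. Without it, unrelated content from earlier frames could bleed into the current frame.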

Usage tutorial:

Step 1: Visit the StreamV2V official website.

Step 2: Read the introduction and features of the model.

Step 3: Enter a prompt describing the desired result to guide the video translation.

Step 4: Upload the video to be translated, or connect a live video source.

Step 5: Launch the StreamV2V model to begin real-time video translation.

Step 6: Monitor the output during translation and adjust parameters as needed.

Step 7: When the translation is complete, download the translated video or use it directly.