What is SyncAnimation?

SyncAnimation is an audio-driven technology that generates realistic speaking avatars with upper body movements in real time. By generating gestures and expressions that are synchronized with the audio, it addresses the limitations of traditional methods in real-time performance and detail representation. It targets applications that need high-quality real-time animation, such as virtual broadcasting, online education, and remote meetings.

The product suits industries that need to produce high-quality animation quickly with limited resources, such as virtual broadcasting, online education, film and television production, and game development. These sectors can benefit from SyncAnimation’s ability to generate lifelike, audio-driven animation.

Example Scenarios:

In news reporting, SyncAnimation can be used to create virtual journalists whose head and upper body movements stay synchronized with the spoken audio.

On online education platforms, this technology can generate animated virtual teachers, enhancing the learning experience with more engaging and interactive content.

In game development, SyncAnimation can drive real-time facial expressions and movements based on audio, improving immersion.

Key Features:

Generates highly realistic speaking avatars and upper body movements using audio.

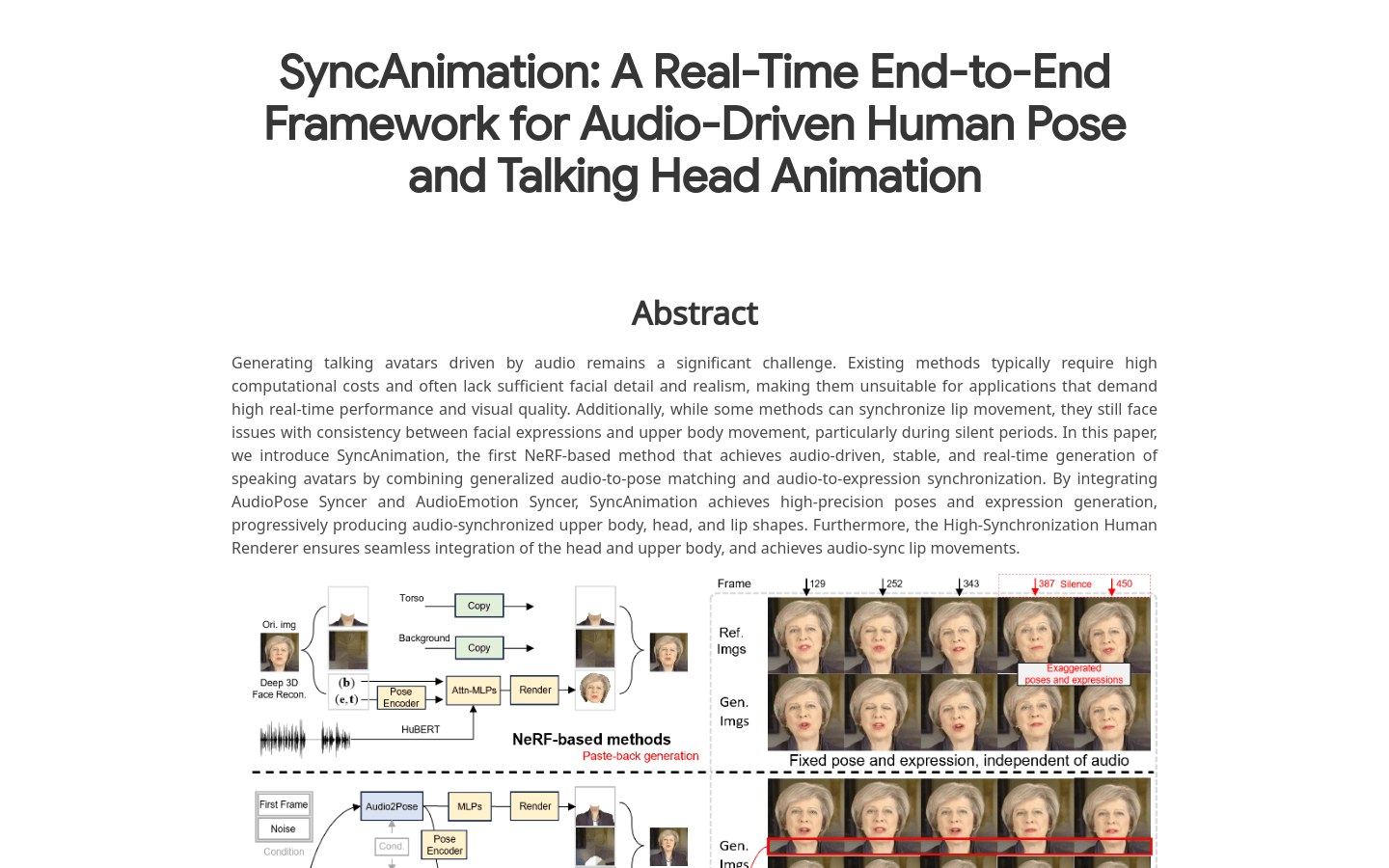

Uses AudioPose Syncer and AudioEmotion Syncer for precise gesture and expression generation.

Supports dynamic and clear lip movements that stay in sync with audio.

Produces head and upper body animations with rich expressions and posture changes.

Can extract identity information from a single image or noise to personalize animations.

How to Use SyncAnimation:

1. Prepare input data: Provide a character image (for extracting identity) and an audio file (to drive the animation).

2. Preprocess: Extract 3DMM (3D Morphable Model) parameters to serve as a reference for Audio2Pose and Audio2Emotion, or use noise as the reference instead.

3. Generate gestures and expressions: Use the AudioPose Syncer and AudioEmotion Syncer to create upper body gestures and expressions that are synchronized with the audio.

4. Render animation: Use the High-Synchronization Human Renderer to integrate the generated gestures and expressions into a complete animation.

5. Output result: The generated animation can be used directly for video creation, live streaming, or other applications.
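
The five steps above can be read as a simple data flow, sketched below in Python. This is only an illustration of how the stages connect, not SyncAnimation's actual interface: every function and class name (`preprocess`, `generate_motion`, `render`, `animate`, `Reference`, `Motion`) is hypothetical, the stage bodies are placeholders rather than real models, and the 16 kHz sample rate and 25 fps frame rate are assumed values chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Optional
import random

# All names here are hypothetical placeholders, NOT SyncAnimation's real API.


@dataclass
class Reference:
    """Step 2 output: 3DMM-style reference parameters, or noise."""
    params: List[float]
    from_noise: bool


@dataclass
class Motion:
    """Step 3 output: one pose and one expression vector per video frame."""
    poses: List[List[float]]
    expressions: List[List[float]]


def preprocess(image: Optional[bytes], dim: int = 8, seed: int = 0) -> Reference:
    """Stand-in for 3DMM extraction; falls back to noise when no image is given."""
    if image is None:
        rng = random.Random(seed)
        return Reference([rng.gauss(0.0, 1.0) for _ in range(dim)], from_noise=True)
    # Placeholder: derive deterministic pseudo-parameters from the image bytes.
    padded = image[:dim].ljust(dim, b"\0")
    return Reference([b / 255.0 for b in padded], from_noise=False)


def generate_motion(audio: List[float], ref: Reference,
                    sample_rate: int = 16000, fps: int = 25) -> Motion:
    """Stand-in for the pose and expression stages: one output per video frame."""
    samples_per_frame = sample_rate // fps
    n_frames = len(audio) // samples_per_frame
    poses, expressions = [], []
    for i in range(n_frames):
        window = audio[i * samples_per_frame:(i + 1) * samples_per_frame]
        energy = sum(abs(s) for s in window) / len(window)
        poses.append([p * energy for p in ref.params])  # placeholder pose
        expressions.append([energy])                    # placeholder expression
    return Motion(poses, expressions)


def render(motion: Motion) -> List[str]:
    """Stand-in for the renderer: emits one text 'frame' per motion step."""
    return [
        f"frame {i}: pose_dim={len(p)} expr={e[0]:.2f}"
        for i, (p, e) in enumerate(zip(motion.poses, motion.expressions))
    ]


def animate(image: Optional[bytes], audio: List[float],
            sample_rate: int = 16000, fps: int = 25) -> List[str]:
    """Steps 1-5 chained: inputs -> reference -> motion -> rendered frames."""
    ref = preprocess(image)
    motion = generate_motion(audio, ref, sample_rate, fps)
    return render(motion)


audio = [0.5] * 16000                     # one second of dummy audio
frames = animate(b"\x10\x20\x30", audio)  # dummy bytes standing in for an image
print(len(frames), frames[0])
```

The point of the sketch is the hand-off between stages: the reference from step 2 conditions the motion generated in step 3, and the renderer in step 4 consumes one pose and one expression per frame, so the audio length and frame rate together determine how many frames are produced. Passing `None` as the image exercises the noise fallback mentioned in step 2.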