What is StackBlitz?

StackBlitz is a web-based IDE for the JavaScript ecosystem. It uses WebContainers, which are powered by WebAssembly, to run Node.js environments directly in your browser. Projects therefore start almost instantly, and code runs inside the browser's sandbox rather than on a remote server.

---

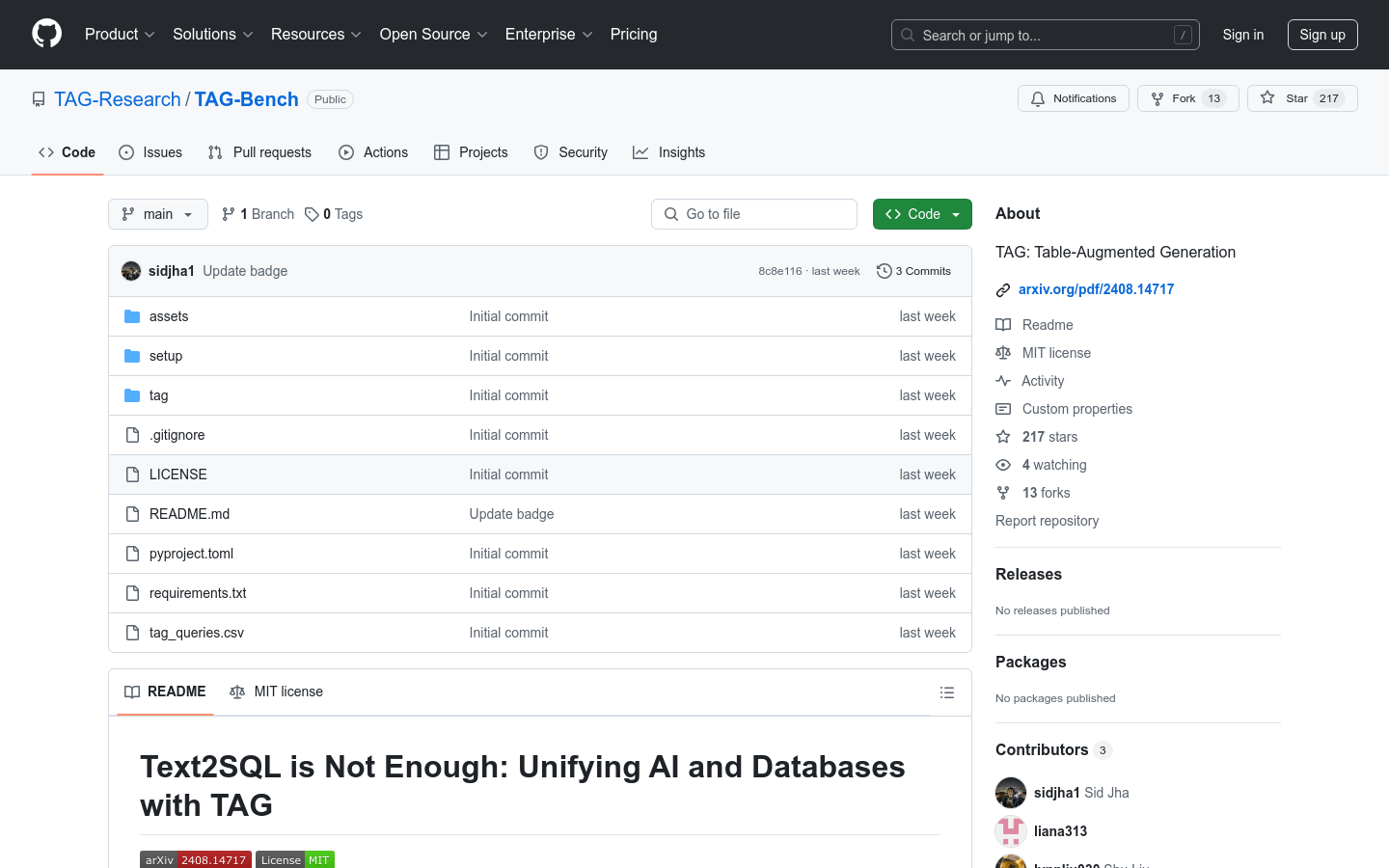

TAG-Bench is a benchmark for evaluating how well natural language processing systems answer questions over databases. Built on the BIRD Text2SQL benchmark, it adds more complex queries that require world knowledge or semantic reasoning beyond the information explicitly stored in the database. The name refers to Table-Augmented Generation (TAG), which combines database computation with language-model reasoning, and the benchmark aims to measure progress on realistic queries of this kind.
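To illustrate why such queries go beyond Text2SQL, here is a minimal sketch in plain Python. The rows, the question, and the `stub_lm_is_bay_area` function are hypothetical and not taken from TAG-Bench. The table has no region column, so a SQL query alone cannot decide which cities lie in the Bay Area; the stub stands in for the language-model step that a TAG-style pipeline would combine with the database computation.

```python
# Toy rows standing in for a database table (hypothetical data, not from BIRD).
schools = [
    {"school": "Lincoln High", "city": "Palo Alto", "avg_sat": 1450},
    {"school": "Mesa Prep", "city": "Phoenix", "avg_sat": 1390},
    {"school": "Harbor Academy", "city": "Oakland", "avg_sat": 1410},
    {"school": "Valley High", "city": "Fresno", "avg_sat": 1300},
]


def stub_lm_is_bay_area(city: str) -> bool:
    """Hypothetical stand-in for a language-model call.

    Deciding whether a city is in the San Francisco Bay Area needs world
    knowledge the table does not contain. Here that knowledge is hard-coded;
    a real pipeline would ask an LM instead.
    """
    return city in {"Palo Alto", "Oakland", "San Jose", "San Francisco"}


def best_bay_area_school(rows):
    # Database-style step: rank rows by average SAT score, highest first.
    ranked = sorted(rows, key=lambda r: r["avg_sat"], reverse=True)
    # Semantic step: keep only rows the "LM" places in the Bay Area.
    bay_area = [r for r in ranked if stub_lm_is_bay_area(r["city"])]
    # Answer "Which Bay Area school has the highest average SAT score?"
    return bay_area[0]["school"] if bay_area else None


print(best_bay_area_school(schools))  # Lincoln High
```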

Who Can Benefit from TAG-Bench?

Researchers in natural language processing and database fields.

Developers looking to test and improve their systems for handling complex database queries.

Educators using it as a teaching tool to help students understand the application of NLP in database queries.

Example Scenarios:

Researchers can use TAG-Bench to assess new natural language processing models.

Developers can utilize it to optimize their database query processing systems.

Educational institutions can employ it to teach students about NLP applications in databases.

Key Features:

Includes 80 complex queries spanning four types: match-based, comparison, ranking, and aggregation.

Requires models to use world knowledge or perform advanced semantic reasoning.

Represents the BIRD databases as Pandas DataFrames, which serve as the database environment for the queries.

Recommends using a GPU to build the table indexes, since index creation is compute-intensive.

Offers detailed setup guidelines including environment creation, database conversion, and index creation.

Includes several methods for comparison: hand-written TAG pipelines, Text2SQL, Text2SQL + LM, RAG, and Retrieval + LM ranking.

Refers to the LOTUS documentation for configuring models and running the evaluated methods.

Getting Started with TAG-Bench:

1. Create a conda environment and install dependencies.

2. Download the BIRD database and convert it into Pandas DataFrames.

3. Create indexes for each table (using GPU is recommended).

4. Obtain Text2SQL prompts and modify the tag_queries.csv file.

5. Run the evaluation commands in the tag directory to replicate results from the paper.

6. Adjust the lm object to point to your chosen language model server.

7. Configure models as described in the LOTUS documentation, then evaluate each method's accuracy and latency.
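The final steps measure each method's accuracy and latency. A minimal, hypothetical harness for that kind of measurement is sketched below in plain Python. Here `answer_fn` stands in for one method (for example, a Text2SQL or RAG pipeline that calls the configured `lm` object), and case-insensitive exact match is an assumed scoring rule, not necessarily the one TAG-Bench uses.

```python
import time
from typing import Callable, Iterable, Tuple


def evaluate(answer_fn: Callable[[str], str], queries: Iterable[Tuple[str, str]]) -> dict:
    """Run answer_fn on (question, gold_answer) pairs; report accuracy and mean latency."""
    correct = 0
    latencies = []
    for question, gold in queries:
        start = time.perf_counter()
        prediction = answer_fn(question)
        latencies.append(time.perf_counter() - start)
        # Assumed scoring rule: exact match ignoring case and surrounding whitespace.
        if prediction.strip().lower() == gold.strip().lower():
            correct += 1
    n = len(latencies)
    return {
        "accuracy": correct / n if n else 0.0,
        "mean_latency_s": sum(latencies) / n if n else 0.0,
    }


# Hypothetical queries and gold answers, not taken from tag_queries.csv.
toy_queries = [
    ("Which Bay Area school has the highest average SAT score?", "Lincoln High"),
    ("How many schools have an average SAT score above 1400?", "2"),
]


def toy_method(question: str) -> str:
    # Hypothetical method with canned answers; the second one is deliberately wrong.
    canned = {toy_queries[0][0]: "lincoln high", toy_queries[1][0]: "3"}
    return canned[question]


results = evaluate(toy_method, toy_queries)
print(results["accuracy"])  # 0.5
```

Timing each call with `time.perf_counter` captures per-query latency, which matters when methods differ mainly in how many LM calls they make.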