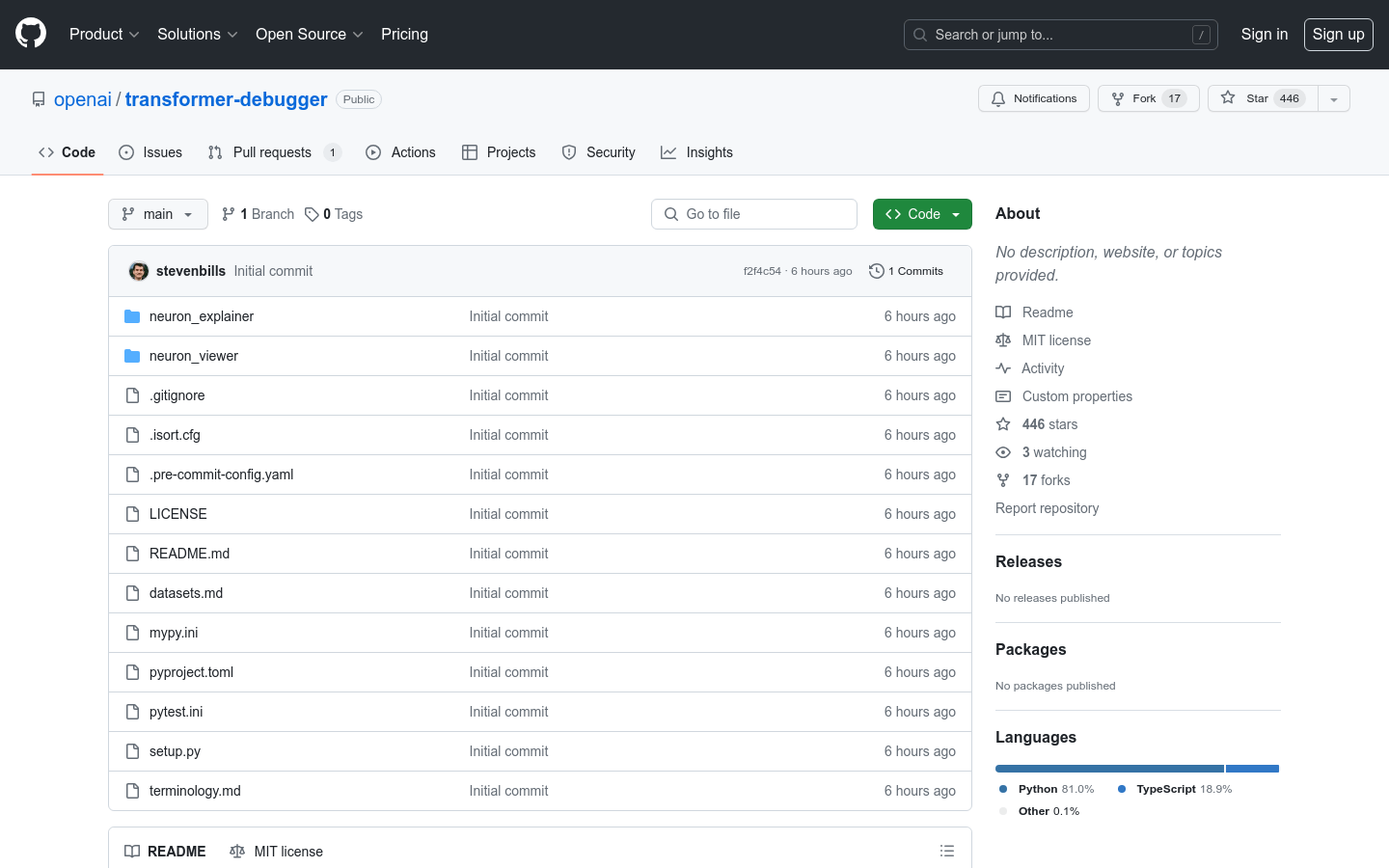

Transformer Debugger (TDB) combines automated interpretability techniques with sparse autoencoders. It supports rapid exploration before any code is written and lets you intervene in the forward pass to see how the change affects a specific behavior. It explains a behavior by identifying the specific components that contribute to it (neurons, attention heads, autoencoder latents), displaying automatically generated explanations of what causes those components to activate strongly, and tracing connections between components to help discover circuits.

Target audience:

Researchers and developers who want to investigate and understand the behavior of language models, or to debug and optimize them.

Example usage scenarios:

Use TDB to investigate why the model outputs a specific token for a prompt

Explore why an attention head attends to a specific token

Understand the activation patterns of neurons in the model through TDB

Product Features:

Automated interpretation of the behavior of small language models

Intervene in the forward pass to observe changes in model behavior

Identify and explain why specific components are activated in the model

Track the connections between components to discover circuits in the model
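
The idea of intervening in a forward pass can be illustrated with a very small sketch. The code below is not TDB's API and does not use a real language model: the weights, the function names (`forward`, `logit_diff`, `ablation_effects`) and the two "tokens" A and B are all hypothetical, chosen by hand for the example. It zeroes out one hidden neuron at a time and measures how much the gap between the logit for token A and the logit for token B changes, which is one simple way to ask which components push the model toward A rather than B.

```python
# Toy illustration only: this is NOT Transformer Debugger's API.
# A tiny hand-written "model" with three hidden neurons and two output tokens.

def relu(x):
    return x if x > 0.0 else 0.0

# Hypothetical weights, chosen by hand for the example.
W_IN = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 3 neurons x 2 inputs
W_OUT = [[2.0, -1.0, 0.5], [0.0, 1.0, 0.5]]   # 2 tokens (A, B) x 3 neurons

def forward(inputs, ablate=None):
    """Run the forward pass; if `ablate` is a neuron index, zero its activation."""
    hidden = [relu(sum(w * x for w, x in zip(row, inputs))) for row in W_IN]
    if ablate is not None:
        hidden[ablate] = 0.0  # the intervention: knock out one component
    return [sum(w * h for w, h in zip(row, hidden)) for row in W_OUT]

def logit_diff(logits):
    """Logit of token A minus logit of token B."""
    return logits[0] - logits[1]

def ablation_effects(inputs):
    """For each neuron, how much the A-vs-B logit gap drops when it is ablated."""
    base = logit_diff(forward(inputs))
    return [base - logit_diff(forward(inputs, ablate=i)) for i in range(len(W_IN))]

if __name__ == "__main__":
    for i, effect in enumerate(ablation_effects([1.0, 2.0])):
        print(f"neuron {i}: effect on logit(A) - logit(B) = {effect:+.1f}")
```

In this toy setup a positive effect means the neuron was pushing the output toward token A, a negative effect means it was pushing toward token B, and zero means it did not change the gap between them.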