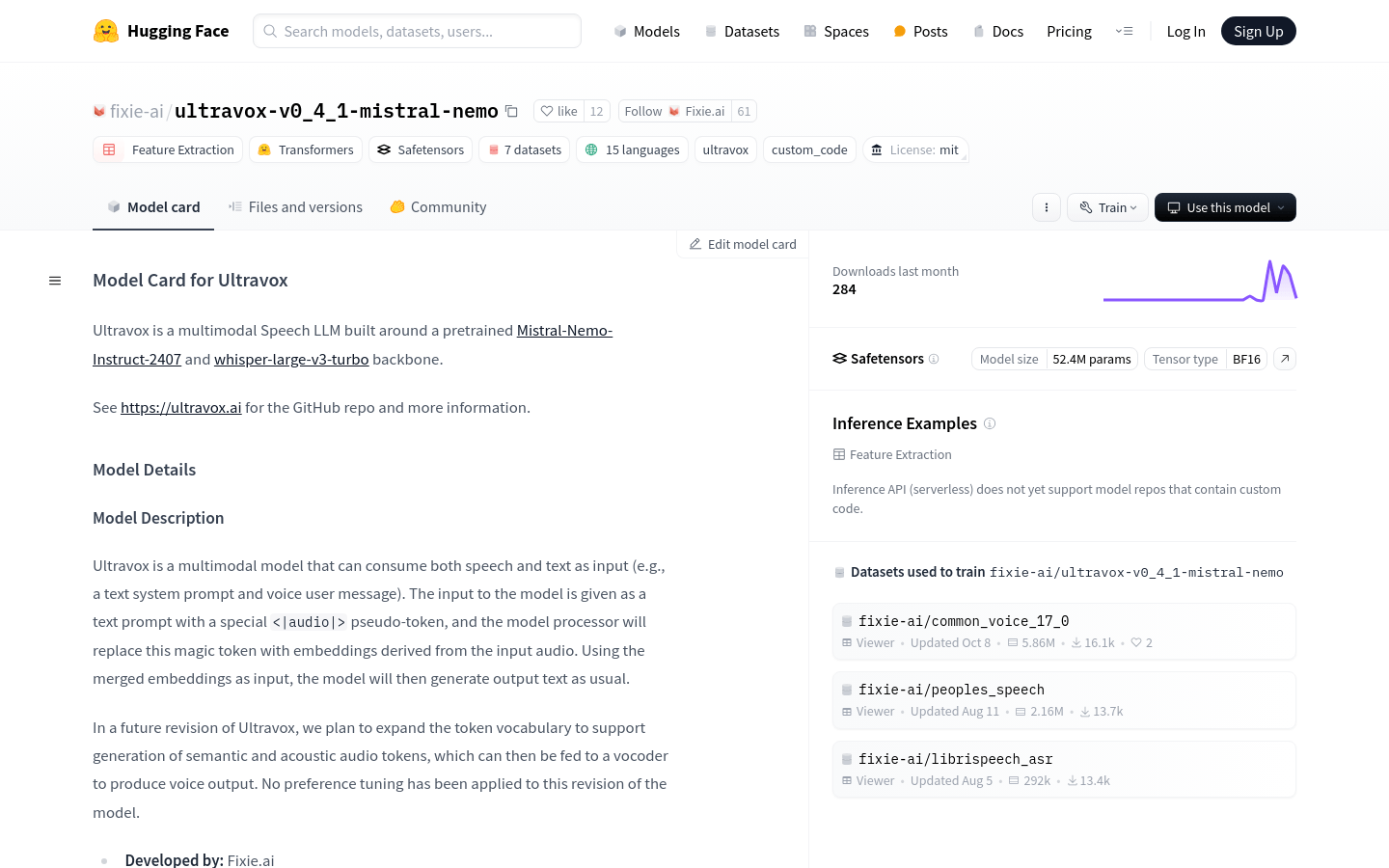

Ultravox - A multimodal speech large language model

Product overview

Ultravox is a multimodal speech large language model (LLM) built on the pre-trained Mistral-Nemo-Instruct-2407 and whisper-large-v3-turbo backbones. It accepts both speech and text as input, for example a text system prompt followed by a spoken user message. The text prompt contains a special <|audio|> pseudo-token, which is replaced with embeddings derived from the input audio; the model then generates output text from the merged embeddings. Future releases plan to extend the token vocabulary so the model can generate semantic and acoustic audio tokens, which could be fed into a vocoder to produce speech output.
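The placeholder replacement described above can be illustrated with a toy sketch. This is not the Ultravox processor: `merge_embeddings` and `toy_text_embed` are hypothetical names, the embeddings are made-up two-dimensional vectors, and the sketch assumes each audio frame simply takes one position in the sequence where the <|audio|> pseudo-token stood.

```python
AUDIO_PLACEHOLDER = "<|audio|>"


def toy_text_embed(token):
    """Hypothetical stand-in for a text embedding lookup (2-dim vector)."""
    return [float(len(token)), 0.0]


def merge_embeddings(tokens, text_embed, audio_embeddings):
    """Build one embedding sequence, splicing audio embeddings in at the placeholder."""
    merged = []
    for tok in tokens:
        if tok == AUDIO_PLACEHOLDER:
            # The placeholder expands into one entry per audio frame.
            merged.extend(audio_embeddings)
        else:
            merged.append(text_embed(tok))
    return merged


tokens = ["Transcribe", ":", AUDIO_PLACEHOLDER]
audio_frames = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]  # made-up audio embeddings
merged = merge_embeddings(tokens, toy_text_embed, audio_frames)
print(len(merged))  # 2 text positions + 3 audio positions = 5
```

The language model then sees a single sequence of embeddings and does not need to know which positions came from text and which from audio.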

Development team and licensing

This model was developed by Fixie.ai and is licensed under the MIT license.

Target audience

Ultravox is aimed at developers and enterprises that need to process speech and text together, for example teams working on speech recognition, speech translation, or speech analysis. Because it handles audio and text input in a single model, it is particularly suitable for applications that need to turn spoken input into text output quickly and accurately.

Usage scenario examples

Voice agent: handle a user's spoken commands.

Speech-to-speech translation: help people communicate across languages.

Speech analysis: extract key information from spoken audio, for example for security monitoring or customer service.

Product features

Voice and text input processing: accepts speech and text in the same request, which suits a variety of application scenarios.

Audio embedding replacement: the <|audio|> pseudo-token in the prompt is replaced with embeddings derived from the input audio, which is how audio enters the model.

Translation and analysis: suitable for speech translation, analysis of spoken audio, and similar scenarios.

Text generation: generates output text from the merged text and audio embeddings.

Planned audio token output: future versions are planned to generate semantic and acoustic audio tokens, further extending the model's capabilities.

Knowledge distillation loss training: the model is trained with a knowledge distillation loss, so that Ultravox tries to match the logits of the text-based Mistral backbone.

Mixed precision training: training uses BF16 mixed precision to improve efficiency.
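As a rough illustration of what matching a teacher's logits can mean, here is a toy distillation loss for a single token position. The source only says that a knowledge distillation loss is used; the specific form below (KL divergence between softmax distributions, with an optional temperature) is a common choice and is an assumption here, not necessarily what Ultravox uses. The function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=1.0):
    """KL(teacher || student) for one token's vocabulary distribution."""
    p = softmax(teacher_logits, temperature)  # teacher: text-only backbone
    q = softmax(student_logits, temperature)  # student: model fed audio
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q))


teacher = [2.0, 0.5, -1.0]
print(kd_loss(teacher, teacher))          # identical logits give zero loss
print(kd_loss([0.0, 0.0, 0.0], teacher))  # mismatched logits give a positive loss
```

In this picture the teacher sees the text transcript and the student sees the audio, so minimizing the loss pushes the audio path toward the behavior of the text-only model.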

Tutorial

1. Install necessary libraries

- Use pip to install the transformers, peft and librosa libraries.

2. Import the libraries

- Import the transformers, numpy and librosa libraries in your code.

3. Load the model

- Use transformers.pipeline to load the 'fixie-ai/ultravox-v0_4_1-mistral-nemo' model, passing trust_remote_code=True because the model ships its own pipeline code.

4. Prepare audio input

- Use librosa.load to load an audio file; it returns the audio data and the sample rate. Passing sr=16000 resamples the audio to 16 kHz.

5. Define dialogue turns

- Create a list of dialogue turns, starting with a system turn that has a role and content.

6. Call the model

- Call the pipeline with the audio data, dialogue turns and sampling rate as input to generate output text.

7. Get results

- The pipeline returns the generated text, which can be used for further processing or display.
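The steps above can be sketched as follows, after installing the libraries with `pip install transformers peft librosa`. This is a minimal sketch under stated assumptions: the repository id `fixie-ai/ultravox-v0_4_1-mistral-nemo` is assumed to be the Hugging Face name of the model referred to in step 3, the input keys `audio`, `turns` and `sampling_rate` are assumed from the parameters listed in step 6, and the helper names `build_turns`, `build_inputs` and `run_ultravox` are hypothetical. The heavy imports sit inside `run_ultravox` so the helpers can be used without downloading the model.

```python
from typing import Any


def build_turns(system_prompt: str) -> list[dict[str, str]]:
    """Step 5: dialogue turns, starting with a system turn."""
    return [{"role": "system", "content": system_prompt}]


def build_inputs(audio: Any, sampling_rate: int, turns: list) -> dict[str, Any]:
    """Step 6: bundle audio, turns and sampling rate (key names are assumed)."""
    return {"audio": audio, "turns": turns, "sampling_rate": sampling_rate}


def run_ultravox(audio_path: str, system_prompt: str, max_new_tokens: int = 30):
    """Steps 2-7: load the model, read the audio file, and generate text."""
    import librosa        # imported lazily: only needed for a real run
    import transformers

    # Step 3: load the pipeline; the model id is an assumption (see above).
    pipe = transformers.pipeline(
        model="fixie-ai/ultravox-v0_4_1-mistral-nemo",
        trust_remote_code=True,
    )
    # Step 4: load the audio, resampled to 16 kHz.
    audio, sr = librosa.load(audio_path, sr=16000)
    # Steps 6-7: call the model and return whatever the pipeline produces.
    inputs = build_inputs(audio, sr, build_turns(system_prompt))
    return pipe(inputs, max_new_tokens=max_new_tokens)
```

A call such as `run_ultravox("question.wav", "You are a friendly and helpful assistant.")` would then return the generated text; `question.wav` is a hypothetical file name, and the first call downloads the model weights, which are large.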