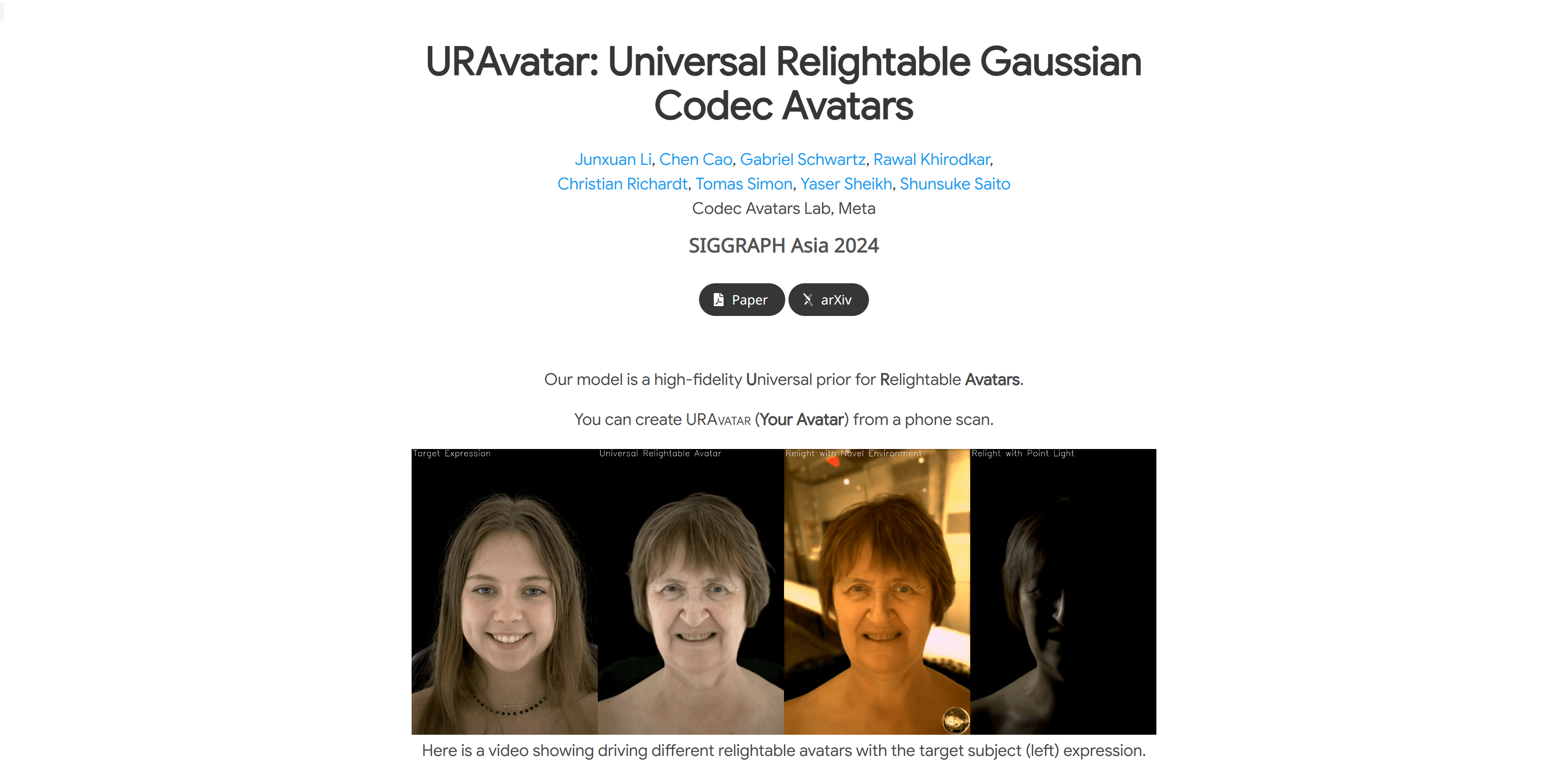

URAvatar is an avatar generation technique that creates photorealistic, relightable head avatars from a mobile phone scan captured under unknown lighting. Unlike traditional methods that estimate parametric reflectance parameters through inverse rendering, URAvatar directly models learnable radiance transfer, which efficiently incorporates global light transport into real-time rendering. Its significance is that a phone scan taken in a single environment yields a head model that looks realistic across many environments and can be animated and relit in real time.
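To make the idea of radiance transfer concrete, here is a minimal sketch in the spirit of classic precomputed radiance transfer. It is not URAvatar's actual implementation. It assumes each surface point carries a small vector of transfer coefficients (which a learned model would predict) and that the environment lighting is projected onto the same basis. Shading then reduces to a dot product, so swapping the lighting does not require re-estimating material parameters. The function name `relight` and all numeric values are hypothetical.

```python
from typing import List, Sequence


def relight(transfer: Sequence[Sequence[float]],
            light: Sequence[Sequence[float]]) -> List[List[float]]:
    """Shade points as dot products of transfer and lighting coefficients.

    transfer[p][k]: transfer coefficient k of point p (assumed to come from a
                    learned model; hypothetical values are used below).
    light[k][c]:    lighting coefficient k for color channel c.
    """
    num_channels = len(light[0])
    colors = []
    for coeffs in transfer:
        if len(coeffs) != len(light):
            raise ValueError("transfer and lighting must share the same basis size")
        colors.append([
            sum(t * basis[c] for t, basis in zip(coeffs, light))
            for c in range(num_channels)
        ])
    return colors


# Two points with two transfer coefficients each (hypothetical values).
transfer = [[1.0, 0.0], [0.5, 0.25]]

# Two lighting environments expressed in the same two-term basis (RGB).
warm_light = [[1.0, 0.5, 0.25], [0.0, 0.0, 0.0]]
cool_light = [[0.25, 0.5, 1.0], [0.0, 0.0, 4.0]]

# The same transfer data is reused; only the lighting coefficients change.
warm = relight(transfer, warm_light)
cool = relight(transfer, cool_light)
```

Because the transfer coefficients stay fixed while only the lighting vector changes, relighting costs one small dot product per point, which is what makes this family of approaches attractive for real-time use.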

Target audience:

URAvatar is aimed at professionals who need realistic avatars, such as game developers, film visual effects artists, and virtual reality content creators. Its high fidelity and real-time rendering make it particularly suited to scenarios where realistic avatars must be generated quickly and adjusted to different lighting conditions.

Example use cases:

Game developers use URAvatar technology to create realistic facial expressions and dynamics for game characters.

Film visual effects artists use URAvatar technology to achieve realistic facial animation for characters in movies.

Virtual reality content creators use URAvatar technology to provide virtual characters with more realistic facial expressions and lighting effects.

Product Features:

- High fidelity: Ability to create realistic head avatar models.

- Universality: Avatar reconstruction for multiple identities and environments.

- Real-time rendering: The avatar can be animated and relit in real time.

- Learnable radiance transfer: Models radiance transfer directly instead of estimating reflectance parameters, which improves rendering efficiency.

- Multi-view training: Trains a cross-identity decoder on multi-view facial performance data.

- Personalized fine-tuning: Fine-tunes the pretrained model with inverse rendering to obtain a personalized relightable avatar.

- Decoupled control: Provides independent control of relighting, gaze, and neck pose.
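As a rough illustration of what decoupled control means in practice, the sketch below keeps each control signal in its own field, so changing the lighting leaves expression, gaze, and neck pose untouched. The class `AvatarControls`, its fields, and the helper `with_lighting` are hypothetical names chosen for this example and do not reflect URAvatar's real interface.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class AvatarControls:
    """Hypothetical container for independent avatar control signals."""
    expression: Tuple[float, ...]      # assumed latent expression code
    gaze: Tuple[float, float]          # assumed (yaw, pitch) in degrees
    neck: Tuple[float, float, float]   # assumed (yaw, pitch, roll) in degrees
    lighting: str                      # assumed name of an environment map


def with_lighting(controls: AvatarControls, lighting: str) -> AvatarControls:
    """Return a copy with only the lighting changed."""
    return replace(controls, lighting=lighting)


base = AvatarControls(
    expression=(0.1, -0.3),
    gaze=(5.0, -2.0),
    neck=(0.0, 10.0, 0.0),
    lighting="outdoor",
)

# Relight the avatar without touching expression, gaze, or neck pose.
relit = with_lighting(base, "studio")
```

Keeping the controls in separate fields mirrors the idea that a renderer can consume each signal independently, for example driving gaze from an eye tracker while lighting follows the virtual scene.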

How to use:

1. Prepare a mobile phone and the person to be scanned.

2. Scan the subject's head with the phone in a natural lighting environment.

3. Process the scan data with URAvatar to reconstruct the head pose, geometry, and albedo texture.

4. Fine-tune the pretrained model on the scan to obtain a personalized relightable avatar.

5. Adjust relighting, gaze, and neck pose independently through the decoupled controls.

6. Place the avatar in different virtual environments to achieve real-time animation and lighting effects.