What is Valley-Eagle-7B?

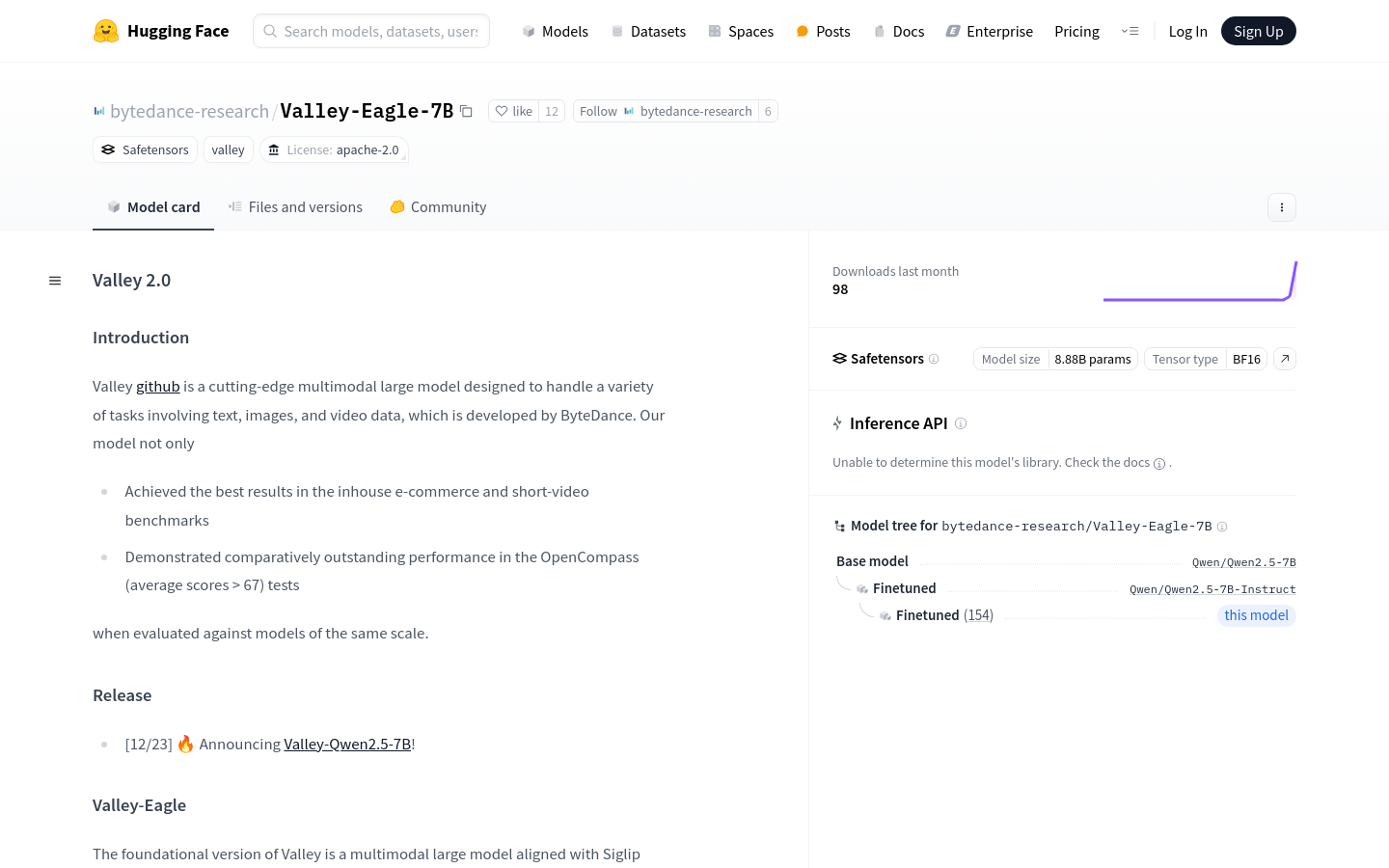

Valley-Eagle-7B is a multi-modal large model developed by ByteDance for tasks involving text, image, and video data. It achieved the best results on internal e-commerce and short-video benchmarks and, compared with models of similar scale, performs strongly on OpenCompass, with an average score above 67. Its projector is built from LargeMLP and ConvAdapter components, and it introduces a VisionEncoder to improve performance in extreme scenarios.

Who is it suitable for?

Valley-Eagle-7B suits businesses and research institutions that process and analyze large volumes of multi-modal data, such as e-commerce platforms and video content analysis platforms. It can help improve the efficiency and accuracy of data processing, which in turn supports a better user experience and better business decisions.

Example Scenarios

E-commerce platforms can use Valley-Eagle-7B to analyze user comments and product images in order to improve product recommendations.

Video platforms can utilize Valley-Eagle-7B for content moderation, automatically identifying and filtering inappropriate content.

Research institutions can employ Valley-Eagle-7B for multi-modal data research, exploring new data analysis methods.
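
For the video moderation scenario, a pipeline usually has to choose a limited number of frames to pass to the model rather than every frame. The following is a minimal sketch of uniform frame sampling. It illustrates a common preprocessing step and is not taken from Valley-Eagle-7B's own code; the function name `sample_frame_indices` and the sample count are hypothetical.

```python
def sample_frame_indices(total_frames, num_samples):
    """Pick `num_samples` evenly spaced frame indices from a video.

    Hypothetical helper: the video is split into equal segments and the
    middle frame of each segment is selected.
    """
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    # Short clips: keep every frame instead of repeating indices.
    if num_samples >= total_frames:
        return list(range(total_frames))
    segment = total_frames / num_samples
    return [int(segment * i + segment / 2) for i in range(num_samples)]


# A 100-frame clip reduced to 4 frames for review.
print(sample_frame_indices(100, 4))  # prints [12, 37, 62, 87]
```

The selected frames would then be decoded and sent to the model together with a moderation prompt; how many frames to sample is a trade-off between cost and coverage that should be tuned per platform.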

Key Features

Achieves the best results on internal e-commerce and short-video benchmarks

Averages above 67 on OpenCompass tests

Combines LargeMLP and ConvAdapter to build projectors

Introduces VisionEncoder to enhance performance in extreme scenarios

Flexible model structure allowing adjustment of visual token count

Supports multi-modal data processing including text, images, and videos

Using the Model

1. Visit the Hugging Face website and search for the Valley-Eagle-7B model.

2. Use pip to install the required dependencies, following the code examples on the model page.

3. Set up the rest of your environment as the guide describes, including torch, torchvision, and torchaudio.

4. Download the Valley-Eagle-7B model weights.

5. Write code for your specific multi-modal task, using the usage examples on the model page as a starting point.

6. Run the code and analyze the model output to get the desired results.

7. Adjust model parameters as needed to optimize performance.
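
Steps 2 to 4 can be sketched in Python as follows. This is a minimal sketch rather than the official setup procedure: it assumes the `huggingface_hub` package is installed for the download step, and the helper names `missing_packages` and `download_model` are hypothetical.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that cannot be imported in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def download_model(repo_id, local_dir):
    """Download a model repository from the Hugging Face Hub and return its local path.

    Assumes `huggingface_hub` is installed (pip install huggingface_hub).
    """
    # Imported lazily so the environment check works without this package.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


# Packages named in step 3; extend this list with whatever the model page requires.
REQUIRED = ["torch", "torchvision", "torchaudio"]

missing = missing_packages(REQUIRED)
if missing:
    print("Install before continuing:", ", ".join(missing))
else:
    print("All required packages are importable.")
```

Once nothing is reported missing, a call such as `download_model("bytedance-research/Valley-Eagle-7B", "./valley-eagle-7b")` would fetch the weights. The repository id shown here is an assumption, so copy the exact id from the model page before running it.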