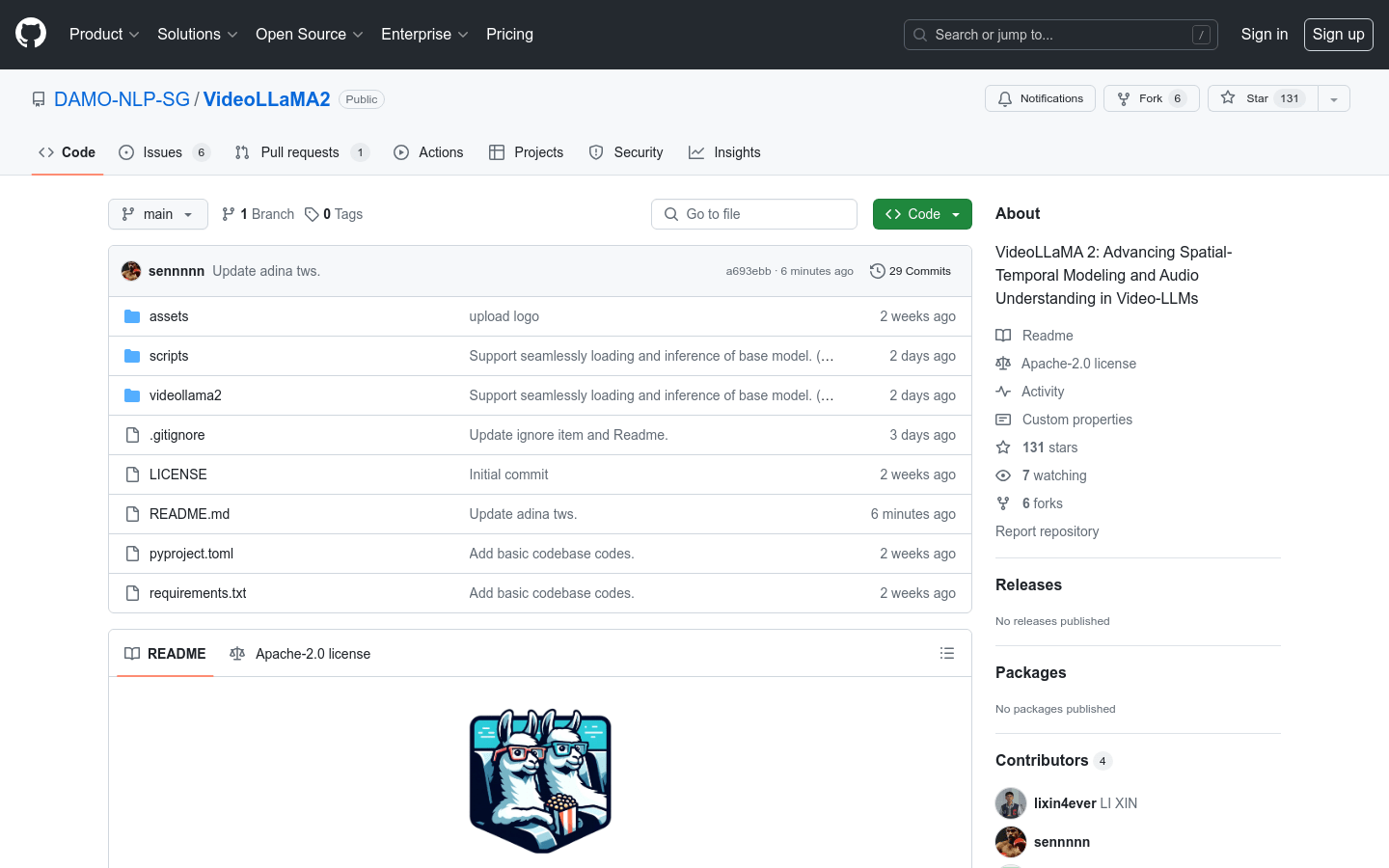

What is VideoLLaMA 2?

VideoLLaMA 2 is a large language model designed for understanding video content. It analyzes both the visual stream (what is happening) and the audio track (what is being said), which helps with tasks such as answering questions about videos and generating subtitles.

Who is VideoLLaMA 2 For?

VideoLLaMA 2 is aimed at researchers and developers who need to analyze video data, particularly for video question answering and automatic subtitle generation.

Use Cases: Real-World Applications

- Research: Researchers can use VideoLLaMA 2 as a base for building and evaluating video question-answering systems.

- Content Creation: Content creators can use VideoLLaMA 2 to generate subtitles automatically, reducing manual effort.

- Businesses: Companies can integrate VideoLLaMA 2 into video surveillance systems to help detect events and shorten response times.

Key Features: What Makes VideoLLaMA 2 Stand Out?

- Easy Integration: Load the core model and use it in your own projects.

- Interactive Demo: An online demo lets you try VideoLLaMA 2's capabilities quickly.

- Versatile Functionality: Supports video question answering and automatic subtitle generation.

- Comprehensive Support: Includes code for training, evaluation, and model serving.

- Customization: Supports training and evaluation using your own custom datasets.

- Detailed Documentation: Installation and usage guides are provided.
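Training or evaluating on a custom dataset, as listed above, usually starts with checking that your own annotation file is well formed. The sketch below illustrates that idea only. The `video`, `question`, and `answer` fields are a hypothetical schema chosen for illustration, not the format VideoLLaMA 2 expects, so consult the project's documentation for the real layout.

```python
import json

# Hypothetical schema for illustration only; not the project's documented format.
REQUIRED_FIELDS = ("video", "question", "answer")


def validate_records(records):
    """Return a list of (index, problem) pairs; an empty list means all records passed."""
    problems = []
    for i, rec in enumerate(records):
        if not isinstance(rec, dict):
            problems.append((i, "record is not an object"))
            continue
        for field in REQUIRED_FIELDS:
            value = rec.get(field)
            # Each field must be a non-empty string.
            if not isinstance(value, str) or not value.strip():
                problems.append((i, f"missing or empty '{field}'"))
    return problems


# Two made-up records; the second one has an empty question on purpose.
sample = json.loads("""
[
  {"video": "clips/0001.mp4", "question": "What is the person doing?", "answer": "Pouring coffee."},
  {"video": "clips/0002.mp4", "question": "", "answer": "A dog barks."}
]
""")

for index, problem in validate_records(sample):
    print(f"record {index}: {problem}")
```

Running a check like this before training surfaces malformed records early, rather than partway through a long job.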

Getting Started: A Step-by-Step Guide

- Install Dependencies: Make sure you have the necessary software installed, including Python, PyTorch, and CUDA (if using a GPU).

- Download the Code: Clone the VideoLLaMA 2 code repository from GitHub and follow its instructions to install the required Python packages.

- Prepare Model Checkpoints: Download the required model checkpoints, then follow the documentation to start the model service.

- Run and Refine: Use the provided scripts and command-line tools to train, evaluate, or run inference with the model. Adjust model parameters as needed to optimize performance.

- Experience VideoLLaMA 2: Use the online demo or your local model service to try its video understanding and generation capabilities.
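Before working through the steps above, it can help to confirm that the dependencies from the first step are actually in place. The following is a minimal sketch of such a check. The function name `check_environment` and the minimum Python version are assumptions made for illustration, not requirements stated by the project. It uses only the standard library plus PyTorch's `torch.cuda.is_available()`.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 8)):
    """Report whether Python, PyTorch, and CUDA look usable.

    min_python is an assumed placeholder, not a documented requirement.
    """
    report = {
        "python_ok": sys.version_info >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        try:
            # Imported lazily so the check also runs on machines without PyTorch.
            import torch
            report["cuda_available"] = bool(torch.cuda.is_available())
        except (ImportError, OSError):
            # A package that is present but fails to load counts as not installed.
            report["torch_installed"] = False
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {ok}")
```

If `cuda_available` is reported as false, the model can only run on the CPU, which is likely to be slow for video inputs.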

Tags:

#VideoLLaMA2 #VideoUnderstanding #AI #LargeLanguageModel #MachineLearning #VideoAnalysis #SubtitleGeneration #VideoQA #DeepLearning