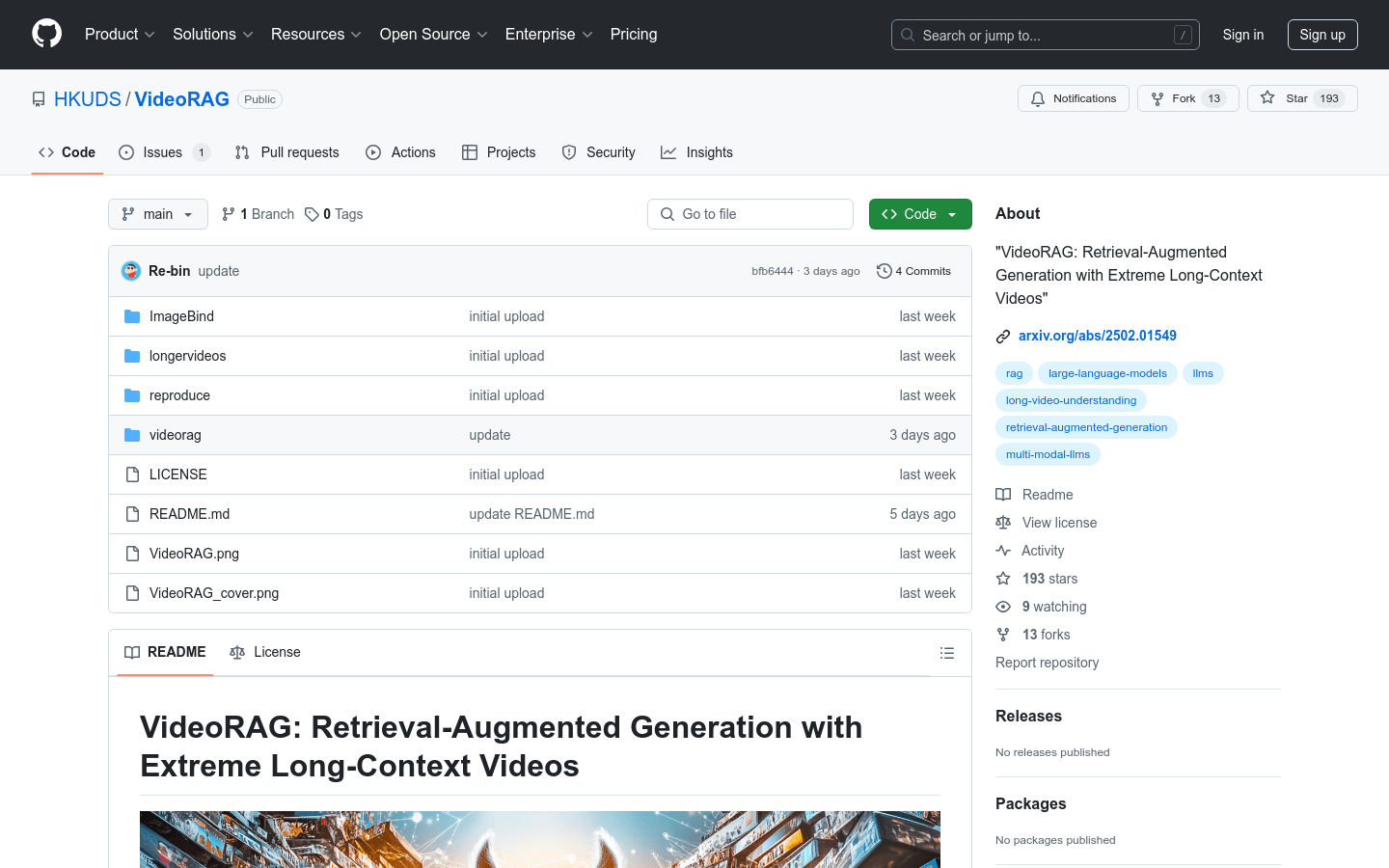

VideoRAG is a retrieval-augmented generation (RAG) framework designed for understanding and processing extremely long-context videos. It handles videos of effectively unlimited length by combining graph-driven textual knowledge grounding with hierarchical multimodal context encoding. The framework dynamically builds knowledge graphs, maintains semantic coherence across multi-video contexts, and optimizes retrieval efficiency through an adaptive multimodal fusion mechanism. Its main advantages are efficient processing of extremely long-context video, structured video knowledge indexing, and multimodal retrieval, which together let it give comprehensive answers to complex queries. These capabilities make it relevant to both research and applications in long-video understanding.

Target audience:

VideoRAG suits researchers, developers, and other professionals who need to process and understand extremely long-context videos, such as educational video creators, film and television production teams, and companies that need to extract knowledge from large video collections. It helps them extract useful information from long videos efficiently and supports analysis, summarization, and question answering over video content.

Example usage scenarios:

Researchers can use VideoRAG to extract key knowledge points from a large number of academic lecture videos for academic research and teaching.

Film and television production teams can use VideoRAG to quickly retrieve video clips related to specific topics to improve video editing efficiency.

Businesses can use VideoRAG to extract key information from internal training videos for employee training and knowledge management.

Product Features:

Efficient extremely long-context video processing: processes hundreds of hours of video content on a single NVIDIA RTX 3090 GPU.

Structured video knowledge indexing: distills hundreds of hours of video content into a structured knowledge graph.

Multimodal retrieval: combines text semantics and visual content to accurately retrieve relevant video clips.

Multilingual video processing: videos in other languages are supported by modifying the Whisper model used for speech recognition.

Long-video benchmark dataset: includes more than 160 videos totaling more than 134 hours, covering lectures, documentaries, and entertainment.
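The multimodal retrieval feature can be pictured as a late fusion of two similarity scores per clip. The following is a minimal sketch, not VideoRAG's actual implementation: the source does not describe the fusion formula, so the weighted sum, the `alpha` weight, the `Clip` type, and the toy embedding vectors are all illustrative assumptions.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Clip:
    """Hypothetical clip record with precomputed text and visual embeddings."""
    clip_id: str
    text_vec: list[float]
    visual_vec: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_clips(query_text_vec, query_visual_vec, clips, alpha=0.5, top_k=2):
    """Rank clips by alpha * text similarity + (1 - alpha) * visual similarity."""
    scored = [
        (alpha * cosine(query_text_vec, c.text_vec)
         + (1 - alpha) * cosine(query_visual_vec, c.visual_vec), c.clip_id)
        for c in clips
    ]
    scored.sort(reverse=True)  # highest fused score first
    return [clip_id for _, clip_id in scored[:top_k]]


# Toy 2-dimensional embeddings, made up for illustration only.
clips = [
    Clip("lecture_01#00:05", [1.0, 0.0], [1.0, 0.0]),
    Clip("lecture_01#00:42", [0.0, 1.0], [1.0, 0.0]),
    Clip("documentary_03#01:10", [0.0, 1.0], [0.0, 1.0]),
]
top = rank_clips([1.0, 0.0], [1.0, 0.0], clips)
print(top)
```

Raising `alpha` in this sketch favors clips whose transcript or caption matches the query, while lowering it favors visual matches.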

How to use:

1. Create a Conda environment and install the necessary dependencies, such as PyTorch and transformers.

2. Download the pretrained model checkpoints for MiniCPM-V, Whisper, and ImageBind.

3. Pass a list of video file paths to VideoRAG to extract and index the video knowledge.

4. Ask a question about the video content; VideoRAG answers it by retrieving the relevant content and generating a response.

5. To process videos in other languages, modify the code to adapt it to the language of the video content.
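The index-then-query flow in steps 3 and 4 can be sketched with a toy stand-in. This is not the VideoRAG API: `ToyVideoIndex`, `insert_videos`, `query`, the stub transcriber, and the made-up transcripts are all hypothetical, and simple word overlap replaces the framework's knowledge graph and multimodal retrieval.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyVideoIndex:
    """Hypothetical stand-in for the index/query flow; not the VideoRAG API."""

    def __init__(self, transcribe):
        # `transcribe` maps a video path to a list of (timestamp, text) segments.
        self.transcribe = transcribe
        self.segments = []  # (video_path, timestamp, token counts, text)

    def insert_videos(self, video_paths):
        """Step 3: extract text from each video and index it segment by segment."""
        for path in video_paths:
            for timestamp, text in self.transcribe(path):
                self.segments.append((path, timestamp, Counter(tokenize(text)), text))

    def query(self, question, top_k=1):
        """Step 4, retrieval half: return the segments sharing the most words with the question."""
        q = Counter(tokenize(question))
        scored = sorted(
            self.segments,
            key=lambda seg: sum((q & seg[2]).values()),
            reverse=True,
        )
        return [(path, ts, text) for path, ts, _, text in scored[:top_k]]


# Stub transcriber with made-up transcripts; a real pipeline would run speech recognition here.
FAKE_TRANSCRIPTS = {
    "lecture.mp4": [("00:00", "Today we introduce knowledge graphs"),
                    ("10:00", "Retrieval finds the relevant clips")],
    "documentary.mp4": [("00:00", "The ocean covers most of the planet")],
}

index = ToyVideoIndex(lambda path: FAKE_TRANSCRIPTS[path])
index.insert_videos(["lecture.mp4", "documentary.mp4"])
hits = index.query("What are knowledge graphs?")
print(hits)
```

In a real system, the retrieved segments would then be passed to a language model that generates the final answer. The sketch stops at retrieval to stay self-contained.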