What is VividTalk?

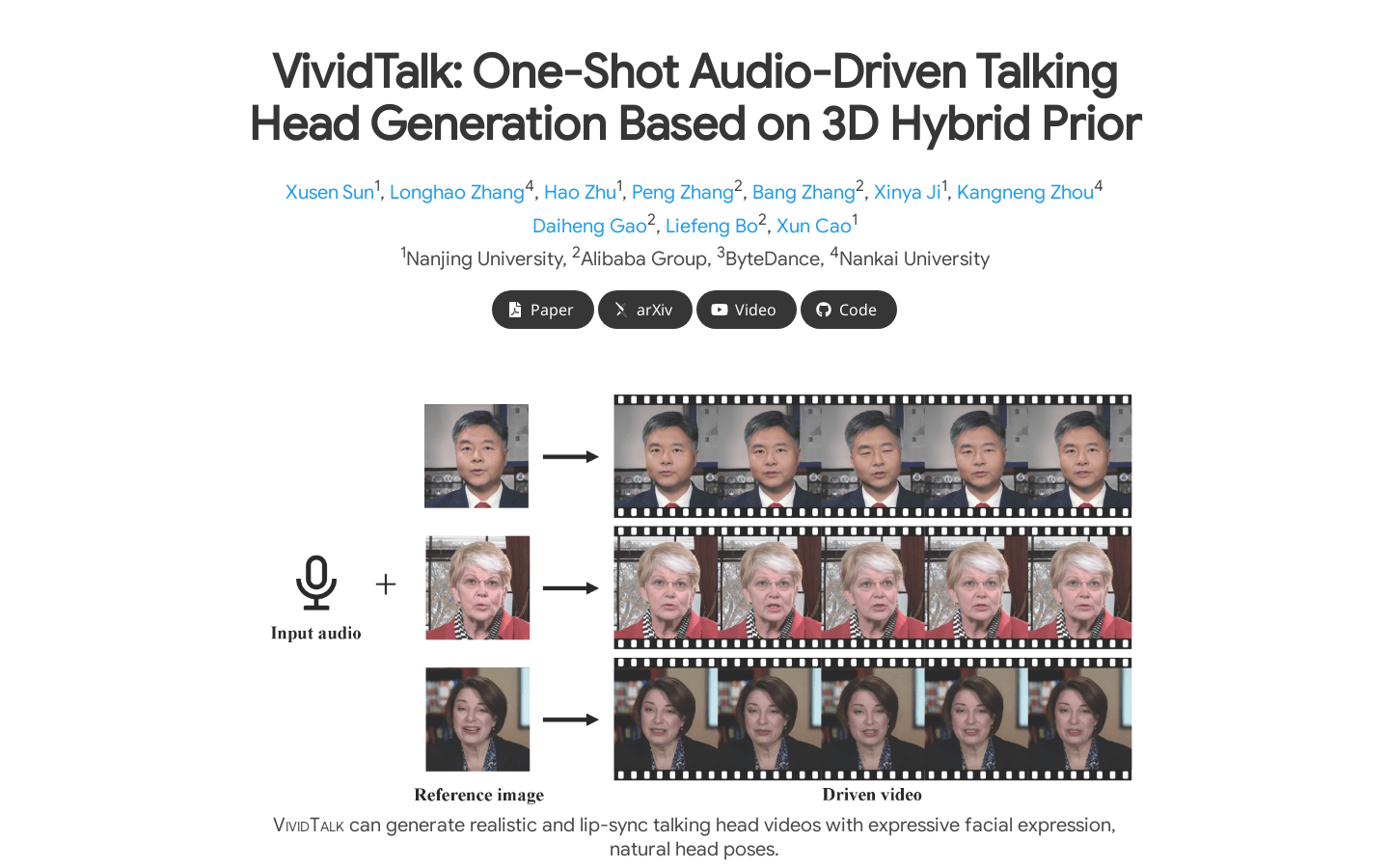

VividTalk is a one-shot, audio-driven talking head generation technology. From a single reference image and an audio clip, it uses 3D hybrid priors in a two-stage framework to produce lifelike talking head videos with rich expressions, natural head movements, and accurate lip synchronization.

In the first stage, it maps audio to a mesh by learning non-rigid facial expressions and rigid head movements. For facial expressions, it uses a combination of blendshapes and vertices to enhance representational capabilities. For natural head movements, it introduces a learnable head pose dictionary and a two-phase training mechanism.
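The idea of combining blendshapes with vertices, then applying a rigid head pose, can be illustrated with a minimal sketch. This is not VividTalk's implementation (the code has not been released). The function names, data layout, and the simple additive combination are all assumptions made for illustration: blendshape coefficients give a coarse, low-dimensional deformation, per-vertex offsets add fine detail, and a rotation plus translation moves the whole head.

```python
from typing import List, Sequence

Vec3 = List[float]
Mesh = List[Vec3]


def compose_expression(
    template: Mesh,
    blendshapes: Sequence[Mesh],
    coeffs: Sequence[float],
    vertex_offsets: Mesh,
) -> Mesh:
    """Non-rigid part (hypothetical): template + weighted blendshapes + per-vertex offsets."""
    mesh: Mesh = []
    for v, base in enumerate(template):
        vertex: Vec3 = []
        for axis in range(3):
            # Coarse deformation: weighted sum of blendshape displacements.
            coarse = sum(c * shape[v][axis] for c, shape in zip(coeffs, blendshapes))
            # Fine deformation: a free offset for this vertex.
            vertex.append(base[axis] + coarse + vertex_offsets[v][axis])
        mesh.append(vertex)
    return mesh


def apply_head_pose(mesh: Mesh, rotation: Sequence[Vec3], translation: Vec3) -> Mesh:
    """Rigid part (hypothetical): rotate each vertex by a 3x3 matrix, then translate."""
    return [
        [sum(rotation[r][k] * p[k] for k in range(3)) + translation[r] for r in range(3)]
        for p in mesh
    ]
```

In this sketch the blendshape coefficients and vertex offsets would be predicted from audio, and the rotation and translation would come from the head pose branch. Keeping the two parts separate mirrors the split between non-rigid expression and rigid head movement described above.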

In the second stage, a dual-branch motion VAE converts the driven mesh into dense motion, and a generator uses that motion to synthesize high-quality video frames.

Extensive experiments show that VividTalk outperforms previous state-of-the-art methods in lip synchronization, head pose naturalness, identity preservation, and video quality. The code will be released publicly after publication.

Who Can Use VividTalk?

VividTalk is useful for anyone who needs realistic talking head videos. It animates facial images in a range of styles and works with speech in multiple languages.

Example Scenarios

1. Use VividTalk to create realistic talking head videos for virtual hosts.

2. Generate cartoon-style audio-driven avatars using VividTalk.

3. Produce multilingual audio-driven avatar videos with VividTalk.

Key Features

Generates realistic talking head videos with accurate lip synchronization

Supports facial images in different styles, including human, realistic, and cartoon

Creates talking head videos from various audio inputs

Outperforms previous state-of-the-art methods in lip synchronization, head pose naturalness, identity preservation, and video quality