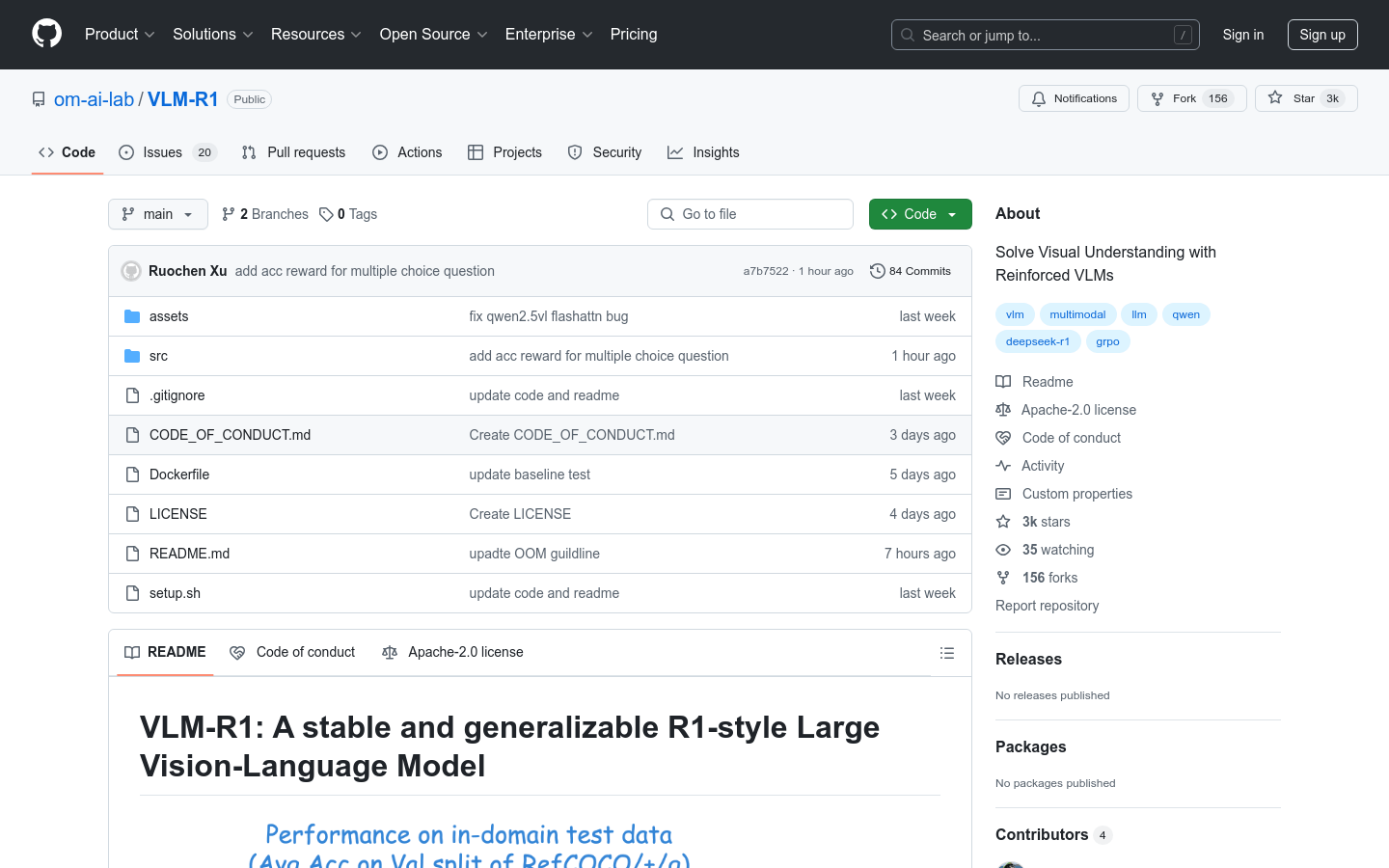

VLM-R1

VLM-R1 is a robust visual language model for tasks such as referring expression comprehension. It is noted for its stability and generalization, with applications that include image annotation and intelligent customer service.

What is VLM-R1?

VLM-R1 is a visual language model built on Qwen2.5-VL that uses reinforcement learning and supervised fine-tuning to understand complex visual scenes. It performs well on tasks such as referring expression comprehension (locating the object in an image that a natural-language phrase describes), with strong generalization and stability. This makes it well suited to applications that need precise visual understanding, such as image annotation, smart customer service, and autonomous driving.
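To make "referring expression comprehension" concrete: the model receives an image plus a phrase such as "the man in the red shirt" and must return a bounding box for the object it describes. Predictions are commonly scored by intersection-over-union (IoU) with the ground-truth box, counting a hit when IoU is at least 0.5, and a score of this kind can also act as a reward signal during reinforcement learning. The sketch below is illustrative only. It assumes the model writes its box as `[x1, y1, x2, y2]` in its text answer, and `iou`, `parse_box`, and `rec_reward` are hypothetical names. This is not VLM-R1's actual reward code.

```python
import re
from typing import List, Optional, Sequence

# Assumed box format: (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Sequence[float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def parse_box(text: str) -> Optional[List[float]]:
    """Hypothetical parser: extract the first '[x1, y1, x2, y2]' from model output."""
    match = re.search(r"\[([^\[\]]*)\]", text)
    if match is None:
        return None
    parts = match.group(1).split(",")
    if len(parts) != 4:
        return None
    try:
        return [float(p) for p in parts]
    except ValueError:
        return None


def rec_reward(answer: str, gt_box: Box, threshold: float = 0.5) -> float:
    """Hypothetical binary reward: 1.0 if the parsed box reaches IoU >= threshold."""
    box = parse_box(answer)
    if box is None:
        return 0.0  # unparseable answers earn nothing
    return 1.0 if iou(box, gt_box) >= threshold else 0.0


if __name__ == "__main__":
    answer = "The person on the left is at [10, 20, 110, 220]."
    print(iou(parse_box(answer), [20, 20, 120, 220]))  # ≈ 0.818
    print(rec_reward(answer, [20, 20, 120, 220]))  # 1.0
```

A binary threshold mirrors the usual accuracy-at-0.5 evaluation; a training setup could instead return the raw IoU as a denser reward, which is a design choice rather than something stated here.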