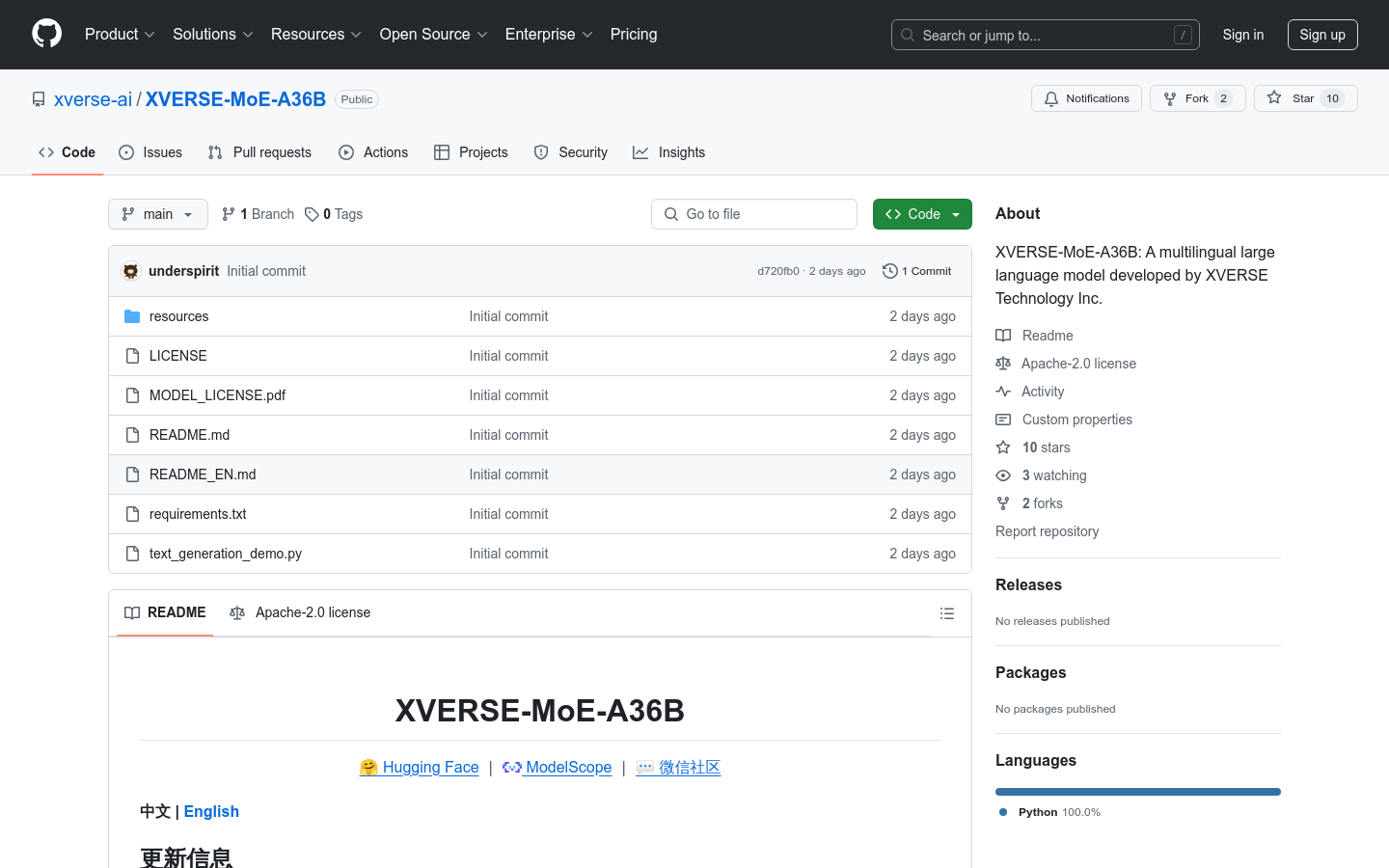

XVERSE-MoE-A36B

XVERSE-MoE-A36B is a multilingual large language model that supports more than 40 languages and is optimized for efficient, high-throughput text generation.

What is XVERSE-MoE-A36B?

XVERSE-MoE-A36B is a large language model developed by Shenzhen Yuanxiang Technology. It supports more than 40 languages, including Chinese and English. The model has 255.4 billion total parameters, of which 36 billion are active, and it uses a Mixture-of-Experts (MoE) architecture to improve performance and efficiency. It is trained on 8K-length samples so that it can handle long texts, and it includes custom optimizations for higher throughput. It is well suited to developers and researchers working on NLP and multilingual content generation.
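
To make the MoE trade-off concrete, the short sketch below works out what share of the model is active, taking the published figures of 255.4 billion total and 36 billion active parameters. The weight-memory estimate is an assumption for illustration only: it supposes 2 bytes per parameter (as with bf16 weights) and ignores activations, KV cache, and any quantization. The function and constant names are hypothetical, not part of any XVERSE tooling.

```python
# Published parameter counts for XVERSE-MoE-A36B, in billions.
TOTAL_PARAMS_B = 255.4
ACTIVE_PARAMS_B = 36.0

# Assumption for illustration: 2 bytes per parameter (bf16-style weights).
BYTES_PER_PARAM = 2


def active_fraction(total_b: float, active_b: float) -> float:
    """Return the share of parameters that are active in a sparse MoE model."""
    if total_b <= 0 or not 0 <= active_b <= total_b:
        raise ValueError("expected 0 <= active_b <= total_b and total_b > 0")
    return active_b / total_b


def weight_memory_gb(params_b: float, bytes_per_param: int) -> float:
    """Rough weight-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params_b * 1e9 * bytes_per_param / 1e9


fraction = active_fraction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B)
all_weights_gb = weight_memory_gb(TOTAL_PARAMS_B, BYTES_PER_PARAM)
active_weights_gb = weight_memory_gb(ACTIVE_PARAMS_B, BYTES_PER_PARAM)

print(f"Active share of parameters: {fraction:.1%}")
print(f"Weights held in memory (all experts): ~{all_weights_gb:.1f} GB")
print(f"Weights used by the active parameters: ~{active_weights_gb:.1f} GB")
```

Under these assumptions, only about 14% of the parameters take part in the computation at a time, which is where the efficiency gain comes from, while the full set of expert weights still has to be stored and served.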