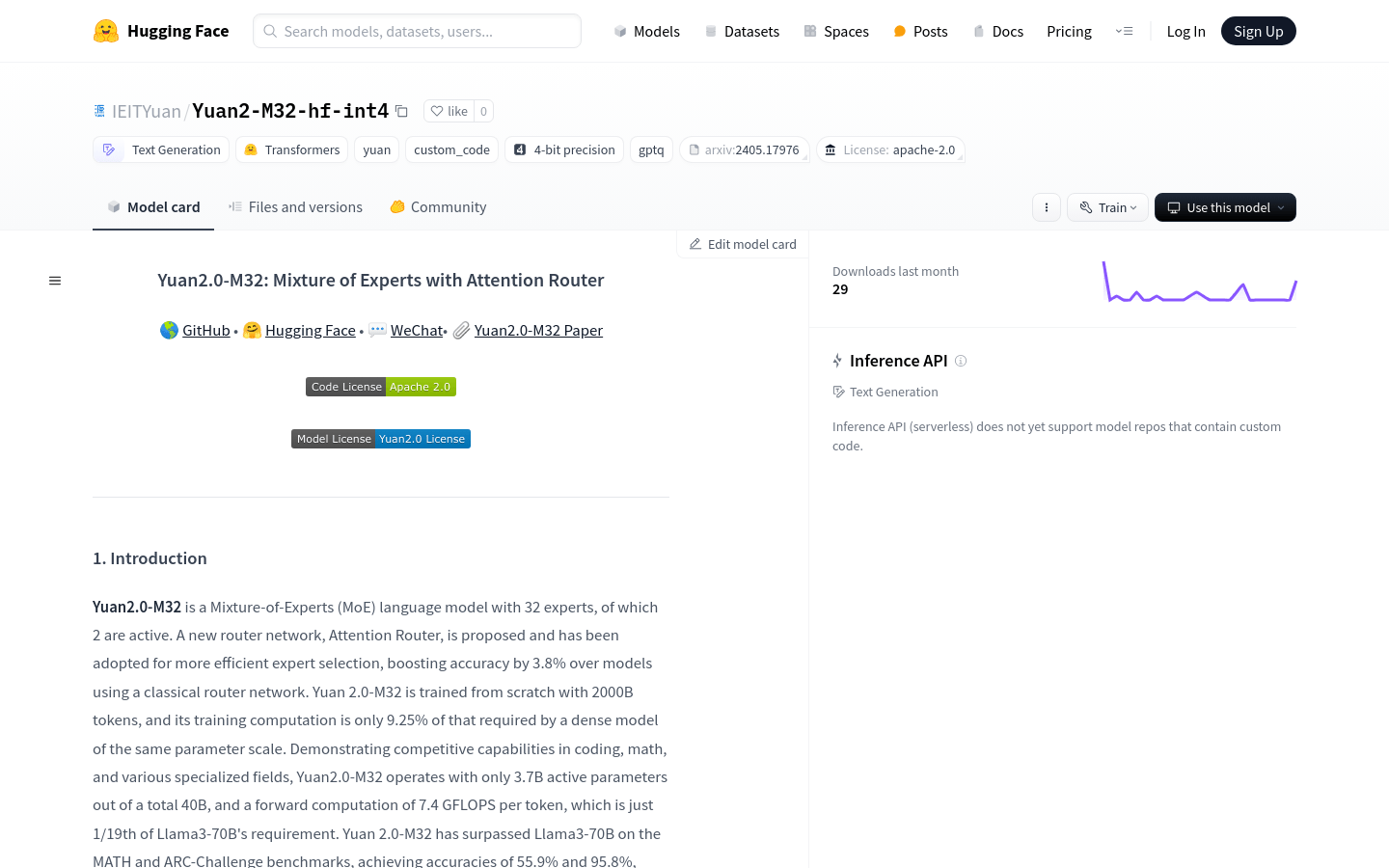

Yuan2.0-M32 is a Mixture-of-Experts (MoE) language model with 32 experts, 2 of which are active for each token. It introduces a new routing network, the Attention Router, which selects experts more efficiently and yields higher accuracy than a model using a classical router network. Yuan2.0-M32 was trained from scratch on 2,000 billion tokens, and its training compute was only 9.25% of that required by a dense model of the same parameter scale. The model is competitive in coding, mathematics, and various specialized domains while using only 3.7 billion active parameters out of 40 billion in total. Its forward computation is 7.4 GFLOPs per token. Both figures are only about 1/19 of those of Llama3-70B. Yuan2.0-M32 surpasses Llama3-70B on the MATH and ARC-Challenge benchmarks, with accuracies of 55.9% and 95.8%, respectively.
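To make the "32 experts, 2 active" idea concrete, here is a minimal sketch of top-2 expert routing in plain Python. It uses a classical softmax gate, not the Attention Router, whose internals are not described on this page. The stand-in experts, the random router scores, and the function names `route_top_k` and `moe_forward` are all hypothetical and chosen only for illustration.

```python
import math
import random

NUM_EXPERTS = 32  # number of experts, from the description above
TOP_K = 2         # experts active per token, from the description above


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=TOP_K):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts.

    Weights are renormalized so that they sum to 1 over the selected experts.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(token, experts, router_logits):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](token) for i, w in route_top_k(router_logits))


random.seed(0)
# Hypothetical stand-in experts: expert i multiplies its input by (i + 1).
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]
# Hypothetical router scores; a real router computes these from the token.
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route_top_k(logits)
output = moe_forward(1.0, experts, logits)
print("selected experts and weights:", selected)
print("mixed output:", output)
```

Only 2 of the 32 expert functions are evaluated per token, which is why the active parameter count is far smaller than the total.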

Target audience:

Yuan2.0-M32 is suited to developers and researchers who handle large volumes of data and complex computing tasks, especially in programming, mathematical computation, and specialized professional domains. Its high efficiency and low compute requirements make it a good fit for large-scale language model applications.

Example usage scenarios:

In programming, Yuan2.0-M32 can be used for code generation and code quality evaluation.

In mathematics, the model can solve complex mathematical problems and carry out logical reasoning.

In professional fields such as medicine or law, Yuan2.0-M32 can assist professionals with knowledge retrieval and document analysis.

Product Features:

Mixture-of-Experts (MoE) model with 32 experts, 2 of which are active.

Uses an Attention Router for more efficient expert selection.

Trained from scratch on 2,000 billion tokens.

Training compute is only 9.25% of that of a dense model at the same parameter scale.

Competitive in coding, mathematics, and specialized professional domains.

Low forward computation: only 7.4 GFLOPs per token.

Strong results on the MATH and ARC-Challenge benchmarks (55.9% and 95.8% accuracy).
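The per-token compute figure can be sanity-checked with simple arithmetic. The sketch below assumes the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter (one multiply and one add per weight). That rule is an assumption introduced here, not something stated on this page. The 70 billion parameter count for Llama3-70B is taken from the model's name.

```python
# Rule of thumb (assumption): forward pass costs ~2 FLOPs per active parameter.
FLOPS_PER_PARAM = 2

forward_gflops_per_token = 7.4   # Yuan2.0-M32 figure quoted above
llama3_70b_params_billion = 70.0  # dense model, all parameters active

# Active parameters implied by the quoted compute figure, in billions.
implied_active_params_billion = forward_gflops_per_token / FLOPS_PER_PARAM

# Estimated forward compute of the dense 70B model, in GFLOPs per token.
llama3_gflops_per_token = FLOPS_PER_PARAM * llama3_70b_params_billion

# How many times more compute the dense model needs per token.
compute_ratio = llama3_gflops_per_token / forward_gflops_per_token

# Share of experts evaluated for each token (2 of 32).
expert_fraction = 2 / 32

print(f"implied active parameters: {implied_active_params_billion:.1f} billion")
print(f"Llama3-70B estimate: {llama3_gflops_per_token:.0f} GFLOPs per token")
print(f"compute ratio: about {compute_ratio:.1f}x")
print(f"experts active per token: {expert_fraction:.2%}")
```

Under that assumption, 7.4 GFLOPs per token corresponds to roughly 3.7 billion active parameters, and the ratio of about 18.9 matches the quoted "1/19 of Llama3-70B".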

Usage tutorial:

1. Set up the environment: start the Yuan2.0 container from the recommended Docker image.

2. Preprocess the data as described in the documentation.

3. Pre-train the model with the provided scripts.

4. Deploy the inference service by following the detailed vLLM deployment guide.

5. Visit the GitHub repository for more information and documentation.

6. Comply with the Apache 2.0 open source license, and read and comply with the "Yuan2.0 Model License Agreement".
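Once the inference service from step 4 is running, a client might query it along the following lines. This is a sketch under several assumptions: that the service exposes vLLM's OpenAI-compatible `/v1/completions` endpoint, that it listens on `http://localhost:8000`, and that the model is registered under the placeholder name `yuan2-m32`. None of these are confirmed by this page, so check the deployment guide for the actual endpoint and model name. The helper `build_completion_request` is hypothetical.

```python
import json
import urllib.request


def build_completion_request(prompt, base_url="http://localhost:8000",
                             model="yuan2-m32", max_tokens=256):
    """Build (but do not send) a POST request for a completions endpoint.

    base_url and model are placeholders; replace them with the values
    from your own deployment.
    """
    payload = {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": 0.0,  # deterministic output for easier comparison
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_completion_request(
    "Write a Python function that reverses a string."
)
print(request.get_method(), request.full_url)

# To send the request once a server is actually running:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["text"])
```

Building the request separately from sending it makes it easy to inspect the payload before pointing the client at a live server.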