DeepSeek is one of the most closely watched series of language models in artificial intelligence. With each release, it has steadily strengthened its ability to handle a wide range of tasks. This article introduces each version of DeepSeek in detail, covering its release time, features, advantages, and shortcomings, as a reference guide for AI enthusiasts and developers.

DeepSeek-V1 is the first version of DeepSeek. I will not describe it at length here and will mainly analyze its advantages and disadvantages.

1. Release time:

January 2024

2. Features:

DeepSeek-V1 is the first version of the DeepSeek series, pre-trained on 2 trillion tokens of data, focusing on natural language processing and coding tasks. It supports multiple programming languages and has strong coding capabilities, making it suitable for software developers and technical researchers.

3. Advantages:

Strong coding capabilities: It supports multiple programming languages, can understand and generate code, and helps developers automate code generation and debugging.

Large context window: It supports context windows of up to 128K tokens, which lets it handle more complex text comprehension and generation tasks.

4. Disadvantages:

Limited multimodal capabilities: This version mainly focuses on text processing and lacks support for multimodal tasks such as images and speech.

Weak reasoning ability: Although it performs well in natural language processing and coding, it falls short of subsequent versions in complex logical reasoning and deep reasoning tasks.

As an early version of DeepSeek, DeepSeek-V2 performs much better than DeepSeek-V1; the gap is comparable to that between the first version of ChatGPT and ChatGPT 3.5.

1. Release time:

First half of 2024

2. Features:

The DeepSeek-V2 series has 236 billion parameters, making it an efficient and powerful version. It combines high performance with low training cost, and it is fully open source and free for commercial use, which greatly promotes the adoption of AI applications.

3. Advantages:

Efficient performance and low cost: The training cost is said to be only 1% of that of GPT-4-Turbo, which greatly lowers the barrier to development and makes it suitable for scientific research and commercial applications.

Open source and free commercial use: Unlike the previous version, V2 is fully open source and can be freely used commercially, which makes the DeepSeek ecosystem more open and diverse.

4. Disadvantages:

Slow inference speed: Given its huge number of parameters, DeepSeek-V2 is slower at inference than subsequent versions, which affects the performance of real-time tasks.

Limited multimodal capability: Like V1, V2 does not perform well on non-text tasks (such as images and audio).

1. Release time:

September 2024

Below is the official update log for version V2.5:

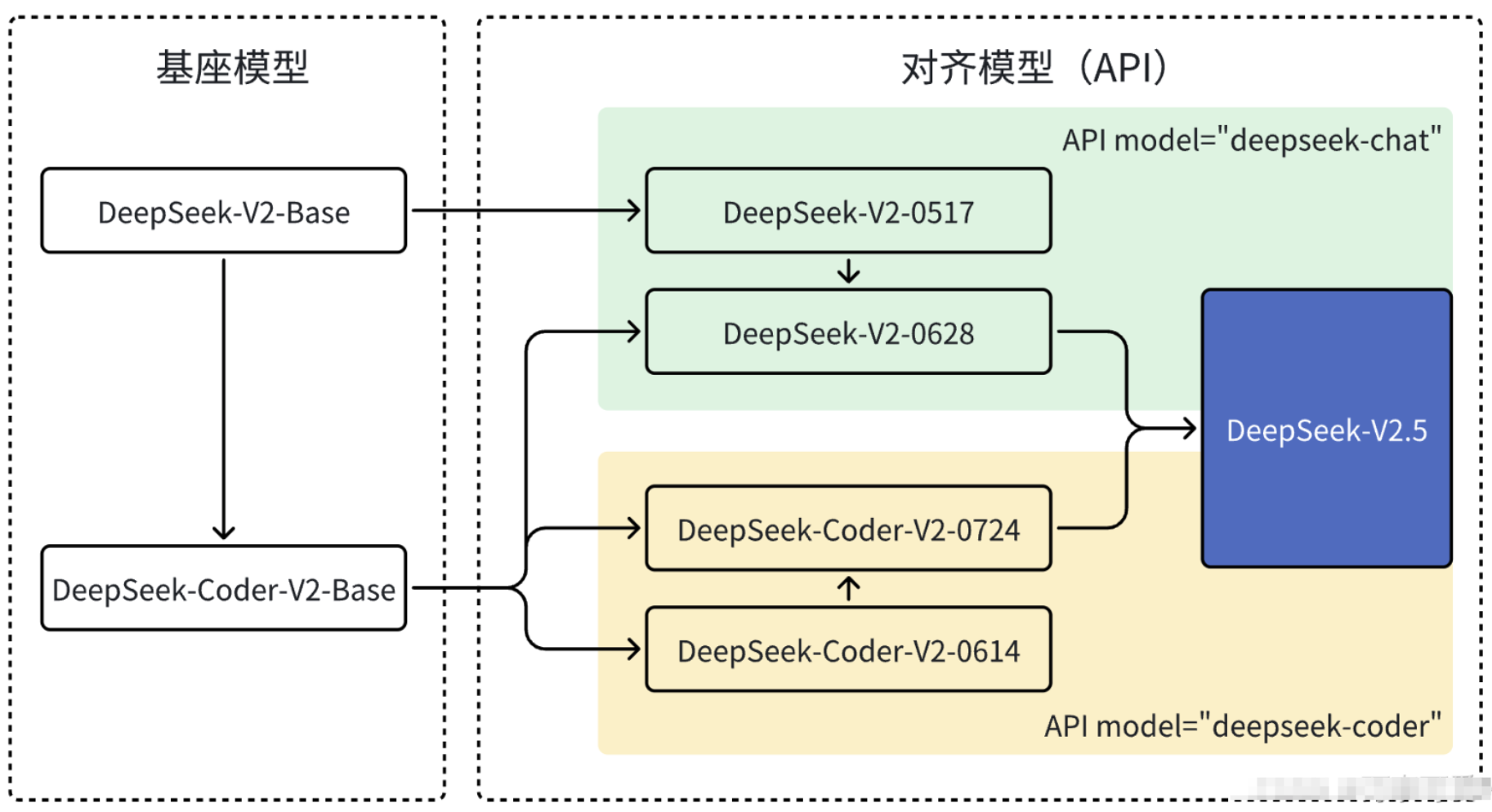

DeepSeek has been focusing on model improvement and optimization. In June, we made a major upgrade to DeepSeek-V2-Chat, replacing the original Chat Base model with the Coder V2 Base model, which significantly improved its code generation and reasoning capabilities, and released the DeepSeek-V2-Chat-0628 version. Immediately afterwards, DeepSeek-Coder-V2, building on the original Base model, greatly improved its general capabilities through alignment optimization and was launched as the DeepSeek-Coder-V2 0724 version. Finally, we successfully merged the Chat and Coder models to launch the new DeepSeek-V2.5 version.

As can be seen, DeepSeek merged two models in this update, allowing DeepSeek-V2.5 to assist developers with more difficult tasks.

Chat model: designed and optimized for dialogue systems (chatbots). It generates natural language conversations, understands context, and produces coherent and meaningful replies. Common applications include chatbots and smart assistants.

Coder model: an artificial intelligence model based on deep learning that is trained on a large amount of code data to understand, generate, and process code.

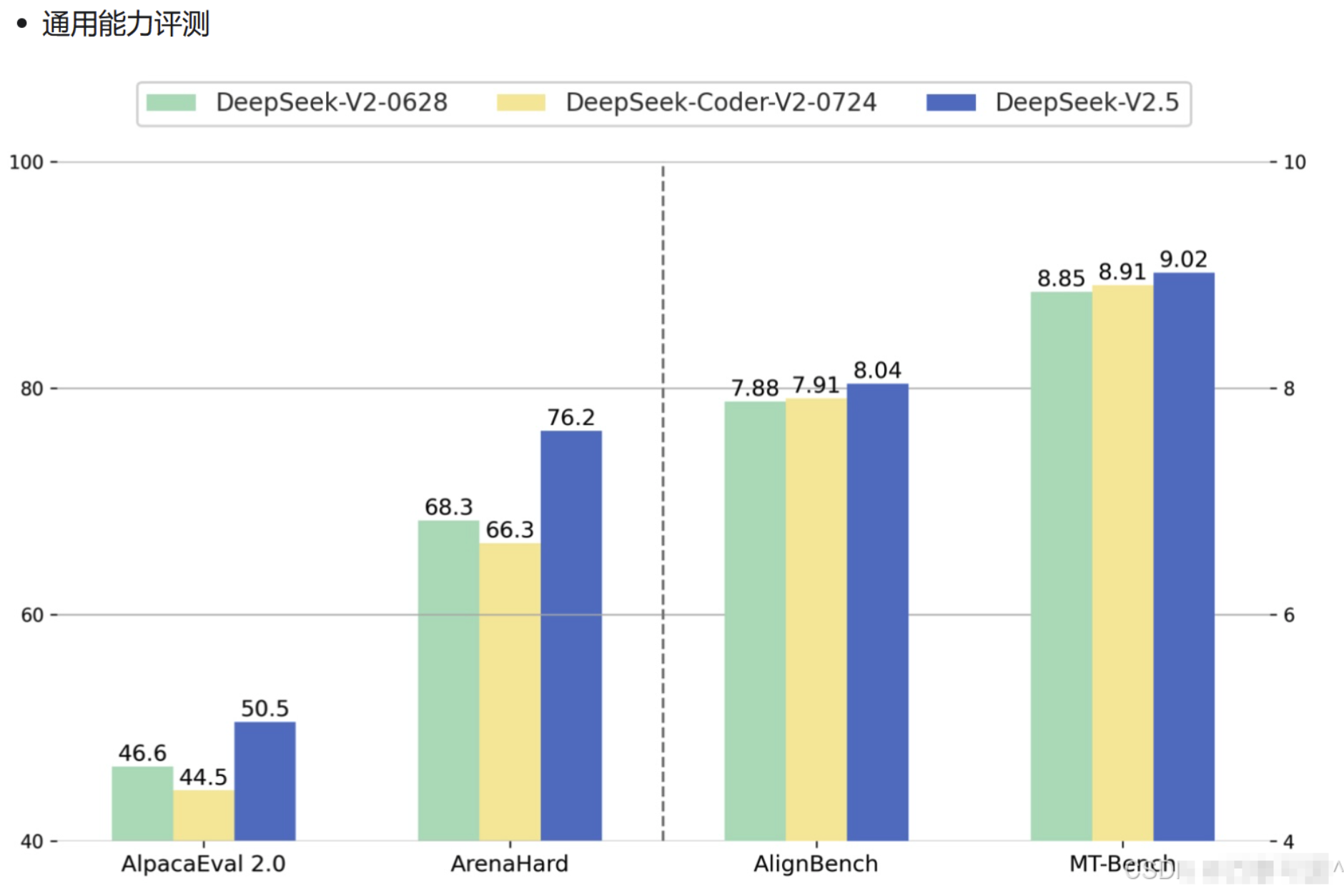

According to the official data, V2.5's general capabilities (creative writing, question answering, etc.) have improved significantly compared with the V2 model.

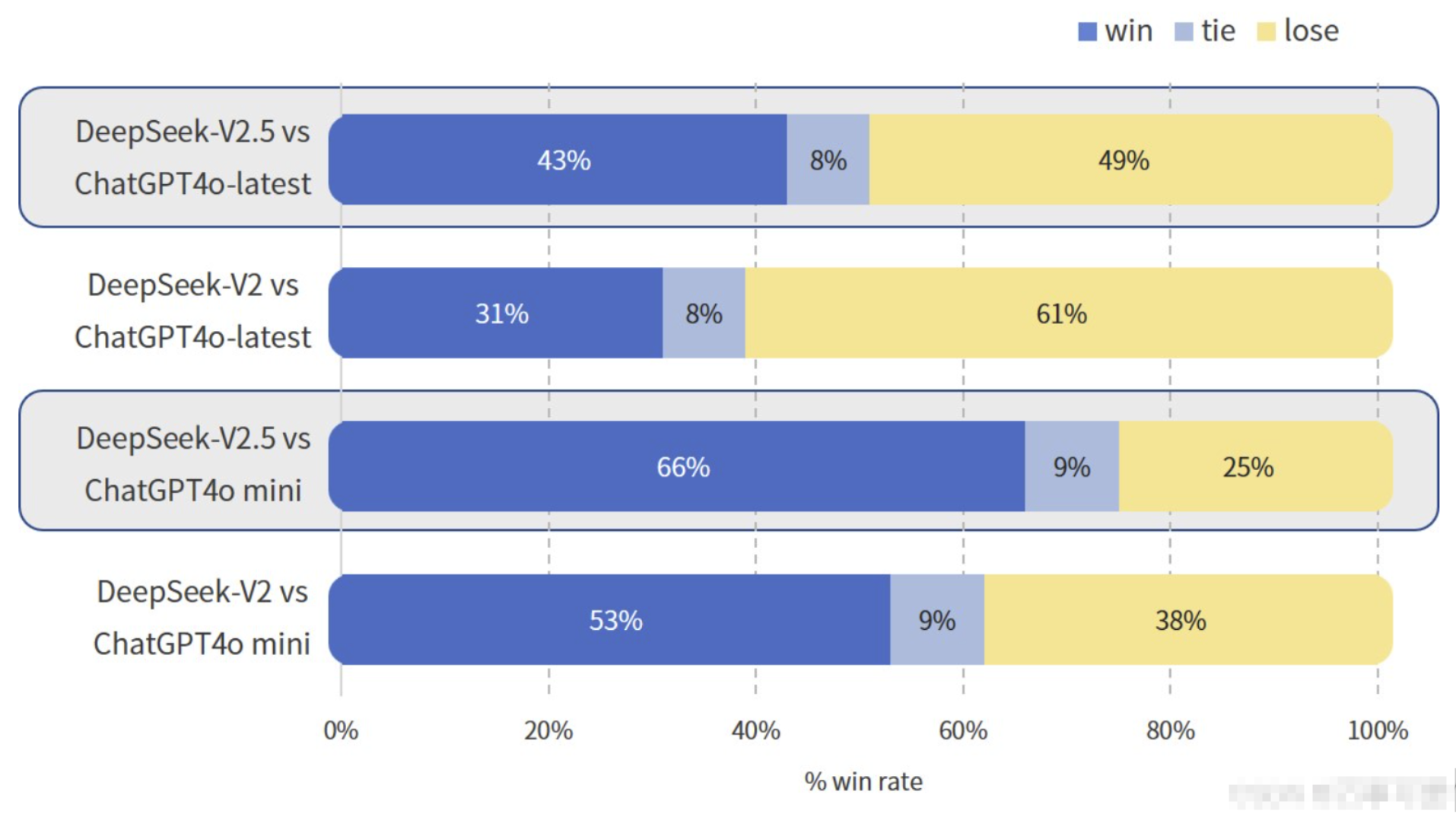

The picture compares the general capabilities of the DeepSeek-V2 and DeepSeek-V2.5 models with ChatGPT4o-latest and ChatGPT4o mini.

It shows the win, draw, and loss rates of DeepSeek-V2 and DeepSeek-V2.5 against ChatGPT4o-latest and ChatGPT4o mini:

DeepSeek-V2.5 vs ChatGPT4o-latest: DeepSeek-V2.5 has a win rate of 43%, a draw rate of 8%, and a loss rate of 49%.

DeepSeek-V2 vs ChatGPT4o-latest: DeepSeek-V2 has a win rate of 31%, a draw rate of 8%, and a loss rate of 61%.

DeepSeek-V2.5 vs ChatGPT4o mini: DeepSeek-V2.5 has a win rate of 66%, a draw rate of 9%, and a loss rate of 25%.

DeepSeek-V2 vs ChatGPT4o mini: DeepSeek-V2 has a win rate of 53%, a draw rate of 9%, and a loss rate of 38%.

In these comparisons with the ChatGPT4o series, DeepSeek-V2.5 outperforms DeepSeek-V2 overall. Both versions have relatively high win rates against ChatGPT4o mini and relatively low win rates against ChatGPT4o-latest.
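To make the size of the improvement concrete, the win, draw, and loss percentages quoted above can be put into a small script. This is only a sketch of the arithmetic; the `results` dictionary and the `win_rate_gain` helper are names chosen for illustration and are not part of any official tooling.

```python
# Win/draw/loss percentages quoted above, keyed by (DeepSeek model, opponent).
results = {
    ("DeepSeek-V2.5", "ChatGPT4o-latest"): (43, 8, 49),
    ("DeepSeek-V2", "ChatGPT4o-latest"): (31, 8, 61),
    ("DeepSeek-V2.5", "ChatGPT4o mini"): (66, 9, 25),
    ("DeepSeek-V2", "ChatGPT4o mini"): (53, 9, 38),
}


def win_rate_gain(opponent):
    """Percentage-point gain in win rate of V2.5 over V2 against one opponent."""
    new_win = results[("DeepSeek-V2.5", opponent)][0]
    old_win = results[("DeepSeek-V2", opponent)][0]
    return new_win - old_win


for (model, opponent), (win, draw, loss) in results.items():
    # Each row should account for 100% of the comparisons.
    assert win + draw + loss == 100
    print(f"{model} vs {opponent}: win minus loss = {win - loss:+d} points")

print(win_rate_gain("ChatGPT4o-latest"))  # 12
print(win_rate_gain("ChatGPT4o mini"))    # 13
```

Against both opponents the win rate rises by 12 to 13 percentage points, while the draw rate stays the same, so the gain comes almost entirely from converting losses into wins.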

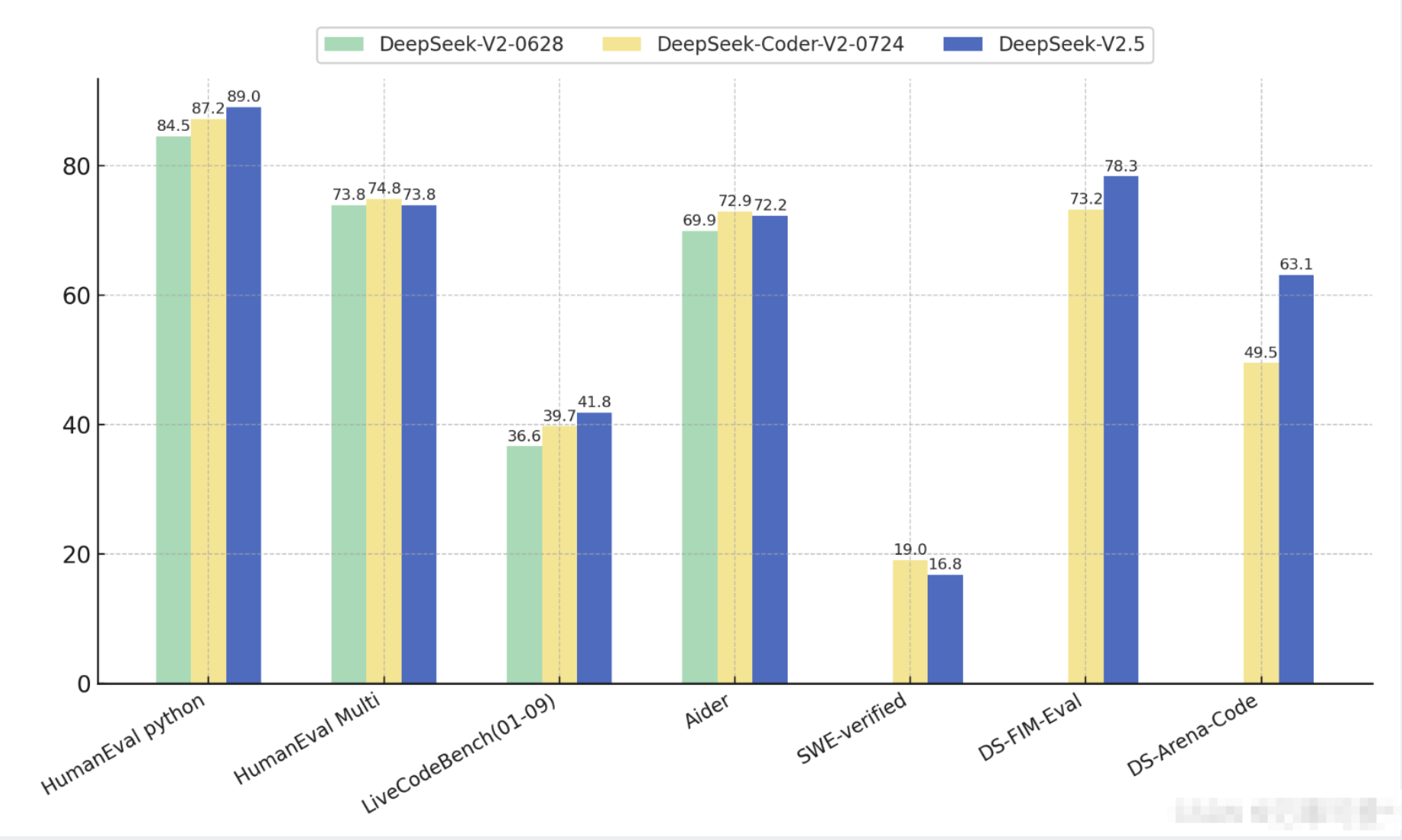

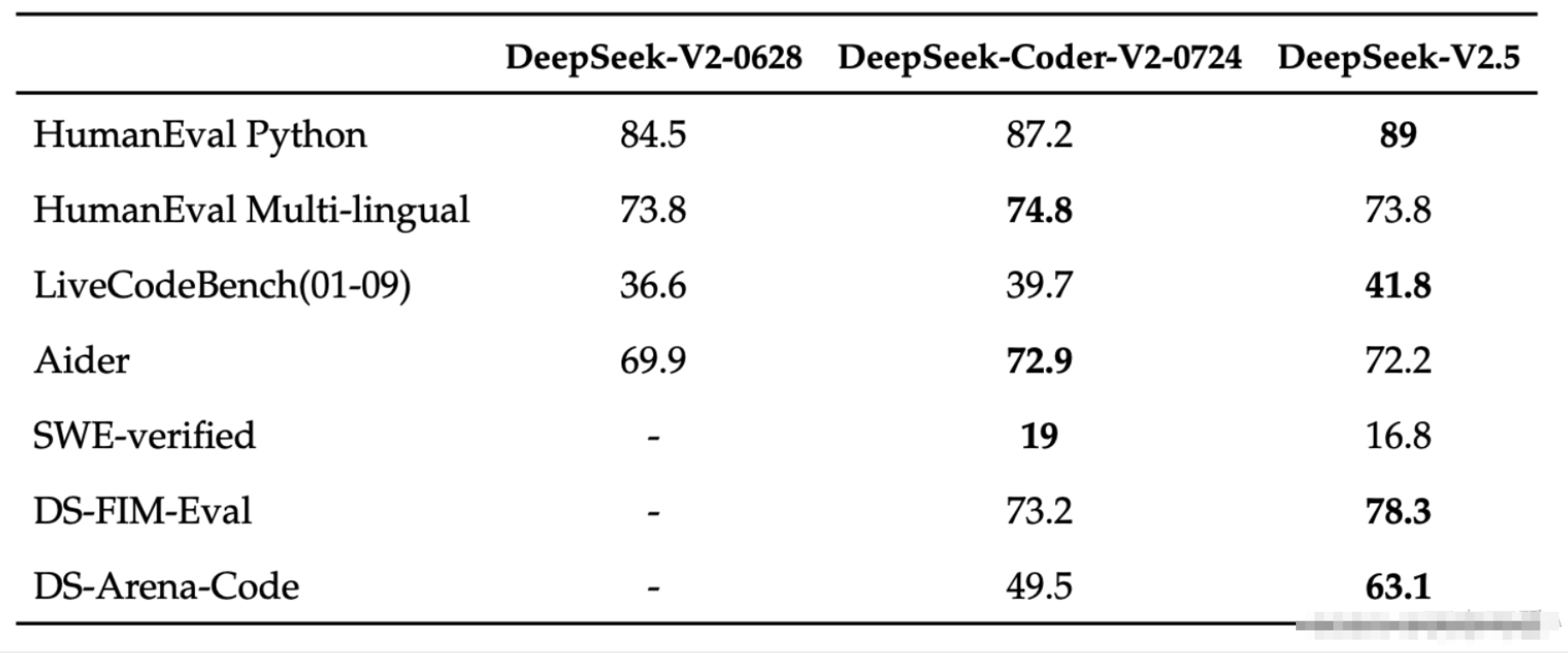

In terms of code, DeepSeek-V2.5 retains the powerful code capabilities of DeepSeek-Coder-V2-0724. In the HumanEval Python and LiveCodeBench (January 2024 - September 2024) tests, DeepSeek-V2.5 shows a significant improvement. In the HumanEval Multilingual and Aider tests, DeepSeek-Coder-V2-0724 is slightly better. In the SWE-verified test, both versions scored low, indicating that further optimization is still needed in this regard. In addition, in the FIM completion task, the score on the internal evaluation set DS-FIM-Eval improved by 5.1%, which can bring a better plug-in completion experience.
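For readers unfamiliar with FIM (fill-in-the-middle) completion, the idea is that an editor plug-in sends the code before and after the cursor, and the model generates the missing middle. The sketch below shows only how such a prompt could be assembled. The sentinel strings and function names are hypothetical placeholders, not the real special tokens or API of any DeepSeek model.

```python
# Placeholder sentinel strings: hypothetical, NOT the model's real special tokens.
FIM_BEGIN, FIM_HOLE, FIM_END = "<FIM_BEGIN>", "<FIM_HOLE>", "<FIM_END>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange the code before and after the cursor around a marked hole."""
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


def apply_completion(prefix: str, middle: str, suffix: str) -> str:
    """Splice the generated middle back between the prefix and the suffix."""
    return prefix + middle + suffix


prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(1, 2))\n"
prompt = build_fim_prompt(prefix, suffix)

# A plug-in would send `prompt` to the model; here the completion is hard-coded.
completed = apply_completion(prefix, "return a + b", suffix)
print(completed)
```

Because the model sees the suffix as well as the prefix, it can produce a middle that fits the code that follows, which is what makes in-editor completion feel natural.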

In addition, DeepSeek-V2.5 optimizes common code scenarios to improve performance in actual use. In the internal subjective evaluation DS-Arena-Code, the win rate of DeepSeek-V2.5 against competitors (with GPT-4o as the judge) has improved significantly.

2. Features:

DeepSeek-V2.5 makes several key improvements over the previous version, performing notably better in mathematical reasoning and writing. This version also adds a web search function that can analyze large amounts of web page information in real time, making the model's answers more up to date and richer in data.

3. Advantages:

Improved mathematics and writing ability: DeepSeek-V2.5 performs excellently on complex mathematical problems and creative writing, and can assist developers with more difficult tasks.

Web search function: With internet access, the model can fetch the latest web page information and analyze current online resources, improving the timeliness and breadth of its information.

4. Disadvantages:

API limitations: Although the model can search the web, the API does not support this function, which limits the practical application scenarios for some users.

Multimodal capabilities are still limited: Despite improvements in many aspects, V2.5 remains limited in multimodal tasks and cannot match specialized multimodal models.

DeepSeek-V2.5 is now open-sourced on Hugging Face:

https://huggingface.co/deepseek-ai/DeepSeek-V2.5

1. Release time:

November 20, 2024

The DeepSeek series iterates very quickly: the landmark R1-Lite model was released in November of the same year. As a precursor to the R1 model, it is not as popular as R1, but its performance as a Chinese reasoning model benchmarked against OpenAI o1 is still remarkable. The DeepSeek-R1-Lite preview model achieved outstanding results in authoritative evaluations such as AIME, the most difficult level of the American Mathematics Competitions (AMC), and Codeforces, one of the world's top programming competitions, greatly surpassing well-known models such as GPT-4o.

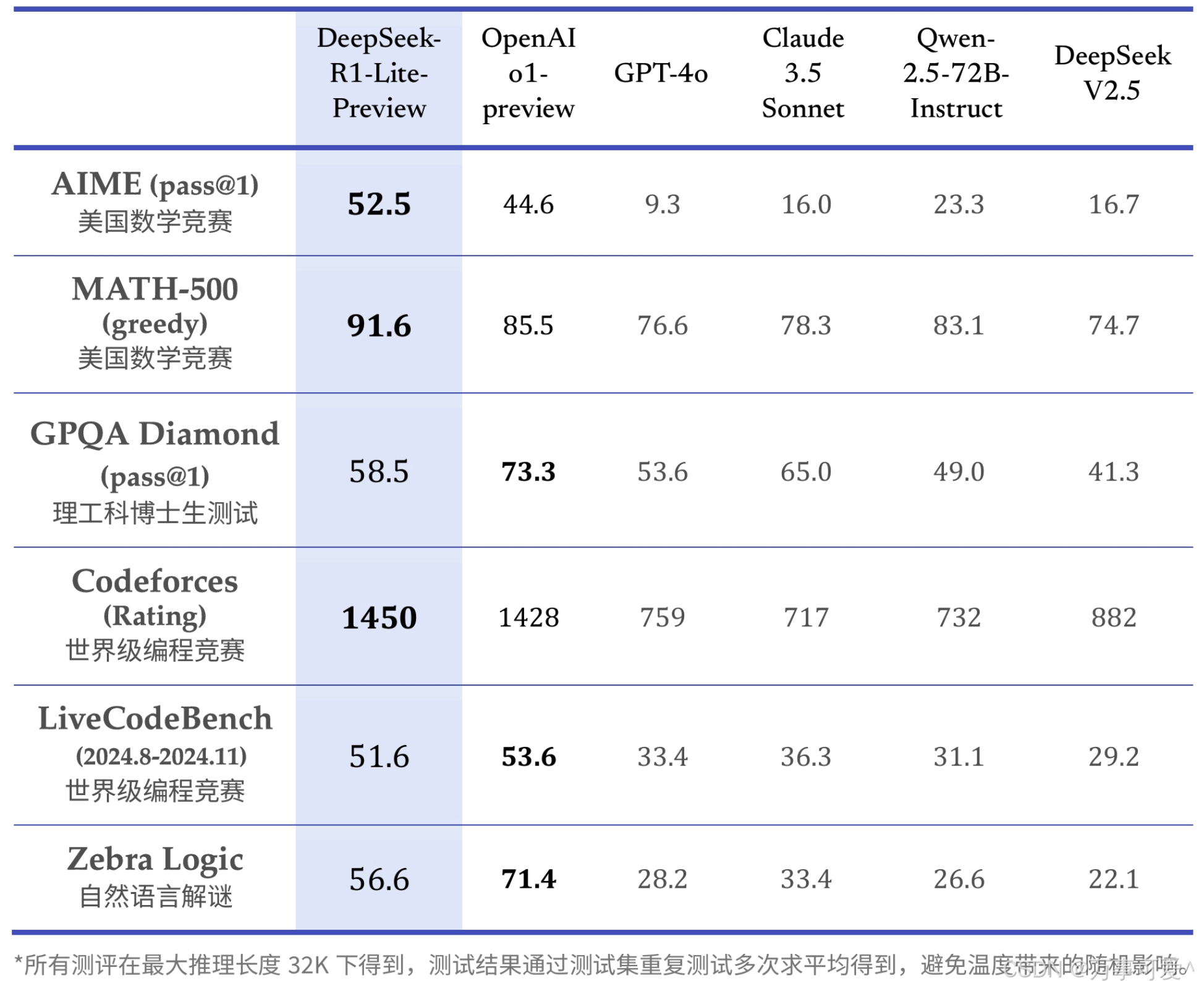

The table shows the scores of DeepSeek-R1-Lite in the relevant evaluations:

DeepSeek-R1-Lite-Preview performed outstandingly in mathematics competitions (AIME, MATH-500) and a world-class programming competition (Codeforces), and also performed well in the doctoral-level science and engineering test, another programming benchmark, and natural language puzzle solving. However, in the doctoral-level test, natural language puzzle solving, and some other tasks, OpenAI o1-preview scored better, which may be one reason DeepSeek-R1-Lite did not receive much attention.

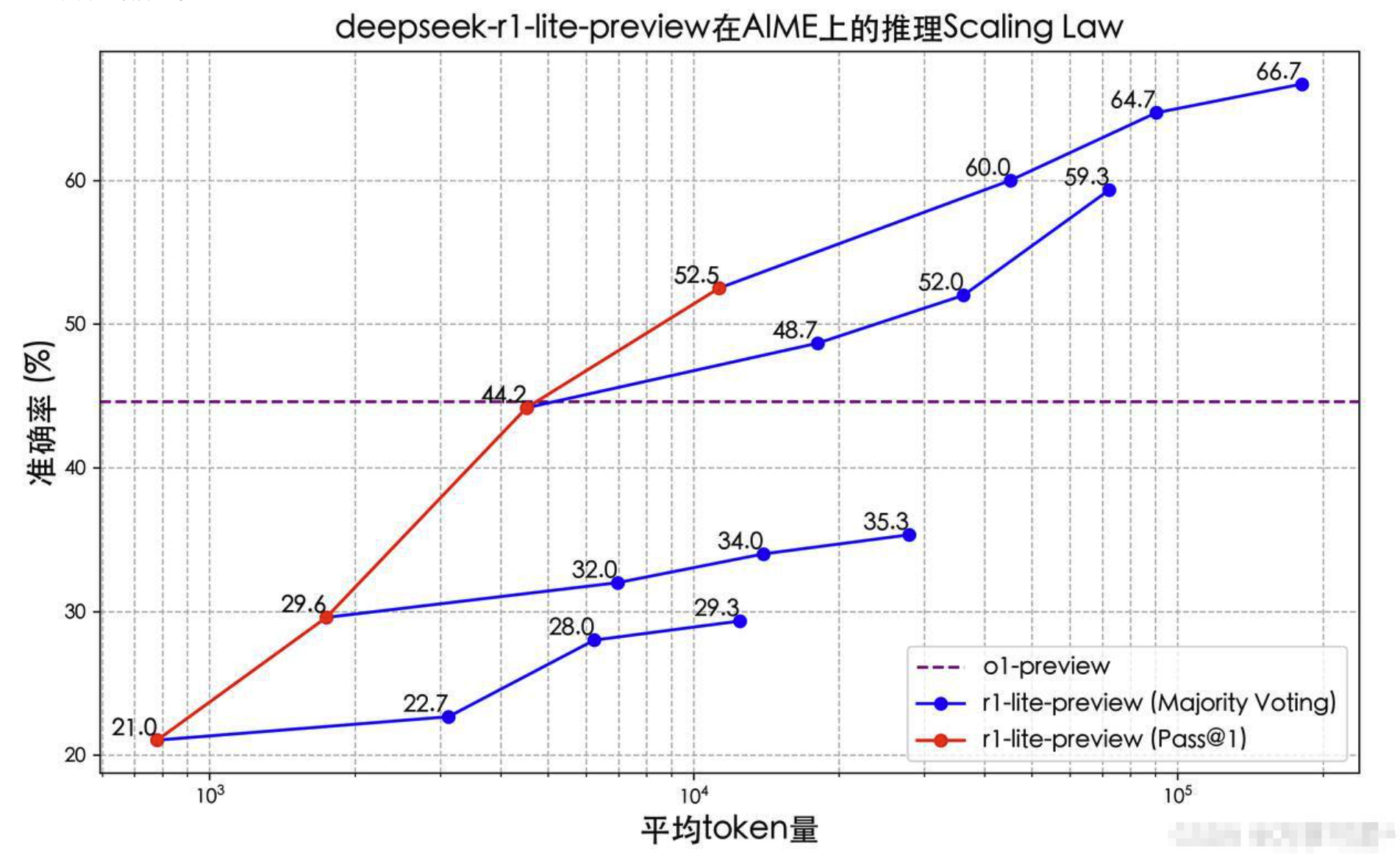

According to the official website, the reasoning process of DeepSeek-R1-Lite is long and contains a lot of reflection and verification. The figure below shows that the model's score in math competitions is closely related to the length of thinking allowed by the test.

From the above picture, we can see:

The accuracy of DeepSeek-R1-Lite-Preview increases significantly as the average number of tokens increases. When majority voting is adopted, the improvement is even more obvious, and the model ultimately exceeds the performance of OpenAI o1-preview.

In a single pass (Pass@1), the accuracy of DeepSeek-R1-Lite-Preview also exceeds OpenAI o1-preview's 44.2% once the average token count reaches a certain level.
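The difference between Pass@1 and majority voting can be illustrated with a short sketch. It assumes the model is sampled several times on the same problem and that each sample ends in a final answer string. The sample answers below are invented for illustration and are not real model outputs.

```python
from collections import Counter


def pass_at_1(samples, correct):
    """Fraction of sampled answers that are individually correct,
    i.e. the expected accuracy when only one sample is drawn."""
    return sum(s == correct for s in samples) / len(samples)


def majority_vote(samples):
    """Return the most frequent answer among the samples."""
    return Counter(samples).most_common(1)[0][0]


# Hypothetical final answers from five samples on one problem.
samples = ["204", "204", "198", "204", "210"]
correct = "204"

print(pass_at_1(samples, correct))        # 0.6
print(majority_vote(samples) == correct)  # True
```

In this toy case a single sample is right only 60% of the time, but the voted answer is right, which is why majority voting tends to score higher than Pass@1 whenever the correct answer is the most common one.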

2. Features

Trained with reinforcement learning, its reasoning process includes a lot of reflection and verification, and the chain of thought can reach tens of thousands of words. It has advantages in tasks such as mathematics and programming that require long logical chains. In mathematics, code, and various complex logical reasoning tasks, it achieves reasoning performance comparable to that of o1, and it shows the complete thinking process, which o1 does not disclose. It is currently available for free on the official DeepSeek website.

3. Advantages

Strong reasoning ability: It excels at some difficult math and code tasks, surpassing existing top models in tests such as the American Mathematics Competitions (AMC) and the Codeforces programming competition, and even surpassing OpenAI's o1 on some tasks. For example, in a cipher decryption test, it successfully cracked a cipher that relies on complex logic, while o1-preview failed to answer correctly.

Detailed thinking process: When answering questions, it provides not only the answer but also a detailed thinking process and a verification step that works backward from the result, showing the rigor of its logical reasoning.

High cost-effectiveness: DeepSeek's products are mainly open source, and its model training costs are much lower than those of mainstream models in the industry, giving it a significant cost-effectiveness advantage.

4. Disadvantages

Unstable code generation: It sometimes performs below expectations when generating relatively simple code.

Insufficient knowledge citation ability: It fails to achieve satisfactory results on some complex tests that require citing up-to-date knowledge.

Language mixing problems: Chinese and English may be mixed in its thinking and output during use.

1. Release time:

December 26, 2024

As a Mixture-of-Experts (MoE) model independently developed by DeepSeek, it has 671 billion total parameters, of which 37 billion are activated, and was pre-trained on 14.8 trillion tokens.

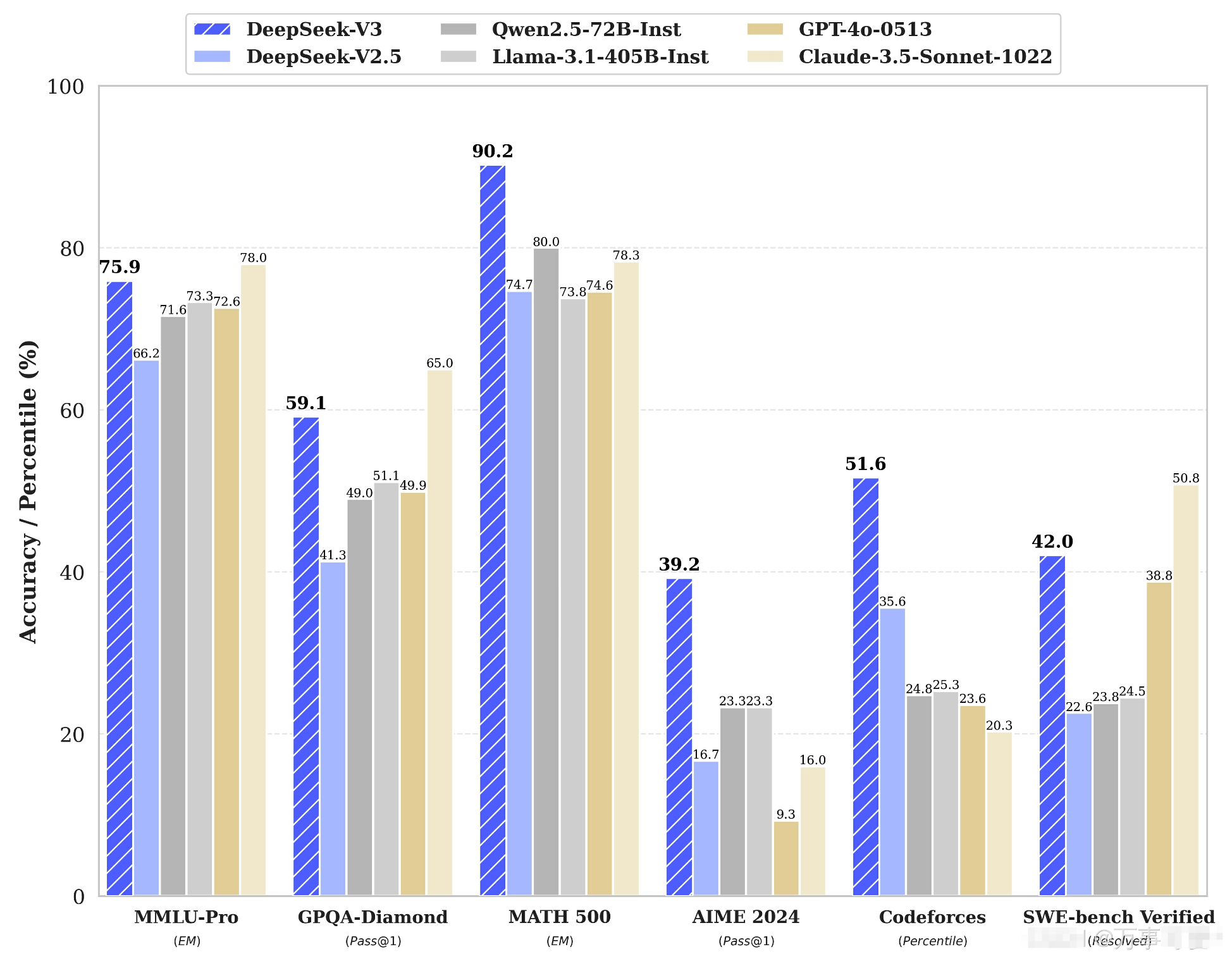

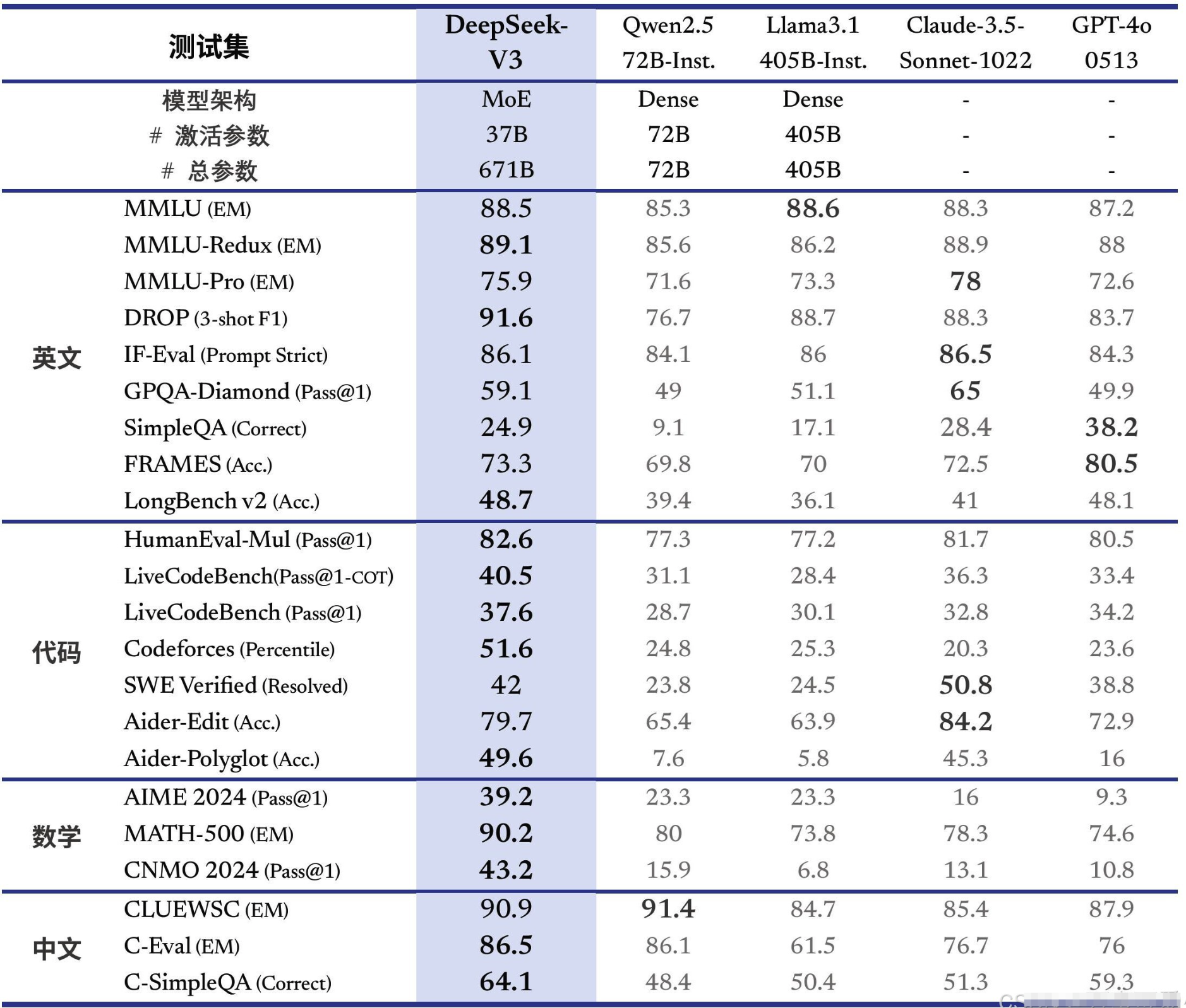

DeepSeek-V3 surpasses other open-source models such as Qwen2.5-72B and Llama-3.1-405B in multiple evaluations, and its performance is on par with the world's top closed-source models, GPT-4o and Claude-3.5-Sonnet.

DeepSeek-V3 performed outstandingly in the MMLU-Pro, MATH 500, and Codeforces tests, and also performed well in the GPQA Diamond and SWE-bench Verified tasks. On AIME 2024, its score of 39.2 is also well above the 9.3 listed for GPT-4o-0513 in the distillation comparison later in this article.

As can be seen from the above table, this comparison involves models such as DeepSeek-V3, Qwen2.5-72B-Inst, Llama3.1-405B-Inst, Claude-3.5-Sonnet-1022, and GPT-4o-0513. It is analyzed below in terms of model architecture, parameters, and performance on each test set:

Model architecture and parameters

DeepSeek-V3: MoE architecture, 37B activated parameters, 671B total parameters.

Qwen2.5-72B-Inst: Dense architecture, 72B activated parameters, 72B total parameters.

Llama3.1-405B-Inst: Dense architecture, 405B activated parameters, 405B total parameters.

The other two models have not disclosed this information.
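The gap between activated and total parameters comes from expert routing: for each token, a router selects only a few experts to run. The following is a minimal sketch of generic top-k gating in plain Python. It is an illustration under simplifying assumptions (toy scalar "experts", softmax weights over the selected experts) and is not DeepSeek-V3's actual router; all names are hypothetical.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their softmax weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    exps = [math.exp(router_logits[i]) for i in top]
    total = sum(exps)
    return {i: e / total for i, e in zip(top, exps)}


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Four toy "experts" (hypothetical): each simply scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]

weights = top_k_route(logits, k=2)  # experts 1 and 3 tie, so each gets 0.5
output = moe_forward(10.0, experts, logits, k=2)
print(weights, output)

# Share of DeepSeek-V3's parameters that is active per token (37B of 671B).
active_fraction = 37 / 671
print(f"{active_fraction:.1%}")  # 5.5%
```

Only two of the four toy experts run for this input, which mirrors how an MoE model with 671B total parameters can activate only about 5.5% of them per token, while a dense model such as Llama3.1-405B-Inst activates all of its parameters every time.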

English test set performance

MMLU related: DeepSeek-V3 scored 88.5, 89.1, and 75.9 in the MMLU (EM), MMLU-Redux (EM), and MMLU-Pro (EM) tests, respectively, close to the other models.

DROP: DeepSeek-V3 scored 91.6, leading the other models.

IF-Eval: DeepSeek-V3 scored 86.1, which is comparable to the other models.

GPQA-Diamond: DeepSeek-V3 scored 59.1, second only to Claude-3.5-Sonnet-1022's 65.

SimpleQA and others: In SimpleQA, FRAMES, LongBench v2, and other tests, DeepSeek-V3's performance varies, for example 24.9 on SimpleQA and 73.3 on FRAMES.

Code test set performance

HumanEval-Mul: DeepSeek-V3 scored 82.6, a strong result.

LiveCodeBench: In the LiveCodeBench (Pass@1-COT) and LiveCodeBench (Pass@1) tests, DeepSeek-V3 scored 40.5 and 37.6 respectively.

Codeforces and others: DeepSeek-V3 scored 51.6 in the Codeforces (Percentile) test and 42 in SWE-bench Verified (Resolved).

Mathematical test set performance

AIME 2024: DeepSeek-V3 scored 39.2, higher than Qwen2.5-72B-Inst, Llama3.1-405B-Inst, and Claude-3.5-Sonnet-1022.

MATH-500: DeepSeek-V3 scored 90.2, a clear lead.

Chinese test set performance

CLUEWSC: DeepSeek-V3 scored 90.9, which is close to the other models.

C-Eval and others: In the C-Eval and C-SimpleQA tests, DeepSeek-V3 scored 86.5 and 64.1 respectively.

Overall, DeepSeek-V3 performs well across multiple test sets, with clear advantages in tests such as DROP and MATH-500, and with varying strengths and weaknesses across test sets in different languages and fields.

2. Features:

DeepSeek-V3 is a milestone version in the series, with 671 billion parameters and a focus on knowledge-based tasks and mathematical reasoning, where its performance improves significantly. V3 introduces native FP8 weights, supports local deployment, and greatly improves inference speed, raising the generation speed from 20 TPS to 60 TPS (tokens per second) to meet the needs of large-scale applications.
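As a rough illustration of what the move from 20 TPS to 60 TPS means for a user, the sketch below estimates how long a reply takes to generate at each speed. The 600-token reply length is an assumed example value, and the calculation ignores network latency and prompt processing time.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream a reply of num_tokens at a constant generation speed."""
    return num_tokens / tokens_per_second


reply_tokens = 600  # hypothetical reply length

before = generation_seconds(reply_tokens, 20)
after = generation_seconds(reply_tokens, 60)

print(before)          # 30.0
print(after)           # 10.0
print(before / after)  # 3.0
```

Under these assumptions the same reply arrives in a third of the time, which is the difference between a noticeable wait and a near-interactive response.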

3. Advantages:

Strong reasoning ability: With 671 billion parameters, DeepSeek-V3 shows outstanding performance in knowledge reasoning and mathematical tasks.

High generation speed: A generation speed of 60 tokens per second (TPS) allows V3 to serve application scenarios that demand fast responses.

Local deployment support: Because the FP8 weights are open source, users can deploy the model locally, reducing their dependence on cloud services and improving data privacy.
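To give a sense of scale for local deployment, the sketch below estimates the storage needed for the weights alone. It relies only on the fact that an FP8 value occupies 1 byte and a 16-bit value occupies 2 bytes. It deliberately ignores the KV cache, activations, and any runtime overhead, so real memory requirements are higher.

```python
def weight_gigabytes(num_params: float, bytes_per_param: float) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


total_params = 671e9  # total parameter count quoted above

fp8_gb = weight_gigabytes(total_params, 1)   # FP8: 1 byte per parameter
bf16_gb = weight_gigabytes(total_params, 2)  # 16-bit: 2 bytes per parameter

print(fp8_gb)   # 671.0
print(bf16_gb)  # 1342.0
```

Even in FP8 the weights take roughly 671 GB, so "local deployment" here still means a multi-GPU server rather than a personal computer; the FP8 format simply halves the footprint compared with 16-bit weights.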

4. Disadvantages:

High resource requirements: Although inference capability has greatly improved, V3 requires a large amount of GPU resources, which makes it costly to train and deploy.

Weak multimodal capability: Like the previous versions, V3 has not been specially optimized for multimodal tasks (such as image understanding) and still has shortcomings there.

The following is a link to the V3 paper for reference and study.

Paper link: https://github.com/deepseek-ai/DeepSeek-V3/blob/main/DeepSeek_V3.pdf

1. Release time:

January 20, 2025

The highly anticipated DeepSeek-R1 finally arrived after a long wait. Since its release, DeepSeek-R1 has adhered to the open-source principle and follows the MIT License, allowing users to train other models with the help of R1 through distillation.

This has the following two effects:

Open-source license level

The MIT License is a permissive open-source software license. This means that DeepSeek-R1 takes a very open attitude toward developers and users. Provided they follow the terms of the MIT License, users have great freedom:

Free use: The DeepSeek-R1 model can be used freely in personal projects, commercial projects, and other scenarios, without worrying about license restrictions on the usage scenario.

Free modification: The code, model architecture, etc. of DeepSeek-R1 can be modified and customized to meet specific business needs or research purposes.

Free distribution: Modified or unmodified versions of DeepSeek-R1 can be distributed, whether for free or as part of commercial products.

Model training and technical application level

Allowing users to train other models with the help of R1 through distillation has high technical value and application potential:

Model lightweighting: Distillation can transfer the knowledge of the large DeepSeek-R1 model to small models. Developers can train lighter and more efficient models and deploy them on resource-constrained devices (such as mobile and embedded devices) to achieve real-time inference without relying on the powerful computing resources needed to run the original DeepSeek-R1 model.

Personalized customization: Users can use DeepSeek-R1 as the basis for distillation and train models better suited to their specific tasks, such as text classification in a particular domain, thereby achieving a better balance between performance and resource consumption and improving the model's performance in specific scenarios.

Promoting technological innovation: This approach gives researchers and developers a powerful tool and starting point, encouraging more people to explore and innovate based on DeepSeek-R1, accelerating the application and development of artificial intelligence in various fields, and promoting technological progress across the industry.
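One simple way to picture output-based distillation is as a data-building step: the large teacher model answers a set of problems, and its reasoning and answers become fine-tuning data for a small student model. The sketch below shows that step only. The `teacher_generate` function is a hypothetical stand-in with canned outputs, and filtering by a reference answer is an assumption made for illustration, not a description of DeepSeek's actual pipeline.

```python
def teacher_generate(question):
    """Hypothetical stand-in for querying the large teacher model.
    Returns (reasoning text, final answer) from canned examples."""
    canned = {
        "2 + 3": ("Add the two numbers: 2 + 3 = 5.", "5"),
        "7 * 6": ("Multiply: 7 * 6 = 41.", "41"),  # deliberately wrong
    }
    return canned[question]


def build_distillation_set(problems):
    """Keep only teacher outputs whose final answer matches the reference."""
    records = []
    for question, reference in problems:
        reasoning, answer = teacher_generate(question)
        if answer == reference:
            records.append({
                "prompt": question,
                "completion": f"{reasoning}\nAnswer: {answer}",
            })
    return records


problems = [("2 + 3", "5"), ("7 * 6", "42")]
dataset = build_distillation_set(problems)
print(dataset)
```

The resulting records would then be used for ordinary supervised fine-tuning of the smaller model, so the student learns to imitate the teacher's step-by-step reasoning rather than only its final answers.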

In addition, DeepSeek-R1 exposes its chain-of-thought output to users. It can be called by setting model='deepseek-reasoner', which is a great convenience for individual users who are interested in large models.
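A request using this model name might be assembled as in the sketch below. Only the `model='deepseek-reasoner'` setting comes from the text above. The endpoint URL, the chat-completion style payload, and the `reasoning_content` response field are assumptions that should be checked against the official API documentation, and the response shown is hand-written rather than real model output. No network request is made.

```python
import json

# Assumed endpoint; verify against the official API documentation.
API_URL = "https://api.deepseek.com/chat/completions"


def build_reasoner_request(question: str) -> dict:
    """Build a chat-completion style payload that selects the reasoning model."""
    return {
        "model": "deepseek-reasoner",
        "messages": [{"role": "user", "content": question}],
    }


def split_reply(response: dict):
    """Return (chain of thought, final answer) from a response dictionary.
    The 'reasoning_content' field name is an assumption."""
    message = response["choices"][0]["message"]
    return message.get("reasoning_content", ""), message.get("content", "")


payload = build_reasoner_request("Which is larger, 9.11 or 9.8?")
body = json.dumps(payload)  # what would be sent in the POST body

# Hand-written example response, not real model output.
fake_response = {"choices": [{"message": {
    "reasoning_content": "Compare 9.11 and 9.80 digit by digit...",
    "content": "9.8 is larger.",
}}]}

thinking, answer = split_reply(fake_response)
print(thinking)
print(answer)
```

Keeping the chain of thought separate from the final answer lets an application show or hide the reasoning as needed.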

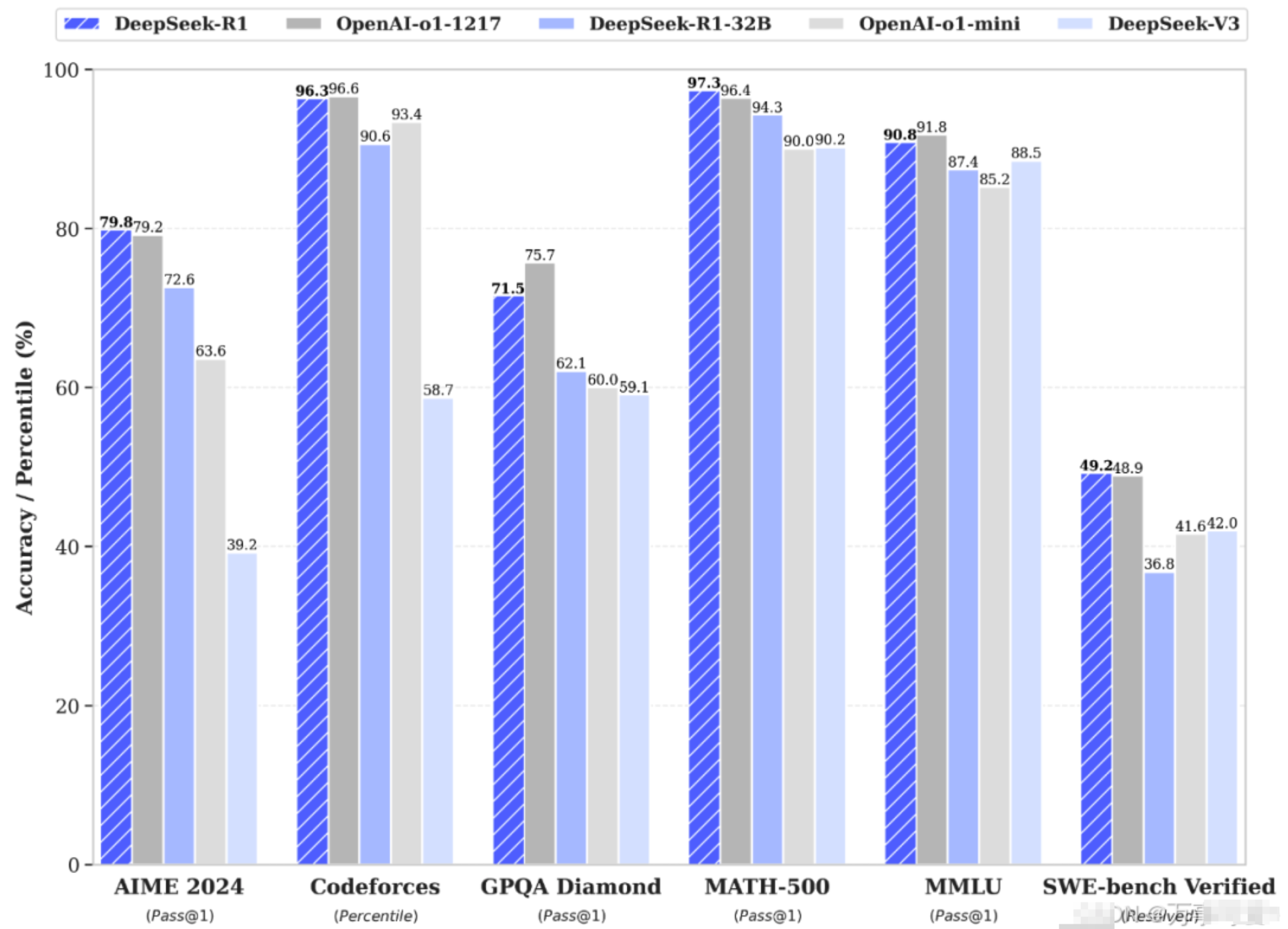

According to the official website, DeepSeek-R1 used reinforcement learning on a large scale in the post-training stage, which greatly improved the model's reasoning ability with only a very small amount of labeled data. In mathematics, code, natural language reasoning, and other tasks, its performance is comparable to the official version of OpenAI o1.

As can be seen from the above figure, DeepSeek-R1 or DeepSeek-R1-32B performed well in the Codeforces, MATH-500, and SWE-bench Verified tests; OpenAI-o1-1217 performed well in the AIME 2024, GPQA Diamond, and MMLU tests.

In the comparison of small models, however, the models distilled from R1 surpass OpenAI o1-mini.

Alongside open-sourcing the two 660B models, DeepSeek-R1-Zero and DeepSeek-R1, DeepSeek used the output of DeepSeek-R1 to distill 6 small models and open-sourced them to the community. Among them, the 32B and 70B models match OpenAI o1-mini in multiple capabilities.

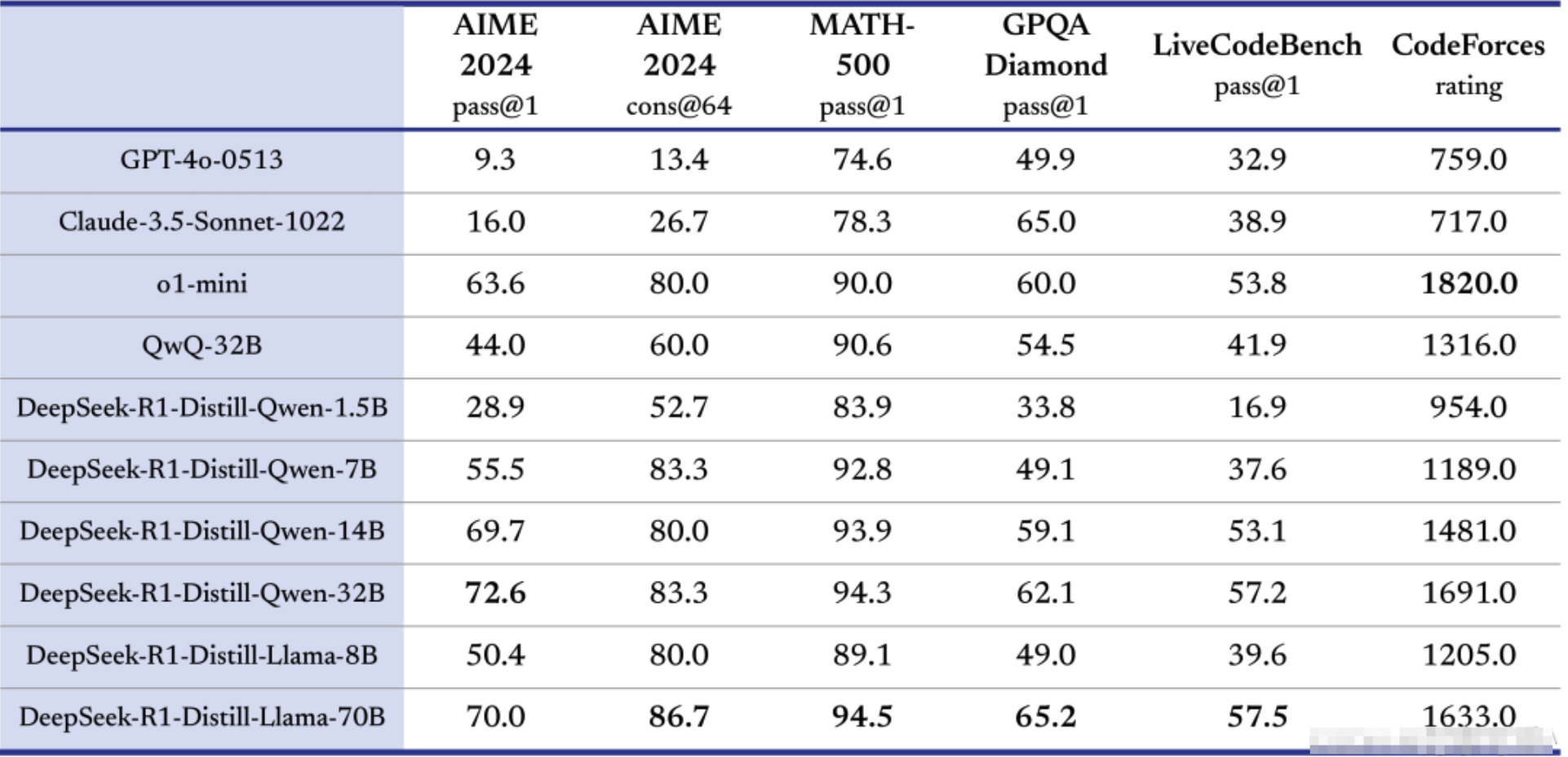

The above table compares the performance of different models on multiple test sets. The test sets include AIME 2024, MATH-500, etc. The models include GPT-4o-0513, Claude-3.5-Sonnet-1022, etc., as well as a series of models distilled from DeepSeek-R1. The details are as follows:

Model and performance

GPT-4o-0513: Its scores are relatively balanced across the test sets, for example 9.3 on AIME 2024 pass@1 and a CodeForces rating of 759.0.

Claude-3.5-Sonnet-1022: Its performance is relatively stable across the tests, for example 16.0 on AIME 2024 pass@1 and a CodeForces rating of 717.0.

o1-mini: Outstanding performance on multiple test sets, especially a CodeForces rating of 1820.0.

QwQ-32B: It performs reasonably on the different test sets, for example 90.6 on MATH-500 pass@1.

DeepSeek-R1-Distill-Qwen series: As the parameter count increases (from 1.5B to 32B), results improve on most test sets; for example, DeepSeek-R1-Distill-Qwen-32B scored 94.3 on MATH-500 pass@1, exceeding DeepSeek-R1-Distill-Qwen-1.5B's 83.9.

DeepSeek-R1-Distill-Llama series: It performed well in multiple tests; DeepSeek-R1-Distill-Llama-70B scored 94.5 on MATH-500 pass@1.

Summary

From the table, o1-mini has a clear advantage in CodeForces rating. The larger models distilled from DeepSeek-R1 (such as DeepSeek-R1-Distill-Qwen-32B and DeepSeek-R1-Distill-Llama-70B) performed well on mathematics and programming test sets, which suggests that distillation from DeepSeek-R1 improves model performance. Different models have different strengths on each test set.

2. Features:

DeepSeek-R1 is the latest version in the series and optimizes the model's reasoning ability through reinforcement learning (RL). The reasoning capabilities of R1 are close to those of OpenAI's o1. It follows the MIT License and supports model distillation, further promoting the healthy development of the open-source ecosystem.

3. Advantages:

Reasoning ability optimized by reinforcement learning: Using reinforcement learning, R1 shows stronger performance on reasoning tasks than other versions.

Open-source support and scientific research applications: R1 is completely open source, which lets researchers and developers build on it and promotes the rapid progress of AI technology.

4. Disadvantages:

Insufficient multimodal capability: Despite significant improvements in reasoning, support for multimodal tasks has not been fully optimized.

Limited application scenarios: R1 is mainly aimed at scientific research, technology development, and education, and its applicable scenarios in commercial applications and practical operations are relatively narrow.

The continuous iteration of the DeepSeek series reflects its steady progress in natural language processing, reasoning capabilities, and application ecosystem. Each version has its own advantages and applicable scenarios, and users can choose the most suitable version according to their needs. As the technology develops, DeepSeek may continue to make breakthroughs in multimodal support, reasoning capabilities, and other areas, which is worth looking forward to.