Tip: This guide walks through the steps for deploying DeepSeek locally on the Kylin system (Kylin-Desktop-V10-SP1). (Note: because this experiment runs in a virtual machine with a low hardware configuration, it deploys DeepSeek-R1 1.5b as an example. To deploy another version, replace the parameter 1.5b in the commands with the corresponding version number.)

DeepSeek (its Chinese name means "in-depth search") is an artificial intelligence model developed by a Chinese AI company. It has strong natural language processing capabilities: it can understand and answer questions, and it can also help write code, organize data, and solve complex mathematical problems.

2.1 Main advantages of local deployment

① Offline use: once deployed, the model does not depend on a network connection, so you can use its AI capabilities anytime and anywhere. This is especially useful where the network is unstable or unavailable.

② Data security: deploying the model locally keeps data on the local device and reduces the risk of data leakage. This suits scenarios with strict data compliance requirements, such as finance and government affairs.

2.2 Main disadvantages of local deployment

① High hardware requirements: the larger versions (such as 671b) need a high-performance GPU and sufficient memory to run.

② Resource usage: local deployment consumes local computing resources and storage space, so it may not suit devices with limited resources.

③ Update lag: the model must be updated manually, so a local copy may lag behind the cloud service and may not get the latest features promptly.
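To put the hardware requirement in numbers, a common rule of thumb is that the model weights alone take about (parameter count × bits per weight ÷ 8) bytes. The sketch below assumes 4-bit quantized weights; the real quantization behind each Ollama tag, plus context (KV cache) and runtime overhead, will push actual memory use higher, so treat the result as a lower bound. The function name `estimate_weights_gb` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: rough lower bound on memory for the model weights alone.
#   $1 = parameter count in billions (e.g. 1.5 or 671)
#   $2 = bits per weight (assumed 4, i.e. 4-bit quantization, if omitted)
# 1e9 parameters * (bits / 8) bytes = params_in_billions * bits / 8 GB (decimal GB).
estimate_weights_gb() {
  awk -v p="$1" -v b="${2:-4}" 'BEGIN { printf "%.2f\n", p * b / 8 }'
}

estimate_weights_gb 1.5   # 1.5b version used in this experiment: prints 0.75
estimate_weights_gb 671   # 671b version: prints 335.50
```

Under this assumption the 1.5b version needs roughly 0.75 GB for its weights, while the 671b version needs roughly 335.5 GB, which is why the larger versions call for high-performance hardware.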

1.1 Install the Kylin system

Note: This experiment uses Kylin-Desktop-V10-SP1.

1.2 Connect to the Internet

Note: An Internet connection is required for the first deployment, because Ollama and the model are downloaded during setup.
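Because the first deployment downloads both Ollama and the model, it can help to check connectivity before starting. The sketch below assumes `curl` is the download tool (as in the install command in section 2.1) and uses https://ollama.com/ as the reachability target; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical preflight check for the first deployment.

# Succeeds if the named command is available on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Succeeds if the Ollama website answers within 10 seconds.
# -f: treat HTTP errors as failures, -sS: quiet but still report errors.
can_reach_ollama() {
  curl -fsS --max-time 10 -o /dev/null https://ollama.com/
}

preflight() {
  have_cmd curl || { echo "curl is not installed" >&2; return 1; }
  can_reach_ollama || { echo "cannot reach https://ollama.com/" >&2; return 1; }
  echo "ready for the first deployment"
}

# Usage: preflight
```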

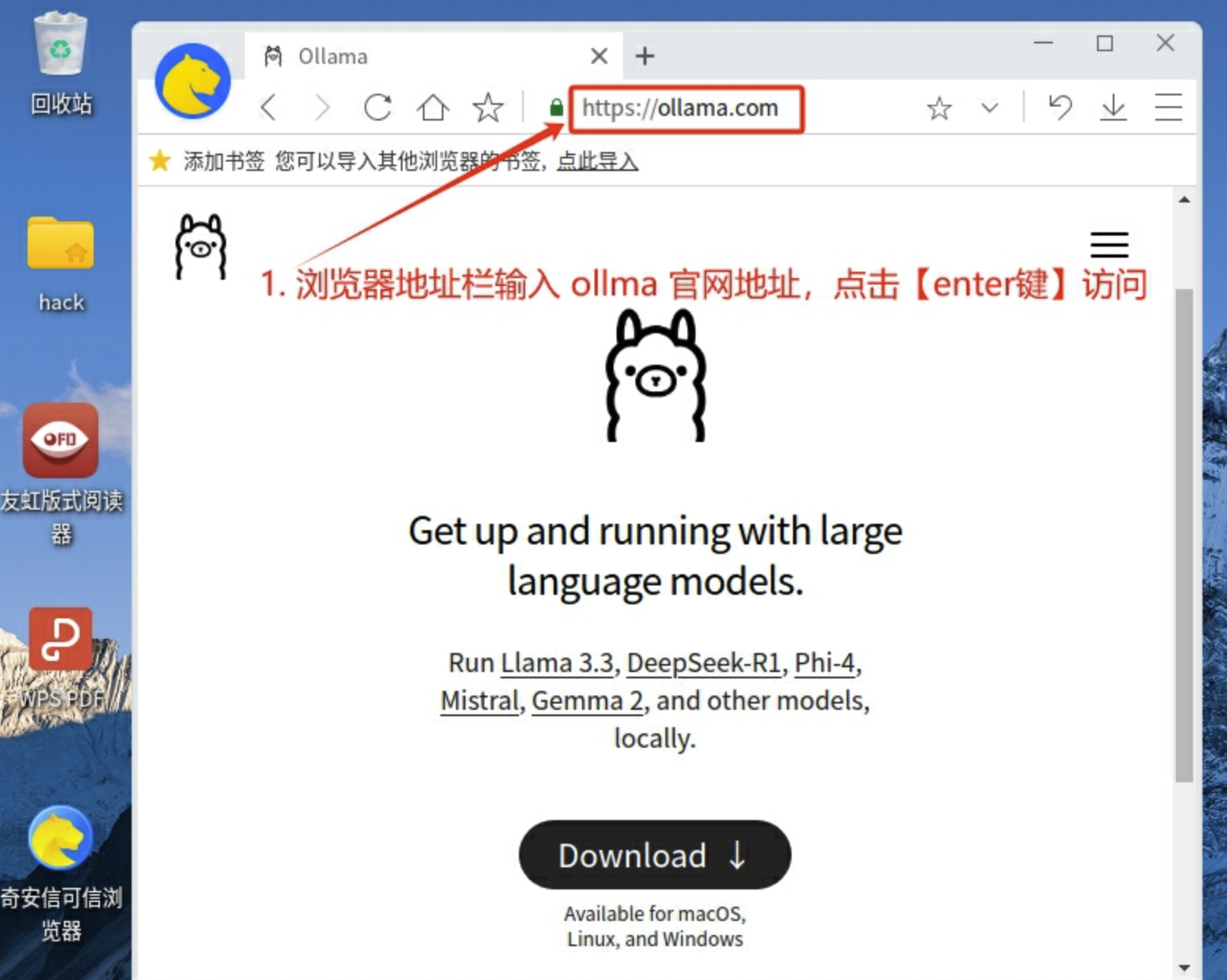

2.1 Download Ollama

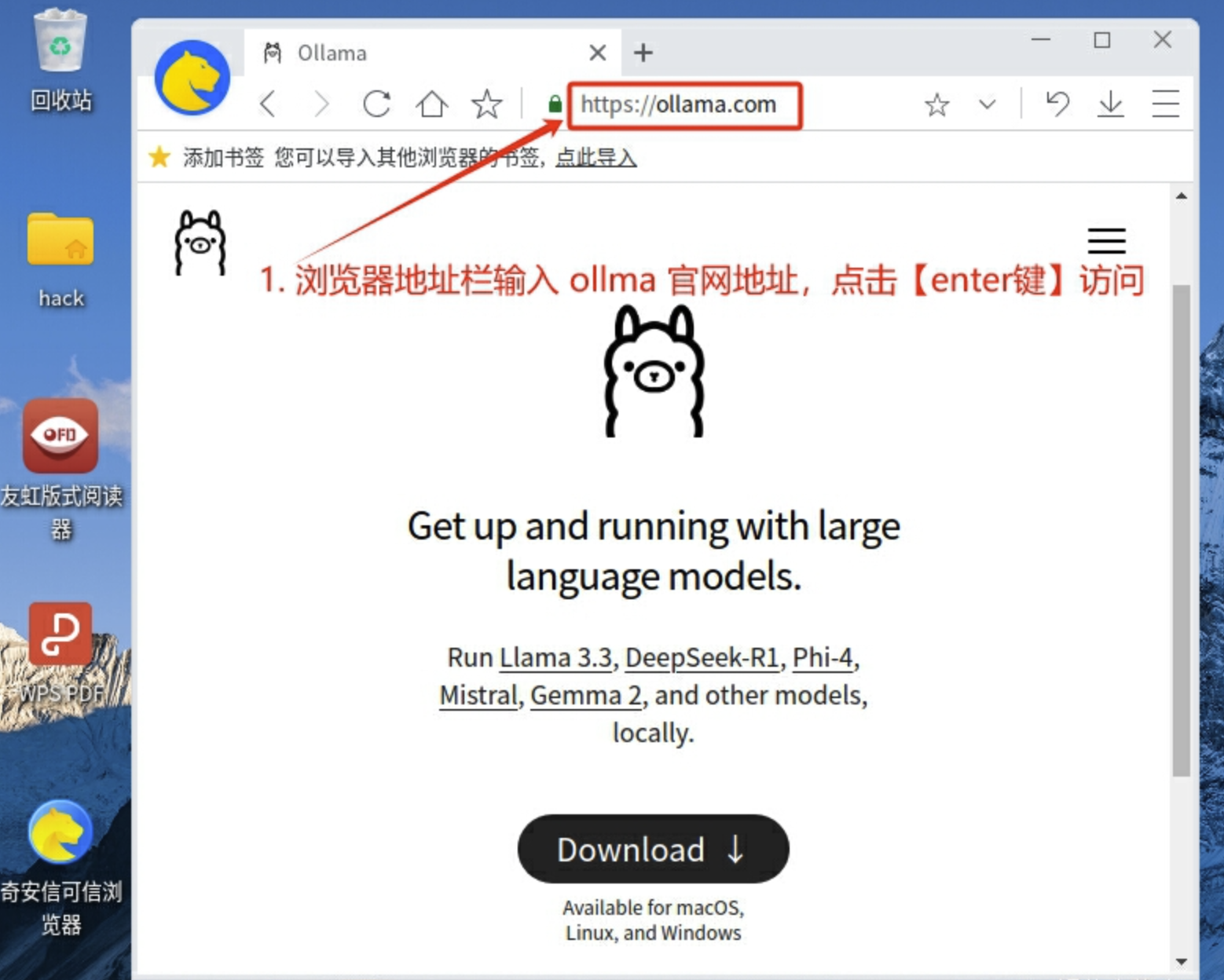

1. Visit the [Ollama official website]

Ollama official website: https://ollama.com/

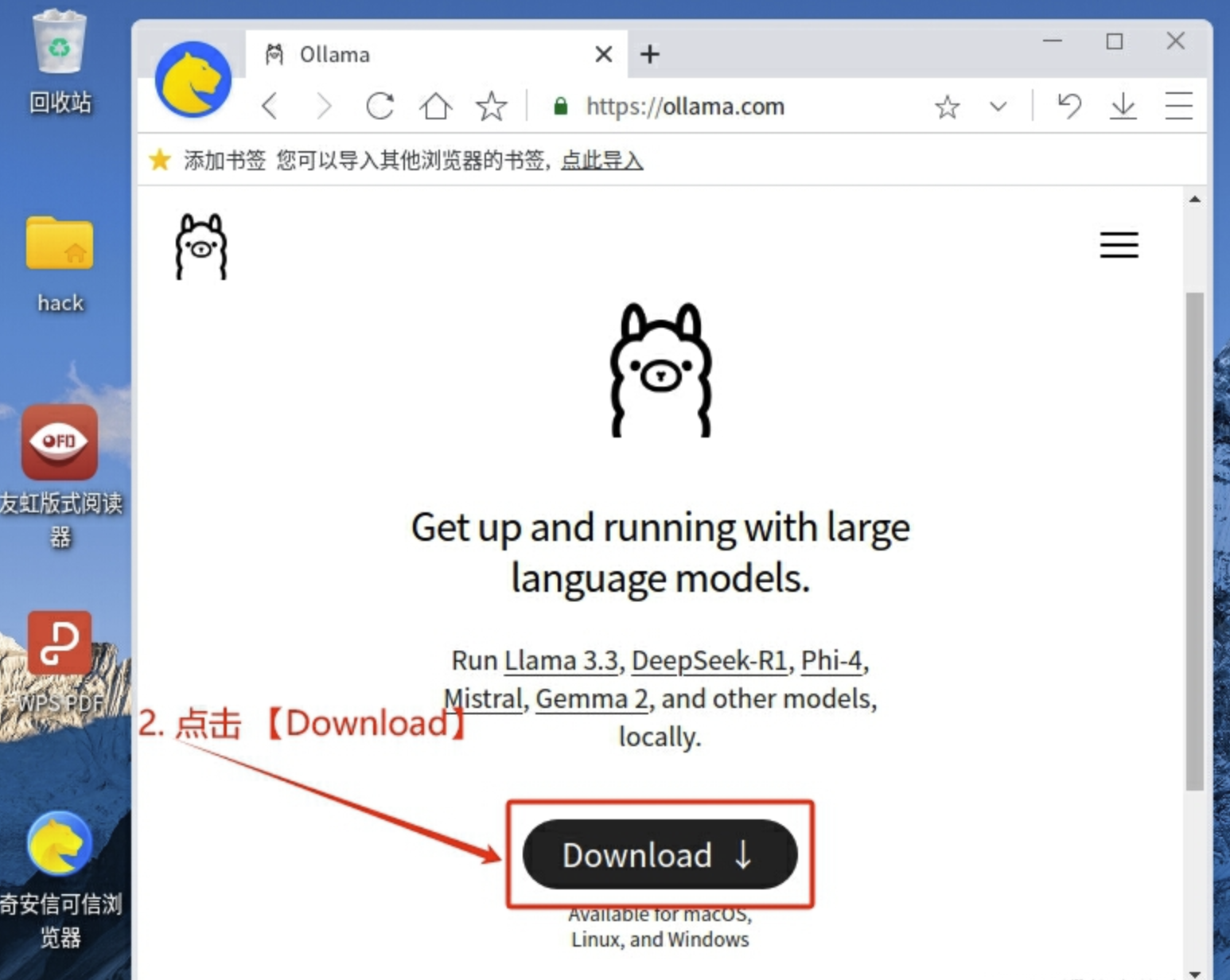

2. Click [Download]

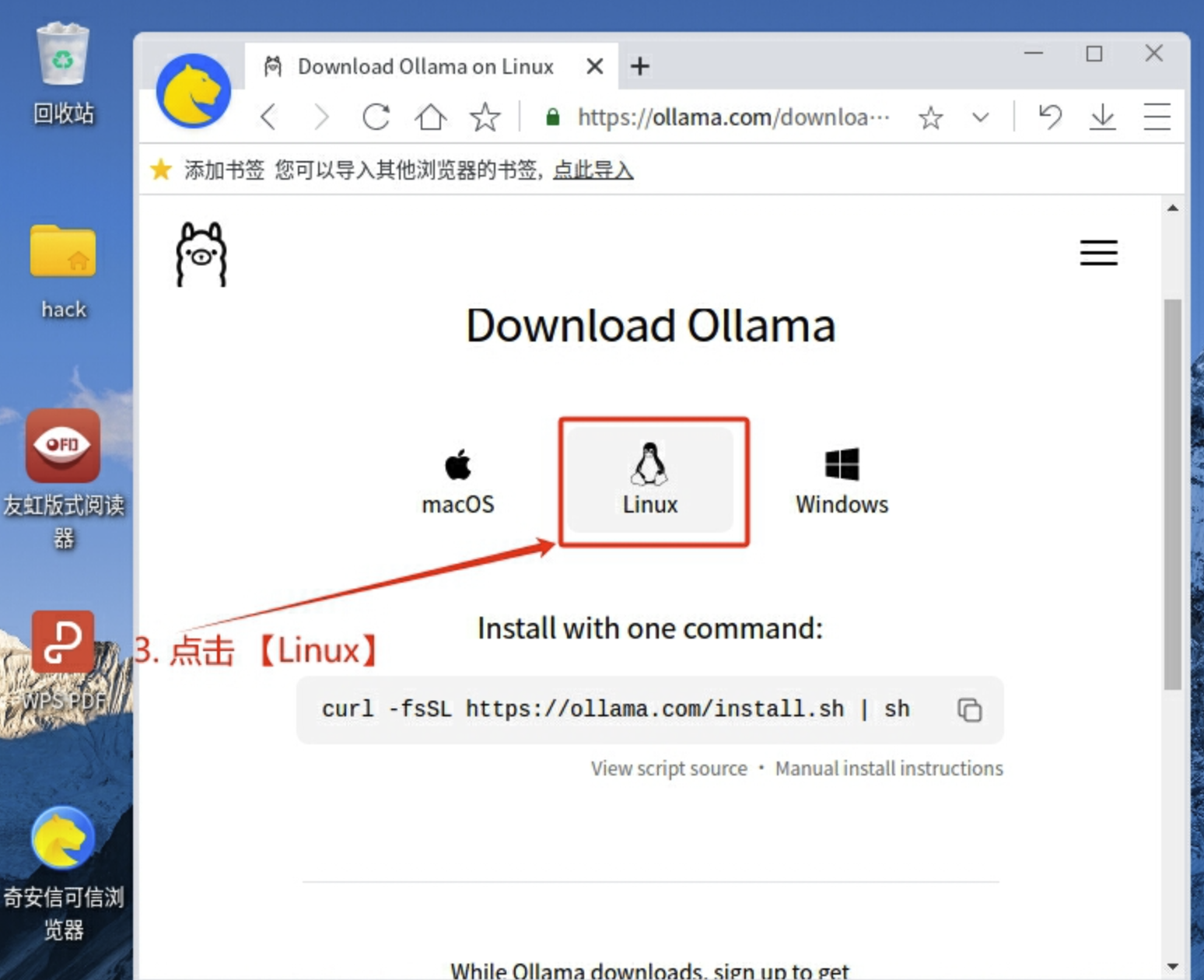

3. Click [Linux]

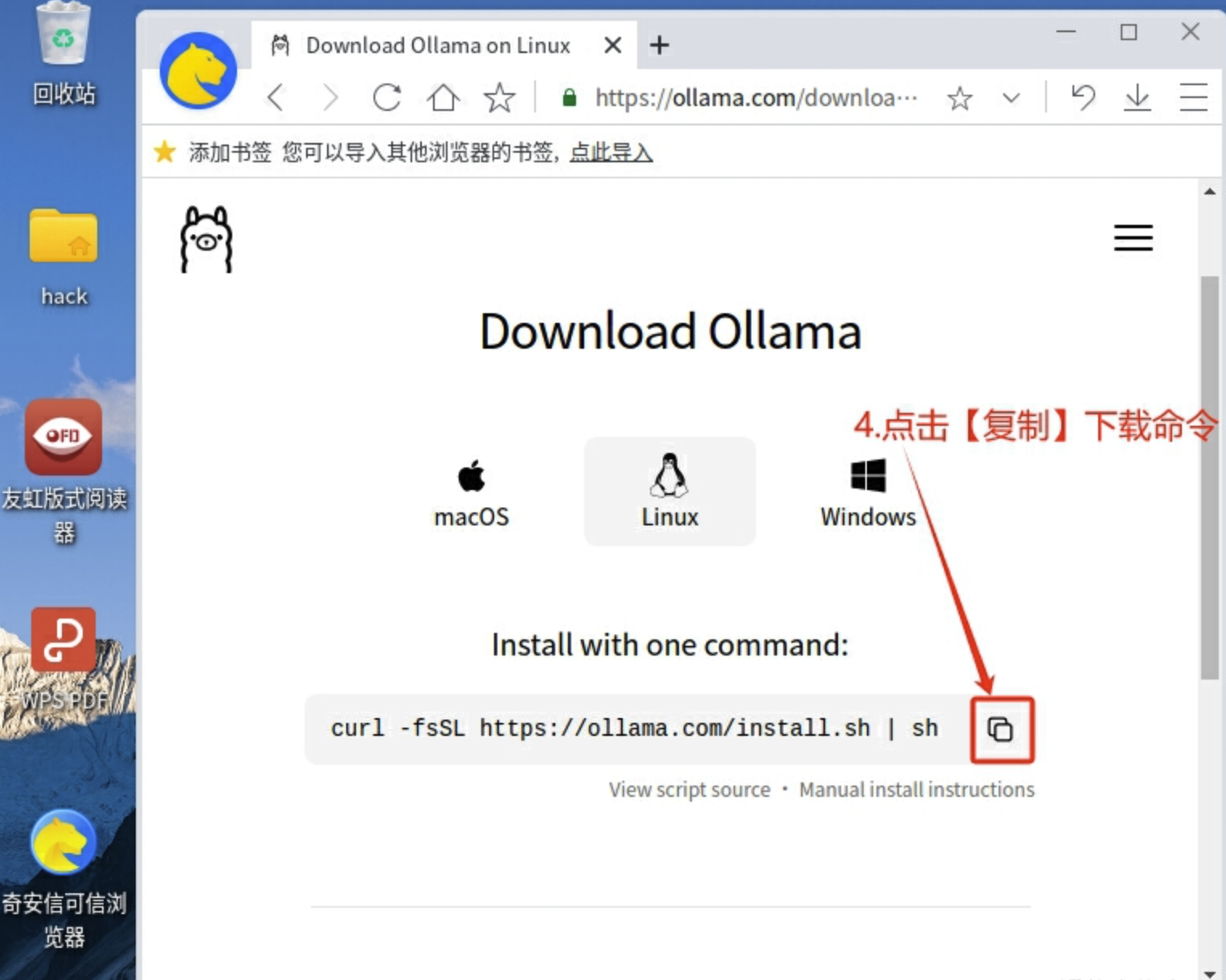

4. Click [Copy] to copy the download command

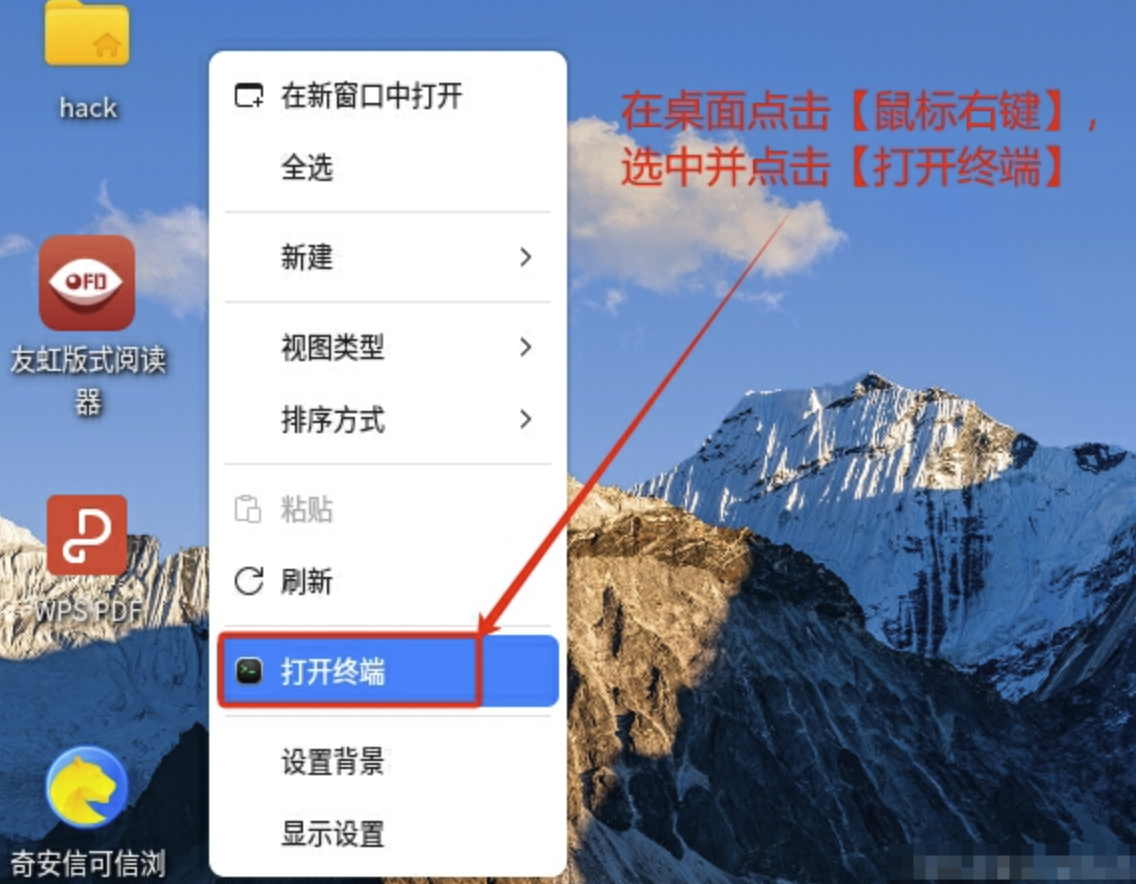

5. Right-click on the desktop and select [Open Terminal]

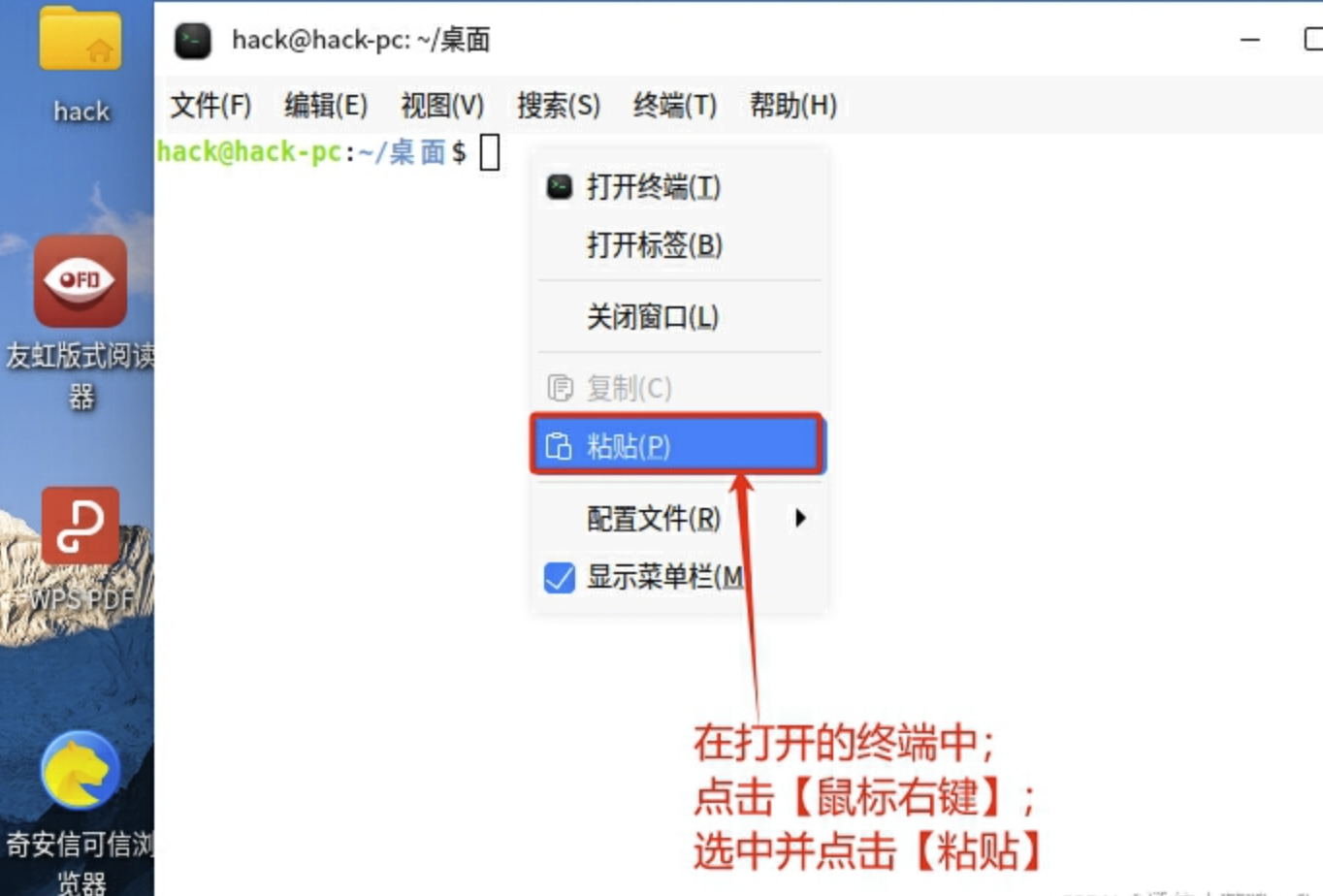

6. In the terminal, right-click and select [Paste]

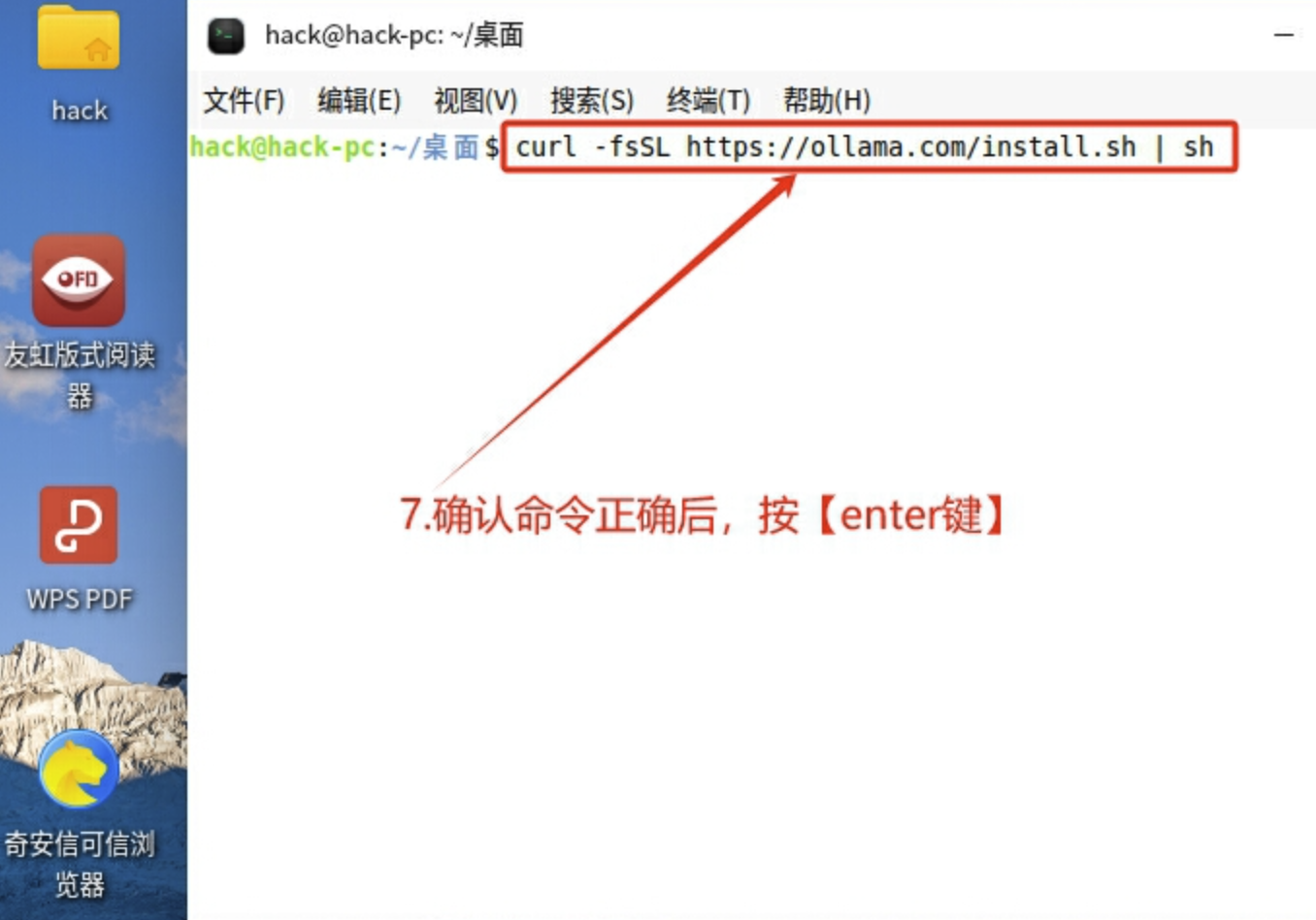

7. After confirming that the command is correct, press [enter]

curl -fsSL https://ollama.com/install.sh | sh

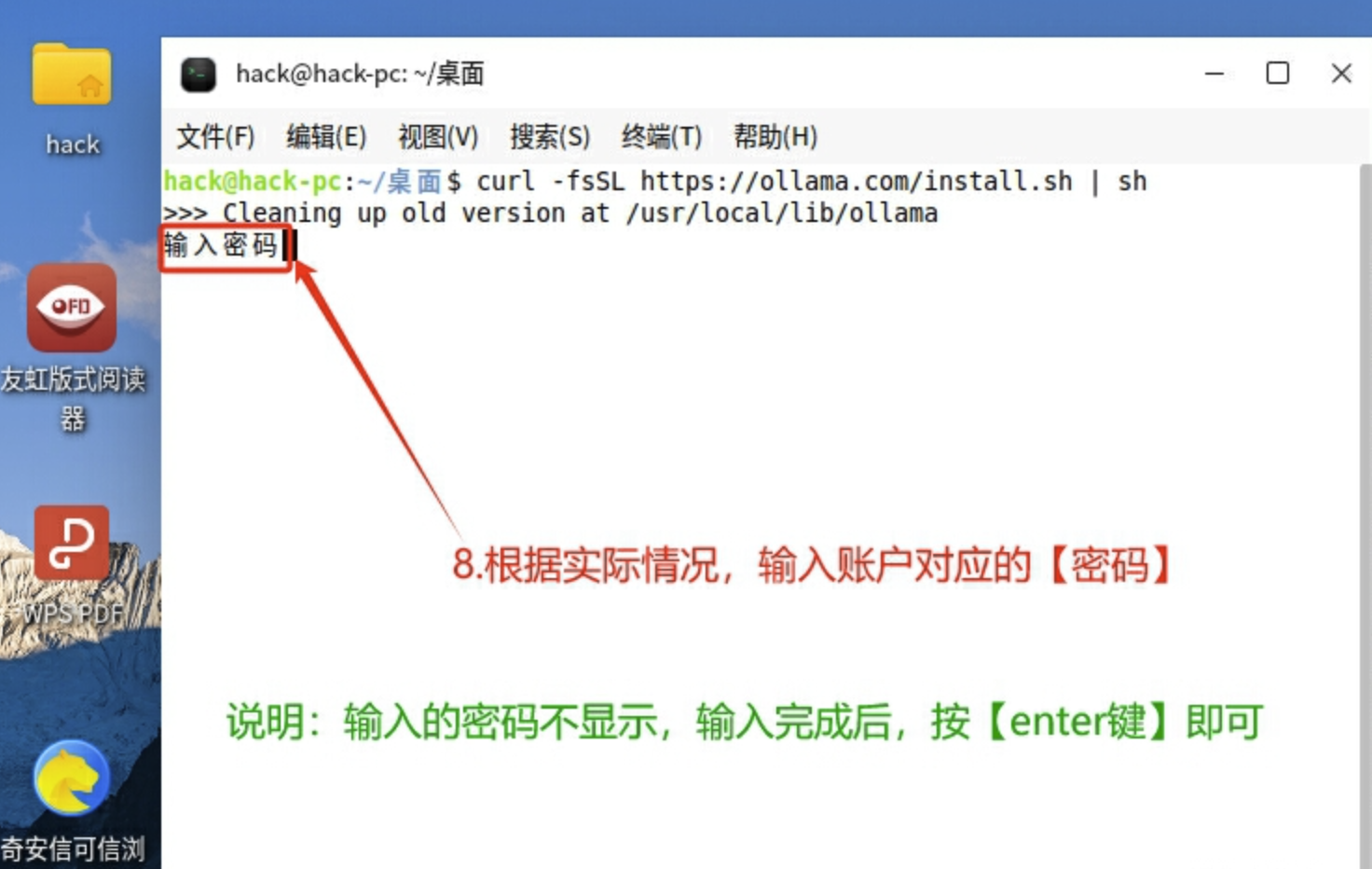

8. When prompted, enter the [password] of the current account

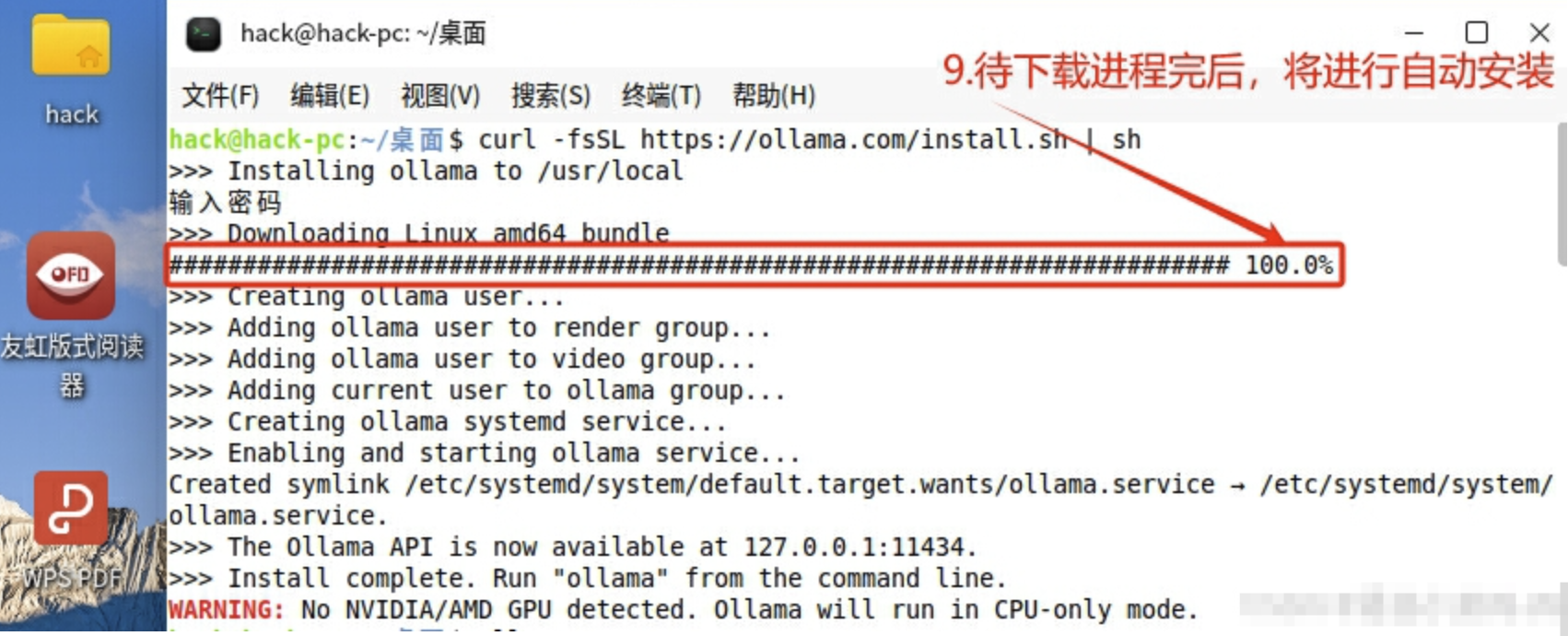

9. After the download completes, the installation runs automatically.

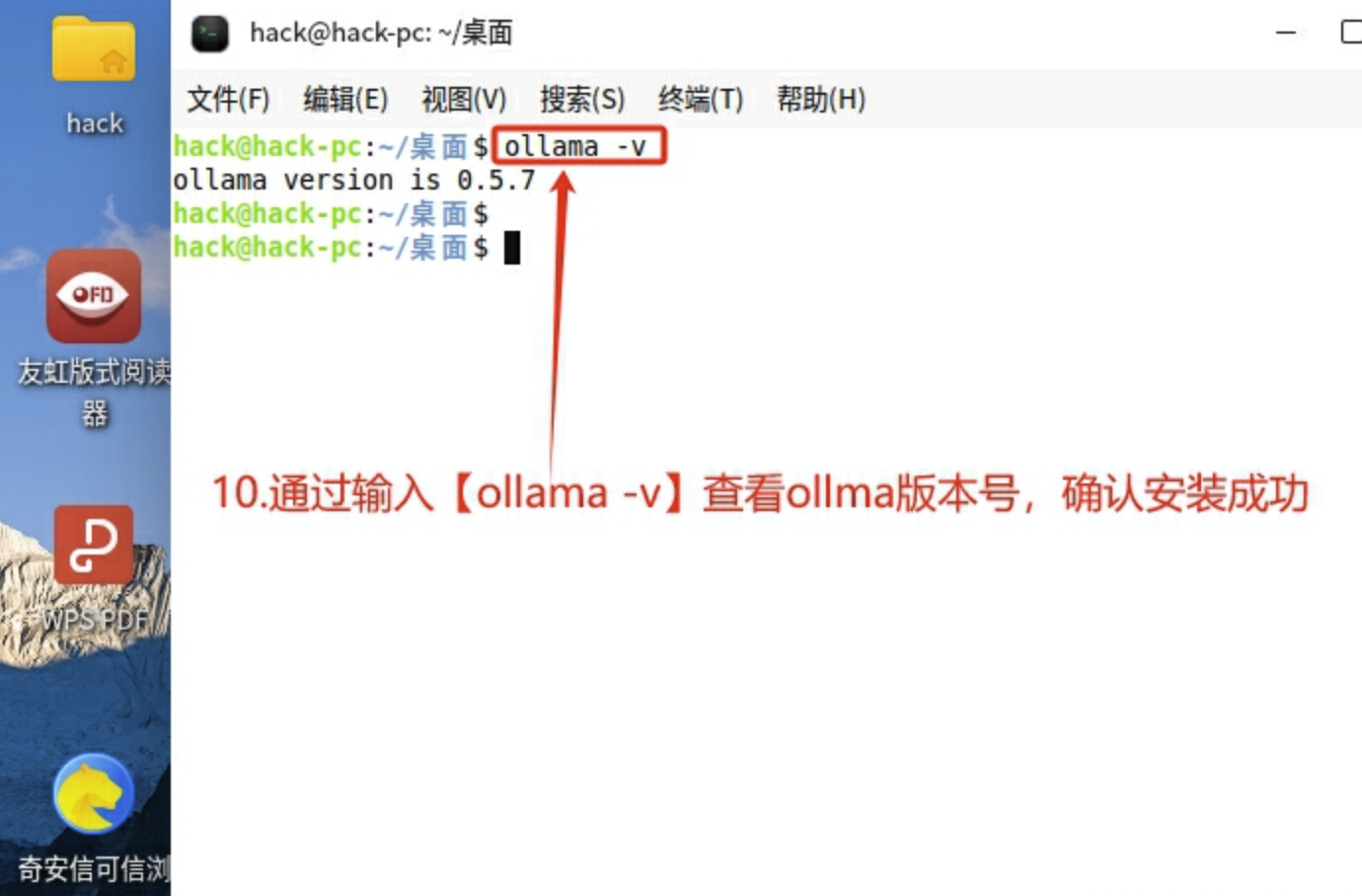

10. Enter [ollama -v] to check the Ollama version number and confirm that the installation succeeded

ollama -v

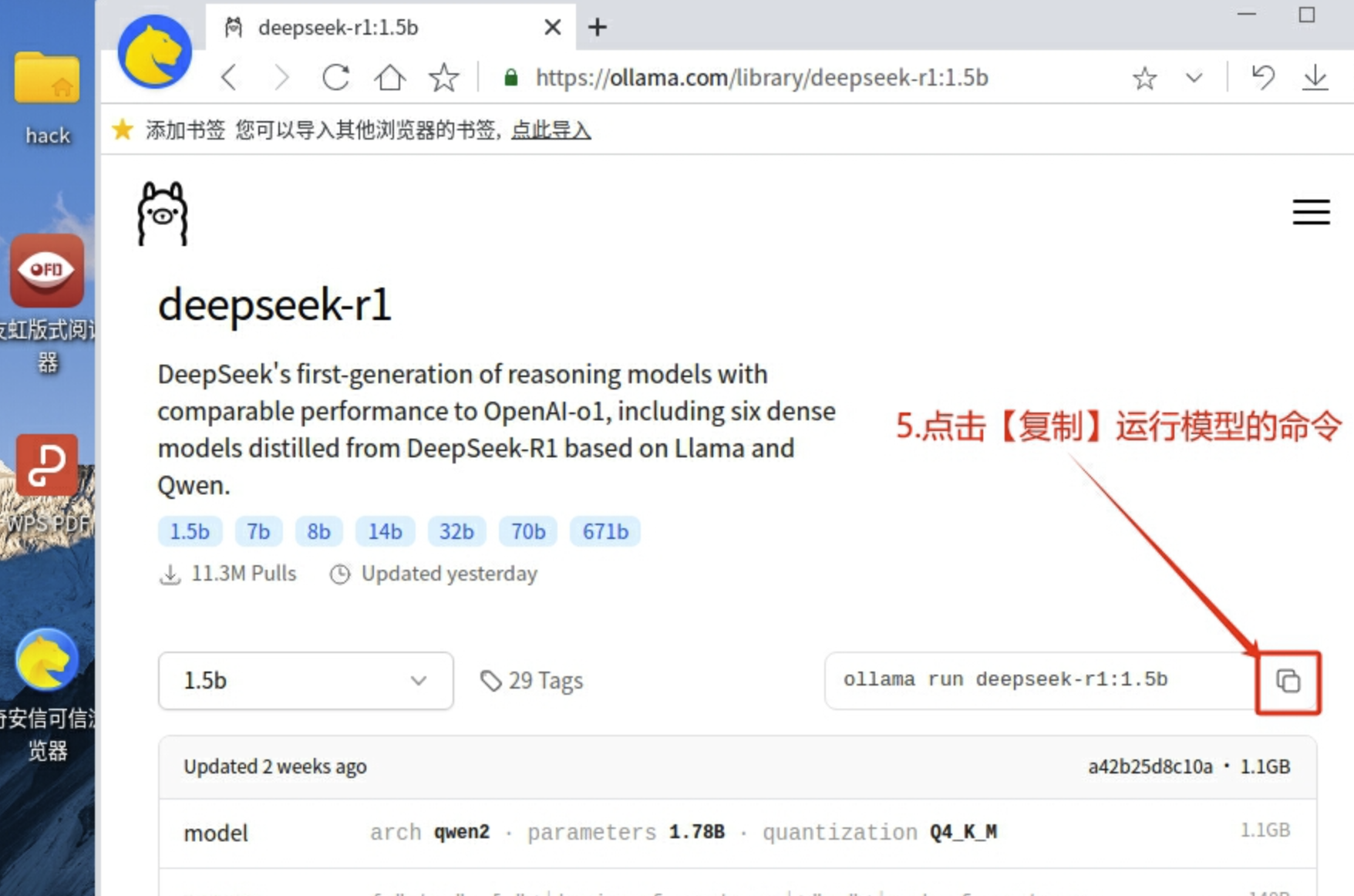
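The version check above can also be scripted. The sketch below assumes that `ollama -v` prints a line ending in "version is X.Y.Z" (for example "ollama version is 0.5.7"); the helper names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers for confirming the Ollama installation.

# Reads `ollama -v` output on stdin and prints the first version number found.
# Assumption: the version appears as "... version is X.Y.Z".
parse_ollama_version() {
  sed -n 's/.*version is \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

check_ollama() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama is not on PATH; rerun the install command" >&2
    return 1
  fi
  # 2>&1 also captures warnings, e.g. when the background service is not running
  version=$(ollama -v 2>&1 | parse_ollama_version)
  if [ -z "$version" ]; then
    echo "could not read the Ollama version" >&2
    return 1
  fi
  echo "Ollama $version is installed"
}

# Usage: check_ollama
```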

2.2 Download the DeepSeek model

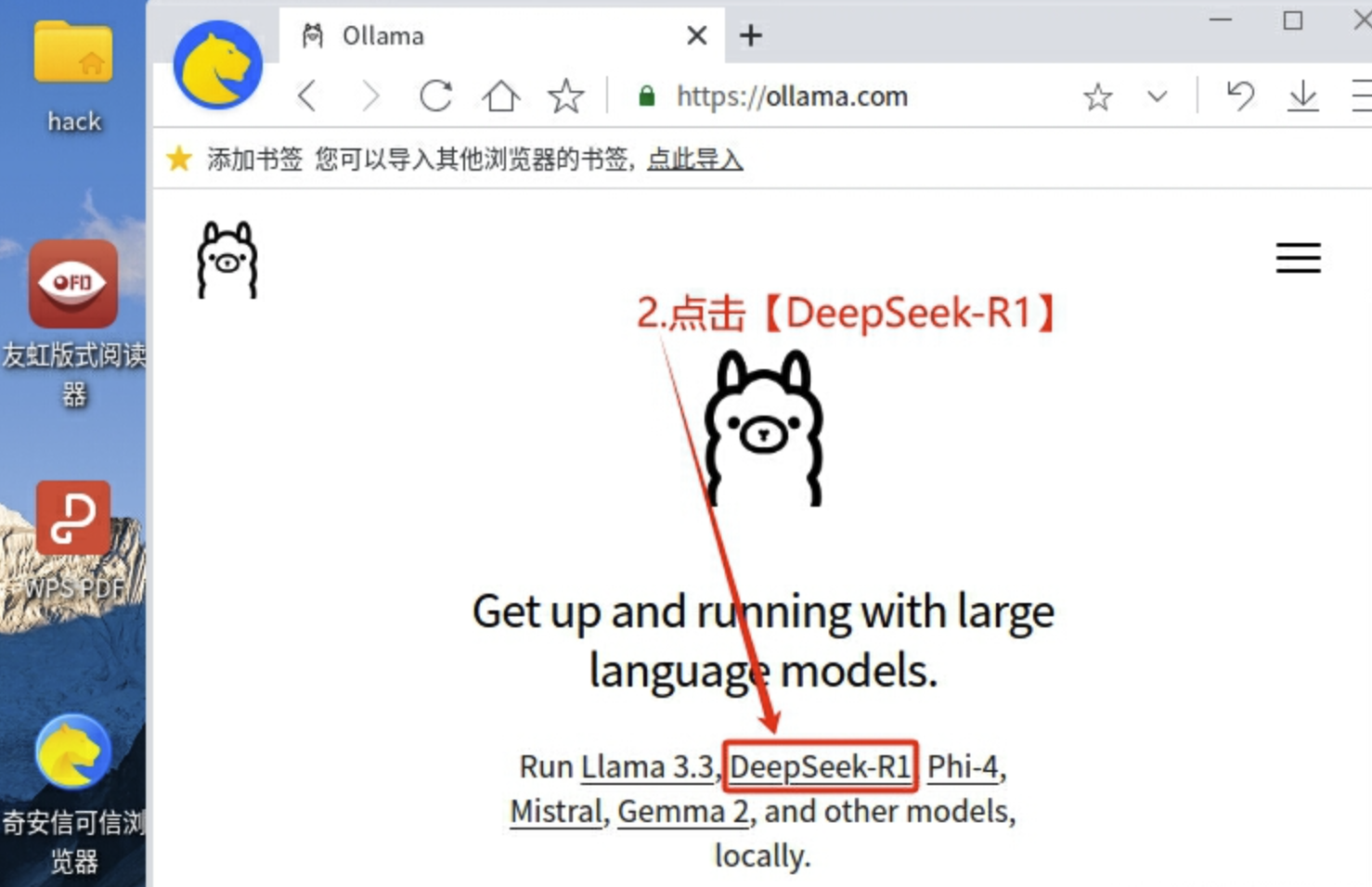

1. Visit the [Ollama official website]

Ollama official website: https://ollama.com/

2. Click [DeepSeek-R1]

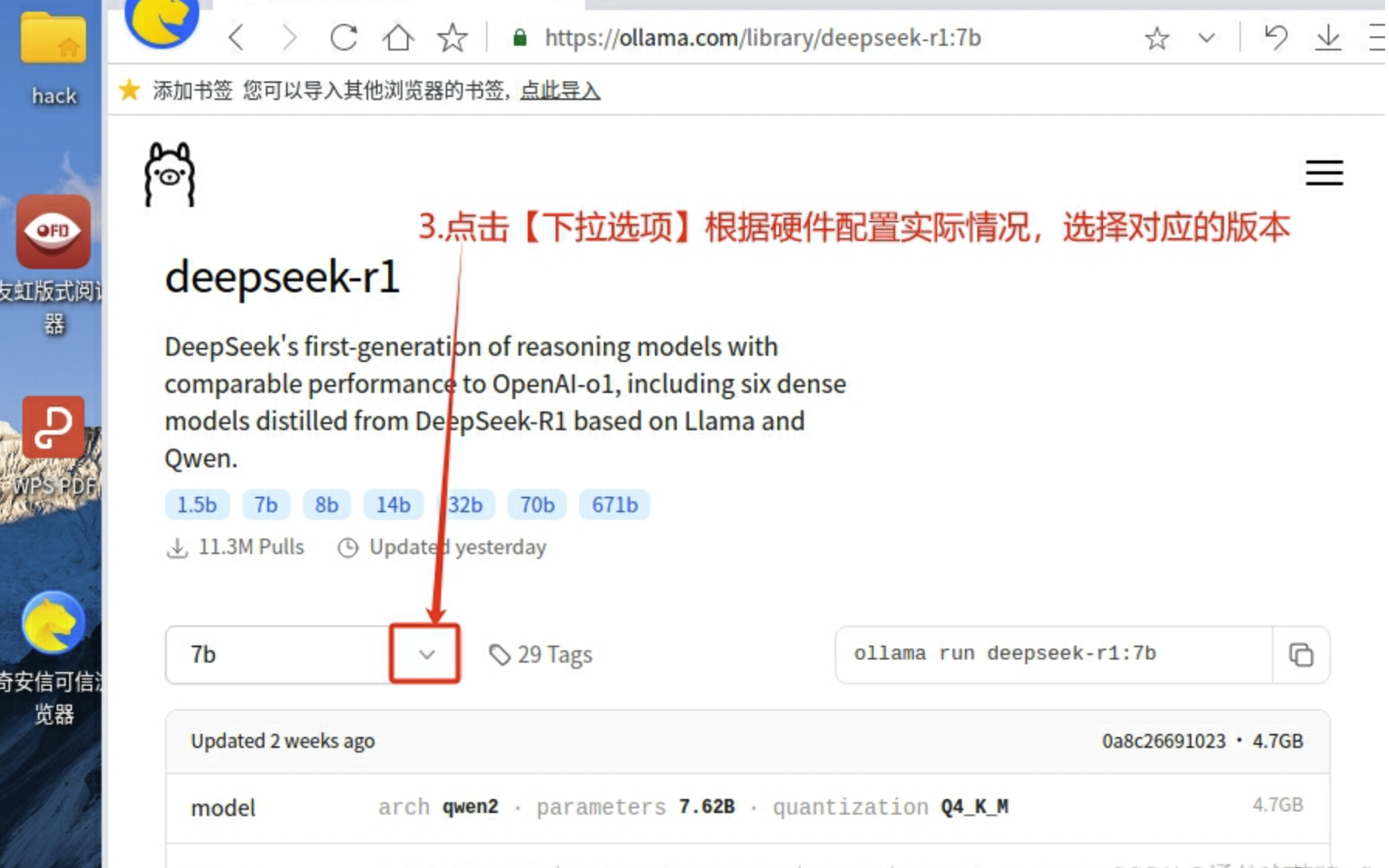

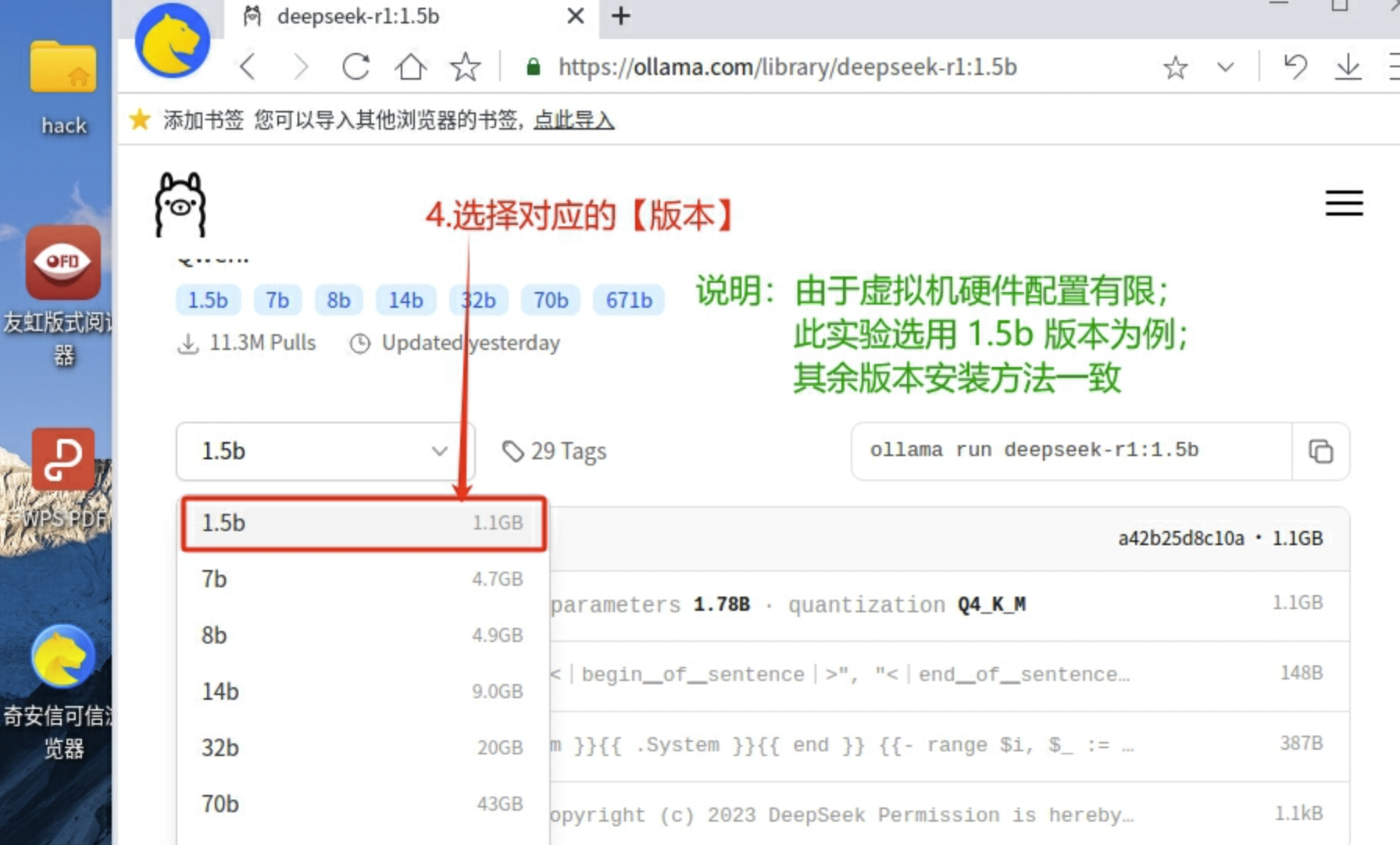

3. Click the [drop-down list] and choose a version that matches your hardware configuration.

4. Select the corresponding [version] (Note: This experiment uses version 1.5b as an example)

5. Click [Copy] to copy the command that runs the model

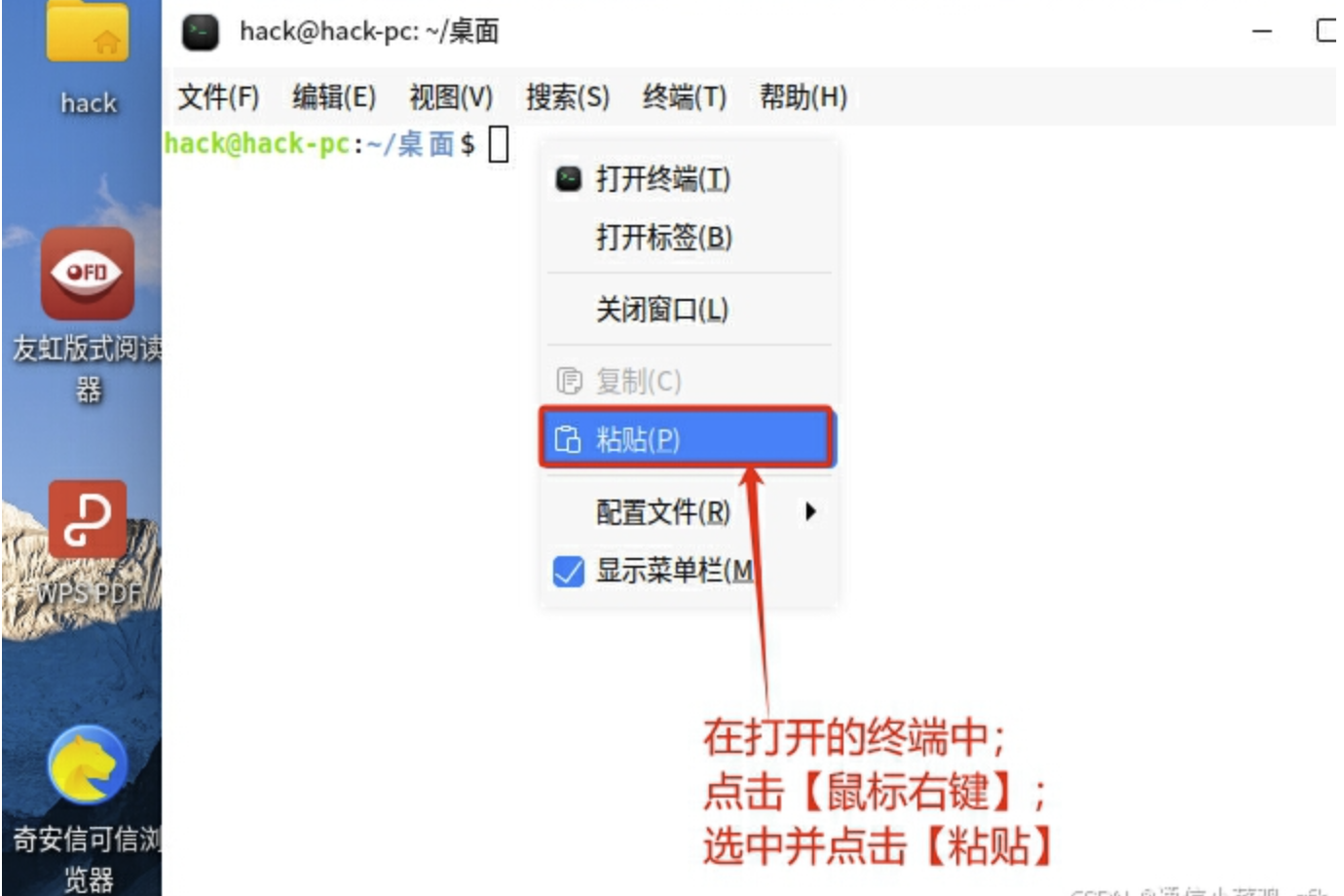

6. Right-click on the desktop and select [Open Terminal]

7. In the terminal, right-click and select [Paste]

8. After confirming that the command is correct, press [enter]

ollama run deepseek-r1:1.5b

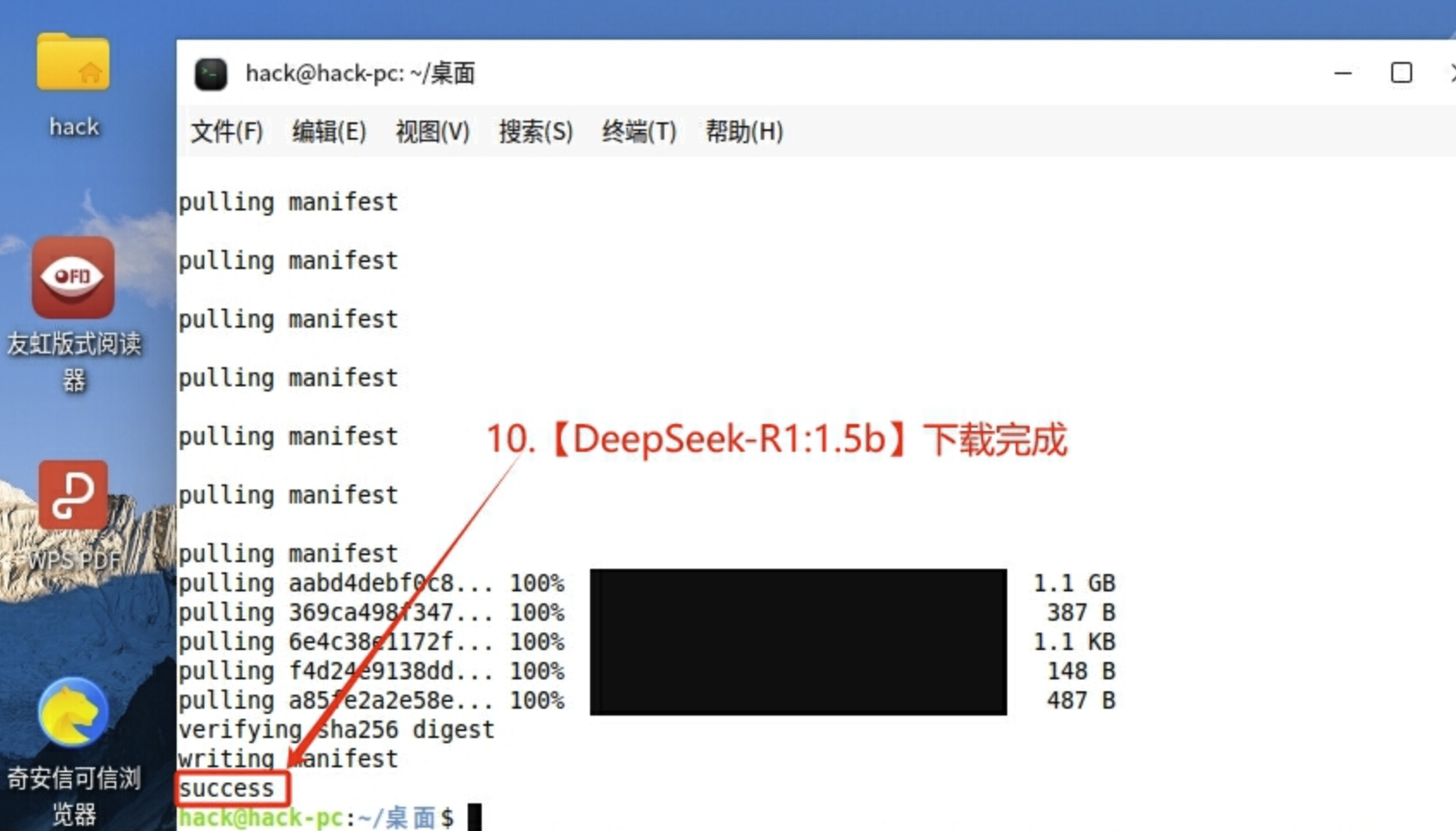

9. The selected [DeepSeek-R1] version is downloaded (Note: the model is downloaded only the first time it is run)

10. [DeepSeek-R1:1.5b] download

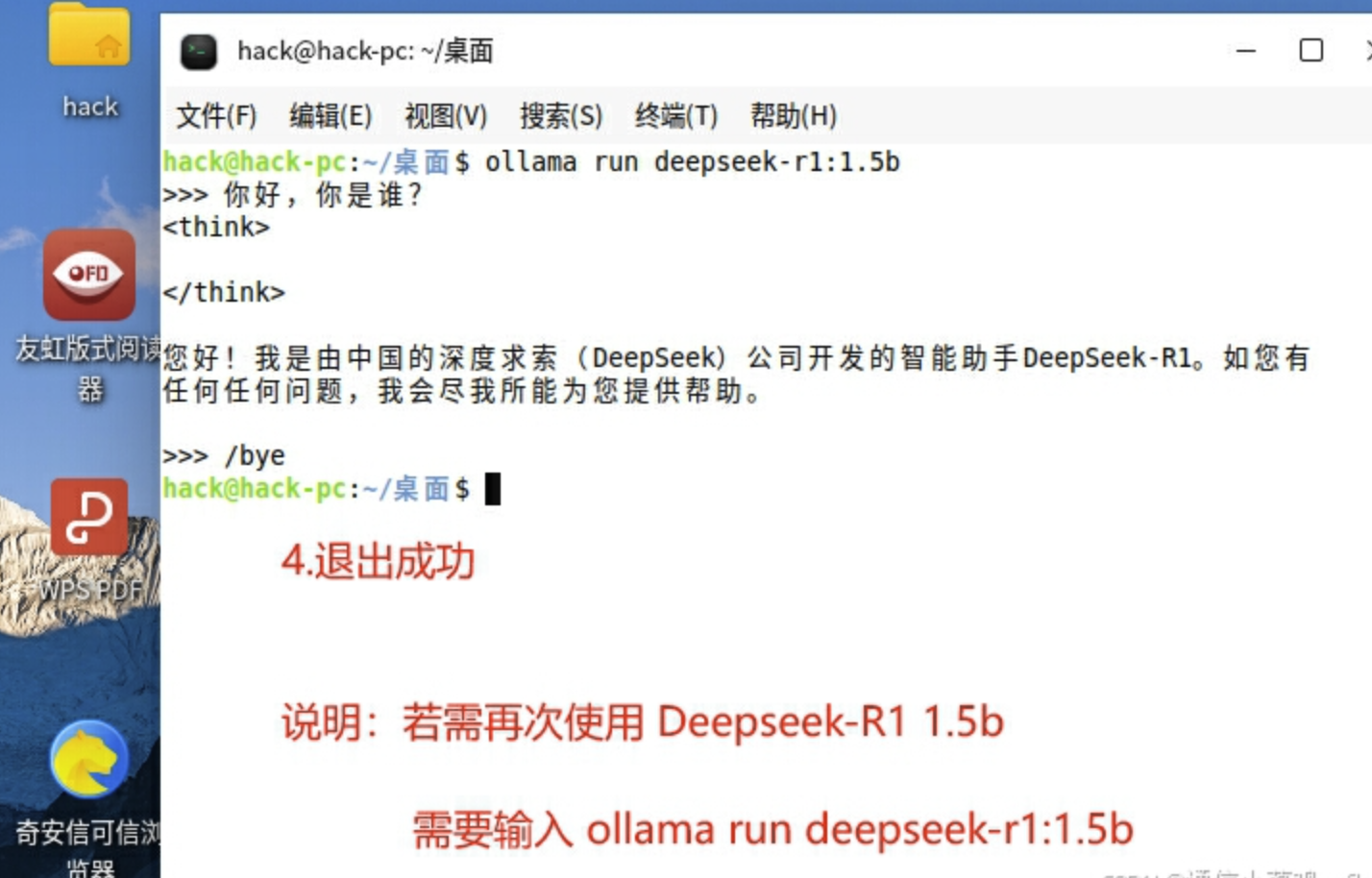

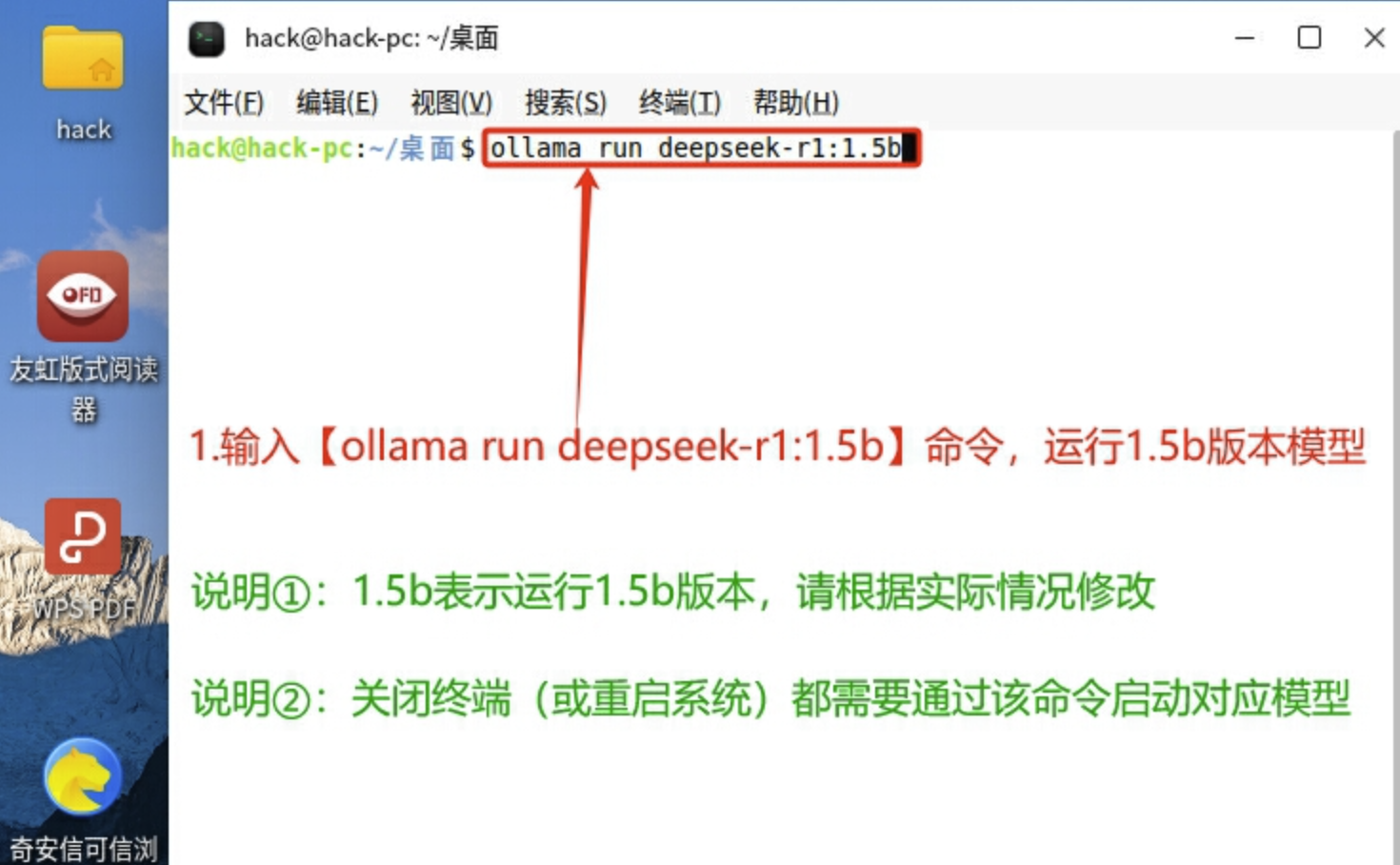
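As the tip at the start notes, only the size suffix in the model tag changes between versions. The sketch below (hypothetical helper names) builds the tag from a size and checks whether that model is already downloaded by reading `ollama list`, assuming its output starts with a header line and shows the model name in the first column. `ollama pull` downloads a model without starting a chat session.

```shell
#!/bin/sh
# Hypothetical helper: build the DeepSeek-R1 tag for a given size (default 1.5b).
deepseek_tag() {
  printf 'deepseek-r1:%s\n' "${1:-1.5b}"
}

# Reads `ollama list` output on stdin; succeeds if tag $1 is in the first column.
# Assumption: the first line is a header (NAME ID SIZE MODIFIED) and is skipped.
list_has_model() {
  awk -v tag="$1" 'NR > 1 && $1 == tag { found = 1 } END { exit !found }'
}

# Downloads the model only if it is not present yet (requires a running Ollama).
ensure_model() {
  tag=$(deepseek_tag "$1")
  if ollama list | list_has_model "$tag"; then
    echo "$tag is already downloaded"
  else
    ollama pull "$tag"
  fi
}

# Usage: ensure_model 1.5b   (or, for example, ensure_model 7b for another version)
```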

3. Basic use of DeepSeek

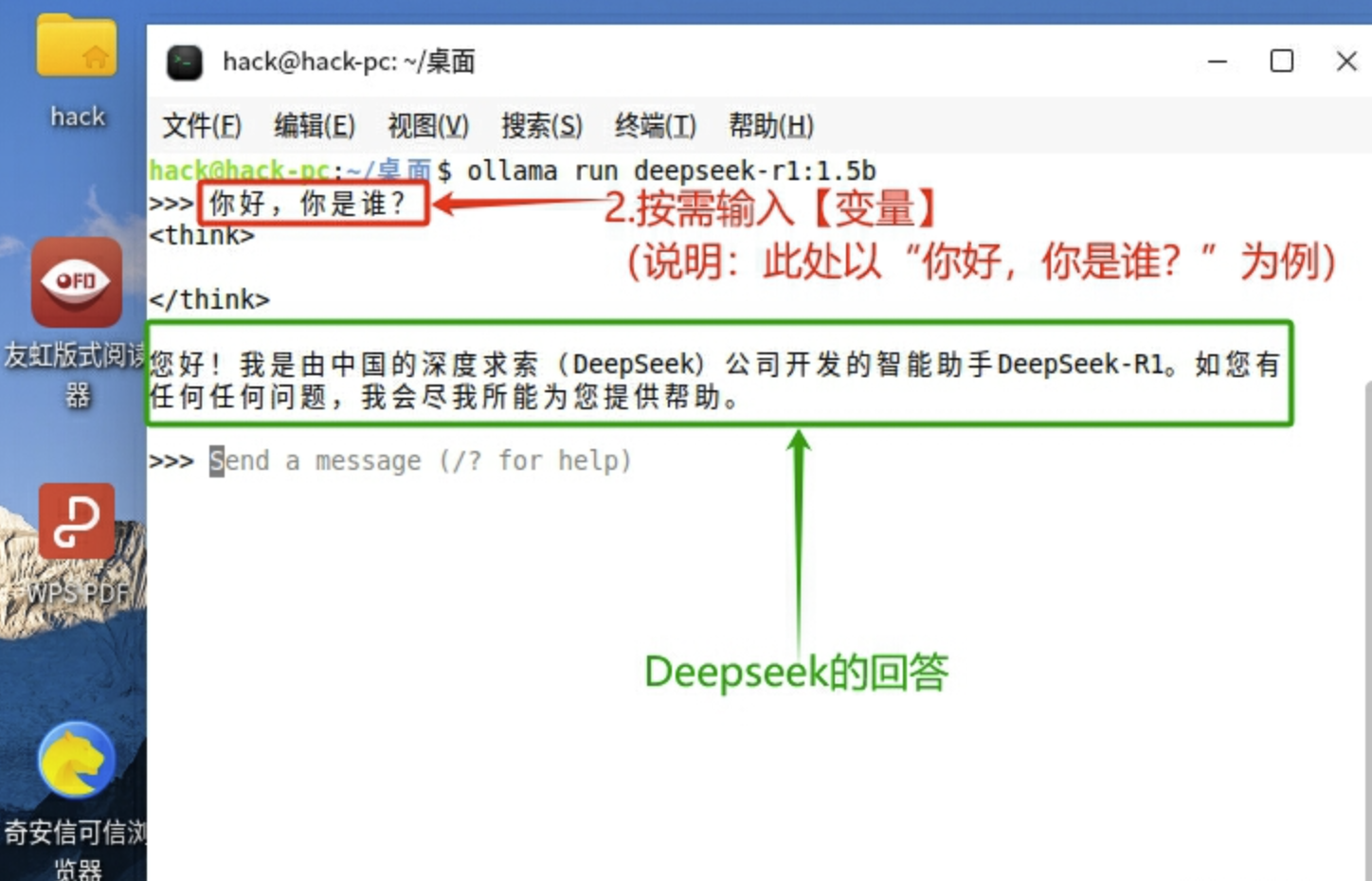

1. Enter the [ollama run deepseek-r1:1.5b] command to run the 1.5b model

ollama run deepseek-r1:1.5b

2. Enter your [prompt] as needed (Note: this example uses "Hello, who are you?")

3. When you are finished, enter [/bye] to exit

4. The session exits (Note: to use DeepSeek-R1 1.5b again, run ollama run deepseek-r1:1.5b again)
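Besides the interactive session, Ollama can answer a single prompt non-interactively (`ollama run deepseek-r1:1.5b "Hello, who are you?"`) and serves a local REST API, by default on port 11434. The sketch below builds a request for the `/api/generate` endpoint with `stream` set to false, so the reply comes back as one JSON object whose `response` field holds the answer. The helper names are hypothetical, and the minimal escaping only handles backslashes and double quotes in single-line prompts.

```shell
#!/bin/sh
# Hypothetical helpers for calling the local Ollama REST API.

# Minimal JSON string escaping: backslashes and double quotes only
# (does not handle newlines or other control characters).
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Builds the request body for /api/generate; "stream":false returns one JSON object.
generate_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}\n' "$1" "$(json_escape "$2")"
}

# Sends the prompt to the local Ollama service (default port 11434).
# -d @- makes curl read the request body from stdin.
ask_model() {
  generate_payload "$1" "$2" |
    curl -fsS http://localhost:11434/api/generate -H 'Content-Type: application/json' -d @-
}

# Usage: ask_model deepseek-r1:1.5b "Hello, who are you?"
```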