A research team from New York University, MIT, and Google recently proposed a framework for scaling diffusion models at inference time. It addresses a key bottleneck: adding more denoising steps improves results only up to a point. The work goes beyond this traditional approach of simply increasing the number of denoising steps and opens up new ways to improve the performance of generative models.

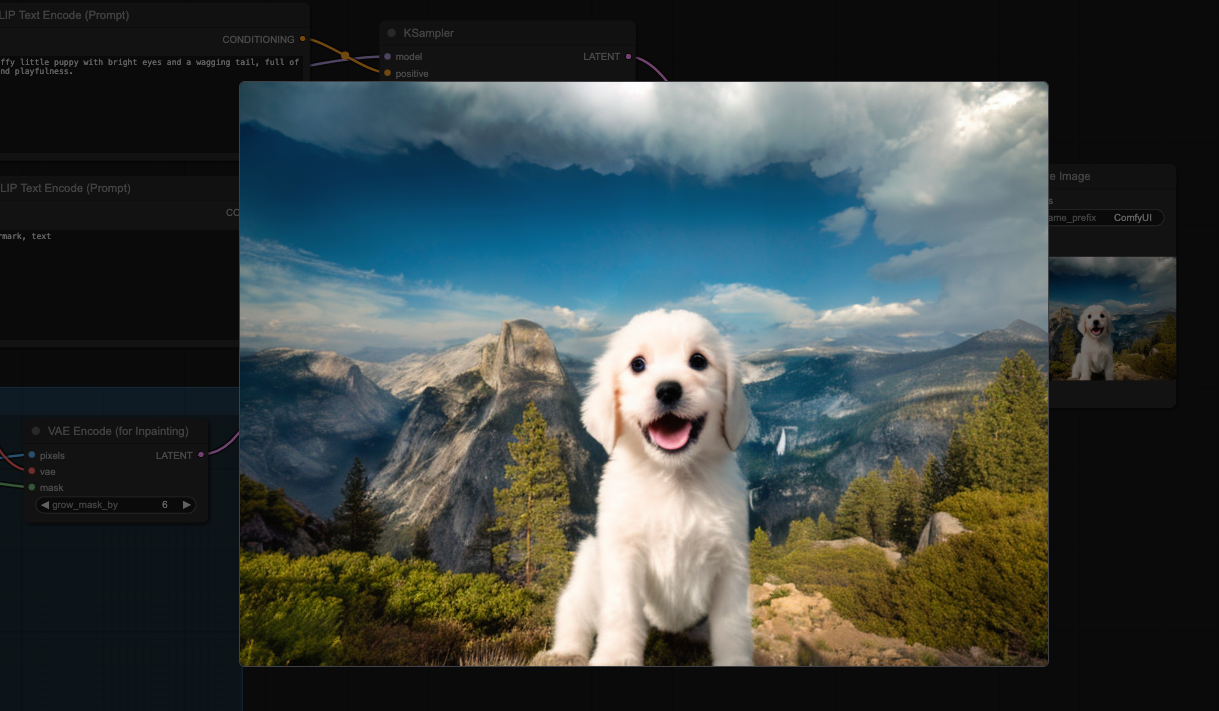

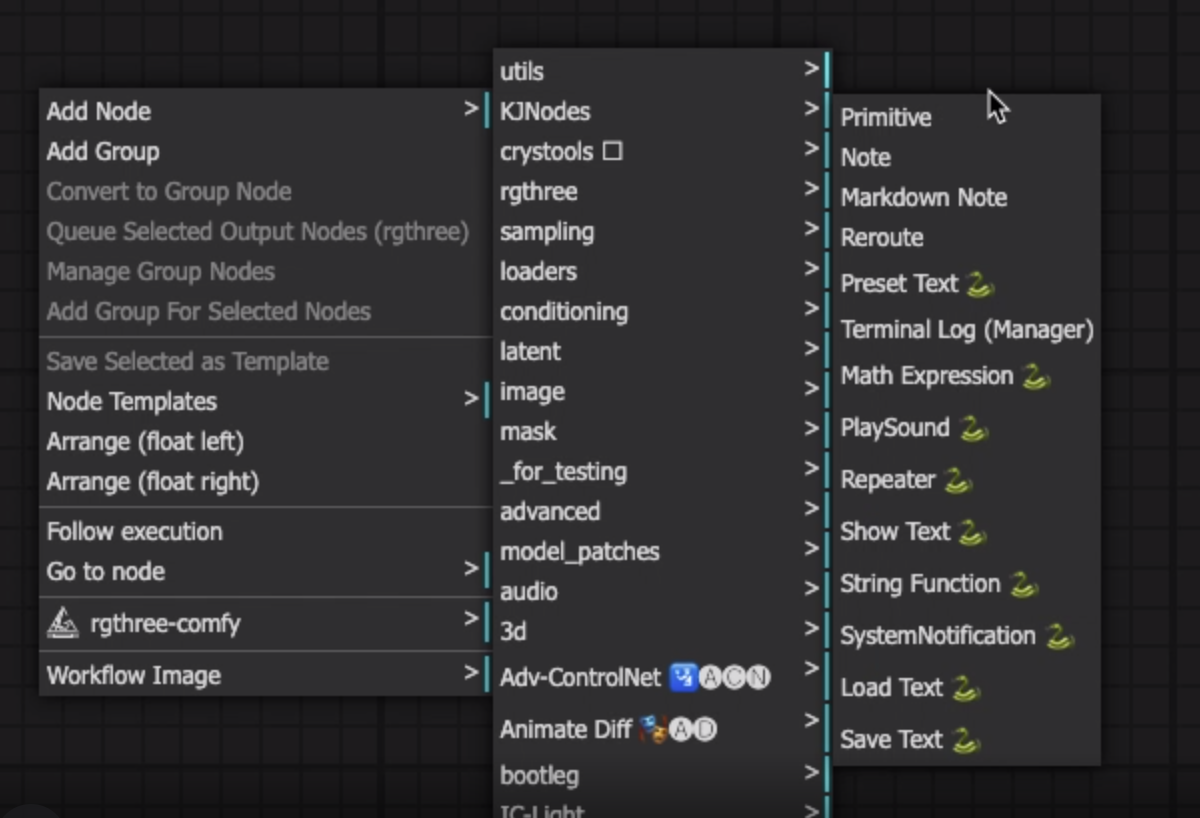

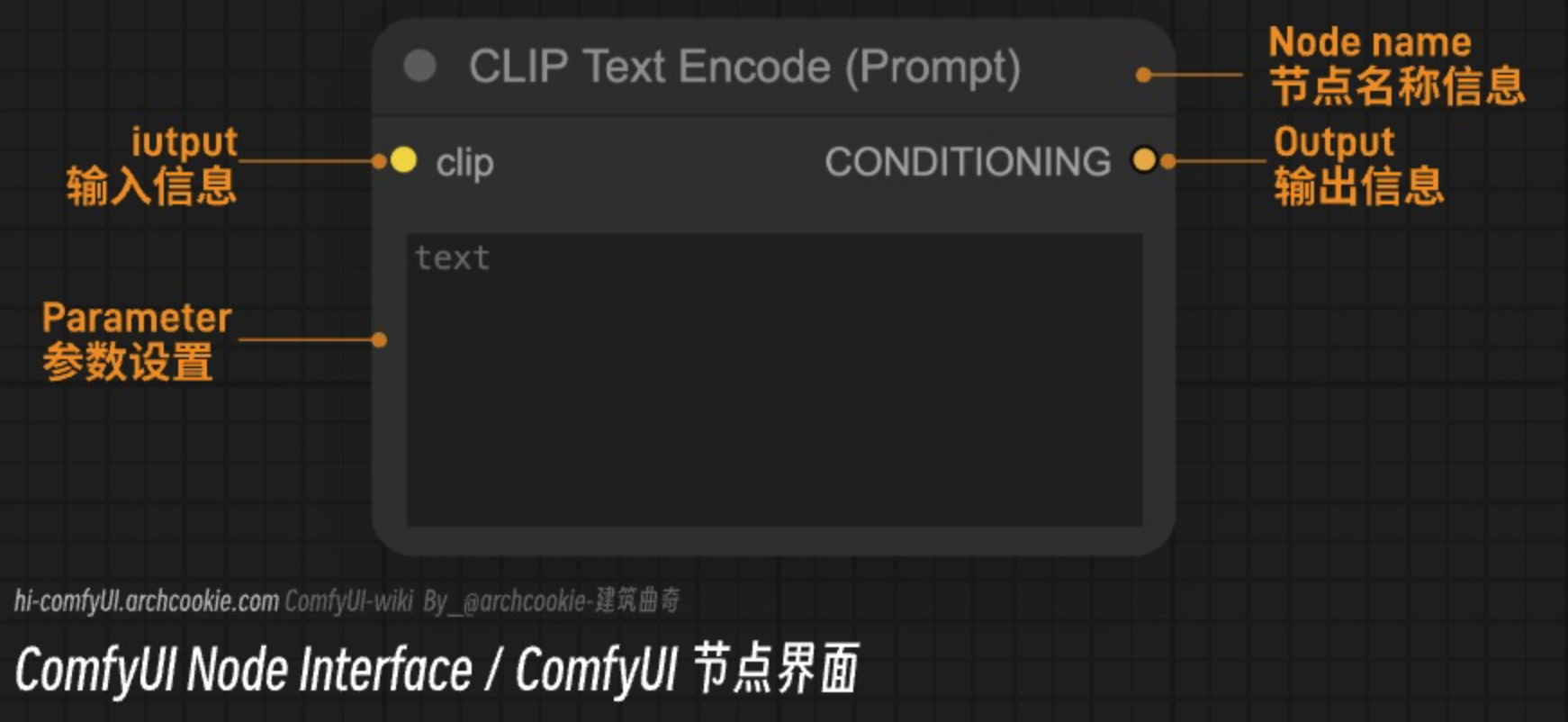

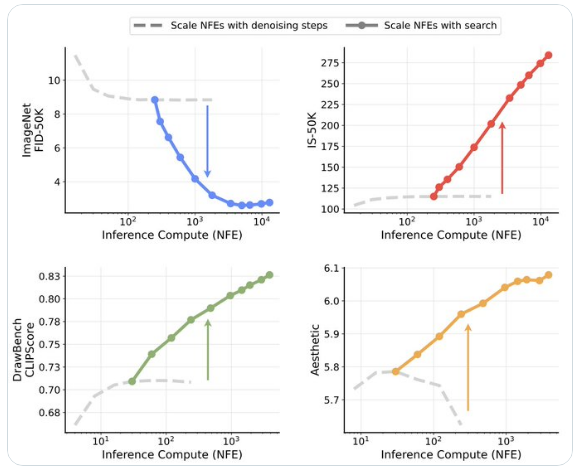

The framework is organized along two axes: verifiers that provide feedback on the quality of generated samples, and search algorithms that use this feedback to find better noise candidates. Starting from a pre-trained SiT-XL model at 256×256 resolution, the team kept the number of denoising steps fixed at 250 and devoted the additional compute entirely to search.
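The interplay between the two axes can be illustrated with the simplest possible search strategy, best-of-N random search: draw N initial noise vectors, run the fixed-step sampler on each, score every result with a verifier, and keep the best. The sketch below is a toy in plain Python and not the authors' code; `toy_denoise` and `toy_verifier` are hypothetical stand-ins for a real sampler (such as SiT-XL with 250 steps) and a real verifier.

```python
import random
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def toy_denoise(noise: Vector, steps: int = 250) -> Vector:
    """Hypothetical stand-in for a diffusion sampler with a fixed step count.

    Each step simply shrinks the vector; a real sampler would call the model.
    """
    x = list(noise)
    for _ in range(steps):
        x = [0.99 * xi for xi in x]
    return x


def toy_verifier(sample: Vector) -> float:
    """Hypothetical verifier: higher is better (closeness to a fixed target)."""
    target = [0.1] * len(sample)
    return -sum((s - t) ** 2 for s, t in zip(sample, target))


def random_search(
    denoise: Callable[[Vector], Vector],
    verifier: Callable[[Vector], float],
    num_candidates: int,
    dim: int,
    seed: int = 0,
) -> Tuple[Vector, Vector, float]:
    """Best-of-N search over initial noise; the per-sample step count is unchanged."""
    if num_candidates <= 0:
        raise ValueError("num_candidates must be positive")
    rng = random.Random(seed)
    best: Optional[Tuple[Vector, Vector, float]] = None
    for _ in range(num_candidates):
        noise = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # one noise candidate
        sample = denoise(noise)                            # fixed-step sampling
        score = verifier(sample)                           # verifier feedback
        if best is None or score > best[2]:
            best = (noise, sample, score)
    assert best is not None  # guaranteed by the check above
    return best


if __name__ == "__main__":
    for n in (1, 4, 16):
        _, _, score = random_search(toy_denoise, toy_verifier, n, dim=8)
        print(f"N={n:2d}  best verifier score={score:.4f}")
```

Because a fixed seed reproduces the same sequence of candidates, a larger N can only match or improve the best score. Total compute grows linearly with N, while each individual sample still uses the same number of denoising steps.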

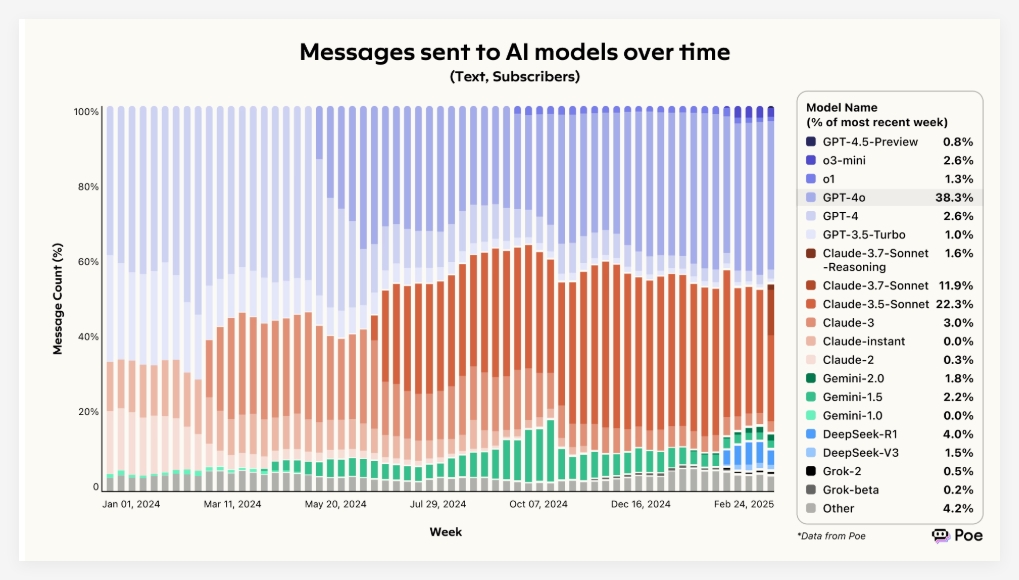

For verification, the study used two oracle verifiers, so named because they rely on the same metrics used in the final evaluation: Inception Score (IS) and Fréchet Inception Distance (FID). The IS verifier selects the sample with the highest classification probability under a pre-trained InceptionV3 model, while the FID verifier selects samples that minimize the divergence from pre-computed ImageNet Inception feature statistics.
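The two oracle verifiers can be sketched as selection rules. In this illustration, `classify` is a hypothetical stand-in for InceptionV3 that returns class probabilities, and candidate features are assumed to be already extracted. Real FID compares full covariance matrices, d² = ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^½); to stay dependency-free, the sketch assumes diagonal covariances, for which the matrix square root reduces to elementwise square roots. The greedy rule (pick the candidate whose addition to the already-selected set brings its statistics closest to the reference) is an assumed reading of "minimize the difference" and may not match the study's exact procedure.

```python
import math
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")
Vector = List[float]


def is_select(candidates: Sequence[T], classify: Callable[[T], Sequence[float]],
              target_class: int) -> int:
    """IS-style oracle: index of the candidate with the highest target-class probability."""
    return max(range(len(candidates)),
               key=lambda i: classify(candidates[i])[target_class])


def diag_stats(features: Sequence[Vector]) -> Tuple[Vector, Vector]:
    """Per-dimension mean and (population) variance, i.e. a diagonal Gaussian fit."""
    n, d = len(features), len(features[0])
    mean = [sum(f[j] for f in features) / n for j in range(d)]
    var = [sum((f[j] - mean[j]) ** 2 for f in features) / n for j in range(d)]
    return mean, var


def frechet_diag(mu1: Vector, var1: Vector, mu2: Vector, var2: Vector) -> float:
    """Fréchet distance between two Gaussians with diagonal covariances (simplification)."""
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum(v1 + v2 - 2.0 * math.sqrt(v1 * v2) for v1, v2 in zip(var1, var2))
    return mean_term + cov_term


def fid_select(candidate_feats: Sequence[Vector], selected_feats: List[Vector],
               ref_mu: Vector, ref_var: Vector) -> int:
    """FID-style oracle (greedy): candidate whose addition best matches the reference stats."""
    def distance_if_added(i: int) -> float:
        mu, var = diag_stats(selected_feats + [candidate_feats[i]])
        return frechet_diag(mu, var, ref_mu, ref_var)
    return min(range(len(candidate_feats)), key=distance_if_added)


if __name__ == "__main__":
    # Toy reference statistics standing in for precomputed ImageNet Inception stats.
    ref_mu, ref_var = [0.0, 0.0], [1.0, 1.0]
    already_selected = [[1.0, 1.0]]
    candidates = [[-1.0, -1.0], [5.0, 5.0]]
    print("FID-style pick:", fid_select(candidates, already_selected, ref_mu, ref_var))
```

The IS rule scores each candidate in isolation, whereas the FID rule depends on which samples were selected before, which is why it is written as a greedy step over a growing set.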

Experimental results show that the framework performs well across multiple benchmarks. On DrawBench, evaluation with an LLM grader confirmed that search guided by verifiers consistently improves sample quality. ImageReward and the Verifier Ensemble achieved particularly large improvements across metrics, which is attributed to their precise evaluations and their strong alignment with human preferences.
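Verifiers such as ImageReward or an aesthetic predictor produce scores on different scales, so an ensemble cannot simply add raw scores. One simple way to combine them, assumed here and possibly different from the study's exact recipe, is to rank the candidates under each verifier and pick the candidate with the best average rank. The three verifier score tables in the example are made-up toy values, not results from the study.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def ranks(scores: Sequence[float]) -> List[int]:
    """Rank scores so that 0 is the best (highest) score; ties go to the lower index."""
    order = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    result = [0] * len(scores)
    for rank, idx in enumerate(order):
        result[idx] = rank
    return result


def ensemble_select(
    candidates: Sequence[T], verifiers: Sequence[Callable[[T], float]]
) -> Tuple[int, List[float]]:
    """Return the index of the candidate with the lowest mean rank, plus all mean ranks."""
    totals = [0.0] * len(candidates)
    for verify in verifiers:
        scores = [verify(c) for c in candidates]
        for i, r in enumerate(ranks(scores)):
            totals[i] += r
    mean_ranks = [t / len(verifiers) for t in totals]
    best = min(range(len(candidates)), key=lambda i: (mean_ranks[i], i))
    return best, mean_ranks


if __name__ == "__main__":
    # Made-up scores for three candidate images from three hypothetical
    # verifiers that use very different scales.
    scores_a = [0.9, 0.5, 0.1]
    scores_b = [10.0, 90.0, 50.0]
    scores_c = [3.0, 4.0, 1.0]
    verifiers = [lambda i: scores_a[i], lambda i: scores_b[i], lambda i: scores_c[i]]
    best, mean_ranks = ensemble_select([0, 1, 2], verifiers)
    print("selected candidate:", best, "mean ranks:", mean_ranks)
```

Rank averaging makes each verifier's vote independent of its score scale, so a verifier with large raw values (like `scores_b`) cannot dominate the others.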

Beyond confirming that scaling inference-time compute through search is effective, the study reveals that different verifiers carry inherent biases, which points toward developing more specialized verifiers for visual generation tasks. These findings matter for improving the overall performance of generative AI models.