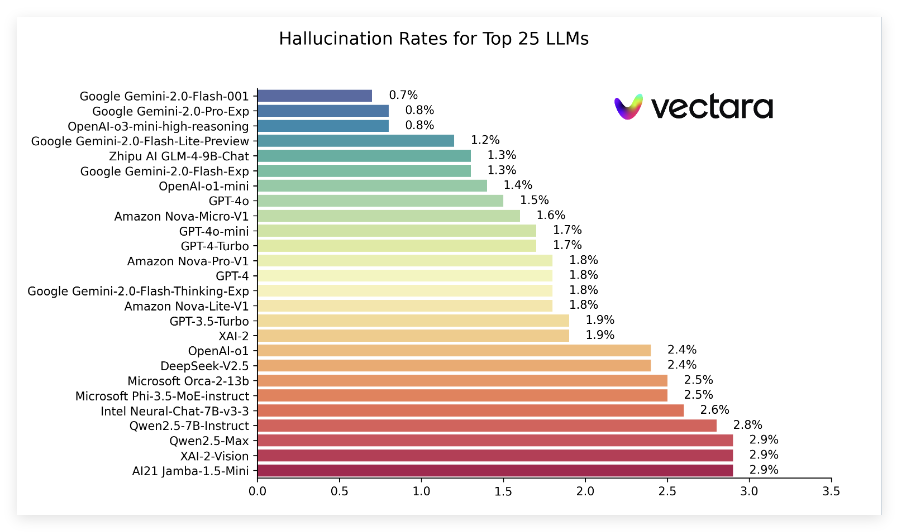

Recently, Vectara released an updated Hallucination Leaderboard, which compares how often different large language models (LLMs) hallucinate when summarizing short documents. The ranking uses Vectara's Hughes Hallucination Evaluation Model (HHEM-2.1) to measure how often each model introduces false information into its summaries, and the leaderboard is updated regularly. For each popular model, the latest data report four key indicators: hallucination rate, factual consistency rate, response rate, and average summary length.

In the latest rankings, Google's Gemini 2.0 series performed well. Gemini-2.0-Flash-001 topped the list with a hallucination rate of just 0.7%, meaning it introduced false information into very few of its summaries. Gemini-2.0-Pro-Exp and OpenAI's o3-mini-high-reasoning followed closely, each with a hallucination rate of 0.8%.

The report also shows that although hallucination rates have risen for many models, most remain low, and several models have factual consistency rates above 95%, indicating that they are relatively strong at preserving factual accuracy. Notably, response rates are generally high, with the vast majority of models close to 100%, which means they almost always produce a summary rather than declining the task.

The leaderboard also lists each model's average summary length (in words), which reflects differences in how concisely the models condense information. Overall, the ranking provides useful reference data for researchers and developers, and it makes it easier for general users to understand how current large language models perform.
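To make the four indicators concrete, here is a minimal sketch of how leaderboard-style metrics could be computed from per-document results. It is not Vectara's code. The `SummaryResult` record, the `leaderboard_metrics` helper, and the 0.5 score threshold for counting a summary as hallucinated are all illustrative assumptions. The relationships it encodes are that the factual consistency rate is 100 minus the hallucination rate, and that the response rate is the share of documents for which the model actually produced a summary.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SummaryResult:
    """Hypothetical per-document record (an assumption, not Vectara's format)."""
    summary: Optional[str]              # None if the model declined to summarize
    consistency_score: Optional[float]  # evaluator score in [0, 1], e.g. from HHEM


def leaderboard_metrics(results: List[SummaryResult], threshold: float = 0.5) -> dict:
    """Compute leaderboard-style metrics; the 0.5 threshold is an assumption."""
    if not results:
        raise ValueError("no results to score")
    # Response rate: share of documents for which a summary was produced.
    answered = [r for r in results if r.summary is not None]
    response_rate = 100.0 * len(answered) / len(results)
    if not answered:
        raise ValueError("model produced no summaries")
    # A summary counts as hallucinated if its consistency score is below threshold.
    hallucinated = sum(1 for r in answered if r.consistency_score < threshold)
    hallucination_rate = 100.0 * hallucinated / len(answered)
    # Factual consistency rate is the complement of the hallucination rate.
    factual_consistency_rate = 100.0 - hallucination_rate
    # Average summary length, measured in whitespace-separated words.
    avg_len = sum(len(r.summary.split()) for r in answered) / len(answered)
    return {
        "hallucination_rate": hallucination_rate,
        "factual_consistency_rate": factual_consistency_rate,
        "response_rate": response_rate,
        "avg_summary_length_words": avg_len,
    }


if __name__ == "__main__":
    demo = [
        SummaryResult("a b c", 0.9),
        SummaryResult("a b", 0.3),   # below threshold: counted as hallucinated
        SummaryResult("a b c d", 0.8),
        SummaryResult(None, None),   # model declined this document
    ]
    print(leaderboard_metrics(demo))
```

In this toy example, three of four documents are answered (a 75% response rate), and one of those three summaries falls below the threshold, so the hallucination rate is about 33.3% and the factual consistency rate about 66.7%.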

Leaderboard: https://github.com/vectara/hallucination-leaderboard