Do you find that image models trained on massive amounts of data are still as slow as a snail climbing a tree when generating high-quality pictures? Don’t worry: Luma AI recently open-sourced an image model pre-training technique called Inductive Moment Matching (IMM). It reportedly lets a model generate high-quality images at an unprecedented "lightning" speed, like bolting a turbocharger onto the alchemy furnace of model training!

In recent years, the AI community has generally felt that generative pre-training has hit a bottleneck. Although the amount of data keeps rising, algorithmic innovation has been relatively stagnant. Luma AI believes the problem is not insufficient data but algorithms that fail to fully tap the data's potential. It is like sitting on a gold mine but digging with only a hoe: the efficiency is worrying.

To break through this "algorithm ceiling", Luma AI has turned its attention to efficient inference-time compute scaling. They believe that instead of endlessly competing to scale up model capacity, it is better to think about how to speed things up at the inference stage. And so IMM, the "speed player", came into being!

So, what is unique about the IMM technology that can achieve such an amazing speedup?

The key is that it designs the pre-training algorithm backwards, starting from inference efficiency. Traditional diffusion models are like meticulous artists who make fine adjustments step by step. Even when the model is powerful, it needs many steps to reach its best results. IMM is different. It is like a painter with a "teleportation" skill. During inference, the network looks not only at the current time step but also at the "target time step".

You can picture a traditional diffusion model generating an image as feeling its way forward step by step through a maze. And IMM? It sees the exit of the maze directly and can "jump" toward it more flexibly, greatly reducing the number of steps required. This clever design makes every iteration more expressive and no longer limited by linear interpolation.
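To make the "current time step plus target time step" idea concrete, here is a minimal sketch of a few-step sampling loop. It is not Luma AI's code: `toy_jump` is a hypothetical stand-in for a trained network, and it assumes a toy setting where every data point equals `data_value` and noisy samples follow `x_t = (1 - t) * data_value + t * noise`, so the jump can be written in closed form. Only the calling pattern, a function of the sample, the current time `t`, and the target time `s`, reflects the description above.

```python
import random


def toy_jump(x_t, t, s, data_value=2.0):
    """Hypothetical stand-in for a trained network f(x_t, t, s).

    Assumption: all data equals data_value and
    x_t = (1 - t) * data_value + t * noise, so the jump is exact.
    """
    noise = (x_t - (1.0 - t) * data_value) / t  # recover the noise inside x_t
    return (1.0 - s) * data_value + s * noise   # rebuild the sample at time s


def sample(jump, times, seed=0):
    """Start from pure noise at times[0] and jump through the schedule."""
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)
    for t, s in zip(times[:-1], times[1:]):
        # The model receives both the current time t and the target time s.
        x = jump(x, t, s)
    return x


one_step = sample(toy_jump, [1.0, 0.0])
four_steps = sample(toy_jump, [1.0, 0.75, 0.5, 0.25, 0.0])
print(one_step, four_steps)
```

In this toy case a single jump from `t = 1.0` to `s = 0.0` lands on the same value as the four-step schedule, which is the behavior a model trained for few-step sampling tries to approximate on real data.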

Even more commendable, IMM builds on a mature moment matching technique, maximum mean discrepancy (MMD), which is like adding an accurate navigation system to the "jump" so that the model moves reliably toward high-quality targets.
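For readers unfamiliar with maximum mean discrepancy, the sketch below estimates squared MMD between two sets of 1-D samples with a Gaussian kernel. It illustrates the general statistic only, not IMM's training objective. The kernel choice, the bandwidth, and the sample sizes are assumptions made for the example.

```python
import math
import random


def rbf(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-((x - y) ** 2) / (2.0 * bandwidth ** 2))


def mean_kernel(xs, ys, bandwidth=1.0):
    """Average kernel value over all pairs (x, y)."""
    total = sum(rbf(x, y, bandwidth) for x in xs for y in ys)
    return total / (len(xs) * len(ys))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples."""
    return (
        mean_kernel(xs, xs, bandwidth)
        + mean_kernel(ys, ys, bandwidth)
        - 2.0 * mean_kernel(xs, ys, bandwidth)
    )


rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(200)]
close = [rng.gauss(0.0, 1.0) for _ in range(200)]  # same distribution as real
far = [rng.gauss(3.0, 1.0) for _ in range(200)]    # shifted distribution

mmd_close = mmd_squared(real, close)
mmd_far = mmd_squared(real, far)
print(mmd_close, mmd_far)
```

The estimate is near zero when the two sample sets come from the same distribution and grows as the distributions move apart, which is what makes it usable as a signal for matching generated samples to a target.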

Practice is the only criterion for testing truth. Luma AI has proved the strength of IMM with a series of experiments:

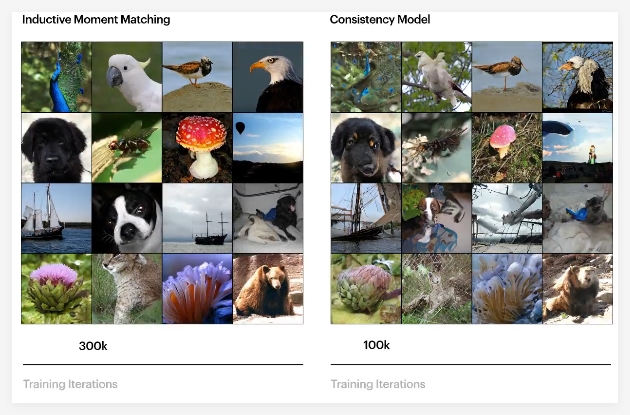

Beyond its speed, IMM also performs well in training stability. By contrast, Consistency Models are prone to instability during pre-training and require special hyperparameter design. IMM is more "worry-free" and trains stably across a variety of hyperparameters and model architectures.

It is worth noting that IMM does not rely on the denoising score matching or score-based stochastic differential equations that diffusion models depend on. Luma AI believes the real breakthrough lies not only in moment matching itself but also in their inference-first perspective. This way of thinking let them identify the limitations of existing pre-training paradigms and design new algorithms that overcome them.

Luma AI is confident about the future of IMM. They believe this is just the beginning, heralding a new paradigm on the way to multimodal foundation models that go beyond existing boundaries, and they hope to fully unleash the potential of creative intelligence.

GitHub repository: https://github.com/lumalabs/imm