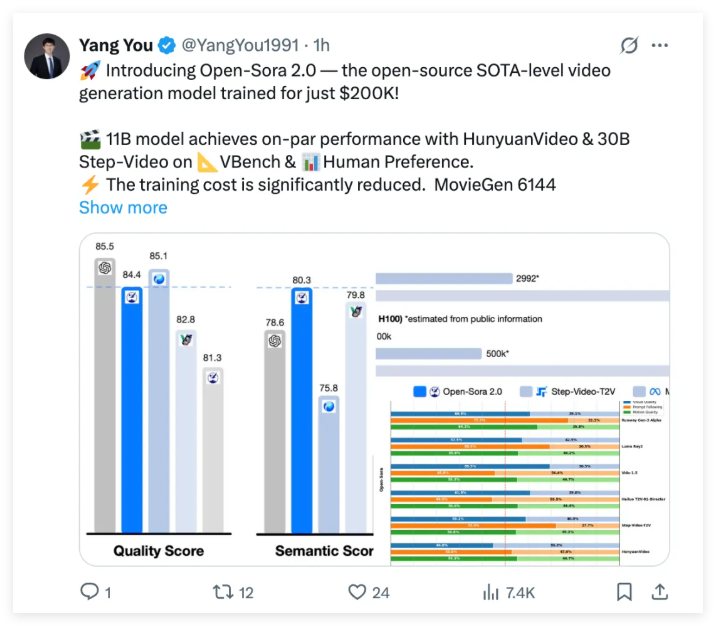

Have you heard of OpenAI's jaw-dropping Sora? With a training cost in the hundreds of millions of dollars, it is the "Rolls-Royce" of the video generation industry. Now Luchen Technology has announced the open-source release of its video generation model, Open-Sora 2.0!

For only $200,000 (an investment corresponding to 224 GPUs), the team successfully trained a commercial-grade video generation model with 11 billion parameters.

Performance catching up with "OpenAI Sora"

Open-Sora 2.0 may be cheap to train, but it is no lightweight: it takes on industry benchmarks such as HunyuanVideo and the 30-billion-parameter Step-Video. Its results on the authoritative VBench benchmark and in user preference tests are impressive, matching closed-source models that cost millions of dollars to train on many key metrics.

Even more exciting, on VBench the performance gap between Open-Sora and OpenAI Sora has narrowed from 4.52% with the previous version to just 0.69% for Open-Sora 2.0, putting the two nearly on par overall!

Moreover, Open-Sora 2.0's VBench score even surpasses Tencent's HunyuanVideo. As the Chinese saying goes, "the waves of the Yangtze River push on those ahead": it achieves higher performance at lower cost, setting a new benchmark for open-source video generation!

In user preference evaluations covering three key dimensions (visual quality, text consistency, and motion quality), Open-Sora 2.0 outperforms both the open-source SOTA model HunyuanVideo and the commercial model Runway Gen-3 Alpha on at least two of the three.

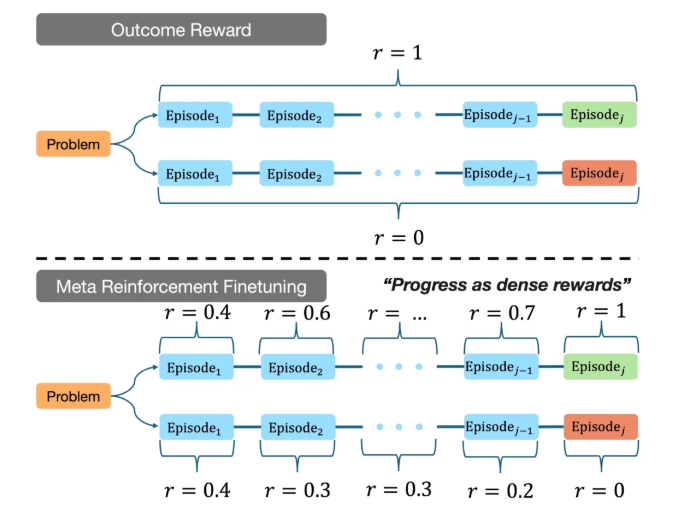

You may well be wondering how Open-Sora 2.0 achieves such high performance at such low cost. Several secret weapons are behind it. First, in its model architecture the Open-Sora team carried forward the design of Open-Sora 1.2, keeping the 3D autoencoder and the Flow Matching training framework. In addition, they introduced a 3D full attention mechanism to further improve the quality of the generated video.
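For readers new to Flow Matching, here is a minimal sketch of the idea in its common "rectified flow" form: training pairs a clean sample x0 with Gaussian noise x1, picks a time t, and asks the model to predict the constant velocity x1 - x0 along the straight line between them; generation then integrates that velocity from noise back to data. This is a generic illustration under those assumptions, not Open-Sora's actual training code; the function names, the linear path, and the toy "oracle" model are all hypothetical, and plain Python lists stand in for video latents.

```python
import random


def interpolate(x0, x1, t):
    """Point on the straight path from data x0 (t=0) to noise x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    """Velocity along the straight path: d(x_t)/dt = x1 - x0 (constant in t)."""
    return [b - a for a, b in zip(x0, x1)]


def flow_matching_loss(model, x0, rng):
    """One Monte Carlo sample of the flow-matching MSE loss for a single example."""
    x1 = [rng.gauss(0.0, 1.0) for _ in x0]  # Gaussian noise sample
    t = rng.random()                        # time drawn uniformly from [0, 1)
    xt = interpolate(x0, x1, t)
    v_pred = model(xt, t)                   # hypothetical model: (x_t, t) -> velocity
    v_true = target_velocity(x0, x1)
    return sum((p - q) ** 2 for p, q in zip(v_pred, v_true)) / len(x0)


def euler_sample(velocity_fn, x1, steps):
    """Integrate dx/dt = v(x, t) with Euler steps from t=1 (noise) to t=0 (data)."""
    x, dt = list(x1), 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        v = velocity_fn(x, t)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.5, -1.0, 2.0]  # toy "data", e.g. a tiny latent vector
    x1 = [rng.gauss(0.0, 1.0) for _ in x0]
    # An ideal model for this one pair returns exactly x1 - x0, so the
    # straight-line path lets even 4 Euler steps land back on x0.
    oracle = lambda x, t: target_velocity(x0, x1)
    print(euler_sample(oracle, x1, steps=4))
    # A model that always predicts zero velocity incurs a positive loss.
    print(flow_matching_loss(lambda x, t: [0.0] * len(x), x0, rng))
```

The straight-line path is what makes few-step sampling attractive: when the learned velocity field is close to the ideal one, coarse Euler steps stay accurate.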

To push costs down as far as possible, Open-Sora 2.0 optimizes on several fronts.

By some estimates, a single training run of an open-source video model with more than 10B parameters often costs millions of dollars, while Open-Sora 2.0 cuts that cost by a factor of 5 to 10. This is a real boon for the field, giving many more people the opportunity to take part in research and development on high-quality video generation.

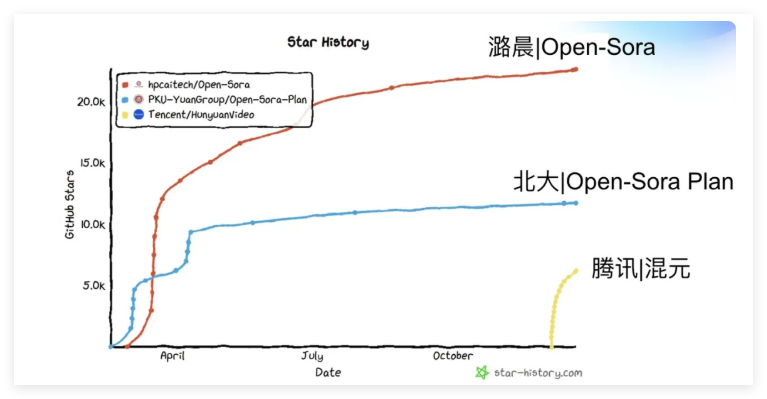

Even more commendable, Open-Sora open-sources not only the model code and weights but also the full training pipeline code, which should give the entire open-source ecosystem a major boost. According to statistics from third-party technology platforms, the Open-Sora academic paper received nearly 100 citations within half a year and ranks first in a global open-source influence ranking, making Open-Sora one of the world's most influential open-source video generation projects.

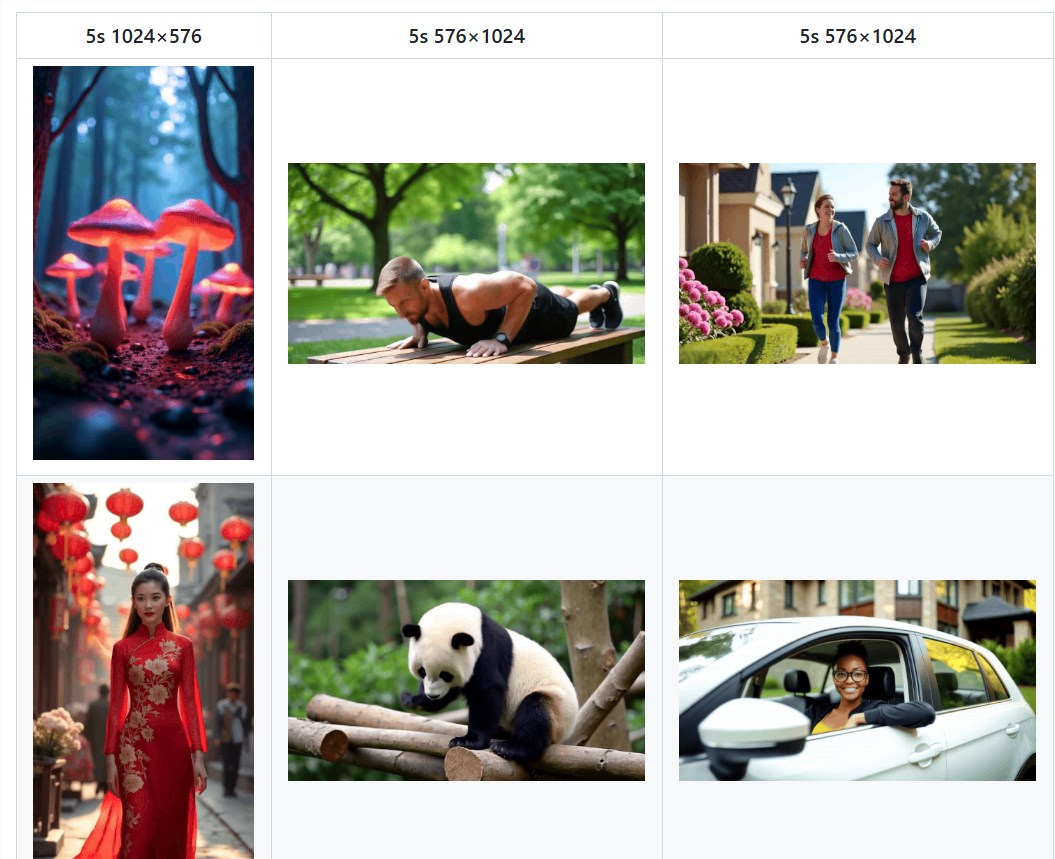

The Open-Sora team is also actively exploring high-compression-ratio video autoencoders to significantly reduce inference costs. They trained a video autoencoder with a high compression ratio (4×32×32) that shortens the time to generate a 768px, 5-second video on a single GPU from nearly 30 minutes to under 3 minutes, a 10× speedup! This means high-quality video content can be generated much faster in the future.
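A rough calculation shows why a 4×32×32 autoencoder matters for a model that uses 3D full attention: every latent position attends to every other one, so the number of attention pairs grows with the square of the latent count. The sketch below is illustrative arithmetic only. The 120-frame (5 s at an assumed 24 fps), 768×768 video, the 4×8×8 baseline compression, and the simple ceiling-division shape rule are all assumptions, not figures from the article.

```python
def latent_shape(video_thw, compression_thw):
    """Latent grid (T, H, W) after downsampling by the given factors.

    Assumption: plain ceiling division per axis; real causal video
    autoencoders often treat the first frame specially.
    """
    return tuple(-(-n // f) for n, f in zip(video_thw, compression_thw))


def num_positions(shape):
    """Number of latent positions in a (T, H, W) grid."""
    t, h, w = shape
    return t * h * w


if __name__ == "__main__":
    video = (120, 768, 768)                     # hypothetical: 5 s at 24 fps, 768x768
    high = latent_shape(video, (4, 32, 32))     # (30, 24, 24)
    base = latent_shape(video, (4, 8, 8))       # (30, 96, 96), assumed baseline
    ratio = num_positions(base) / num_positions(high)
    print(ratio)       # 16.0 times fewer latent positions
    print(ratio ** 2)  # 256.0 times fewer pairs for full 3D attention
```

This naive ratio is far larger than the roughly 10× speedup the article reports, and it should not be read as a prediction of that figure: the baseline here is assumed, a transformer may further patchify latents, and attention is only part of total inference cost.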

With its low cost, high performance, and fully open-source release, Luchen Technology's Open-Sora 2.0 brings a strong wave of democratization to the video generation field. It not only narrows the gap with top closed-source models but also lowers the barrier to high-quality video generation, allowing more developers to take part and jointly advance the technology.

GitHub open-source repository: https://github.com/hpcaitech/Open-Sora

Technical report: https://github.com/hpcaitech/Open-Sora-Demo/blob/main/paper/Open_Sora_2_tech_report.pdf