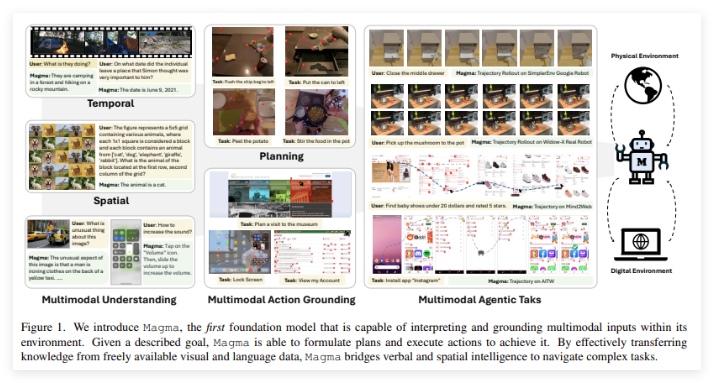

Recently, Microsoft research teams joined forces with researchers from multiple universities to release a multimodal AI model called "Magma". This model is designed to process and integrate multiple data types, including images, text and video, to perform complex tasks in digital and physical environments. With the continuous advancement of technology, multimodal AI agents are being widely used in robotics, virtual assistants and user interface automation.

Previous AI systems usually focused on vision-language understanding or robotic operation, and it was difficult to combine these two capabilities into a unified model. Although many existing models perform well in specific fields, they have poor generalization capabilities in different application scenarios. For example, Pix2Act and WebGUM models perform well in UI navigation, while OpenVLA and RT-2 are more suitable for robotic manipulation, but they often require training separately and are difficult to cross the boundaries between digital and physical environments.

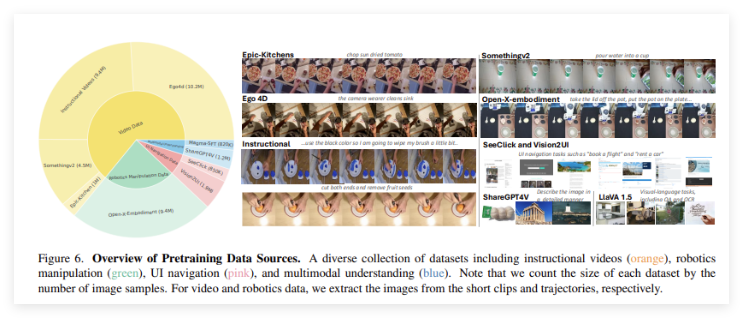

The launch of the “Magma” model is precisely to overcome these limitations. It integrates multimodal understanding, action positioning and planning capabilities by introducing a powerful training method to enable AI agents to operate seamlessly in a variety of environments. Magma's training dataset contains 39 million samples, including images, videos, and robot motion trajectories. In addition, the model adopts two innovative technologies: Set-of-Mark (SoM) and Trace-of-Mark (ToM). The former enables the model to mark actionable visual objects in the UI environment, while the latter enables it to track the movement of objects over time and improves the planning capabilities of future actions.

“Magma” adopts advanced deep learning architecture and large-scale pre-training techniques to optimize its performance in multiple fields. The model uses the ConvNeXt-XXL visual backbone to process images and videos, and the LLaMA-3-8B language model handles text input. This architecture enables "Magma" to efficiently integrate vision, language and action execution. After comprehensive training, the model has achieved excellent results on multiple tasks, showing strong multimodal understanding and spatial reasoning capabilities.

Project portal: https://microsoft.github.io/Magma/