Large language models (LLMs) are now widely used in modern artificial intelligence applications, and tools such as chatbots and code generators depend on their capabilities. As adoption grows, however, the efficiency of the inference process has become an increasingly prominent problem.

Existing attention implementations, such as FlashAttention and SparseAttention, often fall short when faced with diverse workloads, dynamic input patterns, and limited GPU resources. These challenges, together with high latency and memory bottlenecks, create an urgent need for more efficient and flexible solutions that support scalable, responsive LLM inference.

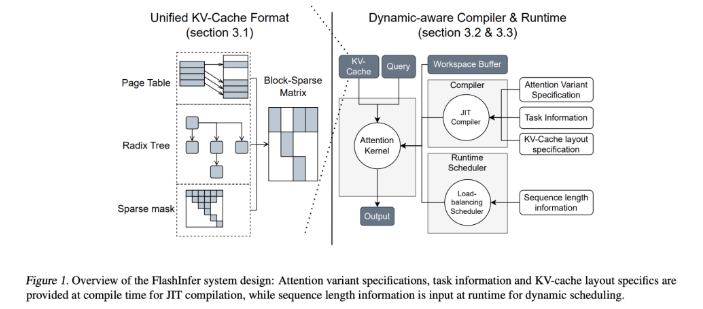

To address this problem, researchers from the University of Washington, NVIDIA, Perplexity AI, and Carnegie Mellon University jointly developed FlashInfer, an AI library and kernel generator designed specifically for LLM inference. FlashInfer provides high-performance GPU kernel implementations for multiple attention mechanisms, including FlashAttention, SparseAttention, and PagedAttention, as well as for sampling. Its design emphasizes flexibility and efficiency and targets the key challenges of serving LLMs.
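
To make the PagedAttention idea more concrete, here is a minimal pure-Python sketch under a toy layout assumption: the KV cache is a shared pool of fixed-size pages, each request keeps a page table listing which pages hold its tokens, and a single-query decode step gathers keys and values through that table before running ordinary scaled dot-product attention. The names `PagedKVCache`, `append`, `gather`, and `decode_attention` are hypothetical illustrations, not FlashInfer's API, and real kernels operate on GPU tensors rather than Python lists.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


class PagedKVCache:
    """Toy paged KV cache (hypothetical; not FlashInfer's API).

    Keys and values live in a shared pool of fixed-size pages; each request
    keeps a page table listing, in order, the pages that hold its tokens.
    """

    def __init__(self, page_size: int) -> None:
        self.page_size = page_size
        self.k_pages: List[List[Vector]] = []  # pool of key pages
        self.v_pages: List[List[Vector]] = []  # pool of value pages
        self.page_table: Dict[str, List[int]] = {}  # request id -> page indices
        self.length: Dict[str, int] = {}  # request id -> cached token count

    def append(self, req: str, k: Vector, v: Vector) -> None:
        """Append one token's key/value, allocating a page when the last one is full."""
        pages = self.page_table.setdefault(req, [])
        n = self.length.get(req, 0)
        if n % self.page_size == 0:  # no page yet, or the last page is full
            self.k_pages.append([])
            self.v_pages.append([])
            pages.append(len(self.k_pages) - 1)
        self.k_pages[pages[-1]].append(k)
        self.v_pages[pages[-1]].append(v)
        self.length[req] = n + 1

    def gather(self, req: str) -> Tuple[List[Vector], List[Vector]]:
        """Walk the page table and return this request's keys and values in order."""
        keys: List[Vector] = []
        values: List[Vector] = []
        for p in self.page_table[req]:
            keys.extend(self.k_pages[p])
            values.extend(self.v_pages[p])
        return keys, values


def decode_attention(q: Vector, cache: PagedKVCache, req: str) -> Vector:
    """Single-query (decode-step) scaled dot-product attention over a paged cache."""
    keys, values = cache.gather(req)
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    m = max(scores)  # subtract the max score for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


if __name__ == "__main__":
    cache = PagedKVCache(page_size=2)
    cache.append("a", [1.0, 0.0], [2.0, 0.0])
    cache.append("b", [0.0, 1.0], [9.0, 9.0])  # an interleaved second request
    cache.append("a", [1.0, 0.0], [4.0, 0.0])
    cache.append("a", [1.0, 0.0], [6.0, 0.0])
    print(cache.page_table)  # {'a': [0, 2], 'b': [1]}
    print(decode_attention([1.0, 0.0], cache, "a"))  # [4.0, 0.0]
```

The usage shows the point of paging: request `a` ends up on non-contiguous pages 0 and 2 because request `b` claimed page 1 in between, yet attention still sees `a`'s tokens in order through its page table, so memory can be allocated in small blocks instead of one large contiguous region per request.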

FlashInfer’s technical features include:

1. Comprehensive attention kernels: FlashInfer supports multiple attention variants, including prefill, decode, and append attention, is compatible with various KV-cache formats, and improves performance in both single-request and batch serving scenarios.

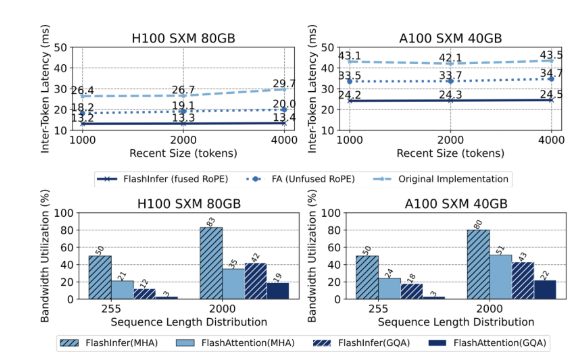

2. Optimized shared-prefix decoding: using grouped-query attention (GQA) and fused rotary position embedding (RoPE) attention, FlashInfer achieves significant speedups; for long-prompt decoding, for example, it is 31 times faster than vLLM's PagedAttention implementation.

3. Dynamic load-balanced scheduling: FlashInfer's scheduler adapts to changes in the input, reducing GPU idle time and keeping utilization high. Its compatibility with CUDA Graphs further improves its suitability for production environments.
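
The shared-prefix decoding in item 2 relies on not redoing work for tokens that many requests have in common. One general way to do this is to compute attention over the shared prefix and over each request's unique suffix as separate partial results, then combine them exactly using each part's log-sum-exp of attention scores. The sketch below shows only that combination step, assuming each partial result is stored as an (output, log-sum-exp) pair; `partial_attention` and `merge_states` are hypothetical names, and GQA, RoPE, and the memory-traffic savings of a real GPU kernel are not modeled.

```python
import math
from typing import List, Tuple

Vector = List[float]
State = Tuple[Vector, float]  # (normalized attention output, log-sum-exp of scores)


def partial_attention(q: Vector, keys: List[Vector], values: List[Vector]) -> State:
    """Attention of one query over a (non-empty) subset of keys, plus its log-sum-exp."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)  # stabilize the exponentials
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    out = [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]
    return out, m + math.log(total)


def merge_states(a: State, b: State) -> State:
    """Exactly combine two partial attention results computed over disjoint key sets.

    Each output is re-weighted by its share of the total softmax mass,
    exp(lse_a) and exp(lse_b), computed relative to the larger LSE for stability.
    """
    (out_a, lse_a), (out_b, lse_b) = a, b
    m = max(lse_a, lse_b)
    wa, wb = math.exp(lse_a - m), math.exp(lse_b - m)
    out = [(wa * x + wb * y) / (wa + wb) for x, y in zip(out_a, out_b)]
    return out, m + math.log(wa + wb)


if __name__ == "__main__":
    # Shared prefix KV (stored once) and a unique suffix per request.
    prefix_k, prefix_v = [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]]
    requests = {
        "r1": ([0.5, -0.5], [[1.0, 1.0]], [[5.0, 6.0]]),
        "r2": ([-1.0, 2.0], [[0.0, -1.0], [2.0, 0.0]], [[7.0, 8.0], [0.0, 1.0]]),
    }
    for name, (q, suf_k, suf_v) in requests.items():
        merged, _ = merge_states(partial_attention(q, prefix_k, prefix_v),
                                 partial_attention(q, suf_k, suf_v))
        full, _ = partial_attention(q, prefix_k + suf_k, prefix_v + suf_v)
        # The merged result matches attention over the full sequence.
        print(name, all(abs(x - y) < 1e-9 for x, y in zip(merged, full)))
```

Because the merge is exact, a kernel is free to process the shared prefix for a whole group of requests in one pass (reading its KV entries from memory once) and handle only the short suffixes per request.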

In benchmarks, FlashInfer substantially reduces latency and excels particularly at long-context inference and parallel generation. On NVIDIA H100 GPUs, it achieves a 13-17% speedup on parallel generation tasks. Its dynamic scheduler and optimized kernels markedly improve bandwidth and FLOP utilization for both uneven and uniform sequence lengths.
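
Why scheduling matters for uneven sequence lengths can be illustrated with a toy model. The sketch below assumes a fixed number of workers (standing in for GPU streaming multiprocessors) and a hypothetical `max_chunk` size. It compares giving each request to one worker against splitting long KV sequences into chunks and assigning them largest-first to the least-loaded worker. This is a generic greedy heuristic, not FlashInfer's actual scheduler; in practice the partial results of a split request must also be merged afterwards, for example with a log-sum-exp combination.

```python
import heapq
from typing import Dict, List, Tuple

Chunk = Tuple[int, int, int]  # (request index, start token, end token)


def naive_schedule(kv_lens: List[int], num_workers: int) -> List[int]:
    """One whole request per worker, round-robin; returns per-worker load in tokens."""
    loads = [0] * num_workers
    for i, n in enumerate(kv_lens):
        loads[i % num_workers] += n
    return loads


def balanced_schedule(
    kv_lens: List[int], num_workers: int, max_chunk: int
) -> Tuple[List[int], Dict[int, List[Chunk]]]:
    """Split KV sequences into chunks of at most `max_chunk` tokens, then assign
    chunks largest-first to whichever worker currently has the least work."""
    chunks: List[Chunk] = [
        (i, start, min(start + max_chunk, n))
        for i, n in enumerate(kv_lens)
        for start in range(0, n, max_chunk)
    ]
    chunks.sort(key=lambda c: c[2] - c[1], reverse=True)
    heap = [(0, w) for w in range(num_workers)]  # (current load, worker id)
    assignment: Dict[int, List[Chunk]] = {w: [] for w in range(num_workers)}
    for chunk in chunks:
        load, w = heapq.heappop(heap)  # least-loaded worker
        assignment[w].append(chunk)
        heapq.heappush(heap, (load + chunk[2] - chunk[1], w))
    loads = [sum(end - start for _, start, end in assignment[w]) for w in range(num_workers)]
    return loads, assignment


if __name__ == "__main__":
    kv_lens = [4096, 128, 256, 64]  # one long request and several short ones
    print(max(naive_schedule(kv_lens, 4)))  # 4096
    loads, _ = balanced_schedule(kv_lens, 4, max_chunk=512)
    print(loads, max(loads))  # [1280, 1152, 1088, 1024] 1280
```

In this example the naive schedule leaves three workers mostly idle while one processes all 4,096 tokens of the long request, whereas chunking cuts the busiest worker's load to 1,280 tokens, which is the kind of idle time a load-balancing scheduler aims to remove.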

FlashInfer offers a practical, efficient answer to LLM inference challenges, substantially improving performance and resource utilization. Its flexible design and ease of integration make it an important tool for advancing LLM serving frameworks. As an open-source project, FlashInfer invites further collaboration and innovation from the research community, supporting continued improvement and adaptation to emerging challenges in AI infrastructure.

Project repository: https://github.com/flashinfer-ai/flashinfer