The Allen Institute for Artificial Intelligence (AI2) recently released its latest large language model, OLMo 2 32B. The model is "completely open": AI2 has published its data, code, weights, and training process, in sharp contrast to closed-source models. AI2 hopes this openness will encourage collaborative innovation among researchers worldwide.

With 32 billion parameters, OLMo 2 32B performs strongly on multiple academic benchmarks, even surpassing GPT-3.5 Turbo and GPT-4o mini. Training is divided into two stages: pre-training and mid-training. It draws on a broad dataset of about 3.9 trillion tokens and on the Dolmino dataset, which focuses on high-quality content, to give the model solid language skills.

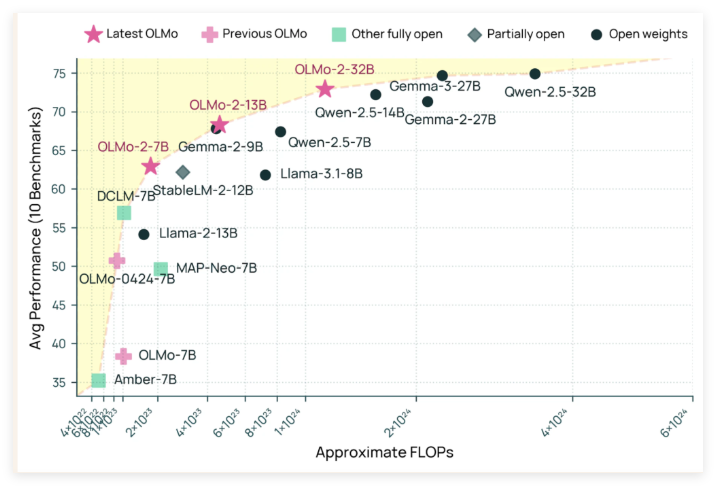

OLMo 2 32B is also efficient to train. It reached performance comparable to leading models while using only about one-third of the computing resources, reflecting AI2's investment in resource-efficient AI development.

The launch of OLMo 2 32B marks an important milestone for open and accessible AI, giving researchers and developers around the world a powerful open-source tool to drive progress in artificial intelligence.

GitHub: https://github.com/allenai/OLMo-core

Hugging Face: https://huggingface.co/allenai/OLMo-2-0325-32B-Instruct
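
For readers who want to try the released weights, here is a minimal sketch of loading the Instruct checkpoint linked above with the Hugging Face `transformers` library. It is an illustration under assumptions, not AI2's official recipe: it assumes recent versions of `transformers`, `torch`, and `accelerate` are installed and that enough GPU memory is available. The helper names (`estimate_weight_memory_gb`, `build_messages`, `generate_reply`) are hypothetical and exist only for this example.

```python
# Model ID taken from the Hugging Face link in this article.
MODEL_ID = "allenai/OLMo-2-0325-32B-Instruct"


def estimate_weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Rough memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Ignores activations, the KV cache, and framework overhead.
    """
    return num_params * bytes_per_param / 1e9


def build_messages(user_prompt: str) -> list[dict]:
    """Wrap a prompt in the chat-message format used by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt: str, max_new_tokens: int = 128) -> str:
    """Download the model (tens of GB) and generate one reply."""
    # Imported lazily so the helpers above work without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,  # assumption: bf16 to halve memory vs. fp32
        device_map="auto",           # requires `accelerate`; spreads layers across devices
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)


if __name__ == "__main__":
    # 32 billion parameters at 2 bytes each (bf16).
    print(f"Approx. weight memory: {estimate_weight_memory_gb(32e9):.0f} GB")
    print(generate_reply("Summarize what makes an open language model 'fully open'."))
```

The memory estimate is a back-of-the-envelope figure for the weights only; actual requirements are higher once activations and the KV cache are included, so quantization or multi-GPU sharding may be needed in practice.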