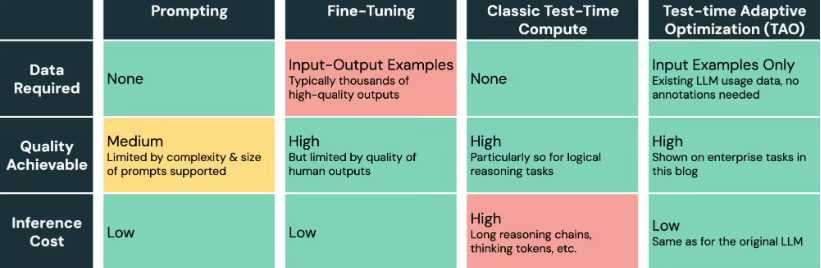

Data intelligence company Databricks recently launched TAO (Test-time Adaptive Optimization), a new method for fine-tuning large language models, and the technique is promising news for open source models. By relying on unlabeled data and reinforcement learning, TAO removes the need for human-annotated training data, which lowers costs for enterprises, and it posts strong results on a series of benchmarks.

According to tech outlet Neowin, a Llama 3.3 70B model fine-tuned with TAO outperforms traditional fine-tuning on labeled data in tasks such as financial document Q&A and SQL generation, and even approaches OpenAI's top closed-source models. The result marks another step forward for open source models competing with commercial AI products.

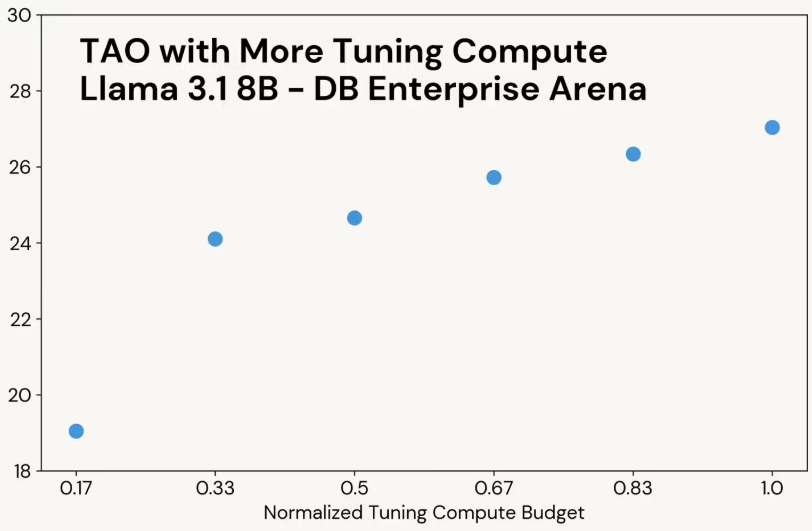

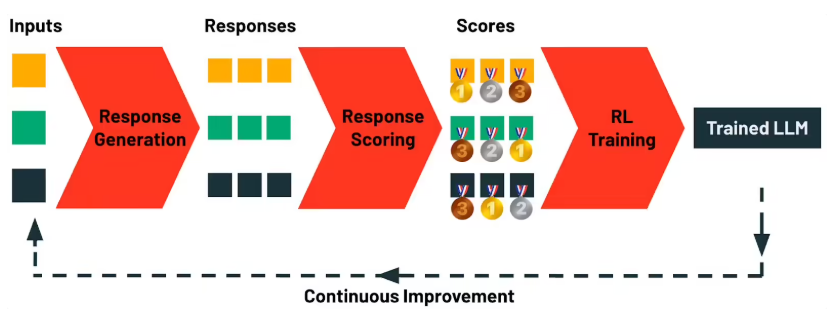

At the core of TAO is the idea of test-time compute: the method spends extra compute to automatically explore varied responses to each task, then uses reinforcement learning to optimize the model, avoiding the manual annotation costs of traditional fine-tuning. The TAO-tuned Llama model posts strong results on several enterprise benchmarks:

- On FinanceBench, the model scored 85.1 on the 7,200 SEC document Q&A set, ahead of traditional fine-tuning on labeled data (81.1) and OpenAI's o3-mini (82.2).

- On BIRD-SQL, the TAO-tuned Llama model scored 56.1, close to GPT-4o's 58.1 and ahead of traditional fine-tuning on labeled data (54.9).

- On DB Enterprise Arena, the TAO model scored 47.2, below GPT-4o's 53.8 but still competitive.
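
The idea described before the benchmark list, spending extra compute to explore candidate responses for unlabeled prompts and letting an automatic score stand in for human labels, can be illustrated with a toy sketch. This is not Databricks' implementation: it substitutes simple best-of-N selection for the reinforcement learning step, and `select_best_responses`, `toy_generate`, and `toy_score` are hypothetical names invented for this example. A real pipeline would sample from a language model and score with a learned reward model.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical signatures for this sketch, not a Databricks API.
Generator = Callable[[str, random.Random], str]  # (prompt, rng) -> candidate response
Scorer = Callable[[str, str], float]             # (prompt, response) -> score


def select_best_responses(
    prompts: List[str],
    generate: Generator,
    score: Scorer,
    n_candidates: int = 4,
    seed: int = 0,
) -> List[Tuple[str, str, float]]:
    """For each unlabeled prompt, sample candidates and keep the top-scoring one."""
    if n_candidates < 1:
        raise ValueError("n_candidates must be at least 1")
    rng = random.Random(seed)
    selected: List[Tuple[str, str, float]] = []
    for prompt in prompts:
        # Exploration: spend extra compute sampling several candidate responses.
        candidates = [generate(prompt, rng) for _ in range(n_candidates)]
        # Scoring: an automatic scorer takes the place of human annotation.
        scored = [(score(prompt, c), c) for c in candidates]
        best_score, best = max(scored, key=lambda pair: pair[0])
        selected.append((prompt, best, best_score))
    return selected


def toy_generate(prompt: str, rng: random.Random) -> str:
    """Toy stand-in for sampling a response from a language model."""
    detail = rng.choice(["", " with units", " with units and a cited filing"])
    return f"Answer to '{prompt}'{detail}"


def toy_score(prompt: str, response: str) -> float:
    """Toy stand-in for a reward model: longer answers score higher."""
    return float(len(response))


if __name__ == "__main__":
    prompts = ["What was 2022 revenue?", "List all overdue invoices"]
    for prompt, best, best_score in select_best_responses(prompts, toy_generate, toy_score):
        print(f"{best_score:5.1f}  {best}")
```

The selected (prompt, response) pairs are the kind of signal a training step could then learn from. No human-written labels appear anywhere in the loop, which is the cost saving the article attributes to TAO.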

TAO also gives open source models a path to keep improving: as usage grows, the model can optimize itself using the feedback data that usage generates. Databricks has started a private preview on Llama models, and companies can apply to participate.

The launch is both a breakthrough for open source AI and a signpost for where large language model development is heading. As more enterprises take part, TAO is expected to further lift the performance of open source models and expand their potential in commercial applications.