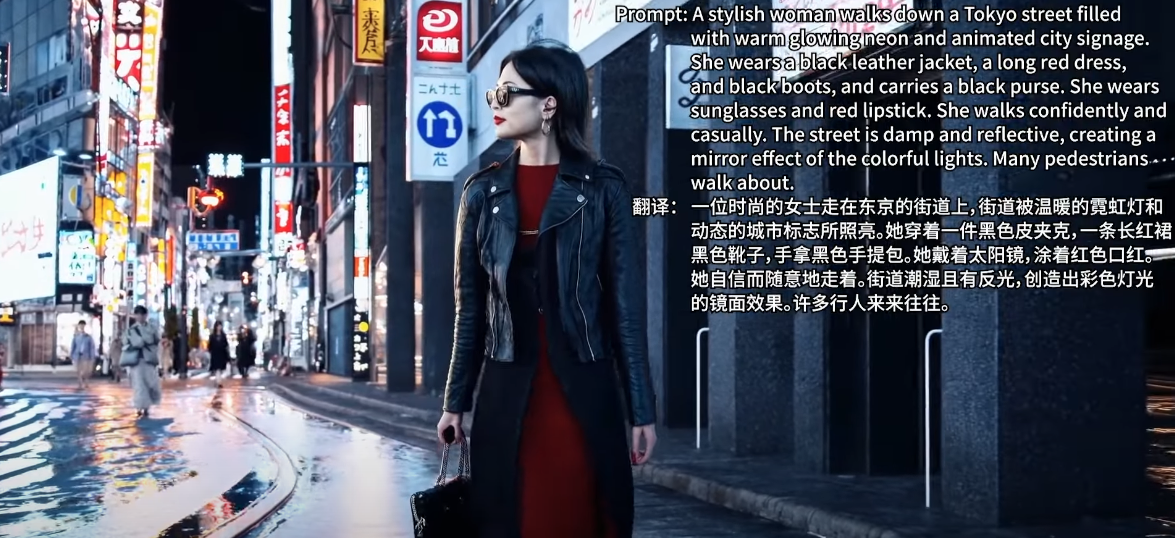

OpenAI has released Sora, a video generation model, and the news set the AI community abuzz. Sora generates high-quality videos from simple text prompts, producing clips up to one minute long with very good stability and character consistency. In one of the demo videos, the camera cuts to a new shot at the 13-second mark, and that cut was produced in a single generation, not spliced together afterward. I was struck by how well Sora handles character details: face shape, facial features, hairstyle, glasses and earrings are all rendered almost flawlessly.

I am usually cautious about covering new technologies or products, because there are so many hype accounts out there. Whenever something new is released, they rush to promote it, calling it a "technological revolution" or "the biggest bombshell of the year", as if the world were about to end. So I usually wait a few days before making a video, to avoid causing everyone unnecessary anxiety. To be honest, this habit is also partly because I'm a little lazy, which is probably why my videos get only average views. But Sora really is different. Although it has not been officially launched yet, and it is still built on the familiar Transformer architecture rather than something entirely new, it is worth a video. After all, it can fairly be called a milestone in AI video generation.

At present, the only way to try Sora is to leave a prompt in the replies to OpenAI CEO Sam Altman's posts on Twitter, where a few are occasionally selected and generated. Official information about Sora comes from two pages: Sora's homepage and its research page. On the homepage, OpenAI states clearly that Sora is a text-to-video model and that all the videos shown were generated directly by Sora without any post-processing, including editing or splicing. Knowing this gave me a deeper appreciation of the videos. For example, the camera switch at 13 seconds genuinely shocked me, because it was not added in post-production but produced by Sora in one pass.

Sora's ultimate goal is to build a "world model", an extremely ambitious aim. From reading the available material, I found that Sora does not rely on an image generation model the way other AI video tools do; instead, it can simulate real-world physical motion in video. Traditional AI video generation often works by inserting preset motions or skeleton poses between frames. The result can look fairly natural, but it is essentially a transformation of single images and carries no physical information. Sora, by contrast, not only generates video but also models the physical motion within it, which makes the results look more realistic.

Interestingly, OpenAI's research report notes that Sora was not given any explicit physical information during training; these abilities emerged from training on large amounts of data. In other words, Sora's performance is a case of "brute force working miracles", which also helps explain the natural physical motion in its videos. Sora can also generate videos up to one minute long, which sets it apart from other AI video products: most tools on the market produce clips only a few seconds long. Generating minute-long video implies an enormous amount of computation behind the scenes.

Digging further into Sora's technical principles, we find that it builds on ideas from ViViT (Video Vision Transformer). This approach uses "patches" as a unified representation for both images and videos: the input is cut into small spacetime blocks, and each block becomes a token from which the model extracts video features. The foundation is still the Transformer architecture, but applying it to video generation differs significantly from applying it to image generation. With this representation, Sora can control the temporal and spatial characteristics of video more precisely, which is one reason its quality surpasses existing tools.
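To make the idea of patches concrete, here is a minimal sketch in Python with NumPy that cuts a video into non-overlapping spacetime patches (ViViT calls them "tubelets") and flattens each one into a token vector. It is an illustration under assumptions, not Sora's implementation: the function name `spacetime_patches`, the patch sizes, and working on raw pixels are all choices made for this example (OpenAI's report describes patching a compressed latent representation rather than raw pixels). Note that an image is treated as a one-frame video, which is what lets one patch representation cover both.

```python
import numpy as np

def spacetime_patches(video: np.ndarray, t: int, p: int) -> np.ndarray:
    """Hypothetical helper: split a video of shape (T, H, W, C) into
    non-overlapping t x p x p tubelets and flatten each into one token.

    Returns an array of shape (num_patches, t * p * p * C).
    """
    T, H, W, C = video.shape
    if T % t or H % p or W % p:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Split each axis into (number of blocks, block size).
    x = video.reshape(T // t, t, H // p, p, W // p, p, C)
    # Move the three block-index axes to the front, the within-block axes after.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # Each row is now one flattened spacetime patch, i.e. one transformer token.
    return x.reshape(-1, t * p * p * C)

rng = np.random.default_rng(0)
video = rng.random((16, 64, 64, 3))  # 16 frames of 64x64 RGB (illustrative sizes)
image = rng.random((1, 64, 64, 3))   # a single image is just a one-frame video

video_tokens = spacetime_patches(video, t=2, p=16)
image_tokens = spacetime_patches(image, t=1, p=16)
print(video_tokens.shape)  # (128, 1536)
print(image_tokens.shape)  # (16, 768)
```

With 16 frames at 64x64 and 2x16x16 patches, the video becomes 8 x 4 x 4 = 128 tokens of length 2 x 16 x 16 x 3 = 1536, while the image becomes 16 tokens of length 768. A longer or higher-resolution video simply yields more tokens, which hints at why minute-long generation is so compute-hungry.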

Another highlight is that Sora can also generate images at 2K resolution, well beyond what many existing AI image generators produce. SDXL, for example, has a native resolution of only 1024x1024, while Sora can directly generate 2K images. The extra resolution gives the images richer detail, especially in hair, skin texture and depth of field, all of which look strikingly real.
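To put the resolution gap in numbers, assume "2K" here means a square 2048x2048 image (an assumption; the exact output size may vary). Doubling each side quadruples the pixel count compared with SDXL's 1024x1024:

$$
\frac{2048 \times 2048}{1024 \times 1024} = \frac{4{,}194{,}304}{1{,}048{,}576} = 4
$$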

Beyond the visual impact, the physical simulation ability behind Sora's output is also worth pondering. For example, Sora can keep track of a scene the way the real world does: when a person walks in front of a dog and blocks it from view, the dog is still there after the person walks away. This shows strong spatial and temporal consistency. It reminded me of the philosophical question debated by Einstein and Bohr: does the world still exist when no one is observing it? This puppy example made me feel that Sora has some understanding of the world, almost as if it had created a simulated world of its own.

However, Sora is not yet publicly available, so all we can do is wait for its official launch. Despite its great potential in physical simulation and video generation, it still has many limitations: shattering glass is not rendered correctly, dogs spontaneously split and multiply, and a basketball is modeled incorrectly, among other flaws. This may also mean that Sora's understanding of the world does not yet fully match the physical rules we know.

Sora is undoubtedly a major breakthrough in AI video generation. It produces extremely high-quality videos and makes significant progress in physical simulation and temporal consistency. It still has shortcomings, but as the technology evolves, I believe Sora will become the benchmark for video generation. For anyone interested in this field, Sora is well worth watching.