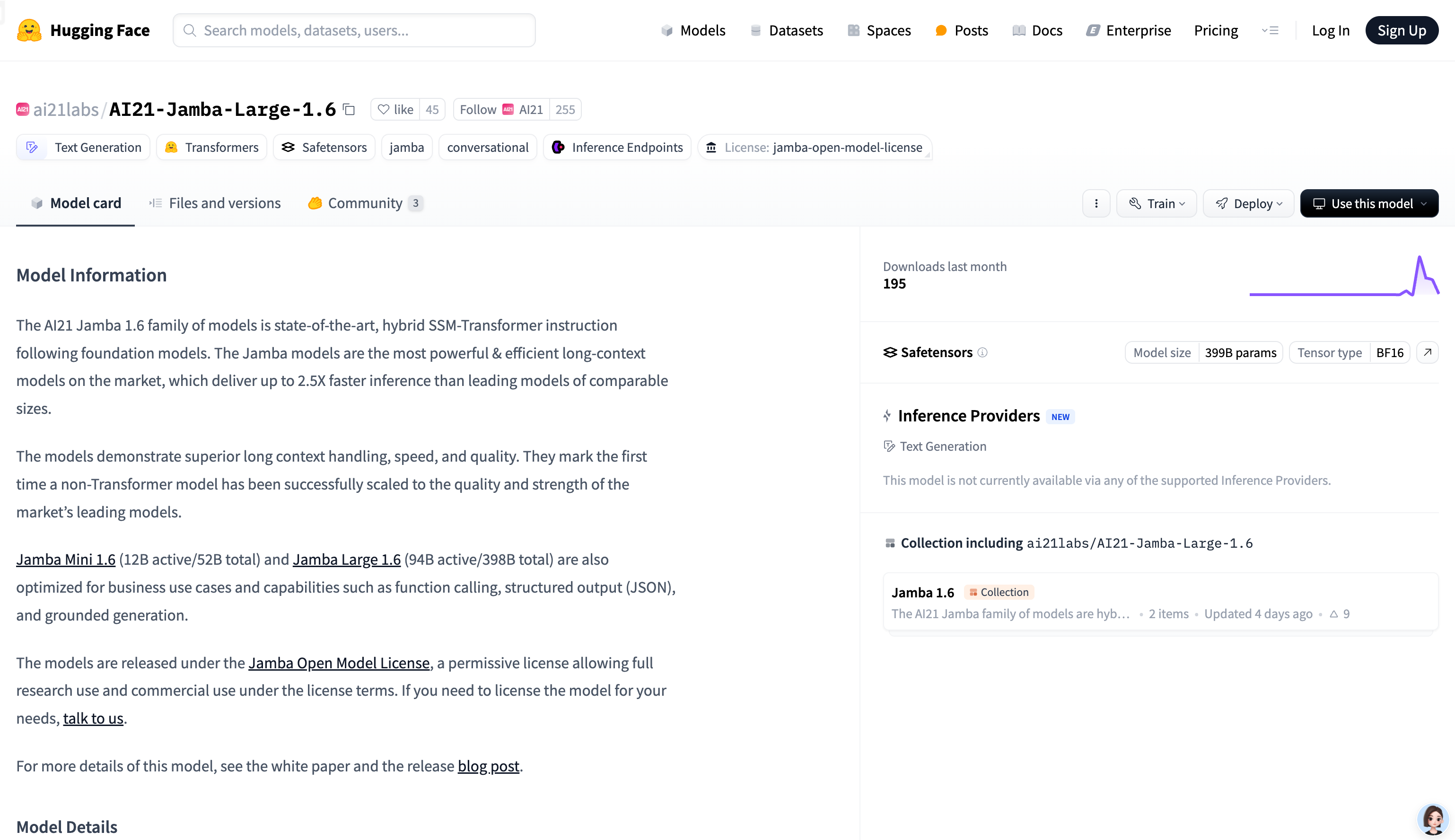

AI21-Jamba-Large-1.6 is a foundation model from AI21 Labs. It is built on a hybrid SSM-Transformer architecture and designed for long-context processing and efficient inference. The model handles long text well, delivers fast and high-quality inference, supports multiple languages, and follows instructions reliably. It suits enterprise applications that process large volumes of text, such as financial analysis and content generation. The model is released under the Jamba Open Model License, which permits research and commercial use under the license's terms.

Target audience:

This model suits businesses and developers who need to process long text efficiently, for example in finance, law, and content creation. It generates high-quality text quickly, handles multilingual and complex tasks, and fits commercial applications that demand high performance and efficiency.

Example usage scenarios:

In finance: analyzing and generating financial reports to support market forecasting and investment recommendations.

In content creation: helping draft articles, stories, or creative copy to speed up the creative process.

In customer service: acting as a chatbot that answers user questions with accurate, natural-sounding replies.

Product Features:

Supports long-context processing (context length up to 256K tokens), suitable for long documents and complex tasks

Fast inference, reportedly up to 2.5 times faster than comparable models, which significantly improves efficiency

Supports multiple languages, including English, Spanish, and French, making it suitable for multilingual applications

Follows instructions well and generates high-quality text from user prompts

Supports tool calling, so the model can be combined with external tools to extend its capabilities
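To make the 256K figure concrete: the prompt and the generated output share one context window, so a request fits only if the prompt tokens plus the requested output tokens stay within the limit. The sketch below assumes "256K" means 256 × 1024 = 262,144 tokens (the exact value should be checked in the model configuration). `fits_in_context` and `max_prompt_tokens` are hypothetical helpers; in practice the prompt length would come from the tokenizer, e.g. `len(tokenizer(text)["input_ids"])`.

```python
# Assumption: "256K" is read as 256 * 1024 = 262,144 tokens; verify the exact
# limit in the model's configuration before relying on it.
JAMBA_CONTEXT_TOKENS = 256 * 1024


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_limit: int = JAMBA_CONTEXT_TOKENS) -> bool:
    """Return True if the prompt plus the requested completion fits the window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Prompt and generated tokens occupy the same context window.
    return prompt_tokens + max_new_tokens <= context_limit


def max_prompt_tokens(max_new_tokens: int,
                      context_limit: int = JAMBA_CONTEXT_TOKENS) -> int:
    """Largest prompt that still leaves room for max_new_tokens of output."""
    return max(context_limit - max_new_tokens, 0)
```

For example, a 250,000-token document with a 4,096-token answer budget fits, while a 260,000-token document does not. A deployment may also cap the window below the model's maximum (for instance through vLLM's `max_model_len`) to save GPU memory; in that case the smaller value should be passed as `context_limit`.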

How to use:

1. Install the required dependencies, such as mamba-ssm, causal-conv1d, and vllm (vLLM is recommended for efficient inference).

2. Load the model with vLLM and choose a quantization strategy (such as ExpertsInt8) that fits your GPU resources.

3. Alternatively, load the model with the transformers library and quantize it with bitsandbytes so that it fits in the available GPU memory.

4. Prepare the input data and use AutoTokenizer to encode the text.

5. Call the model to generate text, controlling the output with parameters such as temperature and the maximum generation length.

6. Decode the generated tokens and extract the model's response.

7. To use tool calling, pass the tool definitions into the chat template together with the input, then handle the tool calls the model returns.
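A quick way to confirm that step 1 succeeded is to check that the packages can be imported. This small sketch uses only the standard library. The module names (`mamba_ssm`, `causal_conv1d`, `vllm`, `transformers`) are the import names of the pip packages `mamba-ssm`, `causal-conv1d`, `vllm`, and `transformers`. The check says nothing about package versions or CUDA compatibility.

```python
import importlib.util

# Import names (not pip names) of the packages mentioned in step 1.
# This only checks importability; it does not verify versions or CUDA support.
REQUIRED_MODULES = ("mamba_ssm", "causal_conv1d", "vllm", "transformers")


def missing_modules(modules=REQUIRED_MODULES) -> list[str]:
    """Return the top-level modules that cannot be found in this environment."""
    # find_spec returns None for a missing top-level module without importing it.
    return [name for name in modules if importlib.util.find_spec(name) is None]
```

An empty list from `missing_modules()` means every dependency can at least be located.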
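Steps 2 and 4 to 6 on the vLLM route can be sketched as follows. This is a minimal sketch, not AI21's reference code. It assumes the Hugging Face checkpoint ID `ai21labs/AI21-Jamba-Large-1.6`, eight GPUs for tensor parallelism, and a 32K-token `max_model_len`, all of which should be adjusted to the actual hardware. `quantization="experts_int8"` is vLLM's name for the ExpertsInt8 scheme mentioned in step 2. The heavy imports sit inside `generate_with_vllm`, so the helper functions can be used without a GPU.

```python
# Assumption: this is the model's Hugging Face checkpoint ID.
MODEL_ID = "ai21labs/AI21-Jamba-Large-1.6"


def build_messages(system_prompt: str, user_prompt: str) -> list[dict]:
    """Chat-format input consumed by the tokenizer's chat template."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def vllm_engine_kwargs(num_gpus: int = 8, max_model_len: int = 32 * 1024) -> dict:
    """Keyword arguments for vllm.LLM.

    quantization="experts_int8" selects vLLM's ExpertsInt8 scheme, which stores
    the MoE expert weights in int8 to cut GPU memory. num_gpus and max_model_len
    are placeholder values to adjust to the available hardware.
    """
    return {
        "model": MODEL_ID,
        "tensor_parallel_size": num_gpus,
        "max_model_len": max_model_len,
        "quantization": "experts_int8",
    }


def generate_with_vllm(messages: list[dict], temperature: float = 0.4,
                       max_tokens: int = 256) -> str:
    from transformers import AutoTokenizer
    from vllm import LLM, SamplingParams

    # Step 4: render the chat messages into a single prompt string.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    prompt = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, tokenize=False
    )

    # Step 2: load the model with ExpertsInt8 quantization.
    llm = LLM(**vllm_engine_kwargs())

    # Step 5: temperature controls randomness, max_tokens caps output length.
    params = SamplingParams(temperature=temperature, max_tokens=max_tokens)
    outputs = llm.generate([prompt], params)

    # Step 6: vLLM returns already-decoded text for each completion.
    return outputs[0].outputs[0].text
```

Calling `generate_with_vllm(build_messages("You are a financial analyst.", "Summarize the attached report."))` would return the completion text. Because vLLM hands back decoded text in `outputs[0].outputs[0].text`, step 6 needs no separate decode call on this route.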
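For the transformers route in step 3, a comparable sketch loads the weights in 8-bit with bitsandbytes. Assumptions: `torch`, `transformers`, `bitsandbytes`, and `accelerate` are installed; `device_map="auto"` spreads layers across the visible GPUs; and leaving the Mamba layers unquantized through `llm_int8_skip_modules=["mamba"]` is a choice borrowed from common Jamba examples rather than a requirement. `new_token_slice` is a hypothetical helper for step 6.

```python
# Assumption: this is the model's Hugging Face checkpoint ID.
MODEL_ID = "ai21labs/AI21-Jamba-Large-1.6"


def new_token_slice(output_ids, prompt_length: int):
    """Step 6 helper: generate() returns prompt + completion, so drop the prompt."""
    return output_ids[prompt_length:]


def generate_with_transformers(messages, max_new_tokens: int = 256,
                               temperature: float = 0.4) -> str:
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    # Step 3: 8-bit weights via bitsandbytes, keeping the Mamba layers in
    # higher precision (assumed setting, see lead-in).
    quant_config = BitsAndBytesConfig(load_in_8bit=True,
                                      llm_int8_skip_modules=["mamba"])
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,
        quantization_config=quant_config,
        device_map="auto",  # requires accelerate
    )
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)

    # Step 4: apply the chat template and tokenize in one call.
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True,
        return_tensors="pt", return_dict=True,
    ).to(model.device)

    # Step 5: sample with a temperature and cap the output length.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens,
                                do_sample=True, temperature=temperature)

    # Step 6: decode only the newly generated tokens.
    prompt_length = inputs["input_ids"].shape[-1]
    completion = new_token_slice(output_ids[0], prompt_length)
    return tokenizer.decode(completion, skip_special_tokens=True)
```

Slicing off the prompt before decoding is more robust than splitting the decoded string on the user's message, since `generate` returns the prompt tokens followed by the new ones.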
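Step 7 can be sketched with a tool definition in the JSON-schema style that Hugging Face chat templates accept through `tokenizer.apply_chat_template(messages, tools=TOOLS, add_generation_prompt=True, tokenize=False)`. The output handling rests on an assumption to verify against the model card: that Jamba returns its calls as a JSON list of `{"name": ..., "arguments": ...}` objects wrapped in `<tool_calls>...</tool_calls>`. The `get_current_weather` tool and its stub are hypothetical, as is the exact shape of the `"tool"` result messages.

```python
import json
import re

# Tool definition in the JSON-schema style used by Hugging Face chat templates.
# get_current_weather is a hypothetical example tool.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location", "unit"],
        },
    },
}]

# Assumed output format: a JSON list wrapped in <tool_calls>...</tool_calls>.
_TOOL_CALLS_RE = re.compile(r"<tool_calls>(.*?)</tool_calls>", re.DOTALL)


def parse_tool_calls(model_output: str) -> list[dict]:
    """Extract [{"name": ..., "arguments": {...}}, ...] from the model's reply."""
    match = _TOOL_CALLS_RE.search(model_output)
    if match is None:
        return []  # plain-text answer, no tool requested
    return json.loads(match.group(1))


def run_tool_calls(calls: list[dict], registry: dict) -> list[dict]:
    """Execute each call and build "tool" messages to append to the chat."""
    results = []
    for call in calls:
        func = registry[call["name"]]
        output = func(**call["arguments"])
        results.append({"role": "tool", "name": call["name"],
                        "content": json.dumps(output)})
    return results


def get_current_weather(location: str, unit: str) -> dict:
    # Hypothetical stub; a real implementation would query a weather service.
    return {"location": location, "temperature": 21, "unit": unit}
```

The returned `"tool"` messages would be appended to the conversation after the assistant's tool-call turn, and the model called again so it can answer using the tool results.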