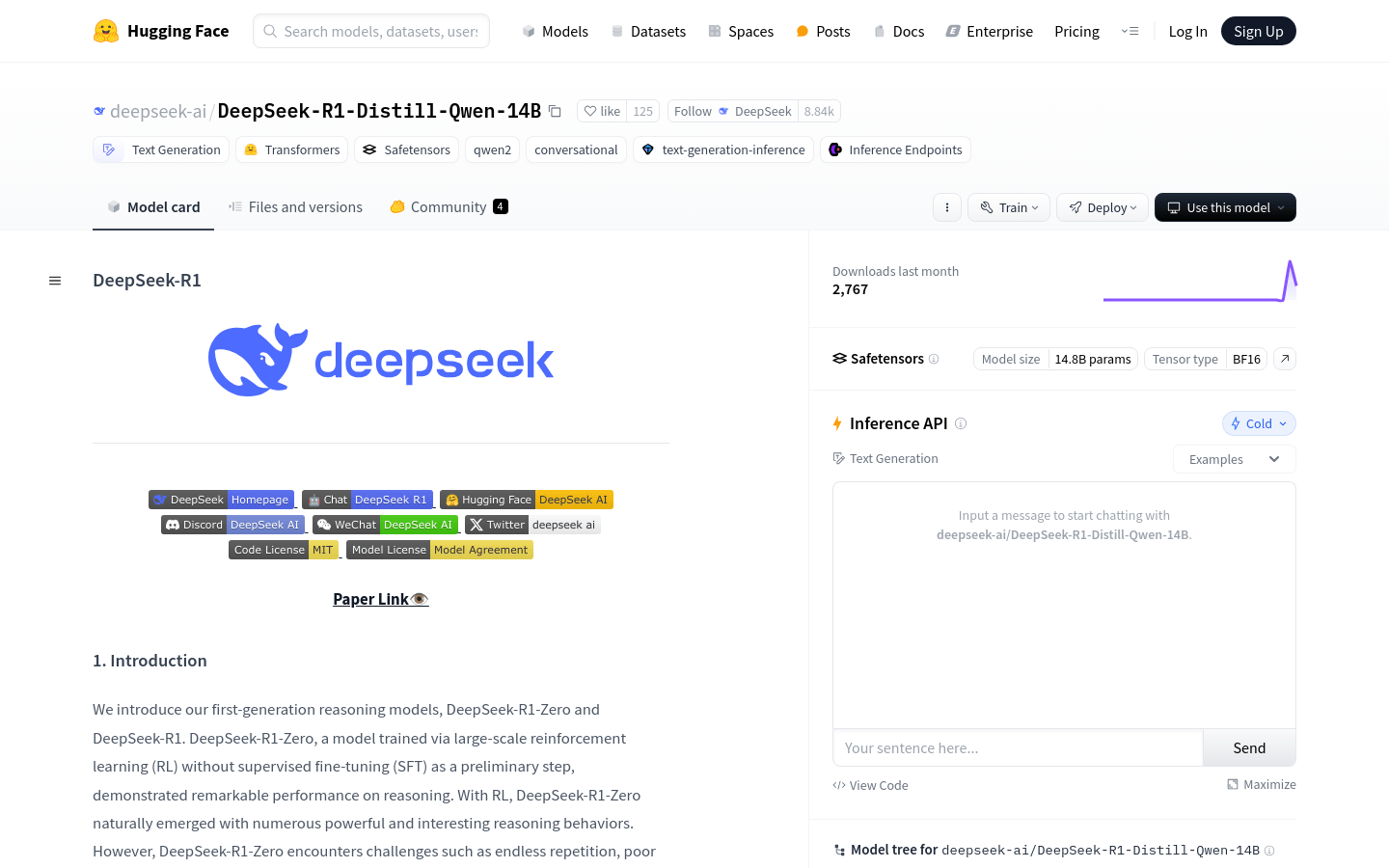

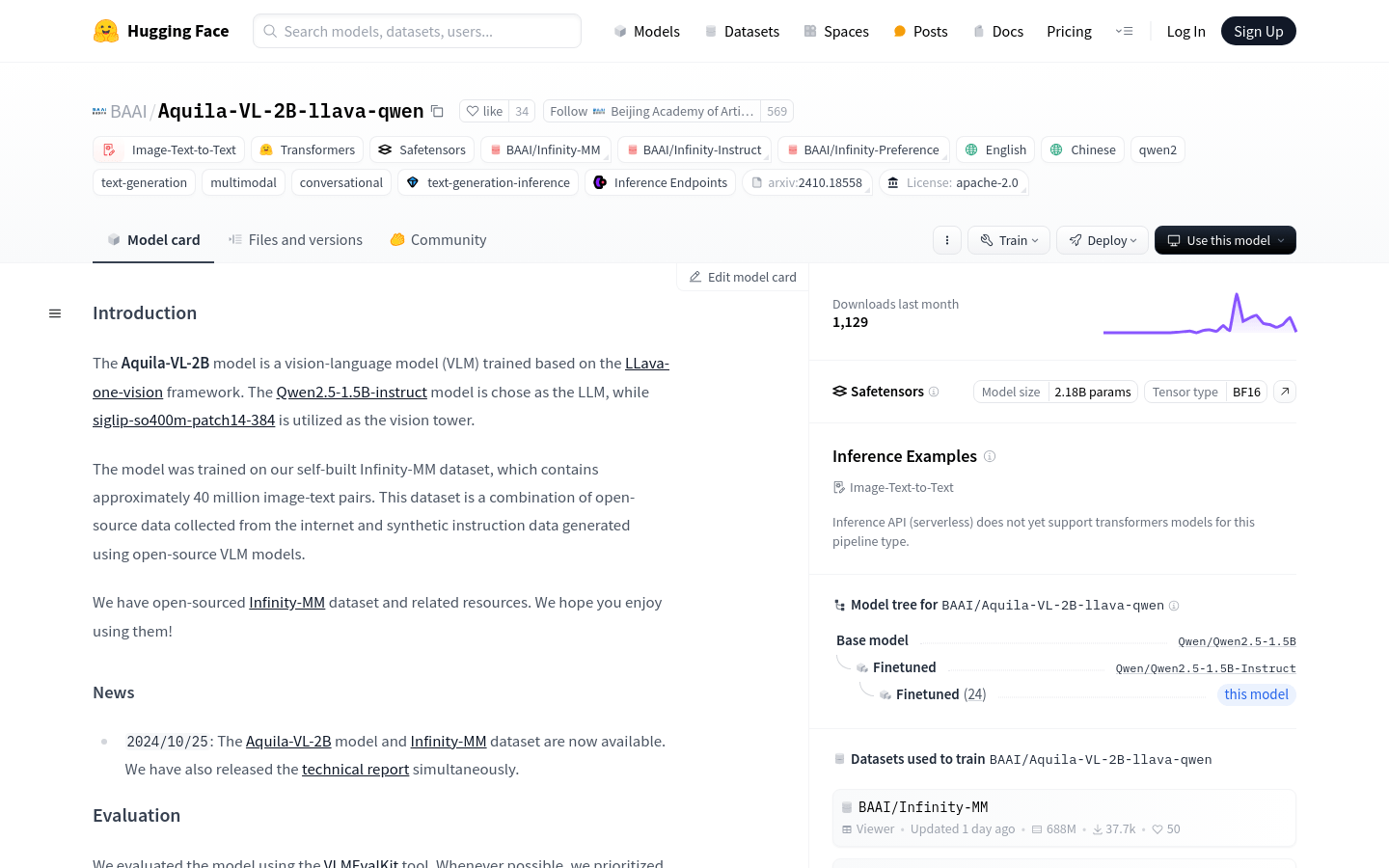

Aquila-VL-2B is a vision-language model (VLM) trained with the LLaVA-OneVision framework. It uses Qwen2.5-1.5B-instruct as the language model (LLM) and siglip-so400m-patch14-384 as the vision tower. The model is trained on Infinity-MM, a self-built dataset of about 40 million image-text pairs that combines open-source data collected from the Internet with synthetic instruction data generated by open-source VLMs. Aquila-VL-2B is released as open source to advance multimodal research, particularly in the joint processing of images and text.

Target audience:

The target audience is researchers, developers, and enterprises that need to process and analyze large amounts of image and text data for intelligent decision-making and information extraction. Aquila-VL-2B provides visual language understanding and generation capabilities that can help them improve the efficiency and accuracy of data processing.

Example usage scenarios:

Case 1: Use the Aquila-VL-2B model to analyze and describe images on social media.

Case 2: On an e-commerce platform, use the model to automatically generate descriptive text for product images and improve the user experience.

Case 3: In education, combine images and text to give students more intuitive learning materials and interactive experiences.

Product Features:

• Supports image-text-to-text generation (Image-Text-to-Text)

• Built on the Transformers and Safetensors libraries

• Supports multiple languages, including Chinese and English

• Supports multimodal and dialogue generation

• Supports text-generation inference

• Compatible with Inference Endpoints

• Supports large-scale image-text datasets

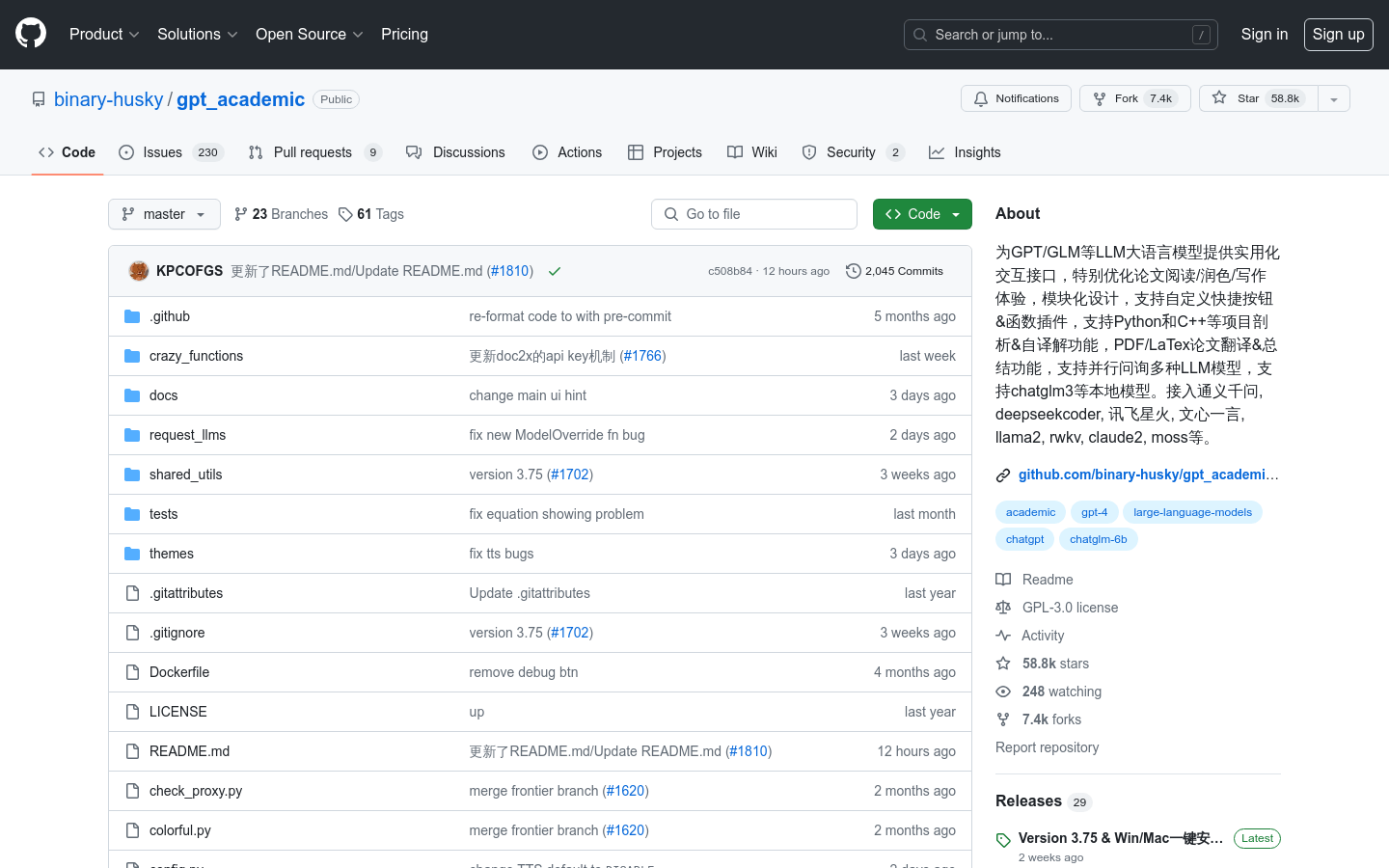

How to use:

1. Install the necessary libraries: Use pip to install the LLaVA-NeXT library.

2. Load the pretrained model: Load the Aquila-VL-2B model through the load_pretrained_model function in llava.model.builder.

3. Prepare image data: Use the PIL library to load the image and use the process_images function in llava.mm_utils to process the image data.

4. Build a conversation template: Select the conversation template that matches the model and compose the question.

5. Generate the prompt: Combine the question with the conversation template to produce the input prompt for the model.

6. Encode the input: Use the tokenizer to encode the prompt into input IDs that the model can process.

7. Generate output: Call the model's generate function to generate text output.

8. Decode output: Use the tokenizer.batch_decode function to decode the model output into readable text.
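The eight steps above can be sketched roughly as follows. This is a minimal illustration, not an official recipe. The source names only `load_pretrained_model`, `process_images`, `generate`, and `tokenizer.batch_decode`. Everything else is an assumption based on typical LLaVA-NeXT usage and may need adjusting: the repository ID `BAAI/Aquila-VL-2B-llava-qwen`, the model name `llava_qwen`, the template key `qwen_1_5`, the helper `tokenizer_image_token`, and the `llava.constants` names. `build_question` and `describe_image` are hypothetical helper names introduced for this example.

```python
# Step 1 (assumed install command for LLaVA-NeXT):
#   pip install git+https://github.com/LLaVA-VL/LLaVA-NeXT.git
import copy

# Assumed values; none of these identifiers appear in the text above.
MODEL_ID = "BAAI/Aquila-VL-2B-llava-qwen"  # assumed Hugging Face repository ID
MODEL_NAME = "llava_qwen"                  # assumed model name passed to the loader
CONV_TEMPLATE = "qwen_1_5"                 # assumed conversation template key


def build_question(text, image_token="<image>"):
    """Prefix the question with the image placeholder token (part of step 4)."""
    return f"{image_token}\n{text}"


def describe_image(image_path, question_text, device="cuda", max_new_tokens=256):
    """Hypothetical helper that runs steps 2 to 8 for a single local image."""
    # Heavy imports stay local so this file can be imported without LLaVA-NeXT.
    import torch
    from PIL import Image
    from llava.constants import DEFAULT_IMAGE_TOKEN, IMAGE_TOKEN_INDEX
    from llava.conversation import conv_templates
    from llava.mm_utils import process_images, tokenizer_image_token
    from llava.model.builder import load_pretrained_model

    # Step 2: load the tokenizer, model, and image processor.
    tokenizer, model, image_processor, _ = load_pretrained_model(
        MODEL_ID, None, MODEL_NAME, device_map="auto"
    )
    model.eval()

    # Step 3: load the image with PIL and preprocess it.
    image = Image.open(image_path).convert("RGB")
    image_tensor = process_images([image], image_processor, model.config)
    image_tensor = [t.to(dtype=torch.float16, device=device) for t in image_tensor]

    # Steps 4 and 5: fill the conversation template and render the prompt.
    conv = copy.deepcopy(conv_templates[CONV_TEMPLATE])
    conv.append_message(conv.roles[0], build_question(question_text, DEFAULT_IMAGE_TOKEN))
    conv.append_message(conv.roles[1], None)
    prompt = conv.get_prompt()

    # Step 6: encode the prompt, mapping the image placeholder to its token index.
    input_ids = tokenizer_image_token(
        prompt, tokenizer, IMAGE_TOKEN_INDEX, return_tensors="pt"
    ).unsqueeze(0).to(device)

    # Step 7: generate output token IDs with greedy decoding.
    with torch.inference_mode():
        output_ids = model.generate(
            input_ids,
            images=image_tensor,
            image_sizes=[image.size],
            do_sample=False,
            max_new_tokens=max_new_tokens,
        )

    # Step 8: decode the token IDs back into readable text.
    return tokenizer.batch_decode(output_ids, skip_special_tokens=True)[0].strip()
```

Calling `describe_image("photo.jpg", "What is shown in this image?")` would download the weights on first use. It assumes a CUDA-capable GPU, and `photo.jpg` is a placeholder path. Greedy decoding (`do_sample=False`) is used here only to keep the output deterministic.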