What is the Aya Model?

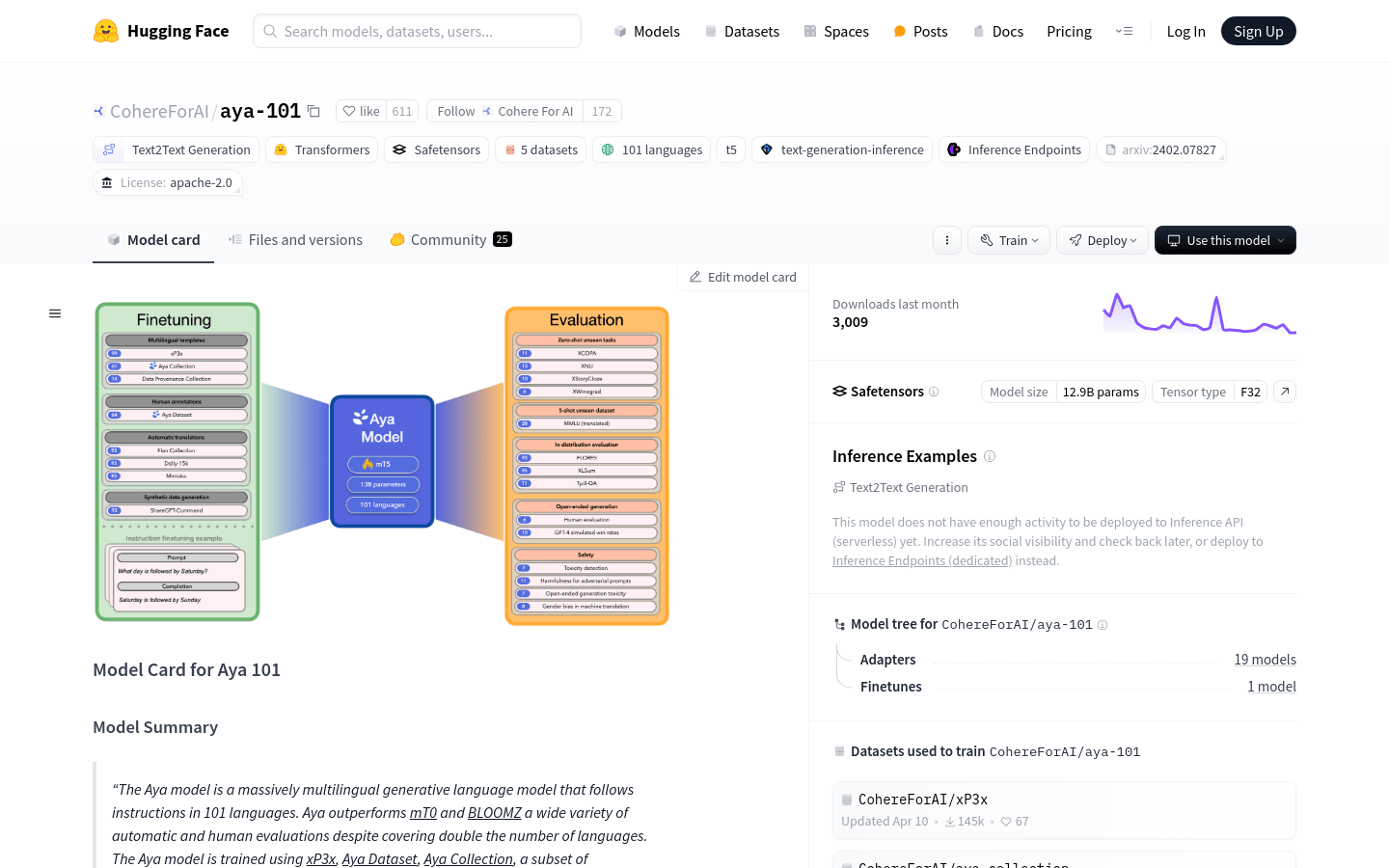

The Aya model is a large-scale multilingual generative language model that can understand and generate text in 101 languages. In a range of automatic and human evaluations it outperforms models such as mT0 and BLOOMZ, despite covering twice as many languages.

The model was trained on several datasets, including xP3x, the Aya Dataset, and subsets of the Aya Collection and the DataProvenance collection. It is released under the Apache-2.0 license to support open progress in multilingual technology.

Who Can Use the Aya Model?

The model is aimed at researchers, developers, and enterprises building NLP applications that must handle many languages. Its broad language coverage and permissive open-source license make it a practical choice for developing and deploying NLP solutions in linguistically diverse settings.

Example Scenarios:

Translate Turkish text into English accurately.

Provide detailed background information on the diversity of Indian languages.

Generate text showcasing the model's capabilities in different languages and tasks.
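To make these scenarios concrete, each one can be written as an input prompt. Aya is instruction-tuned, so a task is expressed as a plain natural-language request. The Turkish and Hindi prompts below are modeled on the examples in the Aya-101 model card; the Spanish prompt, the `translation_prompt` helper, and the `SCENARIO_PROMPTS` dictionary are illustrative assumptions, not a required prompt format.

```python
# Illustrative prompts for the three example scenarios.
# translation_prompt and SCENARIO_PROMPTS are hypothetical names; Aya does not
# require this exact wording, it simply takes a natural-language instruction.


def translation_prompt(text: str, target_language: str) -> str:
    """Build a simple translation instruction (hypothetical helper)."""
    return f"Translate to {target_language}: {text}"


SCENARIO_PROMPTS = {
    # Turkish -> English. The Turkish sentence means "Aya is a multilingual language model."
    "translate_turkish": translation_prompt("Aya çok dilli bir dil modelidir.", "English"),
    # Asked in Hindi: "Why are there so many languages in India?"
    "indian_languages": "भारत में इतनी सारी भाषाएँ क्यों हैं?",
    # Open-ended generation in another language (Spanish, chosen arbitrarily):
    # "Write a short poem about the sea."
    "free_generation": "Escribe un poema corto sobre el mar.",
}

for name, prompt in SCENARIO_PROMPTS.items():
    print(f"{name}: {prompt}")
```

Any of these strings can serve as the input text in the usage steps later in this article.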

Key Features:

Supports 101 languages for text generation.

Performs strongly across languages in both automatic and human evaluations.

Trained on multiple datasets, including xP3x and the Aya Dataset.

Has 13 billion parameters.

Comes with detailed model documentation covering usage, model details, evaluations, bias risks, and limitations.

Encourages community research and open-source contributions.

Provides quick-start code examples for text generation.
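The 13-billion-parameter count translates directly into hardware requirements. A rough, weights-only estimate is the number of parameters multiplied by the bytes each parameter occupies at a given precision. The sketch below assumes exactly 13e9 parameters and deliberately ignores activations, the key/value cache used during generation, and framework overhead, so real memory use will be higher; `BYTES_PER_PARAM` and `weight_memory_gb` are hypothetical names used only for this illustration.

```python
# Weights-only memory estimate: parameters x bytes per parameter.
# Assumptions: 13e9 parameters; activations, the generation key/value cache,
# and framework overhead are NOT counted, so this is a lower bound.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Size of the raw weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision:>9}: ~{weight_memory_gb(13e9, precision):.1f} GB")
```

At 13e9 parameters this works out to about 52 GB of weights in fp32 and 26 GB in bf16/fp16, so even the half-precision weights alone exceed the memory of a single 24 GB GPU.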

How to Use the Aya Model:

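1. Install the transformers library with pip: `pip install -q transformers`. PyTorch must also be installed to run the model.

2. Import the `AutoModelForSeq2SeqLM` and `AutoTokenizer` classes from transformers.

3. Set the model checkpoint to `CohereForAI/aya-101` and load the tokenizer and model from it with `from_pretrained`.

4. Use the tokenizer to encode the input text into token IDs.

5. Pass those IDs to the model's `generate` method to produce output token IDs.

6. Decode the output token IDs back into text to get the final result.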
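The six steps can be sketched in Python as follows. This is a minimal sketch modeled on the quick-start usage in the Aya-101 model card, assuming transformers and PyTorch are installed and the machine has enough memory for the checkpoint. The transformers calls (`from_pretrained`, `encode`, `generate`, `decode`) are the library's own; `load_aya` and `generate_text` are hypothetical helper names. `AutoModelForSeq2SeqLM` is used because Aya-101 is an encoder-decoder model fine-tuned from mT5-XXL.

```python
# Sketch of steps 1-6. Assumes transformers (pip install -q transformers) and
# PyTorch are installed. load_aya and generate_text are hypothetical helpers.

CHECKPOINT = "CohereForAI/aya-101"  # step 3: the model checkpoint


def load_aya(checkpoint: str = CHECKPOINT):
    """Steps 2-3: import the Auto classes and load the tokenizer and model."""
    # Imported inside the function so this file can be imported without
    # transformers; nothing is downloaded until load_aya() is called.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)
    return tokenizer, model


def generate_text(tokenizer, model, prompt: str, max_new_tokens: int = 128) -> str:
    """Steps 4-6: encode the prompt, generate, and decode the first output."""
    # Step 4: encode the input text into a PyTorch tensor of token IDs.
    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    # Step 5: generate at most max_new_tokens output token IDs.
    output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens)
    # Step 6: decode the first (only) sequence, dropping padding/EOS tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `tokenizer, model = load_aya()` followed by `generate_text(tokenizer, model, "Translate to English: Aya çok dilli bir dil modelidir.")` downloads the checkpoint on first use and returns the model's English output. Keeping loading separate from generation means the large model is loaded once and reused across many prompts.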