What is Cog inference for flux models?

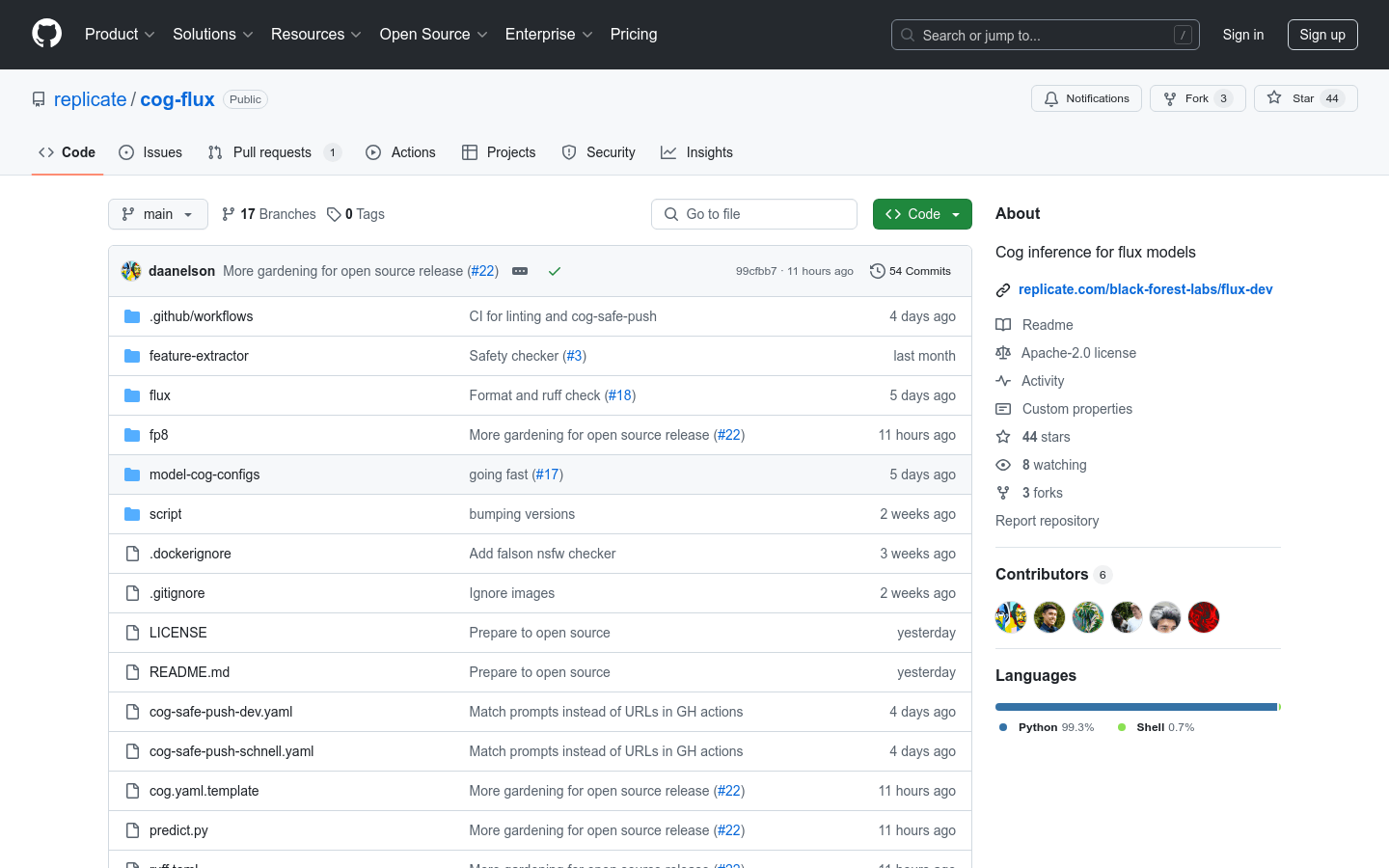

Cog inference for flux models is an inference engine, packaged with Cog, for the FLUX.1 [schnell] and FLUX.1 [dev] models from Black Forest Labs. It supports compilation with torch.compile, optional fp8 quantization based on aredden/flux-fp8-api, content safety checks using the CompVis and Falcons.ai safety checkers, and img2img generation. Compilation and quantization are aimed at faster inference, while the safety checks screen generated images for inappropriate content.

Who would benefit from using Cog inference for flux models?

Researchers working on image generation projects and developers integrating these models into personal projects can benefit from this tool. Additionally, teams focused on content moderation can use its safety checks to filter inappropriate content.

Can you give examples of how Cog inference for flux models might be used?

Researchers could use FLUX.1 [schnell] as a baseline in image generation experiments. Developers may integrate FLUX.1 [dev] into their own applications. Content review teams can use the engine’s safety checks to flag generated images that do not comply with their standards.

What are the key features of Cog inference for flux models?

Key features include support for torch.compile, optional fp8 quantization, content safety checks, img2img functionality, and the ability to run the model via API or browser on Replicate. Users can also run the model on their personal hardware.
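As a rough sketch of the API route, the snippet below builds an HTTP request for a hosted model using only the Python standard library. The endpoint shape (`/v1/models/{owner}/{name}/predictions`), the model slug `black-forest-labs/flux-schnell`, the `REPLICATE_API_TOKEN` variable, and the `prompt` input name are assumptions that do not come from the text above, so check them against Replicate's API reference before relying on them. `build_prediction_request` is a hypothetical helper, not part of any library.

```python
import json
import os
import urllib.request

# Assumed base URL and model slug; neither is given in the text above.
API_BASE = "https://api.replicate.com/v1"
MODEL = "black-forest-labs/flux-schnell"


def build_prediction_request(prompt, token, model=MODEL):
    """Build (but do not send) a POST request that asks for one prediction."""
    # The model inputs are wrapped in an "input" object; "prompt" is an assumed input name.
    body = json.dumps({"input": {"prompt": prompt}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/models/{model}/predictions",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    token = os.environ.get("REPLICATE_API_TOKEN")
    if token:
        # Only contact the network when a token is available.
        request = build_prediction_request("a cat in a hat", token)
        with urllib.request.urlopen(request) as response:
            print(json.load(response))
    else:
        print("Set REPLICATE_API_TOKEN to send the request.")
```

Keeping request construction separate from sending makes the payload easy to inspect before any network call is made.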

How do you get started with using Cog inference for flux models?

First, choose between FLUX.1 [schnell] and FLUX.1 [dev]. To try a model without any setup, run it on Replicate through the API or directly in a browser. To run it yourself, review the Cog getting started guide to understand how Cog works, then use the command-line tools for single predictions. To deploy your own version, customize the model as needed and push it to Replicate, following the provided guidelines.
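For the command-line route, a single prediction might look like the sketch below, which assembles a `cog predict` invocation from a dictionary of inputs. It assumes the model exposes an input named `prompt` and that the `cog` CLI is installed; `cog_predict_command` is a hypothetical helper written for illustration.

```python
import shlex


def cog_predict_command(inputs):
    """Return the argv list for one `cog predict` call with the given inputs."""
    command = ["cog", "predict"]
    for name, value in inputs.items():
        # Each model input is passed as its own `-i name=value` pair.
        command += ["-i", f"{name}={value}"]
    return command


if __name__ == "__main__":
    # "prompt" is an assumed input name; adjust it to the model's actual inputs.
    command = cog_predict_command({"prompt": "a cat in a hat"})
    # Print a shell-safe version of the command for copy and paste.
    print(shlex.join(command))
```

The returned list can be passed to `subprocess.run(command, check=True)` from inside the model directory once the chosen model variant has been set up.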