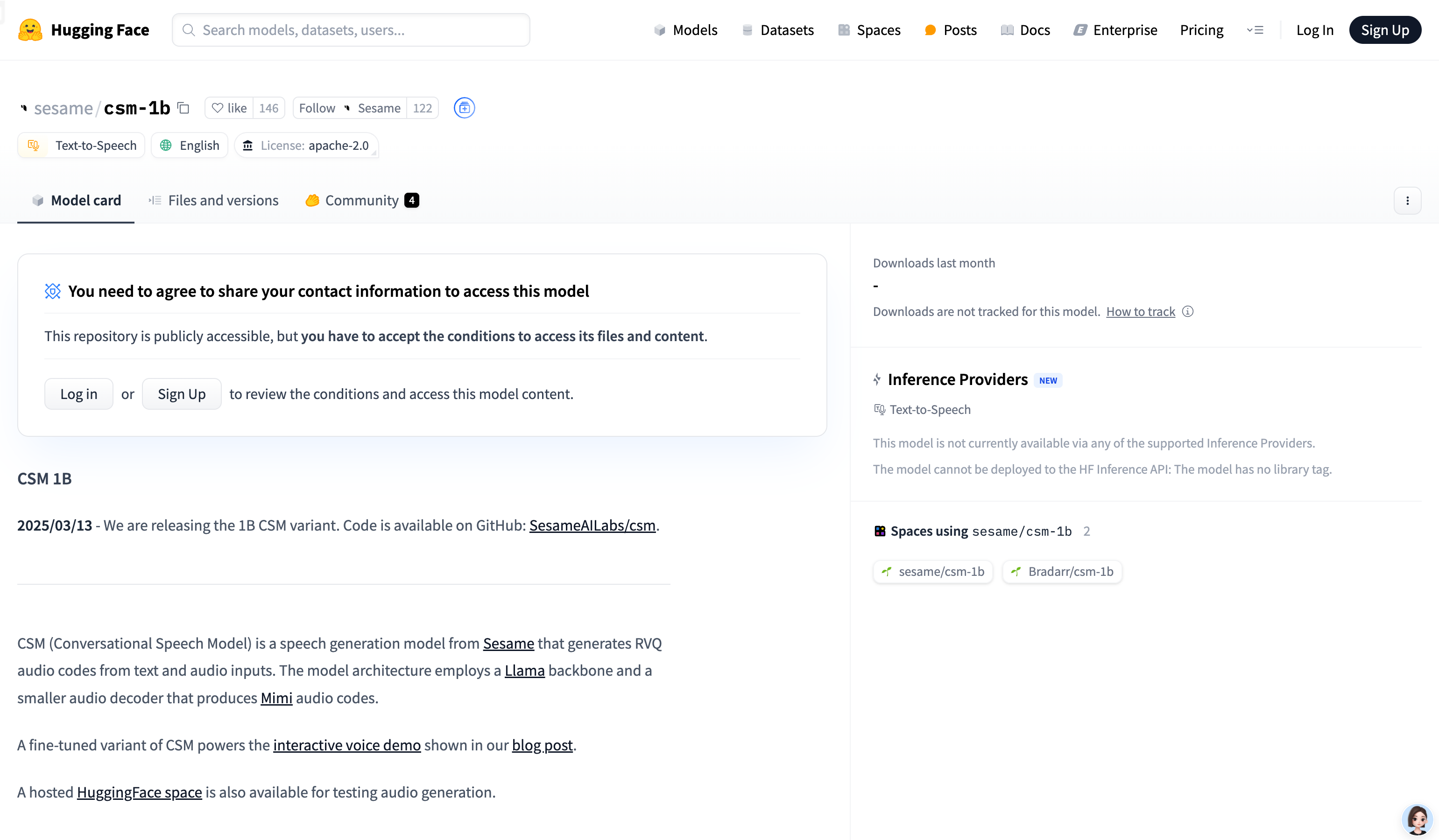

CSM 1B is a speech generation model based on the Llama architecture that generates residual vector quantization (RVQ) audio codes from text and audio inputs. It is aimed mainly at speech synthesis and produces high-quality speech. Its main advantage is that it can handle conversations with multiple speakers and draw on the conversational context to produce natural, fluent speech. The model is open source and intended for research and educational use; using it for impersonation, fraud, or other illegal activities is explicitly prohibited.
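"RVQ audio codes" refers to residual vector quantization: each audio frame is encoded as a short sequence of codebook indices, where every stage quantizes the error left over by the stages before it. The toy sketch below illustrates only that idea, using tiny hand-picked 2-D codebooks. It is not CSM's actual codec (the model card names Mimi as the source of its audio codes), which learns much larger codebooks over latent audio frames; the function names here are illustrative.

```python
from typing import List, Sequence

Vector = List[float]
Codebook = Sequence[Vector]


def nearest(codebook: Codebook, x: Vector) -> int:
    """Index of the codeword closest to x (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], x)),
    )


def rvq_encode(x: Vector, codebooks: Sequence[Codebook]) -> List[int]:
    """Quantize x in stages; each stage encodes what the previous stages missed."""
    residual = list(x)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen codeword so the next stage sees only the leftover error.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


def rvq_decode(codes: Sequence[int], codebooks: Sequence[Codebook]) -> Vector:
    """Reconstruct by summing the selected codeword from each stage that has a code."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Two tiny hand-picked 2-D codebooks: a coarse stage and a fine stage.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]],
    [[0.0, 0.0], [0.25, -0.25], [-0.25, 0.25]],
]
codes = rvq_encode([1.2, 0.8], codebooks)  # [1, 1]
coarse = rvq_decode(codes[:1], codebooks)  # [1.0, 1.0]
fine = rvq_decode(codes, codebooks)        # [1.25, 0.75]
```

Decoding with only the first code gives a coarse approximation of the input `[1.2, 0.8]`; adding the second stage's code moves the reconstruction closer. This is why RVQ codecs can trade bitrate for quality by keeping more or fewer stages.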

Target audience:

This model suits researchers, developers, and educators who need high-quality speech synthesis. It can support voice-interaction applications, speech synthesis research, and teaching.

Example use cases:

Generating natural-sounding speech for virtual assistants in voice-interaction applications

Speech synthesis research exploring high-quality speech generation techniques

Generating pronunciation examples for language learning in educational settings

Product Features:

Generates high-quality speech from text

Handles conversations with multiple speakers

Produces more natural speech by drawing on conversational context

Open-source model, convenient for research and educational use

Has some capacity for languages other than English, though quality outside English is likely to be poor
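To make use of conversational context, earlier turns can be passed to `generate` as context segments. The sketch below is a minimal illustration, assuming that the repository's `generator` module exposes a `Segment` type with `speaker`, `text`, and `audio` fields (as the repository's README showed when the checkpoint was distributed as `ckpt.pt`) and that `generator` is an object returned by `load_csm_1b`. The helper `build_context` and the `(speaker, transcript, wav_path)` tuple layout are hypothetical; check the current repository before relying on these names.

```python
from typing import List, Sequence, Tuple

# Hypothetical layout for one earlier turn: (speaker_id, transcript, path_to_wav)
Turn = Tuple[int, str, str]


def build_context(history: Sequence[Turn], sample_rate: int) -> List:
    """Convert earlier conversation turns into CSM context segments."""
    # Imported lazily so this helper can be defined without the csm repo on the path.
    import torchaudio
    from generator import Segment  # assumed: module from the cloned csm repository

    segments = []
    for speaker, transcript, wav_path in history:
        wav, sr = torchaudio.load(wav_path)  # shape: (channels, samples)
        # squeeze(0) assumes a mono recording; resample to the model's sample rate.
        audio = torchaudio.functional.resample(
            wav.squeeze(0), orig_freq=sr, new_freq=sample_rate
        )
        segments.append(Segment(speaker=speaker, text=transcript, audio=audio))
    return segments
```

For example, with a hypothetical `history = [(0, "Hey, how are you?", "turn_0.wav"), (1, "Pretty good, you?", "turn_1.wav")]`, one could call `generator.generate(text="Doing well, thanks.", speaker=0, context=build_context(history, generator.sample_rate), max_audio_length_ms=10_000)` so that the reply is conditioned on both speakers' earlier audio and text.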

How to use:

1. Clone the model repository and enter it: `git clone git@github.com:SesameAILabs/csm.git`, then `cd csm`

2. Create and activate a virtual environment, then install the dependencies: `python3.10 -m venv .venv`, `source .venv/bin/activate`, and `pip install -r requirements.txt`

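3. Download the model checkpoint with the `huggingface_hub` library: `hf_hub_download(repo_id="sesame/csm-1b", filename="ckpt.pt")` (the repository's README notes that Hugging Face access to CSM-1B and Llama-3.2-1B is required, so log in first with `huggingface-cli login`)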

4. Load the model and generate speech: pass the checkpoint path to `load_csm_1b`, then call the returned generator's `generate` method to produce audio

5. Save the generated audio to a file with `torchaudio.save`
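Steps 3 to 5 can be combined into one short script. This is a sketch based on the repository's README from the period when the checkpoint was distributed as `ckpt.pt`. It assumes it runs from inside the cloned `csm` directory (so the repo's `generator` module is importable), a CUDA GPU, and Hugging Face access to `sesame/csm-1b`. The function name `generate_clip` and its parameters are hypothetical, and later versions of the repository may load the model differently.

```python
def generate_clip(text: str, out_path: str = "audio.wav", device: str = "cuda") -> str:
    """Generate one utterance with CSM 1B and save it as a WAV file."""
    # Deferred imports: the heavy dependencies and the repo's own `generator`
    # module are only needed when a clip is actually generated.
    import torchaudio
    from huggingface_hub import hf_hub_download
    from generator import load_csm_1b  # assumed: module from the cloned csm repository

    # Step 3: fetch the checkpoint (cached locally after the first download).
    model_path = hf_hub_download(repo_id="sesame/csm-1b", filename="ckpt.pt")

    # Step 4: load the model and generate audio for speaker 0 with no prior context.
    generator = load_csm_1b(model_path, device)
    audio = generator.generate(
        text=text,
        speaker=0,
        context=[],
        max_audio_length_ms=10_000,
    )

    # Step 5: torchaudio.save expects a (channels, samples) tensor on the CPU.
    torchaudio.save(out_path, audio.unsqueeze(0).cpu(), generator.sample_rate)
    return out_path
```

Calling `generate_clip("Hello from Sesame.")` would then write `audio.wav` in the current directory. The imports sit inside the function only so the definition itself does not require the repository or a GPU; in a standalone script they would normally go at the top of the file.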