deepeval provides metrics that evaluate different aspects of an LLM's answers, checking that they are relevant, consistent, unbiased, and non-toxic. These checks integrate well with CI/CD pipelines, so machine learning engineers can quickly verify that an LLM application is still performing well as they improve it. deepeval offers a Python-friendly, offline evaluation workflow to help make sure your pipeline is ready for production. It works like "Pytest for your pipelines": producing and evaluating a pipeline becomes as straightforward as getting all your tests to pass.
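To illustrate the "Pytest for your pipelines" idea, here is a minimal, self-contained sketch that does not use deepeval itself. It scores an answer with a toy word-overlap relevance function and fails the test when the score falls below a threshold. The names `relevance_score`, `THRESHOLD`, and `test_answer_relevance` are hypothetical, and the overlap score is only a stand-in for deepeval's own relevance metric, not its API.

```python
import re

# Hypothetical pass/fail threshold; deepeval's real metrics define their own.
THRESHOLD = 0.5


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance_score(question: str, answer: str) -> float:
    """Toy relevance: fraction of question words that also appear in the answer.

    This is an illustrative stand-in, not deepeval's relevance metric.
    """
    q_words = tokenize(question)
    if not q_words:
        return 0.0
    return len(q_words & tokenize(answer)) / len(q_words)


def test_answer_relevance() -> None:
    # In a real pipeline the answer would come from the LLM application under test.
    question = "What is the capital of France?"
    answer = "The capital of France is Paris."
    score = relevance_score(question, answer)
    assert score >= THRESHOLD, f"relevance {score:.2f} is below {THRESHOLD}"
```

Saved in a file named like `test_*.py`, this function is collected by `pytest`, so running `pytest` as a CI/CD step fails the build whenever an answer's score drops below the threshold.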

Target audience:

Teams evaluating different aspects of language model applications

Teams integrating with CI/CD for automated testing

Teams rapidly and iteratively improving language models

Example usage scenarios:

Use simple unit tests to check the relevance and consistency of ChatGPT answers

Automate testing of LangChain-based applications with deepeval

Use synthetic queries to quickly surface model problems

Product features:

Tests for answer relevance, factual consistency, toxicity, and bias

A web UI for viewing tests, implementations, and comparisons

Automatic evaluation of answers using synthetic query-answer pairs

Integration with common frameworks such as LangChain
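Evaluation with synthetic queries can be pictured as a loop: generate queries, run each through the application, score each answer, and collect the ones that fall short. The sketch below is hypothetical throughout. The template-based `generate_queries`, the canned-reply `answer` stub, and the word-overlap score are assumptions standing in for deepeval's own query generation and metrics.

```python
import re

# Hypothetical canned replies standing in for the LLM application under test.
CANNED = {"password reset": "A password reset lets you choose a new password."}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(question: str, answer: str) -> float:
    """Toy score: fraction of question words that also appear in the answer."""
    q_words = tokenize(question)
    if not q_words:
        return 0.0
    return len(q_words & tokenize(answer)) / len(q_words)


def generate_queries(templates: list[str], topics: list[str]) -> list[str]:
    """Hypothetical generator: fill every template with every topic."""
    return [t.format(topic=topic) for t in templates for topic in topics]


def answer(query: str) -> str:
    """Stub application: returns a canned reply if it knows the topic."""
    for topic, reply in CANNED.items():
        if topic in query.lower():
            return reply
    return "Sorry, I don't know."


def find_failures(queries: list[str], threshold: float = 0.3) -> list[tuple[str, float]]:
    """Return (query, score) for every answer scoring below the threshold."""
    failures = []
    for q in queries:
        score = overlap_score(q, answer(q))
        if score < threshold:
            failures.append((q, round(score, 2)))
    return failures


queries = generate_queries(["What is {topic}?", "How do I use {topic}?"],
                           ["password reset", "refund policy"])
for query, score in find_failures(queries):
    print(f"FAIL {score:.2f}: {query}")
```

Because the stub has no reply for the refund topic, both refund queries are flagged, which is the kind of gap synthetic queries are meant to expose quickly.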

Synthetic query generation

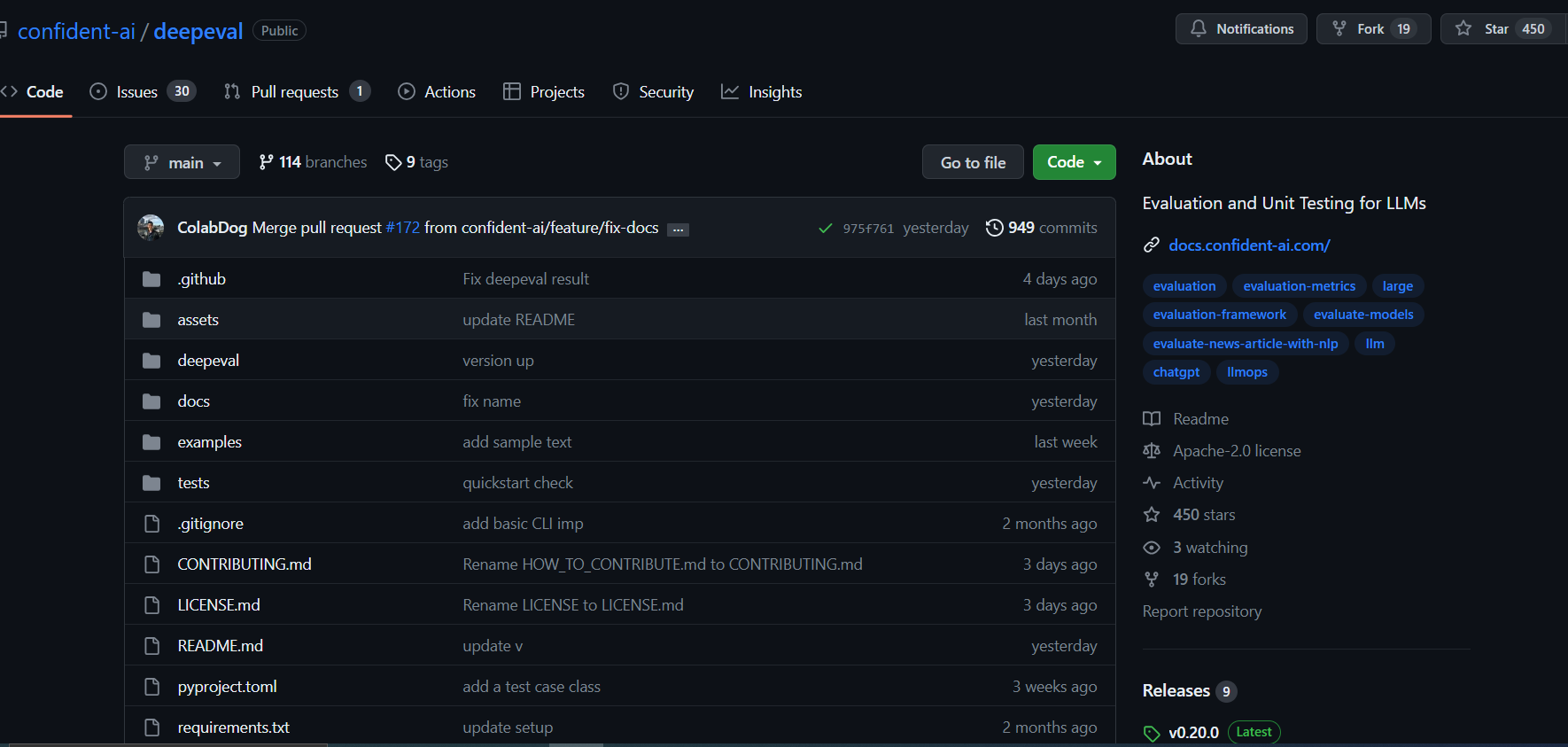

Dashboard