DeepSeek-R1-Zero reasoning model

DeepSeek-R1-Zero is a reasoning model developed by DeepSeek. It was trained with large-scale reinforcement learning (RL) to strengthen its reasoning capabilities, without supervised fine-tuning (SFT) as a preliminary step. Through this training it developed strong reasoning behaviors such as self-verification, reflection, and the generation of long chains of reasoning.

Main advantages

Strong reasoning ability: Handles multi-step reasoning across a variety of tasks.

No supervised fine-tuning required: Reinforcement learning is applied directly to the pre-trained base model (DeepSeek-V3-Base), skipping the usual SFT step.

Strong performance: Performs well on math, coding, and reasoning tasks; on some benchmarks, such as AIME 2024, its results are comparable to OpenAI-o1-0912.

Application scenarios

Academic research

Used to explore the potential of reinforcement learning in improving model reasoning capabilities.

Programming competitions

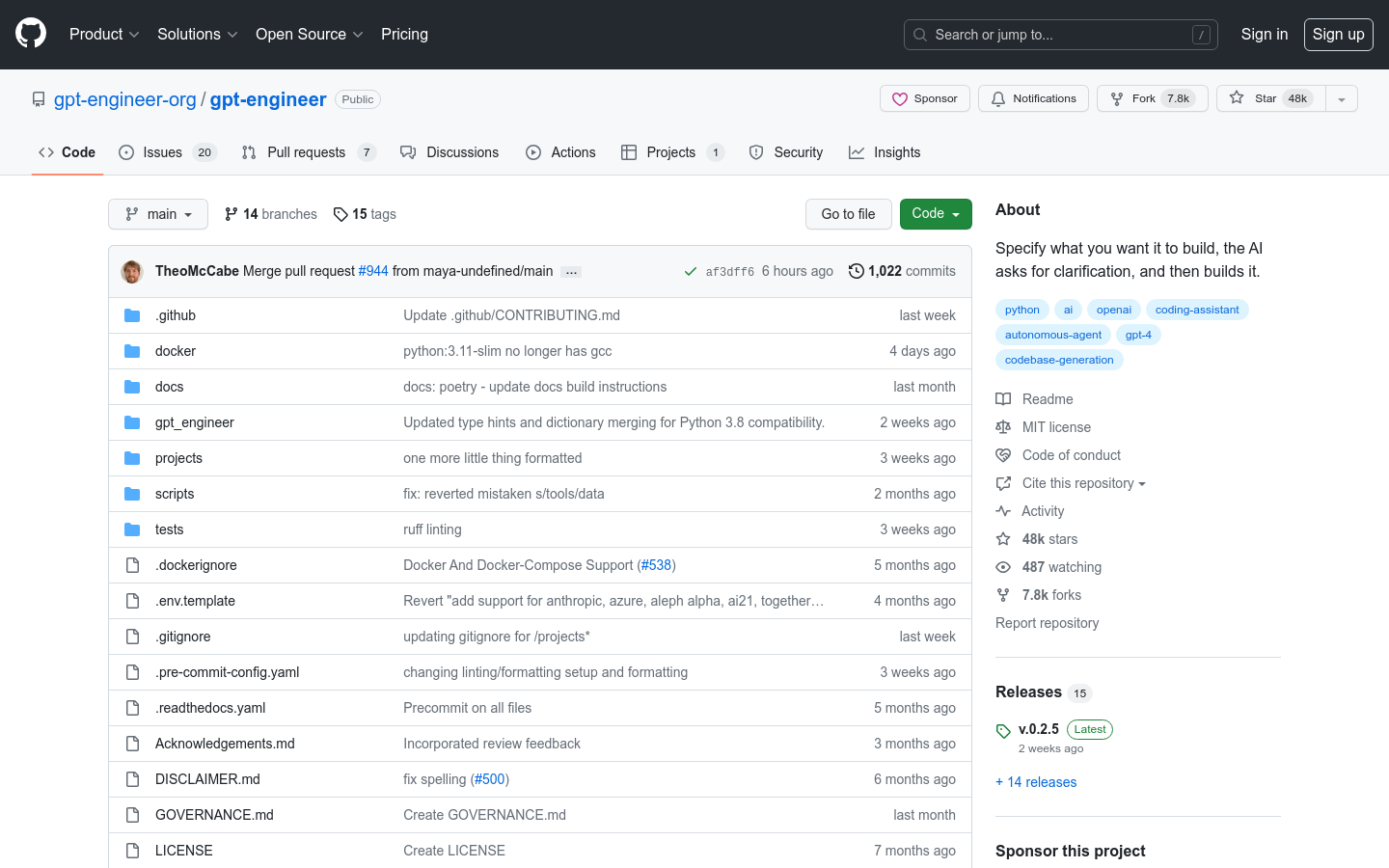

Helps developers quickly write high-quality code and perform better in competitions.

Education

Helps students work through complex mathematical problems and learn more efficiently.

Product features

Reinforcement learning training: Trained with large-scale reinforcement learning, without relying on supervised fine-tuning.

Long chain-of-thought reasoning: Can generate long chains of reasoning to work through complex problems.

Self-verification and reflection: Can check and reflect on its own reasoning, improving the accuracy and reliability of its results.

Multi-task support: Performs well on math, coding, and reasoning tasks.

Open-source model weights: The model weights are openly released to support further research and development by the community.

Multiple model variants: Released alongside DeepSeek-R1 and several smaller dense models distilled from DeepSeek-R1 (based on Qwen and Llama), covering different application scenarios.

Flexible deployment: The model can run locally or be accessed through an API platform.
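The DeepSeek-R1 paper describes the reinforcement learning behind DeepSeek-R1-Zero as Group Relative Policy Optimization (GRPO) driven by rule-based rewards: an accuracy reward for a correct final answer and a format reward for placing the reasoning between <think> and </think> tags and the answer between <answer> and </answer> tags. The minimal sketch below illustrates those two rewards and the group-relative advantage, (r_i - mean(r)) / std(r) over a group of samples for the same prompt. The 0/1 reward values, the exact-string answer check, and all function names are assumptions for illustration, and the policy-update step itself is omitted.

```python
import re
import statistics

# Minimal sketch of rule-based rewards plus GRPO-style group-relative advantages.
# The tag template follows the DeepSeek-R1 paper; the 0/1 reward values and the
# exact-string answer match are illustrative assumptions, not DeepSeek's code.
FORMAT_RE = re.compile(r"<think>.*?</think>\s*<answer>.*?</answer>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the whole completion is a <think> block followed by an <answer> block."""
    return 1.0 if FORMAT_RE.fullmatch(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the text inside <answer>...</answer> equals the reference answer."""
    match = ANSWER_RE.search(completion)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Normalize each sample's reward by the mean and std of its sampling group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0.0:
        # Every sample scored the same, so none is better than its peers.
        return [0.0] * len(rewards)
    return [(r - mean) / std for r in rewards]


# Usage: several completions sampled for the same prompt ("What is 2 + 2?").
group = [
    "<think>2 + 2 = 4</think> <answer>4</answer>",
    "<think>Maybe 5?</think> <answer>5</answer>",
    "4",
]
rewards = [accuracy_reward(c, "4") + format_reward(c) for c in group]
advantages = group_relative_advantages(rewards)
```

Samples that beat their group's average get a positive advantage and those below it a negative one, so no separate value network is needed to judge each answer.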

Tutorial

Download the model

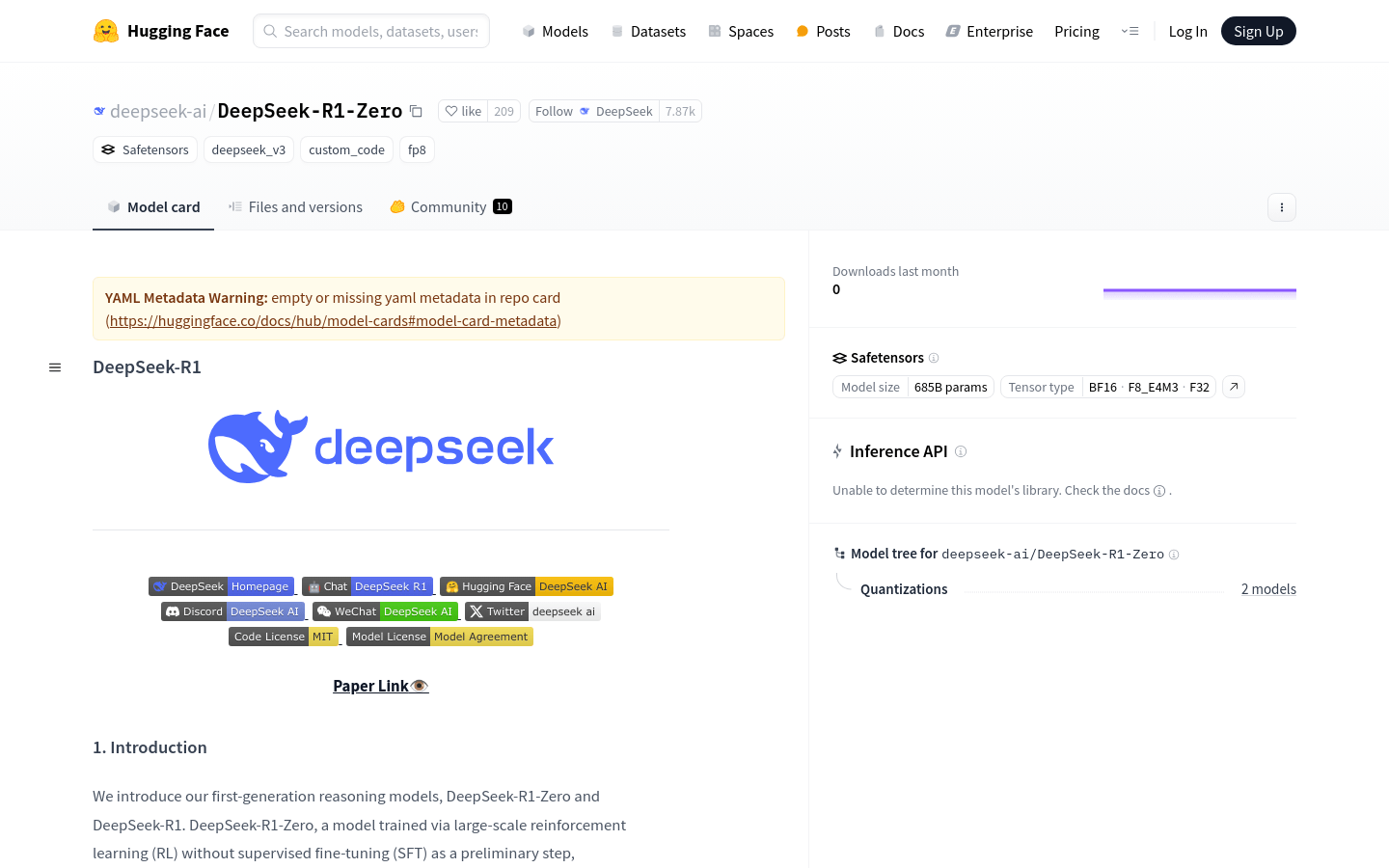

Download the DeepSeek-R1-Zero model files from its Hugging Face page.
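As a small illustration of the download step, the sketch below builds Hugging Face's per-file download URL and fetches only config.json, which is a cheap way to check the model's architecture before committing to the full download. It assumes the repository ID deepseek-ai/DeepSeek-R1-Zero. For the complete weights, which run to hundreds of gigabytes, a dedicated tool such as the huggingface_hub library's snapshot_download is the more practical choice.

```python
import json
import urllib.request
from urllib.parse import quote

# Assumed repository ID; confirm it on the model's Hugging Face page.
REPO_ID = "deepseek-ai/DeepSeek-R1-Zero"


def hf_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Direct-download URL for one file in a Hugging Face model repository."""
    # quote() keeps "/" by default, so files in subfolders still resolve.
    return f"https://huggingface.co/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


def fetch_config(repo_id: str = REPO_ID) -> dict:
    """Download and parse only config.json (requires network access)."""
    with urllib.request.urlopen(hf_file_url(repo_id, "config.json"), timeout=30) as resp:
        return json.load(resp)


# Usage (network call, so left commented out):
# config = fetch_config()
# print(config.get("architectures"), config.get("num_hidden_layers"))
```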

Start a local service

Choose the type of reasoning task that fits your needs, such as mathematical reasoning or code generation.
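Choosing a task mostly comes down to how the prompt is phrased. The sketch below keeps one template per task and, following DeepSeek's published usage recommendations for the DeepSeek-R1 series, places all instructions in a single user message (no system prompt) and asks for math answers to be reasoned step by step with the final result in \boxed{}. The task names and the code-generation template are hypothetical.

```python
# Per-task prompt templates. The math directive follows DeepSeek's usage
# recommendation for R1-series models; the "code" template is hypothetical.
PROMPT_TEMPLATES = {
    "math": "{question}\nPlease reason step by step, and put your final answer within \\boxed{{}}.",
    "code": "{question}\nWrite a complete solution and briefly explain the key steps.",
}


def build_messages(task: str, question: str) -> list[dict[str, str]]:
    """Return chat messages for the chosen task.

    Everything goes into one user turn, since DeepSeek recommends avoiding a
    system prompt for R1-series models.
    """
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(PROMPT_TEMPLATES)}")
    content = PROMPT_TEMPLATES[task].format(question=question)
    return [{"role": "user", "content": content}]


# Usage:
messages = build_messages("math", "What is 2 + 2?")
```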

Start a local service with an open-source serving tool such as vLLM, and set appropriate sampling parameters such as temperature and maximum generation length.
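A minimal sketch of querying a local vLLM server through its OpenAI-compatible chat endpoint, with the sampling parameters set explicitly. The defaults (temperature 0.6, top-p 0.95, up to 32,768 generated tokens) follow the settings DeepSeek reports for evaluating the DeepSeek-R1 series. The server address, port, and launch command in the comments are assumptions to adapt to your own hardware.

```python
import json
import urllib.request

# Assumed setup: a server launched with something like
#   vllm serve deepseek-ai/DeepSeek-R1-Zero --tensor-parallel-size <num_gpus>
# listening on vLLM's default port 8000. The full model needs a large multi-GPU node.
LOCAL_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "deepseek-ai/DeepSeek-R1-Zero"  # must match the name the server was started with


def build_payload(messages: list[dict[str, str]], temperature: float = 0.6,
                  top_p: float = 0.95, max_tokens: int = 32768) -> dict:
    """OpenAI-style chat request body with explicit sampling parameters."""
    # DeepSeek suggests a temperature between 0.5 and 0.7 to avoid endless
    # repetition or incoherent output; max_tokens caps the generation length.
    return {
        "model": MODEL,
        "messages": messages,
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
    }


def query_local(messages: list[dict[str, str]], **sampling) -> str:
    """POST the request to the local server and return the generated text."""
    body = json.dumps(build_payload(messages, **sampling)).encode("utf-8")
    request = urllib.request.Request(
        LOCAL_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    # Long reasoning chains can take minutes, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.load(response)["choices"][0]["message"]["content"]
```

Keeping payload construction separate from the network call makes it easy to log or test the exact parameters sent for each task.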

Call the model

Alternatively, call the model for inference through an API platform such as the DeepSeek Platform.
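When calling a hosted API instead of a local server, the request is similar but carries an API key. The sketch below assumes the DeepSeek Platform's OpenAI-compatible endpoint https://api.deepseek.com/chat/completions and its reasoning model name deepseek-reasoner, which serves DeepSeek-R1 rather than R1-Zero; check the platform documentation for the models it currently exposes. The environment variable name DEEPSEEK_API_KEY is hypothetical. The hosted reasoner returns its chain of thought in a separate reasoning_content field, which the sketch keeps apart from the final answer.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumptions: DeepSeek Platform's OpenAI-compatible endpoint and the hosted
# model name "deepseek-reasoner"; DEEPSEEK_API_KEY is a hypothetical env var.
API_URL = "https://api.deepseek.com/chat/completions"


def build_api_request(messages: list[dict[str, str]], api_key: str,
                      model: str = "deepseek-reasoner") -> urllib.request.Request:
    """Prepare an authenticated chat request without sending it."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def call_api(messages: list[dict[str, str]]) -> tuple[Optional[str], str]:
    """Send the request and return (reasoning, answer); needs network access and a key."""
    request = build_api_request(messages, os.environ["DEEPSEEK_API_KEY"])
    with urllib.request.urlopen(request, timeout=600) as response:
        message = json.load(response)["choices"][0]["message"]
    # The reasoning model reports its chain of thought separately from the answer.
    return message.get("reasoning_content"), message["content"]
```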

Adjust the model configuration to the requirements of the task to improve the quality of its output.

Run the model in your local environment, or integrate it into existing systems through an API.

Monitor and optimize

Monitor the model's output to make sure the results meet expectations.
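Monitoring is easier when the reasoning and the final answer are separated. R1-series models write their reasoning inside <think>...</think>, and the DeepSeek-R1-Zero training template also wraps the answer in <answer>...</answer> tags. The sketch below splits an output on those tags, extracts the last \boxed{...} value, and reports simple problems such as a missing reasoning block or an unexpected answer. The checks and the exact-string comparison are illustrative assumptions, and \boxed{} values containing nested braces are not handled.

```python
import re
from dataclasses import dataclass
from typing import Optional

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
BOXED_RE = re.compile(r"\\boxed\{([^{}]*)\}")  # no nested braces


@dataclass
class ParsedOutput:
    reasoning: Optional[str]  # text inside <think>...</think>, if present
    answer: str               # text inside <answer> tags, else everything after the reasoning
    boxed: Optional[str]      # last \boxed{...} value in the answer, if any


def parse_output(text: str) -> ParsedOutput:
    think = THINK_RE.search(text)
    reasoning = think.group(1).strip() if think else None
    rest = text[think.end():] if think else text
    tagged = ANSWER_RE.search(rest)
    answer = (tagged.group(1) if tagged else rest).strip()
    boxes = BOXED_RE.findall(answer)
    return ParsedOutput(reasoning, answer, boxes[-1].strip() if boxes else None)


def check(text: str, expected: str) -> list[str]:
    """Return the problems found; an empty list means the output looks as expected."""
    parsed = parse_output(text)
    problems = []
    if parsed.reasoning is None:
        problems.append("missing <think> block")
    if not parsed.answer:
        problems.append("empty answer")
    final = parsed.boxed if parsed.boxed is not None else parsed.answer
    if final != expected:
        problems.append(f"answer {final!r} != expected {expected!r}")
    return problems
```

Collecting these problem strings across many requests gives a simple signal for when prompts or sampling settings need adjusting.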

If necessary, fine-tune the model to further improve its performance.