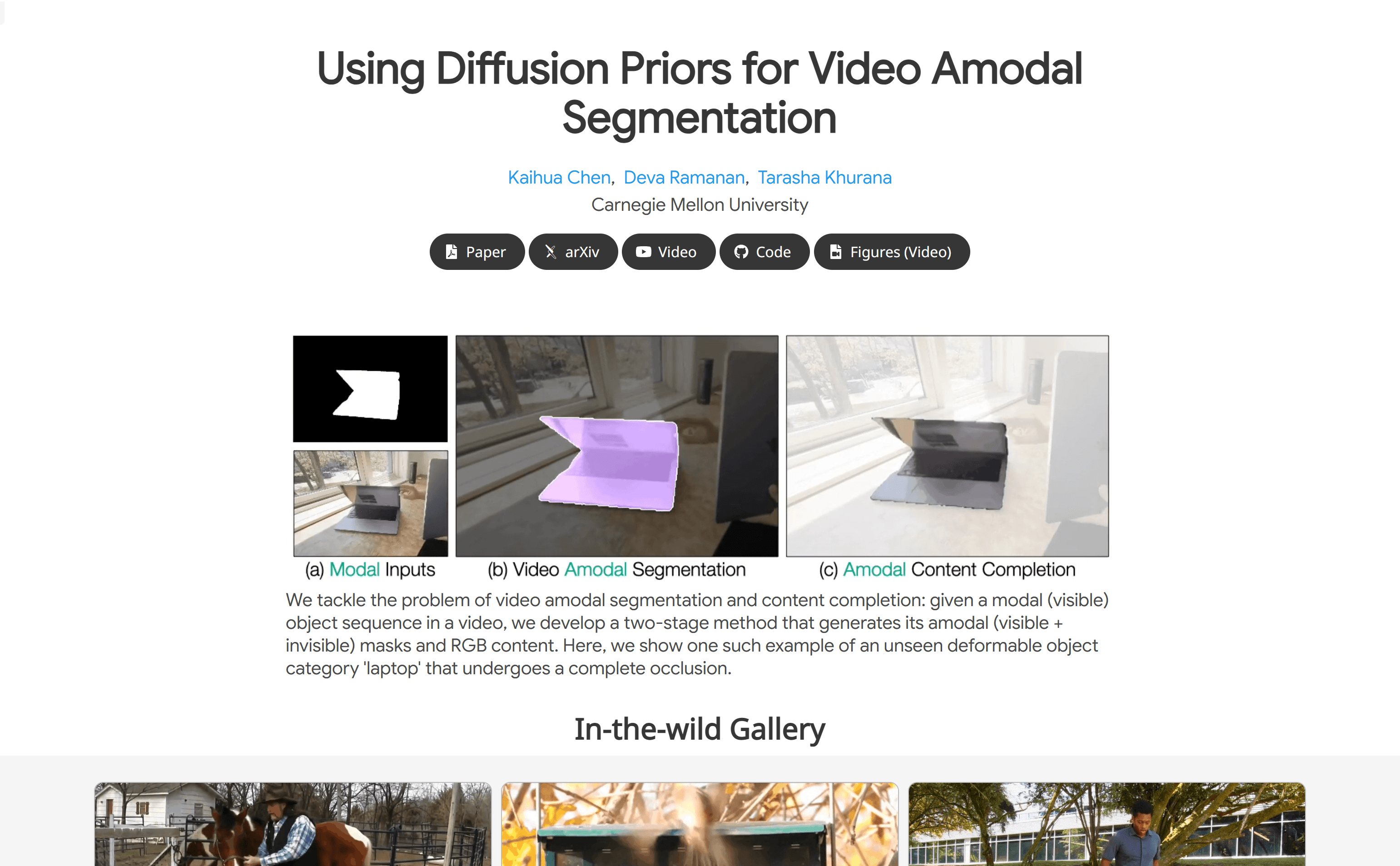

What is Diffusion-Vas?

Diffusion-Vas is a video processing model developed by researchers at Carnegie Mellon University to handle occluded objects in video. It identifies and segments objects that are partly hidden, then fills in their missing parts to recover each object's complete appearance. This makes downstream video analysis more accurate and reliable.

Target audience:

Diffusion-Vas is aimed mainly at researchers and developers in computer vision, especially those working on video content analysis, object segmentation, and scene understanding. Whether you work on surveillance systems, film post-production, or autonomous driving research, Diffusion-Vas can help you deal with occlusion in video.

Example usage scenarios:

1. Surveillance video analysis: In complex surveillance scenes, Diffusion-Vas can identify and segment occluded pedestrians or vehicles, improving the safety and efficiency of the monitoring system.

2. Film post-production: During film production, the model can repair or complete parts of a scene that were blocked because of the shooting angle, improving the visual quality of the film.

3. Autonomous driving: Diffusion-Vas can help a driving system better understand occluded objects in complex traffic scenes, improving driving safety and decision-making accuracy.

Product Features:

Object segmentation under occlusion: Accurately identifies and segments the occluded parts of objects in video, maintaining high accuracy even under heavy occlusion.

Content completion: Automatically fills in the occluded region of an object to restore its complete appearance while keeping the video content consistent.

3D UNet backbone: The model is built on a 3D UNet backbone, which significantly improves segmentation and completion accuracy.

Multi-dataset validation: The model was tested extensively on multiple datasets and performed well, with an improvement of up to 13% in amodal segmentation of the occluded region of an object.

Zero-shot generalization: Even when trained only on synthetic data, the model generalizes well to real-world scenes.

No additional inputs required: The model does not rely on additional inputs such as camera pose or optical flow, which keeps it robust and easy to use.

How to use:

1. Prepare video data: Make sure the video is of good quality and contains the objects you want to segment and complete.

2. Run the model: Feed the video into the Diffusion-Vas model, which processes it automatically and generates an amodal mask for each object, covering both its visible and occluded parts.

3. Content completion: Use the second stage of the model to fill in the occluded region and restore the complete appearance of the object.

4. Evaluate the results: Compare the amodal mask output by the model with the ground-truth object mask to measure segmentation accuracy.

5. Apply the output: Feed the model output into the relevant system for your use case, such as surveillance, film post-production, or autonomous driving.

6. Performance optimization: Tune the model based on feedback from real usage so that it adapts to different video content and scenes.
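The comparison in step 4 is commonly done with intersection over union (IoU). The sketch below is a minimal illustration and not part of Diffusion-Vas: the function names and the toy masks are hypothetical, and it assumes masks are given per frame as rows of 0/1 values. It scores the predicted mask of the complete object (often called an amodal mask) against the ground truth, and separately scores only the occluded region, meaning the pixels that belong to the object but are not visible.

```python
from typing import List, Sequence

Mask = Sequence[Sequence[int]]  # one frame: rows of 0/1 values


def mask_iou(pred: Mask, target: Mask) -> float:
    """Intersection over union of two binary masks of equal size."""
    inter = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly; this also avoids dividing by zero.
    return inter / union if union else 1.0


def occluded_region(full: Mask, visible: Mask) -> List[List[int]]:
    """Pixels that belong to the complete object but are not visible."""
    return [
        [1 if (f and not v) else 0 for f, v in zip(full_row, visible_row)]
        for full_row, visible_row in zip(full, visible)
    ]


def mean_video_iou(preds: Sequence[Mask], targets: Sequence[Mask]) -> float:
    """Average the per-frame IoU over a video."""
    if len(preds) == 0 or len(preds) != len(targets):
        raise ValueError("need the same, non-zero number of frames")
    scores = [mask_iou(p, t) for p, t in zip(preds, targets)]
    return sum(scores) / len(scores)


# Toy one-row frame: the object spans 4 pixels, of which 2 are visible.
visible = [[1, 1, 0, 0]]
ground_truth = [[1, 1, 1, 1]]
predicted = [[1, 1, 1, 0]]

full_iou = mask_iou(predicted, ground_truth)  # 3 / 4 = 0.75
occluded_iou = mask_iou(
    occluded_region(predicted, visible),
    occluded_region(ground_truth, visible),
)  # 1 / 2 = 0.5
```

Reporting the occluded-region score alongside the full-mask score is useful because the visible pixels are usually easy to get right and can hide errors in the part of the object the model had to infer.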

By following these steps, you can use Diffusion-Vas to make video processing more effective and efficient. Whether you are a beginner or a professional, it offers reliable support for handling occlusion in computer vision work.