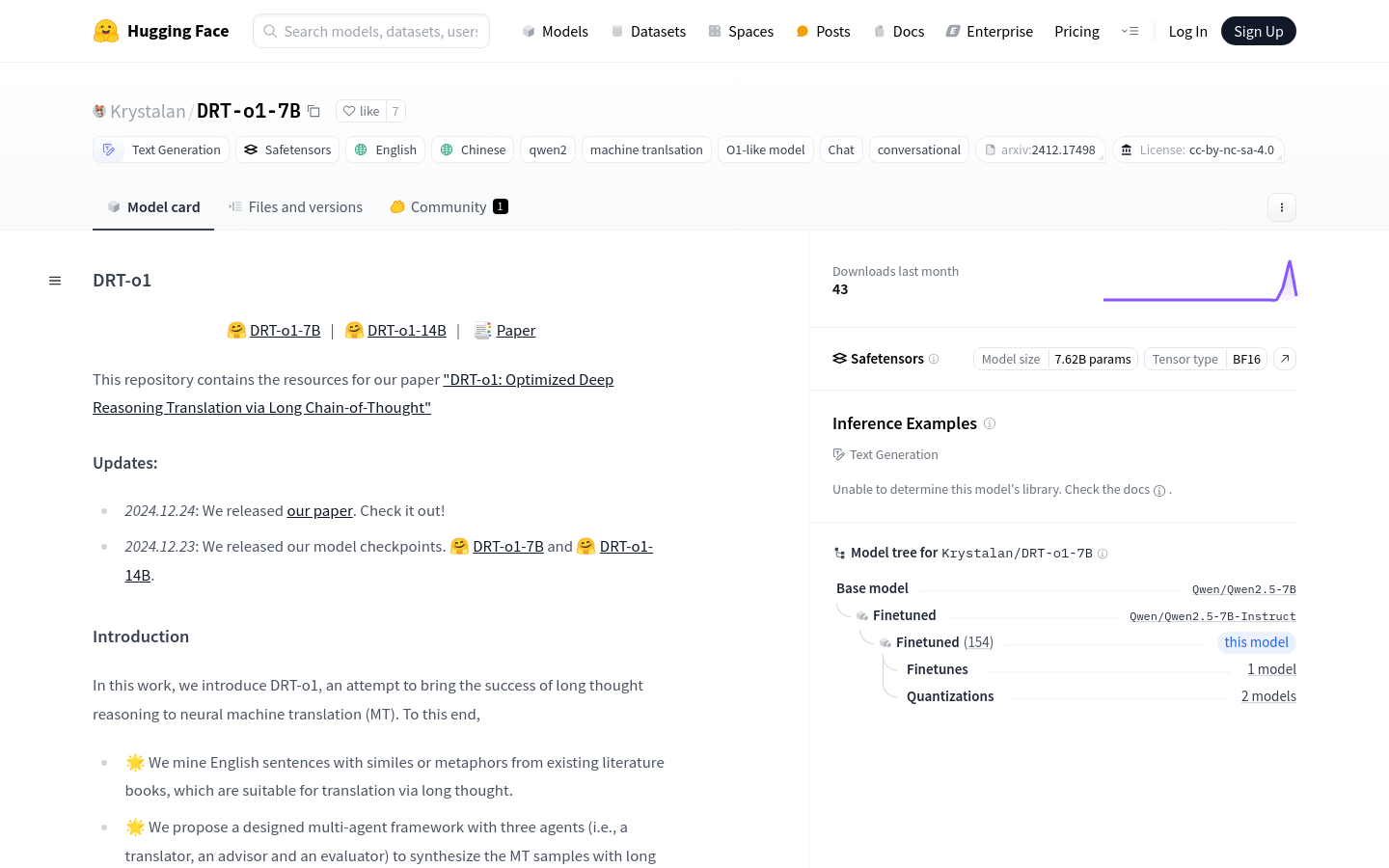

DRT-o1-7B is a model that applies long-thinking (long chain-of-thought) reasoning to neural machine translation (MT). Its authors synthesize MT training samples by mining English sentences that are suitable for long-thinking translation and by running a multi-agent framework with three roles: translator, consultant, and evaluator. DRT-o1-7B and DRT-o1-14B are trained with Qwen2.5-7B-Instruct and Qwen2.5-14B-Instruct as their respective backbone networks. The model's main advantage is its ability to handle complex language structures that require deep semantic understanding, which is crucial to the accuracy and naturalness of machine translation.
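To make the three-role idea concrete, here is a minimal sketch of how a translator, a consultant, and an evaluator could cooperate on one sentence. Only the three roles come from the description above. The loop order, the `threshold` and `max_rounds` parameters, and every function name are assumptions made for illustration, and the agents are stubs rather than real model calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Round:
    translation: str  # translator output for this round
    score: float      # evaluator score for that output


def refine_translation(
    source: str,
    translate: Callable[[str, Optional[str], Optional[str]], str],
    consult: Callable[[str, str], str],
    evaluate: Callable[[str, str], float],
    threshold: float = 0.9,  # assumed stopping score, not from the source
    max_rounds: int = 3,     # assumed iteration cap, not from the source
) -> List[Round]:
    """Translate, score, and revise until the score reaches the threshold."""
    history: List[Round] = []
    previous: Optional[str] = None
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        translation = translate(source, previous, feedback)
        score = evaluate(source, translation)
        history.append(Round(translation, score))
        if score >= threshold:
            break
        # The consultant's feedback is handed to the translator next round.
        feedback = consult(source, translation)
        previous = translation
    return history


# Stub agents so the sketch runs without a model; real agents would call an LLM.
def stub_translate(source, previous, feedback):
    return "draft" if previous is None else previous + " (revised)"


def stub_consult(source, translation):
    return "make the metaphor read more naturally"


def stub_evaluate(source, translation):
    return 0.95 if "revised" in translation else 0.5


history = refine_translation(
    "Time is a thief.", stub_translate, stub_consult, stub_evaluate
)
print([(r.translation, r.score) for r in history])
```

The recorded history of drafts and scores is the kind of intermediate material that could be turned into a long-thinking training sample, although how that is actually done is not specified here.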

Target audience:

DRT-o1-7B is aimed at researchers, developers, and machine translation service providers working in natural language processing. It offers them a deep-reasoning approach to improving machine translation quality, especially for complex language structures, and it can support further research on applying long-thinking reasoning to machine translation.

Example usage scenarios:

Case 1: Use DRT-o1-7B to translate English literary works containing metaphors into Chinese.

Case 2: Apply DRT-o1-7B to a cross-cultural exchange platform to provide high-quality automatic translation services.

Case 3: Use DRT-o1-7B in academic research to analyze and compare the performance of different machine translation models.

Product Features:

• Long-thinking reasoning applied to machine translation: improves translation quality through long chains of thought.

• Multi-agent framework design: three roles (translator, consultant, and evaluator) work together to synthesize MT samples.

• Built on Qwen2.5-7B-Instruct and Qwen2.5-14B-Instruct: uses strong pre-trained models as backbones.

• Support for English and Chinese translation: Ability to handle machine translation tasks between Chinese and English.

• Suitable for complex language structures: can process complex sentences containing similes or metaphors.

• Provides model checkpoints: convenient for researchers and developers to use and build on.

• Supports Hugging Face Transformers and vLLM deployment: easy to integrate and use.
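For the vLLM route, one common pattern is to start vLLM's OpenAI-compatible server (for example with `vllm serve Krystalan/DRT-o1-7B`) and send chat requests to it over HTTP. The sketch below uses only the Python standard library. The server address `http://localhost:8000/v1`, the prompt wording, the `max_tokens` value, and the helper names `build_request` and `translate_via_server` are assumptions for illustration, not settings taken from the model page.

```python
import json
import urllib.request

MODEL_NAME = "Krystalan/DRT-o1-7B"


def build_request(sentence: str,
                  base_url: str = "http://localhost:8000/v1",  # assumed address
                  max_tokens: int = 2048) -> urllib.request.Request:
    """Build a chat-completions request for an OpenAI-compatible server."""
    payload = {
        "model": MODEL_NAME,
        "messages": [
            {
                "role": "user",
                # Illustrative prompt wording, not an official template.
                "content": "Please translate the following text from "
                           "English to Chinese:\n" + sentence,
            }
        ],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        base_url + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def translate_via_server(sentence: str) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(sentence)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `translate_via_server("Time is a thief.")` would return the model's full output, which for a long-thinking model may include its reasoning as well as the final translation.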

How to use:

1. Visit the Hugging Face website and navigate to the DRT-o1-7B model page.

2. Import the necessary libraries and modules, following the code examples provided on the page.

3. Set the model name to 'Krystalan/DRT-o1-7B' and load the model and tokenizer.

4. Prepare the input text, such as the English sentences to be translated.

5. Use the tokenizer to convert the input text into a format the model accepts.

6. Pass the converted input to the model and set the generation parameters, such as the maximum number of new tokens.

7. After the model generates its output, use the tokenizer to decode the generated tokens into the translated text.

8. Output and evaluate the translation, and apply any follow-up processing as needed.
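The steps above can be sketched with Hugging Face Transformers roughly as follows. This is a minimal sketch, not the model page's official example: the prompt wording, the `max_new_tokens` default, and the helper names `build_messages` and `translate` are assumptions. The libraries are imported inside `translate` so that the file can be loaded without downloading anything.

```python
MODEL_NAME = "Krystalan/DRT-o1-7B"


def build_messages(sentence: str) -> list:
    """Step 4: wrap the English sentence in a chat-style message list."""
    # Illustrative prompt wording, not an official template.
    prompt = ("Please translate the following text from English to Chinese:\n"
              + sentence)
    return [{"role": "user", "content": prompt}]


def translate(sentence: str, max_new_tokens: int = 2048) -> str:
    """Steps 2-7: load the model, tokenize, generate, and decode."""
    # Step 2: imported lazily because loading the model is expensive.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Step 3: load the model and tokenizer.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, torch_dtype="auto", device_map="auto"
    )

    # Step 5: apply the chat template, then convert the text to tensors.
    text = tokenizer.apply_chat_template(
        build_messages(sentence), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Step 6: generate with a cap on the number of new tokens.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Step 7: drop the prompt tokens and decode only the generated part.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `print(translate("Time is a thief."))` covers step 8. Because the model reasons at length before answering, a generous `max_new_tokens` is likely needed, and the output may contain the reasoning together with the final translation.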