Ideal for researchers and developers who need to process large amounts of text data.

Suitable for language tasks such as long-form text generation, summarization, and translation.

Attractive to enterprise users who want high performance with efficient use of resources.

Generating summaries of the Harry Potter books using Gemma 2B - 10M Context.

In education: automatically generating summaries of academic papers.

In business: automatically generating text for product descriptions and market analysis.

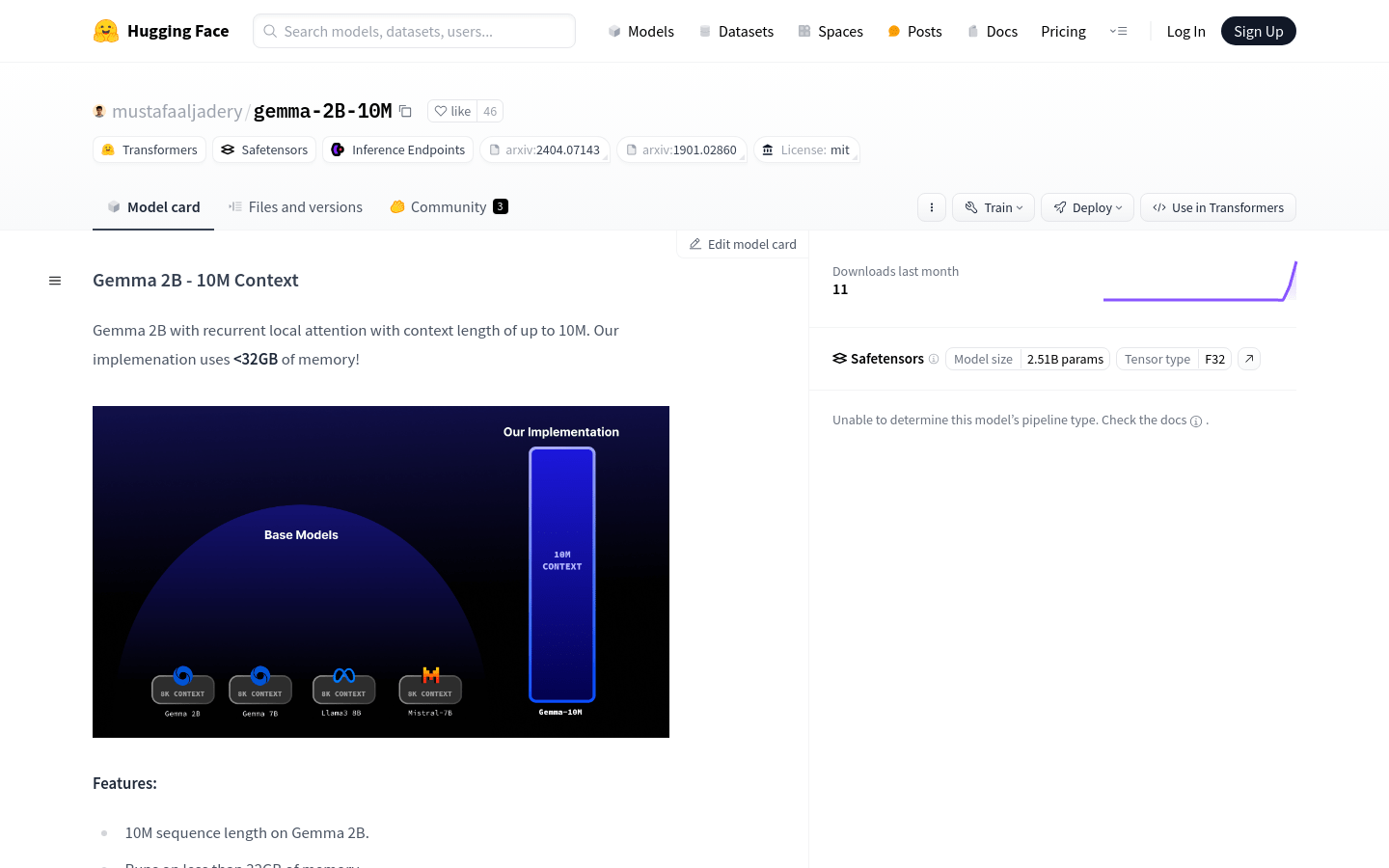

Supports processing sequences of up to 10M tokens.

Runs in less than 32 GB of memory, keeping resource usage low.
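To see why a 10M-token context cannot use ordinary dense attention within 32 GB, here is a rough back-of-envelope calculation. It assumes 2-byte (bfloat16) attention scores, a single head in a single layer, a fully materialized score matrix, and a hypothetical chunk size of 2048 in which each query scores at most two chunks of keys. None of these figures are documented settings of the model; they are illustrative assumptions.

```python
def score_matrix_bytes(query_len: int, key_len: int, bytes_per_score: int = 2) -> int:
    """Bytes needed to hold one query_len x key_len attention-score matrix.

    bytes_per_score=2 assumes bfloat16 scores (an assumption for illustration).
    """
    return query_len * key_len * bytes_per_score


CONTEXT = 10_000_000  # 10M-token context from the feature list
CHUNK = 2048          # hypothetical chunk size, not a documented model setting

# Dense attention: every token scores every other token.
full_bytes = score_matrix_bytes(CONTEXT, CONTEXT)
# Chunked local attention: one chunk of queries scores at most two chunks of keys.
chunk_bytes = score_matrix_bytes(CHUNK, 2 * CHUNK)

print(f"dense scores, one head: {full_bytes / 1e12:,.0f} TB")
print(f"one local chunk:        {chunk_bytes / 2**20:,.0f} MiB")
```

Under these assumptions the dense score matrix alone would need about 200 TB for one head, while a chunk-sized buffer needs about 16 MiB and does not grow with the context length.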

Provides native inference optimized for CUDA.

Recurrent local attention achieves O(N) memory complexity.
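How this local attention is implemented is not described here, so the following is only a minimal pure-Python sketch of one common way to get linear memory: split the sequence into fixed-size chunks and let each position attend causally to its own chunk plus the previous chunk, which is carried forward as a recurrent memory (similar in spirit to Transformer-XL). The chunking scheme, `chunk_size`, and all function names are assumptions for illustration; this is not the model's CUDA kernel.

```python
import math
from typing import List

Vector = List[float]


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def _softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def recurrent_local_attention(q: List[Vector], k: List[Vector], v: List[Vector],
                              chunk_size: int) -> List[Vector]:
    """Causal attention where each position sees only its own chunk plus the
    previous chunk. The previous chunk's keys/values are kept as a fixed-size
    memory, so each query scores at most 2 * chunk_size keys no matter how
    long the sequence is (hypothetical scheme, for illustration only)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out: List[Vector] = []
    mem_k: List[Vector] = []  # keys of the previous chunk (recurrent memory)
    mem_v: List[Vector] = []  # values of the previous chunk
    for start in range(0, len(q), chunk_size):
        chunk_k = k[start:start + chunk_size]
        chunk_v = v[start:start + chunk_size]
        ctx_k = mem_k + chunk_k
        ctx_v = mem_v + chunk_v
        for i, qi in enumerate(q[start:start + chunk_size]):
            # Causal mask: memory plus chunk positions up to and including i.
            visible = len(mem_k) + i + 1
            weights = _softmax([_dot(qi, kj) * scale for kj in ctx_k[:visible]])
            out.append([sum(w * vj[d] for w, vj in zip(weights, ctx_v[:visible]))
                        for d in range(len(v[0]))])
        # Only the most recent chunk is kept; older chunks are dropped.
        mem_k, mem_v = chunk_k, chunk_v
    return out
```

Because each query scores at most `2 * chunk_size` keys, the temporary score buffer stays constant as the sequence grows, and total memory grows only linearly with N (mainly the inputs and outputs). The trade-off is that a position can no longer attend directly to tokens more than two chunks back.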

Released as an early checkpoint trained for 200 steps; the developers plan to train on more tokens to improve performance.

Use AutoTokenizer and GemmaForCausalLM for text generation.

Step 1: Download the Gemma 2B - 10M Context model from Hugging Face and install it locally.

Step 2: Edit the inference code in main.py to use your own prompt text.

Step 3: Use AutoTokenizer.from_pretrained to load the model's tokenizer.

Step 4: Use GemmaForCausalLM.from_pretrained to load the model and specify the data type as torch.bfloat16.

Step 5: Set the prompt text, for example 'Summarize this harry potter book...'.

Step 6: Generate text with the generate function while gradient computation is disabled (for example, inside a torch.no_grad() block).

Step 7: Print the generated text to see the results.
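The steps above can be sketched as a short script. This is a hedged sketch, not the project's main.py: it assumes `GemmaForCausalLM` is the class shipped with Hugging Face Transformers and that the checkpoint loads with it, and it uses Transformers' `model.generate` in place of the project's own generate function, whose signature is not given here. `MODEL_PATH` is a hypothetical local directory for the downloaded checkpoint, and `max_new_tokens=512` is an arbitrary illustrative value.

```python
MODEL_PATH = "./models/gemma-2b-10m"  # hypothetical path to the checkpoint from Step 1


def summarize(prompt_text: str, max_new_tokens: int = 512) -> str:
    # Heavy dependencies are imported here so this file can be imported without them.
    import torch
    from transformers import AutoTokenizer, GemmaForCausalLM

    # Step 3: load the model's tokenizer.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH)
    # Step 4: load the model weights in bfloat16.
    model = GemmaForCausalLM.from_pretrained(MODEL_PATH, torch_dtype=torch.bfloat16)
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model.to(device)
    model.eval()

    inputs = tokenizer(prompt_text, return_tensors="pt").to(device)
    # Step 6: generate without tracking gradients.
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The decoded text contains the prompt followed by the generated continuation.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For Steps 5 and 7, call `print(summarize("Summarize this harry potter book..."))`. Loading the tokenizer and model inside the function keeps the sketch short; a long-running application would load them once and reuse them across prompts.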